The AI Hype Cycle: heading for a fall?

It’s after Labor Day: vacations are over, kids are back in school, and summer is coming to a close. As the seasons change, so do our routines and habits. So too with the seasons of AI. Are we entering a new phase of AI?

In recent months, we’ve noticed some chatter, articles, and YouTube videos about a possible die-down in AI hype. Some declared the AI hype cycle over, based on reports that OpenAI’s ChatGPT saw lower usage in June than in prior months.

It’s a good time to ask: Where are we in the AI hype cycle?

Gartner did just that in its August report, “What’s New in Artificial Intelligence from the 2023 Gartner Hype Cycle.” At a high level, Gartner sees generative AI as the main driver of AI and sees two sides to it:

Innovations that will be fueled by Generative AI, e.g., AI systems and smart robots.

Innovations that will fuel advances in generative AI, e.g., foundation models, ModelOps, synthetic data, and AI simulations.

Gartner presented its own analysis of where each of its categories sits on the hype cycle in a chart.

While Gartner’s chart is cute and gives some sense of what might be ‘up and coming’ versus ‘old hat’, it is not very illuminating, at least with respect to what we are seeing in the short term (a matter of months). Nor does it tell us the timeframe and pace of future progress.

For example, the chart suggests foundation models are at peak hype. To a large extent, the newness of ChatGPT has indeed worn off, and people are starting to really use AI tools, sorting out where they are useful and where they are not. So maybe ‘peak’ is correct for the hype.

But does that tell us whether Google’s upcoming Gemini will be a game-changer? Or a flop? Or taken in stride? No telling.

AI Is Getting Real

If there is a die-down, it’s not in actual progress or activity. Attention is shifting from novelty-driven hype to the question of where and how to make AI useful. On that front, real activity is not slowing down at all:

Google has been busily improving Bard, its Search Generative Experience, multi-modal models, and more. Google plans to release Gemini, a foundation model meant to beat GPT-4, by the end of the year; if it succeeds, Google might retake the AI crown.

OpenAI continues to make its tools more useful, rolling out Code Interpreter, GPT-3.5 fine-tuning, ChatGPT Enterprise, function calling, and custom instructions. There is more to come.

Open source models have been coming out at a surprisingly quick pace. Following the Llama 2 release earlier this summer, Falcon 180B was released, the largest and most advanced open source foundation model yet. There are releas

AI research continues on longer context windows, better training datasets, improved pre-training processes, and more.

Dozens of companies are continuing to build generative AI into their products.

If AI continues to progress at the pace it has kept all year, we will see many major advances in AI models, datasets, tools, and applications. The whole ecosystem continues to evolve and march forward at a rapid pace. This across-the-board step-change in AI is what would cap off the Year of AI.

In other words, the sense of acceleration we felt from a step-change like ChatGPT may have faded, but the velocity of change is not slowing. Rapid advancement will be the new normal.

After 2023, the “Year of AI”, we will have several more years in which advances come no slower. That is why I am confident that, whether or not you shift the goalposts on what AGI means, we will get to AGI this decade.

What’s hard? What’s easy? What’s soon? What’s far off?

Artificial intelligence is hard to pin down. It has been defined as things that are ‘easy for humans but hard for machines.’ The problem with that definition is that once researchers figure out a way for AI to tackle a particular problem, we convince ourselves that it was never a uniquely human activity after all, just a machine activity.

So it was with symbolic AI, and then with AI that could beat a world chess champion. Chess-playing AI was dismissed as ‘narrow AI’; what we wanted was AGI, an AI with the flexibility to face a new problem and adapt to tackle it.

In 2020, when OpenAI researchers showed that LLMs like GPT-3 were “few-shot learners,” it opened up a possible path to AGI: scaling LLMs even further. The Microsoft team that studied GPT-4 declared that it exhibited ‘Sparks of AGI’ because of its general reasoning. We are still unsure of the limits of scaling, but we have reason to believe we have not reached them yet, and there is plenty more data and compute available to test the question.

What Google creates out of Gemini will tell us much on that score.

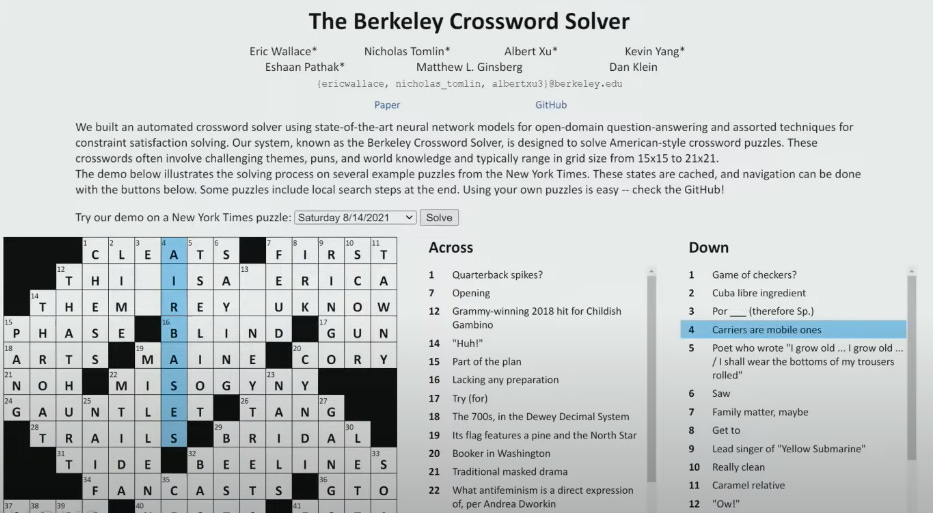

Did you know that AI has learned to solve crossword puzzles (Wallace, Tomlin, et al., 2022)? Perhaps that should not be a surprise, but I only found out about it through a talk by Prof. Dan Klein of UC Berkeley.

I find this notable because if I hadn’t heard of it, I suspect few others have. I believe this will be the template for how AI is perceived: most people will NOT know all of what AI can do until they see it, and they won’t see it until AI has been out in the wild for some time. AI is advancing rapidly, and most people are vastly underestimating what AI can do now, let alone what it can do in the near future.

So, to answer my own questions, here is what’s near and/or ‘easy’:

The huge volume of H100 GPUs sold by NVIDIA greatly increases AI model training capacity, which will translate into a deluge of new AI models in the next 12 to 24 months.

Releases of many open source and proprietary AI models at a very fast clip.

The AI ecosystem keeps improving, building upon itself to make more useful AI applications, frameworks, and tools.

Major software, social media, and other tech companies expand their use of AI models, applications, and features across their product lines.

Rapid adoption of AI capabilities for more and more work tasks.

Software engineering changes drastically with AI ‘co-pilot’ programming assistants.

What is far or hard:

There may still be hurdles on the way to AGI; I am sticking with 2029 as my estimate for when we see it.

Scaling models ever bigger will be neither effective nor efficient, so we will see mixture-of-experts (MoE) models (reportedly how GPT-4 was built) instead of ever-larger dense models.

Useful household and workplace robots may take some time; robotics is harder than building an LLM.
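For readers unfamiliar with mixture-of-experts, the core idea fits in a few lines of Python. This is a toy sketch under loudly stated assumptions, not how GPT-4 or any production model is actually built: each “expert” is a small random linear layer, a linear gate scores all experts for each token, and only the top 2 of 16 experts run. The layer holds 16 experts’ worth of parameters, but each token pays the compute cost of only 2, which is the efficiency argument for MoE over a dense model of similar size. The names ToyMoE and make_expert, and all the sizes, are hypothetical choices for illustration.

```python
import math
import random


def softmax(xs):
    # Numerically stable softmax over a short list of scores.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(dim, rng):
    # Hypothetical toy expert: a single random dim x dim linear layer.
    w = [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(dim)]

    def expert(x):
        return [sum(w[i][j] * x[j] for j in range(dim)) for i in range(dim)]

    return expert


class ToyMoE:
    """Toy mixture-of-experts layer with top-k gating (illustrative only)."""

    def __init__(self, dim, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.experts = [make_expert(dim, rng) for _ in range(num_experts)]
        # Linear gate: one score vector per expert.
        self.gate = [[rng.gauss(0.0, 0.1) for _ in range(dim)]
                     for _ in range(num_experts)]
        self.top_k = top_k
        self.calls = 0  # number of expert evaluations actually run

    def forward(self, x):
        # Score every expert for this token (far cheaper than running all of them).
        scores = [sum(g[j] * x[j] for j in range(len(x))) for g in self.gate]
        # Keep only the k highest-scoring experts.
        top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:self.top_k]
        weights = softmax([scores[i] for i in top])
        # Output is the gate-weighted sum of the selected experts' outputs.
        out = [0.0] * len(x)
        for weight, i in zip(weights, top):
            y = self.experts[i](x)
            self.calls += 1
            out = [o + weight * yi for o, yi in zip(out, y)]
        return out


NUM_EXPERTS, TOP_K, DIM = 16, 2, 8
moe = ToyMoE(dim=DIM, num_experts=NUM_EXPERTS, top_k=TOP_K)
data_rng = random.Random(1)
tokens = [[data_rng.gauss(0.0, 1.0) for _ in range(DIM)] for _ in range(5)]
outputs = [moe.forward(t) for t in tokens]
print(f"expert calls: {moe.calls}; running every expert would need {NUM_EXPERTS * len(tokens)}")
# prints: expert calls: 10; running every expert would need 80
```

Real MoE layers also add load balancing and per-expert capacity limits so that tokens spread evenly across experts; this sketch leaves those out to keep the routing idea visible.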

Our summer is over. But while the ‘newness’ of generative AI has worn off, the reality, utility, and power of AI have not been fully appreciated, nor has AI made its full impact. AI’s summer has just begun.