Enhancing The AI Chatbot

Design convergence hits AI chatbot interfaces as they evolve toward a robust standard interface.

AI Model and Chatbot Choices

AI users now have access to several great AI frontier models through web interfaces and mobile apps:

OpenAI’s ChatGPT, hosting GPT-4.5 and o3-mini.

Google Gemini 2.0, including Gemini 2.0 Pro, Gemini 2.0 Flash Thinking, and Deep Research.

Qwen Chat, hosting Qwen 2.5-Max and Qwen-2.5-Max-QwQ.

We have written about most of these AI models individually; each has distinctive strengths and capabilities. This article is about the enhanced and expanded chatbot interfaces built around these models, which are standardizing around a core set of features.

AI Interface Design Convergence

When sharing the news about Qwen’s Max-QwQ-Preview release this past week, I went to check out the Qwen chat interface and try out the Max-AI model. Qwen chat is impressive, with both a thinking (QwQ) mode and web search that can be turned on or off separately or together. It struck me that the interface was so familiar that I couldn’t tell from the interface alone which AI model was behind it.

Qwen is following in the footsteps of DeepSeek, so it’s not surprising that it has the same Thinking Mode as the DeepSeek R1 chat interface. It has also adopted web search, which DeepSeek’s chat interface offers as well.

The trend is clear: one AI chat interface adopts the successful features of another, and the leading AI chat interfaces converge on a consistent set of features.

ChatGPT

Since OpenAI’s ChatGPT is the OG of AI interfaces, its evolution deserves special mention. The original ChatGPT was a spartan text chatbot interface. Over time, it has accumulated key features: voice interaction mode and Canvas (to display generated artifacts) were added to GPT-4o, and o1 and o3-mini were added for reasoning. It can also generate SVG images inline and images via DALL-E.

Which features are available depends largely on the model, and Sam Altman has expressed dissatisfaction with the model picker because it adds to user confusion. Until a unifying AI model comes along, there will be these two flagship models to choose from, plus older models such as GPT-4o:

GPT-4.5: This model emphasizes creative tasks, an expanded knowledge base, and a natural conversational style, and it supports ChatGPT tools including image inputs.

o3-mini: This reasoning model is designed for coding, mathematics, science, and research-based tasks.

While routing queries might be better than a model picker, I’m not a fan of any approach that hides information from users. For example, OpenAI’s o1 originally didn’t share reasoning traces; now it does and it’s much better. I hope they show what model they use for a query and keep some manual control over model choices.
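The kind of transparent routing described above can be sketched in a few lines. This is a minimal illustration, not how OpenAI or any other vendor routes queries: the keyword list, the `RoutedQuery` type, and the `route` function are all hypothetical names invented for this example, and a real router would more likely use a classifier than keyword matching. The point is only that the chosen model and the reason for choosing it are returned to the user, and that a manual choice always wins.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical hints that a query needs a reasoning model (assumption for this sketch).
REASONING_HINTS = ("prove", "debug", "calculate", "solve", "code")


@dataclass
class RoutedQuery:
    query: str
    model: str        # model label that would be shown to the user
    reason: str       # short explanation of why this model was picked
    overridden: bool  # True when the user picked the model manually


def route(query: str, manual_choice: Optional[str] = None) -> RoutedQuery:
    """Pick a model for a query while keeping the decision visible."""
    # Manual control takes priority over automatic routing.
    if manual_choice is not None:
        return RoutedQuery(query, manual_choice, "user selected model", True)

    lowered = query.lower()
    for hint in REASONING_HINTS:
        if hint in lowered:
            return RoutedQuery(query, "o3-mini", f"matched hint '{hint}'", False)

    # Default to the general-purpose model when no hint matches.
    return RoutedQuery(query, "GPT-4.5", "no reasoning hint matched", False)
```

Calling `route("Debug this function")` returns a record naming `o3-mini` along with the matched hint, while `route("Write a poem", manual_choice="GPT-4o")` keeps the user's pick and marks it as an override.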

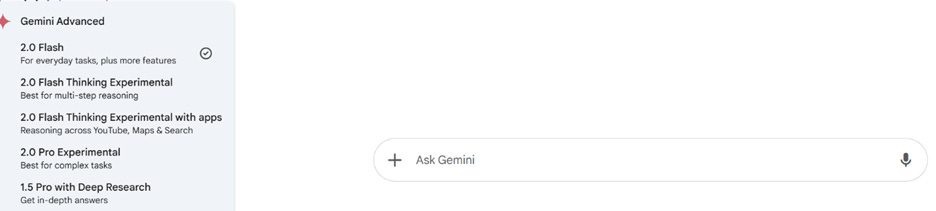

Gemini’s Sparse Interface

Google Gemini 2.0 offers variants like Flash, Pro, and Flash-Lite, as well as Deep Research. Key features include multimodal understanding and interaction, coding, reasoning, controllable text-to-speech, and access to YouTube, Maps, and search. The specific AI model chosen for a query dictates what features are available.

Gemini 2.0 uses a model picker with several choices rather than explicit feature toggles. I have found myself favoring the “2.0 Flash Thinking with apps” model over the Gemini 2.0 Pro model because access to search is almost always useful.

The Answer Engine

Back in 2023, I spoke of the AI-based LLM chatbot as the “Answer Engine” replacing the search engine as the interface for users to get access to desired information. Perplexity pioneered the mix of web search and AI to answer factual queries and generate reports. Others have emulated their success, adding web search connectors and the more powerful Deep Research feature.

Mistral’s Le Chat leans in on access to high-quality data and web search, and it includes access to “recent information balanced across web search, robust journalism, social media, and multiple other sources” for better factuality. Le Chat also has code execution for data analysis and other uses, making this a powerful combination for analyzing and interpreting public web datasets. Le Chat hides the model from you and picks what you need based on your query.

One step beyond answer engines is Deep Research, introduced in Gemini 2.0 in December, then by OpenAI last month. Deep Research combines multiple steps of web search and AI model generation to conduct a deep dive on a topic and generate a thorough report. Grok-3 has a DeepSearch feature, but its output is more comparable to web search than Deep Research.
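The "multiple steps of web search and AI model generation" pattern can be sketched as a simple loop. This is an illustrative sketch under stated assumptions, not the actual implementation behind Gemini or OpenAI Deep Research: `deep_research`, the `search` and `generate` callables, the prompt prefixes, and the `DONE` stop signal are all hypothetical. The caller supplies a search function and a model-generation function, so the sketch does not depend on any particular vendor API.

```python
from typing import Callable, List


def deep_research(
    topic: str,
    search: Callable[[str], List[str]],   # assumed: returns text snippets for a query
    generate: Callable[[str], str],       # assumed: returns model text for a prompt
    max_rounds: int = 3,
) -> str:
    """Alternate search and generation, then write a report from the notes."""
    notes: List[str] = []
    question = topic

    for _ in range(max_rounds):
        # Step 1: gather evidence for the current question.
        notes.extend(search(question))

        # Step 2: ask the model what is still missing.
        followup_prompt = (
            f"FOLLOW-UP: Topic: {topic}\nNotes so far:\n" + "\n".join(notes)
            + "\nReply with one follow-up search query, or DONE if none is needed."
        )
        question = generate(followup_prompt).strip()
        if question == "DONE":
            break

    # Step 3: synthesize everything gathered into a single report.
    report_prompt = f"REPORT: Write a thorough report on {topic} using:\n" + "\n".join(notes)
    return generate(report_prompt)
```

The round limit keeps the loop bounded even if the model never signals that it is done; a single-pass answer engine corresponds roughly to `max_rounds=1`.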

Creating Artifacts

One of the major enhancements to the chat interface has been the ability to view generated code, text, or images as artifacts. Claude’s Artifacts feature first introduced this capability and does an excellent job here.

The other innovation Claude 3.7 Sonnet brings to the table is being a “hybrid” reasoning model that can switch between rapid responses and deep thinking on command. It features "thinking content blocks" to display its internal reasoning and has a 200k context window.

The Rundown

Thanks to this design convergence, all major AI chatbots now offer an enhanced interface built around a common set of features:

Thinking / Reasoning.

Web search.

Canvas to display code, images, or writing Artifacts.

Image upload for visual understanding tasks.

Code Interpreter to run code.

Execution of tools.

Deep Research to generate longer topic-specific reports.

As the table shows, not all AI chatbots have all these features, but most features are widely shared. Competition will drive the various AI model interfaces to converge further until they support all of them.

Conclusion

Top use cases for an AI model chatbot include:

Getting answers to questions.

Generating code and aiding with software.

Research, writing, and generating reports.

Data analysis.

The AI chatbot interface has evolved to support these use cases. The addition of a code interpreter helps with checking generated code and conducting data analysis. Canvas and Artifacts also help with that, as well as with creative writing output and reports.

Since I do a lot of research and writing, most of my queries that aren’t coding-related are fact-related. These queries need grounding (an answer engine) to work best, making web search the most valuable feature for these use cases. I personally find Deep Research from Gemini and OpenAI the most useful AI tools for both work and curiosity.

More complex use cases will require more complex AI agents. For example, getting an AI agent to research, comparison shop, and buy online products for you will require other features, such as Operator, which is out of scope for an AI chatbot. A new class of interface will support control of these web-browsing and computer-using AI agents.