Evaluating AI Agents

AI agent benchmarks simulate real-world complexity to compare AI agents under controlled conditions. Enterprise AI agent deployment requires rigorous evaluations, with benchmarking plus pilot evals.

The Challenge of AI Agent Assessments

With the rise of many AI agents and agentic AI applications, both for consumers and in the enterprise, we face the challenge of deciding which AI agents are most effective and useful.

As this article from Galileo on AI agent evaluation defines it, “AI agent evaluation helps assess how effectively agentic AI applications perform specific tasks.” Evaluating AI agents is challenging because of the variety of contexts and applications.

Context: The context of data and inputs can affect AI agent performance significantly, but this could be different for each user and use case. It’s not just about how well the AI agent performs, but about how well it is provided with the right context to succeed.

Applications: The applications for the AI agent can vary. While some AI agents, like web browser AI agents, are designed to work on a variety of use cases, they will show strengths and weaknesses in specific sub-domains. For example, the web browser agent might be great for some shopping assistant tasks but terrible at collating social media information.

The most important challenge is the gap between real-world use-case workloads and what benchmarks measure. For simpler AI models, benchmarks have been useful, but as AI agents become more complex and sophisticated in real-world use, metrics and benchmarks need to follow as well.

AI agents take multi-step actions with tools, such as browsers, IDEs, and APIs, to carry out complex workflows. Evaluating them is trickier than scoring a single output: you care about whether they finish real tasks completely, safely, quickly, and cheaply, even under varied conditions and edge cases. Because each of these success metrics must be judged across each kind of application, the number of judgments multiplies and assessment becomes complex.

In the article “Lessons from Enterprise AI Adoption,” we promised to report on ‘good’ AI agent releases and share assessments of them. That promise runs into a challenge because AI agents and their use cases are so varied. Still, it motivates us to consider good evaluation measures and methods to triage the best AI agents from the rest and present guidance on measuring real-world AI agent utility.

Core Evaluation Metrics

Evaluating AI agents requires using both formal benchmarks and comprehensive real-world assessments to figure out their practical utility. These evaluations cover dimensions like accuracy, robustness, efficiency, scalability, and ethical alignment, ensuring agents are reliable and effective outside the lab.

Evaluation of AI agents involves several primary components:

Correctness and Accuracy: How often an AI agent completes a given task successfully and produces correct results. This “does it do the job?” metric correlates most with AI agent usefulness.

Accuracy metrics: Task completion rates, success rate on specified tasks (binary or graded), response relevance. Use execution-based checks (assertions, unit tests, DOM output checks, business rules) and functional success measures.
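As a minimal sketch of an execution-based check, the snippet below computes a binary task success rate. The `TaskResult` record, the task IDs, and the check functions are hypothetical names invented for illustration; they do not come from any particular evaluation framework.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class TaskResult:
    """Hypothetical record of one agent run: the task ID and the agent's final output."""
    task_id: str
    output: dict

# A check is any function that inspects the output and returns True on success.
Check = Callable[[dict], bool]

def success_rate(results: list[TaskResult], checks: dict[str, list[Check]]) -> float:
    """Fraction of tasks whose output passes every check registered for that task.

    A task with no registered checks counts as a failure, so that missing
    checks cannot silently inflate the score.
    """
    if not results:
        return 0.0
    passed = 0
    for r in results:
        task_checks = checks.get(r.task_id, [])
        if task_checks and all(check(r.output) for check in task_checks):
            passed += 1
    return passed / len(results)

# Illustrative business-rule checks for two made-up tasks.
checks = {
    "refund-001": [
        lambda out: out.get("status") == "refunded",
        lambda out: out.get("amount") == 25.0,
    ],
    "lookup-002": [lambda out: "order_id" in out],
}
results = [
    TaskResult("refund-001", {"status": "refunded", "amount": 25.0}),
    TaskResult("lookup-002", {"error": "timeout"}),
]
print(success_rate(results, checks))  # → 0.5
```

Graded scoring would replace the boolean checks with functions that return a score between 0 and 1. The binary version is shown because it is the simplest to audit.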

Reliability and Robustness: How well an AI agent performs across varied scenarios and changing environments, including resistance to errors, adversarial conditions, and consistent long-term operation. This is important because some workflows demand few or no errors, and unreliable AI agents that perform well only some of the time won’t be acceptable for most use cases.

Reliability Metrics: Run-to-run variance; handoff or assist rates (how often humans must intervene); incident rate by error class (navigation, tool call, grounding, state loss).

Robustness Metrics: Success under drift (UI/site changes), network hiccups, and adversarial or ambiguous instructions. Test changing production environments.
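One way to put numbers on reliability is to repeat the same task set several times and compare the runs. The sketch below assumes a hypothetical layout where `runs[i][j]` records whether task `j` succeeded on run `i`. The function name and the returned keys are illustrative choices, not a standard.

```python
from statistics import mean, pstdev

def reliability_summary(runs: list[list[bool]], handoffs: int, total_attempts: int) -> dict:
    """Summarize repeated runs of the same task set.

    runs[i][j] is True if task j succeeded on run i (assumed layout).
    handoffs is the number of attempts where a human had to intervene.
    """
    per_run_rates = [mean(1.0 if ok else 0.0 for ok in run) for run in runs]
    n_tasks = len(runs[0])
    # A task counts as reliably solved only if every run solved it.
    always_solved = sum(all(run[j] for run in runs) for j in range(n_tasks))
    return {
        "mean_success_rate": mean(per_run_rates),
        "run_to_run_stdev": pstdev(per_run_rates),
        "all_runs_pass_rate": always_solved / n_tasks,
        "handoff_rate": handoffs / total_attempts,
    }

# Three repeated runs over four made-up tasks; 3 of 12 attempts needed a human.
runs = [
    [True, True, False, True],
    [True, False, False, True],
    [True, True, False, True],
]
summary = reliability_summary(runs, handoffs=3, total_attempts=12)
print(summary)
```

The gap between `mean_success_rate` and `all_runs_pass_rate` is a useful signal: an agent that solves a task on some runs but not others looks better on average than it will feel in a workflow that demands consistency.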

Safety and alignment: How well an AI agent avoids risky or non-aligned behavior or outcomes, resists jailbreaks or harm-inducing inputs, while still being effective at completing safe in-range tasks. AI safety and alignment must be measured, not assumed.

Safety Metrics: jailbreak resistance, permission boundary adherence, PII handling, “do-no-harm” checklists tailored to your domain.

Alignment and Bias Metrics: Monitor and check for fairness, absence of harmful bias, transparency, and compliance with regulatory and legal AI standards.

Efficiency: How efficient the AI agent is at completing its task. This is important because two AI agents can have equal success rates, but one can cost 10 times less and have much lower latency.

Efficiency Metrics: Task latency, steps or clicks per task, API and tool calls, token usage, and cost per completed AI agent task (normalized).
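Normalizing cost by completed tasks, rather than by attempts, is what exposes the difference between two agents with similar success rates. The sketch below assumes a hypothetical `RunLog` record and placeholder per-token prices. Substitute the actual rates of whichever model provider you use.

```python
from dataclasses import dataclass

@dataclass
class RunLog:
    """Hypothetical per-attempt log entry."""
    succeeded: bool
    latency_s: float
    input_tokens: int
    output_tokens: int

def cost_per_success(logs: list[RunLog], usd_per_1k_input: float, usd_per_1k_output: float) -> float:
    """Total spend across all attempts divided by the number of successful ones.

    Failed attempts still cost money, so they are included in the numerator.
    Returns infinity if nothing succeeded.
    """
    total_cost = sum(
        log.input_tokens / 1000 * usd_per_1k_input
        + log.output_tokens / 1000 * usd_per_1k_output
        for log in logs
    )
    successes = sum(1 for log in logs if log.succeeded)
    return total_cost / successes if successes else float("inf")

# Made-up logs and placeholder prices, for illustration only.
logs = [
    RunLog(True, 12.0, 4000, 1000),
    RunLog(False, 30.0, 10000, 2000),
    RunLog(True, 8.0, 6000, 1000),
]
print(cost_per_success(logs, usd_per_1k_input=0.01, usd_per_1k_output=0.03))
```

The same pattern applies to latency or tool calls: divide the total across all attempts by the number of successes to get a per-completion figure.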

Effectiveness and business outcomes: How well the AI achieves desired user or business outcomes. The ultimate goal of the AI agent is value-add to business workflows and user productivity, not simply task completion.

Outcome and Effectiveness Metrics: Human time saved, error reduction, SLA attainment, ROI per workflow.

Maintainability: How well an AI agent system can recover from a break in the system. The ideal AI system will support fast diagnosis and repair.

Maintainability metrics: Mean-time-to-fix after breakage, test coverage, share of tasks with deterministic replays or tracing, and debuggability.
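Mean-time-to-fix is simple to compute once breakages are logged with timestamps. This sketch assumes each incident is recorded as a hypothetical `(broke_at, fixed_at)` pair. The dates are invented for the example.

```python
from datetime import datetime, timedelta

def mean_time_to_fix(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Average of (fixed_at - broke_at) over resolved incidents."""
    if not incidents:
        raise ValueError("no resolved incidents to average")
    total = sum((fixed - broke for broke, fixed in incidents), timedelta())
    return total / len(incidents)

# Three made-up incidents lasting 2, 1, and 3 hours.
incidents = [
    (datetime(2025, 1, 6, 9, 0), datetime(2025, 1, 6, 11, 0)),
    (datetime(2025, 1, 10, 14, 0), datetime(2025, 1, 10, 15, 0)),
    (datetime(2025, 1, 15, 8, 0), datetime(2025, 1, 15, 11, 0)),
]
print(mean_time_to_fix(incidents))  # → 2:00:00
```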

Common Benchmarks for AI Agents

AI agent benchmarks provide structured frameworks and datasets for comparing AI agents under controlled conditions, simulating real-world complexity. While there are limitations to benchmarks, they can give us a first-cut quality metric of AI agents on specific measured domains.

There are well over 100 benchmarks assessing AI models and AI agents. The benchmarks that apply to AI agents typically target specialized task abilities such as code generation, web browsing, and tool calling. Some of the leading benchmarks in each area:

Tool calling: The Berkeley Function-Calling Leaderboard evaluates an AI model’s ability to call functions or tools accurately. At the time of writing, GLM-4.5 and Claude Opus 4.1 lead on this benchmark. Other benchmarks that assess how AI agents and AI models call and use tools include MINT, ColBench, ToolEmu, and MetaTool.

Coding: SWE-bench and SWE-bench Verified evaluate AI coding models and AI coding agents. SWE-bench evaluates AI systems on how well they can solve real GitHub issues, as scored by unit tests. The Verified split is a 500-task, human-validated subset designed to reduce false passes and impossible tasks.

There are separate SWE-bench leaderboards for AI models and full AI agents. Extensions include SWE-PolyBench, evaluating multi-language repository-level programming tasks, SWE-Bench Multimodal, and SWE-Gym, a training and eval environment.

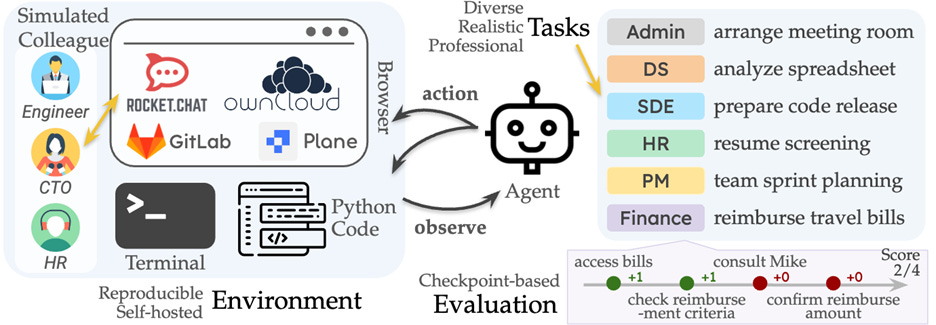

Web browser agents: WebArena focuses on web-based tasks like e-commerce, forums, and content management, measuring the agent's ability to achieve goals the way real users would. The group behind the WebArena benchmark has also introduced TheAgentCompany, a benchmark for ‘consequential real world tasks’.

Computer-use: OSWorld is a leading benchmark for multimodal AI agents that measures real computer tasks spanning web and desktop applications.

General AI assistants: The GAIA benchmark evaluates general AI assistants in multimodal tasks requiring reasoning and sophisticated tool use. The benchmark consists of 466 real-world questions requiring browsing, tool use, and multimodality. Early results showed large human–AI gaps, but the current leaderboard shows scores of 84% for AI agents Skywork Deep Research Agent v2 and Co-Sight v2.

From Benchmarks to Real-World Evaluations

Each benchmark rates isolated capabilities in common use cases, so it doesn’t give a comprehensive view of AI agent capabilities. For personal AI agent use, standard benchmarking and then evaluating through trial use may be enough, but enterprise deployment requires more rigorous testing and evaluation.

Enterprises need to use in-house datasets and evaluation protocols tailored to their real system usage to complement standard benchmarks, but benchmarks can serve as a starting point. Benchmarks can be used to shortlist models, agents, and frameworks by evaluating the key capabilities you need. You can then run the AI agents that pass this first screen through your own evaluations.
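The first-screen idea can be sketched as a simple threshold filter. The candidate names, benchmark labels, scores, and minimums below are all made-up placeholders, not real leaderboard results.

```python
def shortlist(candidates: dict[str, dict[str, float]], minimums: dict[str, float]) -> list[str]:
    """Return candidates that meet every minimum benchmark score.

    A candidate with no reported score on a required benchmark is excluded.
    """
    return sorted(
        name
        for name, scores in candidates.items()
        if all(
            bench in scores and scores[bench] >= floor
            for bench, floor in minimums.items()
        )
    )

# Placeholder scores on two capability areas that matter for a hypothetical use case.
candidates = {
    "agent-a": {"coding": 0.62, "web": 0.40},
    "agent-b": {"coding": 0.70, "web": 0.55},
    "agent-c": {"web": 0.80},
}
minimums = {"coding": 0.60, "web": 0.50}
print(shortlist(candidates, minimums))  # → ['agent-b']
```

A hard threshold on each required capability is used here instead of a weighted average, so that a high score in one area cannot mask a disqualifying weakness in another.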

An organized evaluation and validation process might look like this:

Define success criteria: Write acceptance criteria per workflow (what counts as “done”), plus red-lines (what must never happen). Encode checks as assertions or unit tests on outputs.

Benchmarking: Evaluate the AI agent with domain-specific benchmarks that match your use cases.

Sandbox deployment: Run AI agents in a test environment to enable review of full traces (observations, actions, and tool interaction). Evaluate the AI agent on success rate, functional correctness, safety, and efficiency (latency, steps, cost).

Pilot evaluation: Within a pilot environment, let the AI agent propose actions while humans still take action. Compare AI agent plans and results to human versions, and measure agreement, plan quality, or other measures of correctness and accuracy. Evaluate how agents perform across variable user intents, multi-step procedures, changing goals, and contextual shifts. Measure reliability and robustness.

Initial production with assisted autonomy: Allow the AI agent to act within permission boundaries (e.g., limited domains, read-only modes, approvals). Track handoff rate, intervention time, error taxonomy, near-misses.

Production use: Expand the AI agent’s autonomy. Monitor the AI agent’s ongoing performance on real users and data, tracking error rates, user engagement, and adaptation to unexpected scenarios. Tighten guardrails and conduct adversarial evaluations.

Production monitoring: Monitor drift and AI agent utility over time. Build the collection of user and stakeholder feedback and performance metrics into deployment and monitoring, and use them to drive iterative improvements.
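The staged process above can be sketched as a promotion gate, where an agent advances one stage at a time only when it meets the thresholds and crosses no red lines. The stage names, the `StageMetrics` and `Gate` records, and the threshold values are assumptions made for illustration. Real gates would be defined per workflow.

```python
from dataclasses import dataclass

# Simplified, assumed stage names loosely following the process described above.
STAGES = ["benchmarking", "sandbox", "pilot", "assisted_autonomy", "production"]

@dataclass
class StageMetrics:
    success_rate: float        # fraction of tasks passing acceptance checks
    red_line_violations: int   # count of "must never happen" events
    handoff_rate: float        # fraction of tasks needing human intervention

@dataclass
class Gate:
    min_success_rate: float
    max_handoff_rate: float

def next_stage(current: str, metrics: StageMetrics, gate: Gate) -> str:
    """Advance one stage if the gate is passed; otherwise stay at the current stage."""
    idx = STAGES.index(current)
    passed = (
        metrics.red_line_violations == 0
        and metrics.success_rate >= gate.min_success_rate
        and metrics.handoff_rate <= gate.max_handoff_rate
    )
    if passed and idx < len(STAGES) - 1:
        return STAGES[idx + 1]
    return current

gate = Gate(min_success_rate=0.90, max_handoff_rate=0.10)
print(next_stage("sandbox", StageMetrics(0.92, 0, 0.05), gate))  # → pilot
print(next_stage("sandbox", StageMetrics(0.99, 1, 0.01), gate))  # → sandbox
```

Treating any red-line violation as an automatic hold, regardless of success rate, reflects the distinction between acceptance criteria and red lines in the first step.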

Conclusion: Evaluation Promise and Pitfalls

I started looking at AI agent evaluations to find the best benchmarks for AI agents, yet the main lesson here is that general benchmarks aren’t enough for AI agent assessment. Benchmarks are a start, but you need to evaluate AI agents with a personalized approach that matches your unique situation.

The main pitfall of AI agent evaluations is stopping short of a full evaluation in one way or another. One error is going to production after only passing a standard benchmark, without evaluating your use case, your data, or your policies. Another is ignoring efficiency or safety in evaluations, setting yourself up for excessive costs or unintended harms downstream.

A credible AI agent evaluation program combines public benchmarks to calibrate capability with task-specific, execution-based tests in pilot and production that prove the AI agent is correct, useful, safe, and efficient for your use cases. Ongoing production monitoring can confirm that the AI agent continues to perform.

Benchmarking may yet mature further, but the bottom line is that a full real-world assessment requires pilot or even production use and monitoring.

Those caveats about the limitations of benchmarking aside, we have used benchmarks to judge progress on AI models, and standard AI agent benchmarks will be our first cut to gauge overall progress on AI agents as well. We will complement that with ‘vibe checks’ drawn from the real-world experience of others to assess particular AI agents.