Explaining the AI Infrastructure Boom

AI runs on compute. Hyperscalers and AI labs see a $10 trillion AI opportunity and are racing to build out AI data centers to scale AI capacity. Two AI visionaries explain why it all makes sense.

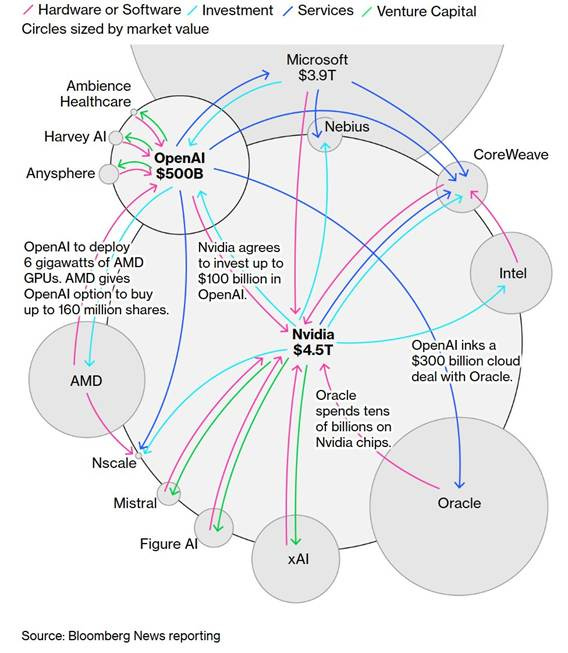

Big Tech’s Big Deals for AI Compute

By 2030, we will be in a world of material abundance [with AI] but I think will be a world of absolute compute scarcity … Your ability to produce and create will be limited by the compute power behind it. … one thing we think about a lot is how to increase the supply of compute in the world. – Greg Brockman, OpenAI

This Monday, AMD rose a whopping 34% on news of a multi-year deal worth tens of billions to supply AI chips to OpenAI. The deal was for 6 gigawatts of compute capacity.

Wasn’t it just last month that Nvidia and OpenAI announced an even bigger, hundred-billion-dollar AI chip deal? Why is OpenAI making multiple deals? What’s going on?

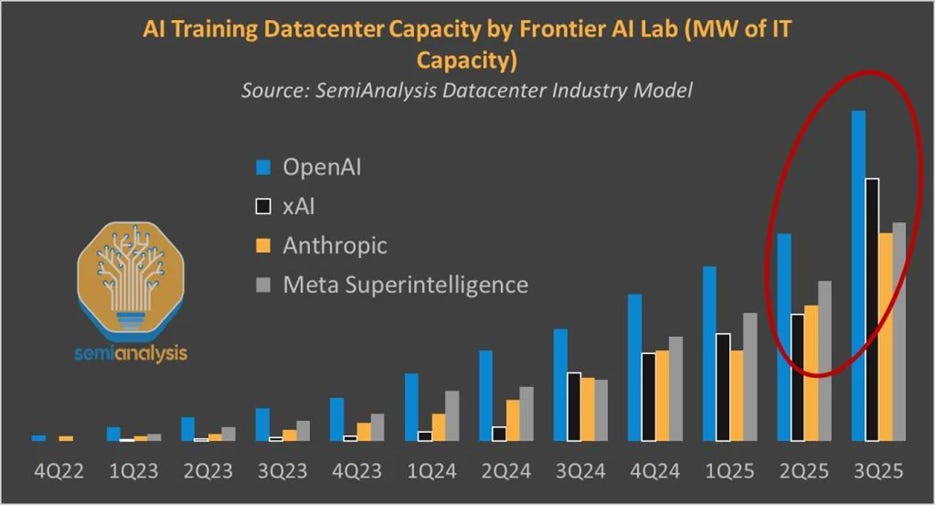

What’s driving this is AI demand. AI usage is growing exponentially, which creates insatiable demand for the compute needed to serve it, and supply can’t keep up. The entire AI industry is currently constrained by the supply of compute.

The Big Tech hyperscalers that need and use massive compute - Google, Microsoft, Amazon (AWS), Meta - and AI labs like xAI, OpenAI, and Anthropic have been investing in AI infrastructure and executing massive partnerships to support AI demand, with bigger announced investments this year than ever:

Microsoft is investing $80 billion in AI in 2025.

OpenAI announced the $500 billion Stargate project in January, and has since been executing on that, collaborating with Oracle on a $300 billion deal, adding 4.5 gigawatts of AI data center capacity and recently announcing five new data center sites.

Meta is spending $72 billion on AI infrastructure in 2025, with even more in 2026, amounting to hundreds of billions in AI investment in coming years.

Google is also increasing their CapEx to $85 billion in 2025, $10 billion more than they expected. In July, they reported that “demand is so high for Google’s cloud services that it now amounts to a $106 billion backlog. It’s a tight supply environment.” Google is accelerating data center construction to meet Cloud customer demand, announcing investments in cloud and AI infrastructure across many U.S. states in coming years.

Elon Musk’s xAI has made their mark as the fastest mover by building Colossus in a matter of months. They are doubling the size of Colossus to 200,000 GPUs. Now xAI is laying groundwork for Colossus 2, the first 1 GW datacenter in the world, raising $20 billion for Colossus 2 and signing a deal for Nvidia to supply AI chips.

Beyond the US big tech firms, Chinese tech companies and other sovereign nations are also betting big on AI infrastructure. For example, Chinese cloud provider Alibaba is spending $50 billion on AI, and every country wants sovereign AI capabilities for their own economic and national security in the AI era. Nvidia announced in May that telcos across five continents are building sovereign AI infrastructure with Nvidia, with countries like Japan, Indonesia, Qatar, France, Canada, and Ecuador building out their own sovereign AI infrastructure.

What’s Driving the AI Infrastructure Boom

While stock prices of companies like Oracle, Nvidia, and AMD are jumping on AI infrastructure deals, the excitement is tempered by concerns that a market bubble could be forming around AI hype. The AI infrastructure boom shares parallels with the railroads of the 1800s and the internet dot-com boom of the late 1990s. In both cases, there were booms, infrastructure built to over-capacity, investment bubbles, and busts.

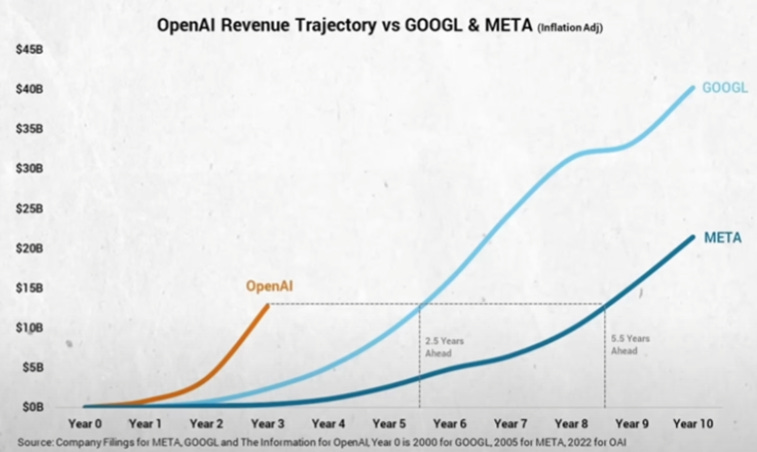

However, there are a few differences. A large portion of AI data center buildout is not speculative but serves AI demand that is already here. The revenue of OpenAI and Anthropic is real and in the billions, ramping faster than any new product in history.

As AI improves, it compounds AI demand and revenue. In the past 2 years, AI went from LLM-based chatbots to AI agents built on reasoning models, capable of more useful work and hungrier for tokens. Even aggressively high estimates of AI demand growth haven’t kept up with actual AI demand.

The Big Tech hyperscalers and AI companies like OpenAI expect to see AI demand increase exponentially as AI accelerates towards superintelligence levels and AI use cases multiply. They are planning and building AI data centers to meet this projected future AI demand, believing that AI will grow into a $10 trillion business.

Two visionary AI chip company CEOs, Nvidia CEO Jensen Huang and Groq CEO and founder Jonathan Ross, have given interviews recently that help explain where this number comes from and how it justifies the AI buildout.

The View from Nvidia

“AI inference isn’t going to hundred x, or a thousand x, it’s going to one billion x.” – Jensen Huang

This recent Nvidia CEO Jensen Huang interview on the BG2 podcast discussed the future of computing in the AI era and Nvidia’s role in AI and the AI data center buildout. Jensen Huang has been an incredibly visionary AI leader, making bold bets on AI for almost 20 years that are now paying off by making Nvidia the most valuable company in the world. When Jensen speaks, we ought to listen.

Jensen Huang’s key points:

Demand for compute due to AI is growing exponentially. That demand is compounding because of two exponentials: first, the increasing number of customers using AI, and second, the increasing computational requirements of AI reasoning, where reasoning is scaled with more inference compute.

AI augmentation of human intelligence is highly scalable. This scaling is so rapid because AI is rapidly improving. As AI improves, the portion of human labor it can displace increases. Jensen Huang used the analogy of augmenting a $100,000 employee with a $10,000 AI that makes them two times more productive. Any business would make that AI investment in a heartbeat.

As AI reaches a threshold where it can augment significant portions of human intellectual labor, demand for AI will increase commensurately with the cost of that labor. Applying his example to global GDP, Jensen suggests that when the $50 trillion of global GDP (a bit over half of total GDP) that is based on human intelligence can be augmented by AI, a fraction of that, about 20% or “$10 trillion needs to run on a machine.”

Thus, Huang sees a $10 trillion AI market opportunity, which could be reached as soon as the end of this decade.

Scalable AI needs scalable compute to run AI inference and generate tokens. Huang projects that about $5 trillion of CapEx annually “makes sense” in a world with $10 trillion of generated tokens per year. The current market is estimated at $400 billion, but it could easily increase four or five times.

Also benefitting Nvidia is that GPU demand for data centers is driven by both AI and a shift to accelerated computing. Existing general-purpose computing infrastructure is being refreshed with AI capabilities, driving more demand for GPUs. Jensen Huang even boldly stated that Nvidia reaching a trillion dollars of AI-driven revenues by 2030 is “near certain because we’re almost already there.”
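The arithmetic behind these figures can be restated as a short back-of-the-envelope sketch. It uses only the numbers quoted above; the variable names are illustrative, and valuing an employee’s output at their salary is a simplifying assumption made here, not something stated in the interview.

```python
# Back-of-the-envelope restatement of the figures quoted above.
# Inputs come from the interview summary; variable names are illustrative.

TRILLION = 1e12

# Employee example: a $100,000 employee plus a $10,000 AI, twice as productive.
salary = 100_000
ai_cost = 10_000
productivity_multiplier = 2.0

# Assumption (ours): the employee's output is valued at their salary.
extra_output = salary * (productivity_multiplier - 1)
return_per_ai_dollar = extra_output / ai_cost

# Market example: $50 trillion of intelligence-based GDP, about 20% run on machines.
intelligence_gdp = 50 * TRILLION
machine_share = 0.20
ai_market = intelligence_gdp * machine_share

# CapEx figure quoted alongside the $10 trillion token market.
annual_capex = 5 * TRILLION
capex_to_revenue = annual_capex / ai_market

print(f"Return per AI dollar: {return_per_ai_dollar:.0f}x")
print(f"Implied AI market: ${ai_market / TRILLION:.0f} trillion")
print(f"CapEx as share of token revenue: {capex_to_revenue:.0%}")
```

Changing `machine_share` or `productivity_multiplier` shows how sensitive the headline number is to those two inputs, which is where most of the disagreement about the buildout sits.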

Groq Founder Jonathan Ross Interview

“The new economy is going to be AI, and it’s going to be built on compute.” – Groq founder Jonathan Ross

Groq Founder Jonathan Ross was a developer of Google’s TPU before he founded Groq to build AI chips for inference. As a visionary AI leader, he shared cogent perspectives on AI technology and the AI chip business in his interview.

He sees that everything in AI infrastructure is supply-constrained and the demand for compute power for AI is insatiable. This is because AI demand itself is insatiable.

The compute bottleneck for AI is driving major tech companies to double down on AI infrastructure spending to scale revenue. He explained that if OpenAI or Anthropic had double their current inference compute, their revenue would almost double within a month, because they could serve more users and improve product quality.

Because the AI market is supply-constrained, Groq focuses on a faster supply chain (6-month lead time for LPUs compared to 2 years for GPUs) to address the compute capacity shortage.

From his perspective, AI adds value at any scale. If using AI provides leverage for wealth creation by displacing intellectual labor, then compute is the volume knob:

“I can add compute to the economy and the economy gets stronger.”

In this view, AI inference is infinitely scalable, leading to a “virtuous cycle” of improvement and demand. Unlike the industrial revolution, where limited physical machinery limited energy use, AI can constantly improve its quality and expand its reach with more compute.

This further implies that the compute needed for AI has been dramatically underestimated.

That doesn’t mean there aren’t any limits. One is energy. Jonathan Ross stated that the countries that control compute will control AI, and compute cannot exist without energy. He sees the ultimate limiters to AI as computing capacity and energy and believes in encouraging innovation in energy to support scaling of AI computing.

Ross predicts AI will lead to deflationary lowering of costs of goods and services through automation and labor displacement. The use of AI and lower costs will support lifestyles of working less, allowing people to opt out of the economy. However, it will also create new jobs and industries that currently don’t exist, ultimately leading to a labor shortage rather than widespread unemployment.

Competition and Valuations

Both Jensen Huang and Jonathan Ross covered the competitive landscape for AI chips and systems. Jensen Huang claimed Nvidia’s competitive advantage is increasing, due to its annual release cycle, co-design across entire systems (chips, software, networking), and the massive scale of its supply chain and customer deployments.

Yet AI chip buyers want to avoid an Nvidia monopoly and want more capacity. Ross believes that AI companies and hyperscalers will try to build their own chips to secure compute allocation, even though building chips is difficult and new designs would be behind where Nvidia and Groq are.

Both Jensen Huang and Jonathan Ross believe that AI labs like OpenAI and Anthropic are undervalued and will grow significantly. Ross spoke of them potentially joining the Mag 7 to become “Mag 9” or “Mag 11.” Huang felt that OpenAI is “likely going to be the next multi-trillion dollar hyperscale company.”

About 700 million people, 10% of the world, are weekly active users of AI. AI can still become 100 times bigger than it is today. – Jonathan Ross on OpenAI

Some Wall Street analysts are coming around to this view. JP Morgan released a report stating that OpenAI’s total addressable market (TAM) could reach $700 billion by 2030, noting that it currently has 800 million monthly activations and nearly $10 billion in annualized revenues, most of which comes from subscribers.

Conclusions and Predictions

The AI data center buildout is real and massive. It’s an AI infrastructure gold rush, and Nvidia is making a mint selling picks and shovels.

AI demand is driving the AI infrastructure investment boom. AI adoption has gone mainstream, and AI demand is growing exponentially. Computing is the raw material for AI, running the AI model training and AI model inference.

Michael Dell made a safe hedge by saying that at some point there will be too many AI data centers, but we are not there yet. At some point the AI infrastructure may overshoot AI needs, but the current AI buildout is simply to catch up to exploding AI demand. We are pretty far from any possible over-shoot of demand.

We are in inning three or four of the AI revolution. As AI improves, it provides greater benefits in business automation and creative uses in arts and science, increasing productivity, lowering costs, and transforming many sectors of the economy. AI supply scales with the AI computing power that is available to run AI. Thus, this ramp in AI will continue for some time as AI grows in usage and benefits.

Whether the AI infrastructure boom endures or goes bust depends on whether AI lives up to the predictions of 100-fold increases in AI revenues, a billion-fold increase in AI inference tokens, and a $10 trillion AI business opportunity in coming years.

These predictions are a tall order, but visionary AI leaders are telling us we have been underestimating, not overestimating, AI demand and the AI opportunity. I wouldn’t bet against them.

Sources

Nvidia CEO Jensen Huang interview on the BG2 podcast.

Groq Founder Jonathan Ross interview on 20VC with Harry Stebbings.

Dylan Patel interview on “Inside the Trillion-Dollar AI Buildout” with Patrick O’Shaughnessy.