Gemini 2.5, MiniMax-M1, and Advances in AI Reasoning

Gemini 2.5 and MiniMax-M1 teams share details on how their AI reasoning models were made.

Introduction

This week, Google released stable versions of Gemini 2.5 Flash and Pro and expanded the 2.5 family to include Gemini 2.5 Flash-Lite. In addition, Chinese AI company MiniMax released MiniMax-M1, an open source mixture-of-experts (MoE) hybrid-attention reasoning model. These are excellent AI model releases.

Both Google and the MiniMax team published technical papers alongside these releases that shed light on model training, model architecture, and reasoning capabilities. The MiniMax team shares more technical specifics on architecture and training, but both technical reports are quite informative about the state of training and architecture for AI reasoning models.

Gemini 2.5 Release

Google has released Gemini 2.5 as “stable and generally available.” To get to this stable release, Google has been releasing Gemini 2.5 model checkpoints since their March release of Gemini 2.5 Pro Experimental (gemini-2.5-pro-exp-03-25). They tweaked how these models reason, improving their performance with each iteration.

The result is that the whole suite of Gemini 2.5 models is high performance across the board, strong on long context tasks, reasoning, and multi-modality. They natively accept text, image, video and audio input, and Gemini 2.5 Flash and Pro can output in both text and audio. Gemini 2.5 Flash and Flash-Lite aren’t the strongest models on coding, but they are respectable, and Gemini 2.5 Pro comes in with 69% on LiveCodeBench and a state-of-the-art 82% on Aider Polyglot.

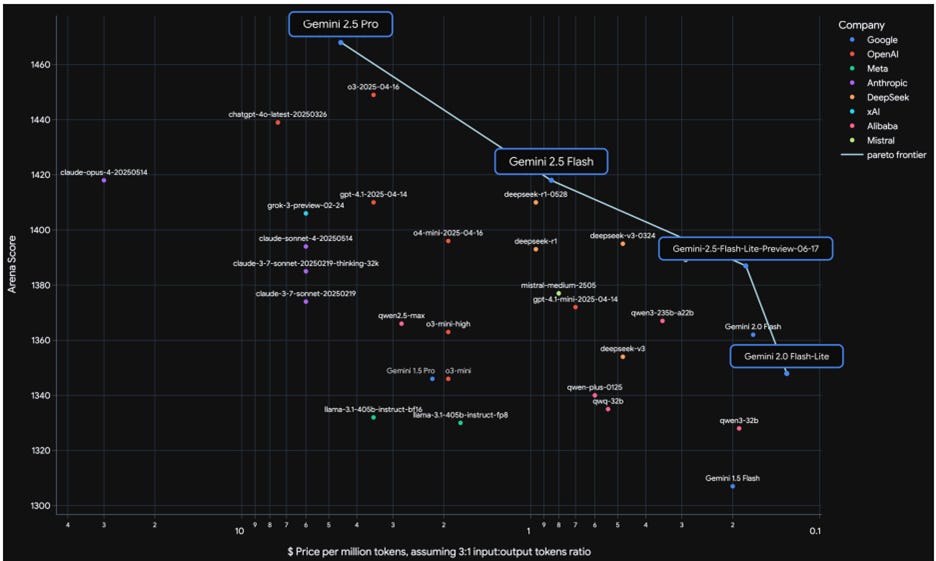

The Gemini 2.5 models are highly cost-competitive, as shown by Google’s chart comparing Arena Elo score versus API price. For example, Gemini 2.5 Flash-Lite scores above Gemini 1.5 Pro and o3-mini on Arena Elo while costing less than one tenth as much to serve, with its API priced at only $0.10 per million input tokens and $0.40 per million output tokens.
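To make the quoted prices concrete, here is a minimal sketch of the per-request arithmetic. The two prices come from the paragraph above; the workload (1,000 requests of 8,000 input and 500 output tokens each) is a made-up example, and the sketch ignores any caching discounts or pricing tiers the real API may have.

```python
# Per-million-token prices quoted above for Gemini 2.5 Flash-Lite (USD).
INPUT_PRICE_PER_M = 0.10
OUTPUT_PRICE_PER_M = 0.40


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the cost in USD of one request at the quoted prices."""
    return (input_tokens * INPUT_PRICE_PER_M
            + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


# Hypothetical workload: 1,000 requests, each with 8,000 input and 500 output tokens.
total = 1_000 * request_cost(8_000, 500)
print(f"${total:.2f}")  # prints $1.00
```

At these prices, input-heavy workloads such as long-document summarization are dominated by the cheaper input rate.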

Gemini 2.5 Technical Report

Google shared further details on Gemini 2.5 performance, architecture and training in a Technical Report “Gemini 2.5: Pushing the Frontier with Advanced Reasoning, Multimodality, Long Context, and Next Generation Agentic Capabilities.”

Gemini 2.5 models are sparse mixture-of-experts (MoE) models with native multi-modal (text, vision, and audio) support. The efficiency of the sparse MoE architecture contributes to the significantly improved performance of Gemini 2.5 over Gemini 1.5. Google overcame challenges in training stability of MoE architectures to achieve the boost in performance.
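Google does not disclose how Gemini 2.5 routes tokens to experts, so the following is only a generic sketch of top-k gating, the common way sparse MoE layers keep most parameters idle for any given token. The expert functions, router scores, and the choice of k=2 are all illustrative assumptions.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits, k):
    """Pick the k highest-scoring experts and renormalize their gate weights."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}


def moe_forward(x, experts, router_logits, k=2):
    """Weighted sum of the outputs of only the selected experts."""
    gates = route_top_k(router_logits, k)
    return sum(w * experts[i](x) for i, w in gates.items()), gates


# Toy setup: 8 scalar "experts" (each just scales its input), made-up router scores.
random.seed(0)
experts = [lambda x, s=s: s * x for s in range(1, 9)]
router_logits = [random.gauss(0.0, 1.0) for _ in experts]

y, gates = moe_forward(3.0, experts, router_logits, k=2)
print(f"experts used: {sorted(gates)} of {len(experts)}")
```

Only the selected experts run for each input, which is why total parameter count and per-token compute can diverge so sharply in MoE models.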

Much of the improved performance of Gemini 2.5 over prior Gemini generations is due to significant advancements in post-training. Google mentions a focus on data quality, algorithmic improvements in RL, and using the model itself to assist in the post-training process, saying:

These advancements have enabled Gemini 2.5 to learn from more diverse and complex RL environments, including those requiring multi-step reasoning.

Keeping its methods proprietary, Google does not mention the specific techniques used to improve RL-based post-training. However, recently published results suggest what these optimizations might be. For example, RL optimization doesn’t require external rewards but can use an LLM’s own assessed confidence; see “Confidence Is All You Need: Few-Shot RL Fine-Tuning of Language Models.” Using LLM-as-a-judge to assess responses bootstraps better results without externally curated answers.

Google improved Gemini 2.5 on code over its predecessors by increasing the code data in pre-training and adding a diverse set of novel software engineering tasks for training reasoning in post-training, so that Gemini can solve a range of software engineering tasks.

They improved video understanding in Gemini 2.5, supporting interesting use cases: comprehending a video to make an interactive learning app; creating animations from video; and retrieving moments from a video and reasoning over them.

Another advance in Gemini 2.5 is integrating tool use and reasoning. For example, native calling of Google Search is integrated with advanced reasoning. Specifically:

Gemini 2.5 integrates advanced reasoning, allowing it to interleave these search capabilities with internal thought processes to answer complex, multi-hop queries and execute long-horizon tasks. The model has learned to use search and other tools, reason about the outputs, and issue additional, detailed follow-up queries to expand the information available to it and to verify the factual accuracy of the response.

Combining this with agentic use cases is quite powerful. They note that Gemini Deep Research, based on the Gemini 2.5 Pro model, is able to achieve a state-of-the-art 32.4% on Humanity’s Last Exam.

The Gemini 2.5 Technical Report concludes by noting the saturation of benchmarks due to rapidly improving AI models, including improved Gemini models:

Over the space of just a year, Gemini Pro’s performance has gone up 5x on Aider Polyglot and 2x on SWE-bench verified (one of the most popular challenging agentic benchmarks). Not only are benchmarks saturating quickly, but every new benchmark that gets created can end up being more expensive and take longer to create than its predecessor …

MiniMax-M1

MiniMax-M1 is the first open source mixture-of-experts hybrid-attention reasoning model. Its MoE architecture has 456B total parameters, of which 45B are active per token, and it supports a massive 1 million token native context window for input and up to 80K tokens of output, among the largest of any current AI model.

As an AI reasoning model, MiniMax-M1 shows impressive performance on key benchmarks, on par with DeepSeek-R1-0528 and Gemini 2.5 Pro: 56% on SWE-bench Verified; 86% on AIME 2024 (math); 68.3% on FullStack Bench (coding). On long context reasoning, MiniMax-M1 is on par with Gemini 2.5 and ahead of almost every other open-weight model.

MiniMax-M1 Technical Report

The MiniMax-M1 Technical Report “MiniMax-M1: Scaling Test-Time Compute Efficiently with Lightning Attention” explains the innovations that made the AI model highly efficient in both training and inference.

Thanks to the lightning attention mechanism, MiniMax-M1 is highly efficient at inference over long token lengths, which helps it support long input contexts and long generations. It consumes 25% of the FLOPs of DeepSeek R1 at a generation length of 100K tokens. This is particularly helpful for reasoning, since it makes scaling test-time compute more efficient. The team released two versions of MiniMax-M1, with 40K and 80K thinking budgets, respectively.

The lightning attention mechanism builds on a linear attention variant called TransNormer, published in “The Devil in Linear Transformer” in 2022. It performs better than other linear attention mechanisms while being more space- and time-efficient than traditional softmax attention. In MiniMax-M1, one softmax attention layer follows every seven lightning attention layers, yielding a hybrid-attention architecture.

Linear alternatives to traditional attention have historically suffered from limited performance, but with hybrid attention MiniMax-M1 still achieves strong benchmark results for its size.
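The efficiency argument for linear attention can be seen in a toy example. The sketch below is plain, un-normalized causal linear attention, not MiniMax’s lightning attention kernel and not the TransNormer design (both add details such as normalization and tiled computation that are omitted here). It shows only the core idea: dropping the softmax lets the same output be computed from a fixed-size running state instead of revisiting every earlier token.

```python
import random


def causal_linear_attention_quadratic(Q, K, V):
    """O(n^2) form: out_t = sum over s <= t of (q_t . k_s) * v_s, with no softmax."""
    n, d_v = len(Q), len(V[0])
    out = []
    for t in range(n):
        row = [0.0] * d_v
        for s in range(t + 1):
            score = sum(q * k for q, k in zip(Q[t], K[s]))
            for j in range(d_v):
                row[j] += score * V[s][j]
        out.append(row)
    return out


def causal_linear_attention_recurrent(Q, K, V):
    """O(n) form: keep a running d_k x d_v state S = sum of outer(k_s, v_s)."""
    d_k, d_v = len(K[0]), len(V[0])
    S = [[0.0] * d_v for _ in range(d_k)]
    out = []
    for q, k, v in zip(Q, K, V):
        # Fold the current token into the state, then read it out with q.
        for i in range(d_k):
            for j in range(d_v):
                S[i][j] += k[i] * v[j]
        out.append([sum(q[i] * S[i][j] for i in range(d_k)) for j in range(d_v)])
    return out


# Random toy inputs: 6 tokens, key dimension 4, value dimension 3.
random.seed(0)
n, d_k, d_v = 6, 4, 3
Q = [[random.uniform(-1, 1) for _ in range(d_k)] for _ in range(n)]
K = [[random.uniform(-1, 1) for _ in range(d_k)] for _ in range(n)]
V = [[random.uniform(-1, 1) for _ in range(d_v)] for _ in range(n)]

a = causal_linear_attention_quadratic(Q, K, V)
b = causal_linear_attention_recurrent(Q, K, V)
max_diff = max(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb))
print(f"max difference between the two forms: {max_diff:.2e}")
```

The recurrent form does a constant amount of work per generated token regardless of how long the sequence already is, which is the property that matters for 100K-token reasoning traces.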

Another innovation in MiniMax-M1 is an RL training method called CISPO (Clipped IS-weight Policy Optimization) that improves the efficiency in the RL-training for AI reasoning:

CISPO clips importance sampling weights rather than token updates, outperforming other competitive RL variants.

They were able to show that CISPO significantly outperforms both DAPO and GRPO with the same number of training steps.
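One way to read the difference is to compare how much gradient each objective lets through for a single token. The sketch below follows my reading of the CISPO objective (a clipped, stop-gradient importance weight multiplying the advantage and the token log-probability) against the familiar PPO/GRPO-style clipped surrogate. The epsilon values are illustrative defaults, not the paper’s settings, and the function names are my own.

```python
def ppo_clip_grad_weight(ratio, advantage, eps_low=0.2, eps_high=0.2):
    """Gradient w.r.t. log pi of the clipped surrogate min(r*A, clip(r)*A).

    When the clipped branch is the active minimum, the token contributes
    no gradient at all.
    """
    clipped_out = ((advantage > 0 and ratio > 1 + eps_high)
                   or (advantage < 0 and ratio < 1 - eps_low))
    return 0.0 if clipped_out else ratio * advantage


def cispo_grad_weight(ratio, advantage, eps_low=0.2, eps_high=0.2):
    """Gradient w.r.t. log pi of stop_grad(clip(r)) * A * log pi.

    The importance weight is clipped, but the token still contributes.
    """
    clipped_ratio = min(max(ratio, 1 - eps_low), 1 + eps_high)
    return clipped_ratio * advantage


# A token whose probability rose sharply under the new policy (r = 1.5)
# and that has a positive advantage.
print(ppo_clip_grad_weight(1.5, 1.0))  # 0.0: the token is dropped from the update
print(cispo_grad_weight(1.5, 1.0))     # 1.2: the token is kept with a capped weight
```

For tokens inside the clipping range the two weights agree; they differ only on the large-ratio tokens that the clipped surrogate silences.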

Thanks to CISPO and the hybrid-attention, the RL training for this model was remarkably efficient:

Combining hybrid-attention and CISPO enables MiniMax-M1's full RL training on 512 H800 GPUs to complete in only three weeks, with a rental cost of just $534,700.
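As a sanity check on those figures, the implied GPU rental rate can be worked out directly. This sketch assumes “three weeks” means exactly 21 days of continuous use on all 512 GPUs, which the report does not state; if the run was shorter, the implied hourly rate would be higher.

```python
GPUS = 512
WEEKS = 3                  # "three weeks" taken as exactly 21 days (an assumption)
RENTAL_COST_USD = 534_700  # figure quoted in the report

gpu_hours = GPUS * WEEKS * 7 * 24
implied_rate = RENTAL_COST_USD / gpu_hours
print(f"{gpu_hours:,} GPU-hours, about ${implied_rate:.2f} per GPU-hour")
# prints: 258,048 GPU-hours, about $2.07 per GPU-hour
```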

Even though MiniMax-M1 was trained quickly and cheaply, the result is comparable to the best open AI models out there (DeepSeek-R1-0528) and even proprietary AI models like Claude 4 Opus:

Experiments on standard benchmarks show that our models are comparable or superior to strong open-weight models such as the original DeepSeek-R1 and Qwen3-235B, with particular strengths in complex software engineering, tool utilization, and long-context tasks.

The MiniMax-M1 model is based on the MiniMax-Text-01 model and takes only text input; it lacks multi-modality. Although it is open source, the 456B parameter MiniMax-M1 is impractical to run locally on consumer devices. Instead, you can try it out at the MiniMax website.

Conclusion

Some lessons from these latest Gemini 2.5 and MiniMax-M1 releases and their technical reports:

AI labs continue to make AI models better without making them bigger. Improvements in RL training algorithms (CISPO) and model architectures (sparse MoE, hybrid lightning attention) are driving gains in AI model performance and training and inference efficiency.

Scaling AI reasoning with RL training is cheap. MiniMax-M1 RL training cost only $534,700 and took three weeks. With new RL training techniques that can scale RL training without external rewards or curated data, AI reasoning will scale massively and quickly in coming months. We will have really smart AI reasoning models.

China is the hub of open source AI model development. Now that Llama 4 has fallen behind (and Meta CEO Zuckerberg tries to buy his way back to top tier status), it’s been left to Chinese AI labs like Qwen, MiniMax, DeepSeek, and others to advance open source AI. The top open source AI models are now Chinese.

This poses significant risks to AI labs in the USA. Open source AI is a better way to advance AI than closed AI models, and the lead for proprietary AI models is shrinking. The USA risks falling behind China in AI if China leads on creating and sharing most AI model training and architecture innovations.
