Generative Agents and the Making of BabyAGI

Combining LLMs with Agent capabilities could be the path to AGI

“Generative agents wake up, cook breakfast, and head to work; artists paint, while authors write; they form opinions, notice each other, and initiate conversations; they remember and reflect on days past as they plan the next day.” - From “Generative Agents: Interactive Simulacra of Human Behavior”

Interacting Generative Agents

In This Year of AI, every day seems to bring a new ground-breaking announcement, result or AI tool. Today’s mind-blowing new result is “Generative Agents: Interactive Simulacra of Human Behavior”, which comes to us from Stanford and Google researchers.

They created a population of twenty-five generative agents in a simulated environment “reminiscent of The Sims”, then observed as these agents interacted with the environment and each other. The agents extended an LLM (ChatGPT) with memory and planning capabilities:

To enable generative agents, we describe an architecture that extends a large language model to store a complete record of the agent’s experiences using natural language, synthesize those memories over time into higher-level reflections, and retrieve them dynamically to plan behavior.

The results were stunning. They re-created aspects of believable human behavior in a community of AI agents in a sim-world. For example, from a single seed suggestion to one agent to throw a Valentine’s Day party, generative agents in the environment planned for it, shared invitations with others, and attended, with one agent even asking another agent on a date to the party.

Each agent is given a biography and role akin to an NPC (non-player character) in this simulated open world. Armed with LLM capabilities and memories, the agents can discuss and hold political positions, such as when the news “reporter” agent asks the John agent about the upcoming election:

John: My friends Yuriko, Tom and I have been talking about the upcoming election and discussing the candidate Sam Moore. We have all agreed to vote for him because we like his platform.

Where it gets most interesting is when the agents interact. These agents hold dialogs with each other, and in the process share information, get to know each other, build relationships and coordinate actions. In one case, an agent remembers something another agent told him and then asks about it in a follow-up conversation.

For example, Sam does not know Latoya Williams at the start. While taking a walk in Johnson Park, Sam runs into Latoya. They introduce themselves, and Latoya mentions that she is working on a photography project: “I’m here to take some photos for a project I’m working on.” In a later encounter, Sam shows that he remembers that conversation, asking “Hi, Latoya. How is your project going?”, and she replies “Hi, Sam. It’s going well!”

Games and sims have had NPCs before, but these generative agents have gone beyond the rigid, scripted NPC pattern to a more fluid, human-like AI agent. They are more like the AI in science fiction - Data in Star Trek, or the robot in the movie “I, Robot”. If GPT-4 shows “sparks of AGI” based on complex reasoning, this shows sparks of AGI in terms of human-like interaction.

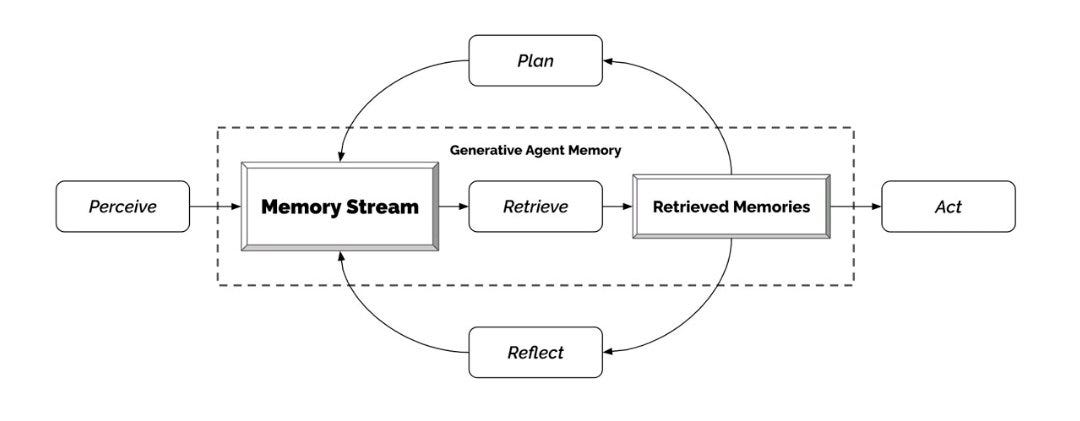

The Agent Formula: LLM + Memory + Planning + Reflection

How do you create these agents? The researchers’ overall architecture has three key elements: memory, reflection and planning.

Memory is the most important element to create agents that can behave in a consistent and coherent way; without it, they wouldn’t have an identity as an agent character. The generative agent, as it interacts with the environment and other agents, records its interactions as memories. Memories are later recalled (based on their relevance, importance and recency) in subsequent interactions.
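To make the retrieval idea concrete, here is a minimal sketch of ranking memories by relevance, importance and recency. Everything in it is an illustrative assumption rather than the researchers' code: the `Memory` class, the equal weighting of the three scores, the 0.99-per-hour decay, and the toy word-overlap relevance function, which stands in for whatever similarity measure a real system would use.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Memory:
    text: str
    importance: float    # 0..1, assumed to be assigned when the memory is stored
    created_hour: float  # simulation time, in hours


def relevance(query: str, text: str) -> float:
    """Toy relevance: word overlap (Jaccard). A stand-in for a real similarity measure."""
    q, t = set(query.lower().split()), set(text.lower().split())
    union = q | t
    return len(q & t) / len(union) if union else 0.0


def recency(memory: Memory, now_hour: float, decay: float = 0.99) -> float:
    """Exponential decay per hour since the memory was created (decay rate is assumed)."""
    return decay ** max(0.0, now_hour - memory.created_hour)


def retrieve(memories: List[Memory], query: str, now_hour: float, k: int = 2) -> List[Memory]:
    """Return the k memories with the highest combined score (equal weights assumed)."""
    def score(m: Memory) -> float:
        return relevance(query, m.text) + m.importance + recency(m, now_hour)
    return sorted(memories, key=score, reverse=True)[:k]


memories = [
    Memory("Latoya is working on a photography project", importance=0.6, created_hour=1),
    Memory("ate breakfast at home", importance=0.1, created_hour=9),
    Memory("Isabella is hosting a Valentine's Day party", importance=0.7, created_hour=5),
]
top = retrieve(memories, "Latoya project", now_hour=10)
for m in top:
    print(m.text)
```

In this toy run, the older but relevant memory about Latoya's project outranks the more recent breakfast memory, which is the kind of behavior that lets Sam ask about the project later.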

Reflection is another type of memory, created by prompting the language model to pose questions about the agent’s daily experiences and then asking it to answer those questions. It’s a way of bootstrapping further chain-of-thought reasoning about the world.
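As a rough sketch of that two-step pattern (ask for a question, then ask for an answer, then store the answer as a new memory), consider the following. The `reflect` function, the prompt wording and the `fake_llm` stub are all hypothetical; a real agent would call an actual language model where the stub is used.

```python
from typing import Callable, List


def reflect(memories: List[str], llm: Callable[[str], str], recent: int = 5) -> str:
    """Ask the model for a question about recent memories, answer it, store the answer."""
    window = memories[-recent:]
    listing = "\n".join(f"- {m}" for m in window)
    # Step 1: have the model pose a question about the recent experiences.
    question = llm(
        f"Recent experiences:\n{listing}\n"
        "What is one high-level thing worth asking about them?"
    )
    # Step 2: ask that question back, grounded in the same experiences.
    insight = llm(f"Recent experiences:\n{listing}\nQuestion: {question}\nAnswer briefly.")
    # The reflection is stored alongside ordinary memories so it can be recalled later.
    memories.append(f"[reflection] {insight}")
    return insight


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a language model call; returns canned text."""
    if "Question:" in prompt:
        return "Sam is getting to know Latoya."
    return "Who is Sam building a relationship with?"


memories = [
    "Sam met Latoya in Johnson Park",
    "Latoya said she is working on a photography project",
]
print(reflect(memories, fake_llm))
```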

Plans and reactions are generated by the agents at various points in time. A daily plan is made at the beginning of each day to direct the agent’s actions; then, after each observation during the day, the agent is prompted to either continue the plan or react. For example, the agent might decide to start a dialog upon seeing someone they know.
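The plan-then-react cycle can be sketched in a few lines. This is a simplified illustration under stated assumptions: `run_day` and `decide` are hypothetical names, the plan is a flat list of steps, and the rule-based `decide` function stands in for the language model prompt that chooses between continuing and reacting.

```python
from typing import Callable, List, Optional


def run_day(
    plan: List[str],
    observations: List[str],
    decide: Callable[[str, str], Optional[str]],
) -> List[str]:
    """For each planned step, check the current observation and either continue or react."""
    actions = []
    for step, observation in zip(plan, observations):
        reaction = decide(step, observation)  # None means "continue with the plan"
        actions.append(step if reaction is None else reaction)
    return actions


def decide(step: str, observation: str) -> Optional[str]:
    """Toy stand-in for the model: react only when a known person is observed."""
    if "Latoya" in observation:
        return "start a conversation with Latoya"
    return None


plan = ["eat breakfast", "walk in the park", "paint"]
observations = ["kitchen is empty", "Latoya is in the park", "studio is quiet"]
actions = run_day(plan, observations, decide)
print(actions)
```

Here the agent follows its plan for breakfast and painting but swaps the park walk for a conversation, mirroring the "start a dialog upon seeing someone they know" example.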

The emergent behavior from this was quite powerful. From a seed suggestion, Isabella started the day with a plan to host a Valentine's Day party. She made plans and began inviting people; others remembered the invitation, shared it with still others, and planned and acted to show up. By the end of the simulation, 12 characters knew about the party.

The bottom-line lesson is that LLM-powered agents equipped with memory and planning need minimal scripting to produce rather complex human-like interactions. This is useful far beyond simulations: it points toward agents that could serve as our own assistants in the work we do, remembering and attentive to our actions and activities.

BabyAGI

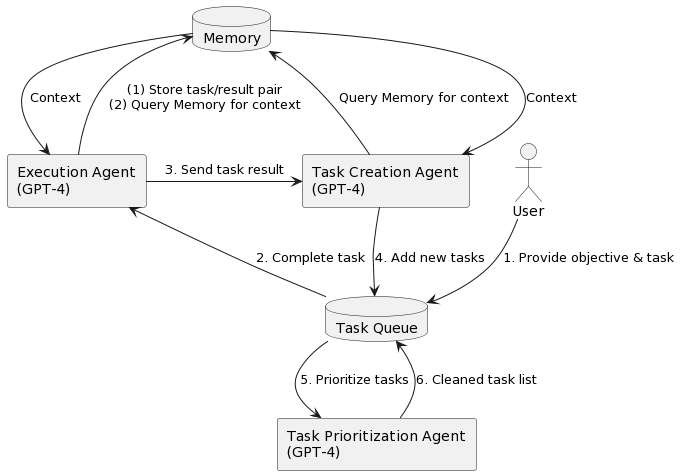

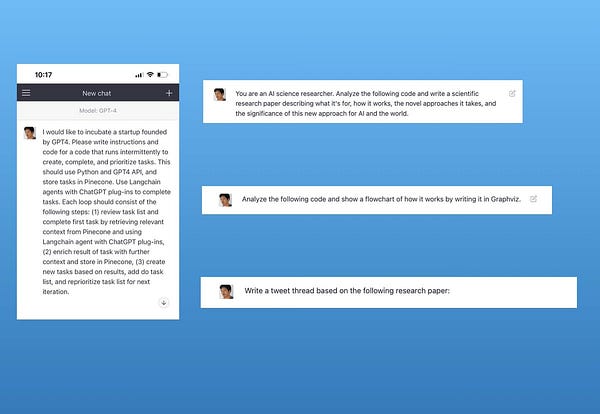

In late March, using just GPT-4 prompts, Yohei - a self-described non-programmer - had GPT-4 create code for a simple recursive agent system, then wrote a paper just from the code, then generated graphs in the paper from the code.

This toy example gives us a glimpse of the incredible power of bootstrapping tasks with GPT-4 and using GPT-4 to create a GPT-4-based agent.

Here’s the architecture of the system.

Link to whole Twitter thread. This has since been built out further using LangChain and named BabyAGI. It is described as: “An AI agent that can generate and pretend to execute tasks based on a given objective.”

Critics have noted flaws in the BabyAGI system: It can get stuck in infinite loops (be careful about using up OpenAI credits when that happens!). It pretends to execute tasks but doesn’t actually execute them. It has planning and prioritization of tasks but neither reflection nor world-view coherence. So it should be viewed as a primitive prototype.
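To illustrate the kind of task loop being described, and one simple guard against the runaway-loop problem, here is a minimal sketch. It is not BabyAGI's actual code: `run_agent` and its callable parameters are hypothetical, the stubs stand in for language model calls, and the `max_iterations` cap is just one assumed way to bound spending.

```python
from collections import deque
from typing import Callable, List, Tuple


def run_agent(
    objective: str,
    first_task: str,
    execute: Callable[[str, str], str],
    create_tasks: Callable[[str, str, str], List[str]],
    prioritize: Callable[[str, List[str]], List[str]],
    max_iterations: int = 5,
) -> List[Tuple[str, str]]:
    """Execute, create and reprioritize tasks, stopping after max_iterations at most."""
    tasks = deque([first_task])
    results = []
    for _ in range(max_iterations):  # hard cap: the loop cannot run forever
        if not tasks:
            break
        task = tasks.popleft()
        result = execute(objective, task)
        results.append((task, result))
        new_tasks = create_tasks(objective, task, result)
        tasks = deque(prioritize(objective, list(tasks) + new_tasks))
    return results


# Stubs standing in for model calls. This one always spawns a follow-up task,
# so without the cap the loop would never terminate.
results = run_agent(
    objective="demo",
    first_task="a",
    execute=lambda objective, task: f"done: {task}",
    create_tasks=lambda objective, task, result: [task + "+"],
    prioritize=lambda objective, tasks: sorted(tasks),
    max_iterations=3,
)
print(results)
```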

There are other efforts out there to create various types of bots and agents. Quora, at Poe.com, has introduced the ability to create bots. This is more of an LLM-customized chatbot than a full agent, but it is a step in that direction.

AutoGPT

Other entrants in the agent space are AutoGPT and AgentGPT. AutoGPT’s features are summarized by Lior @AlphaSignalAI in this thread: “AutoGPT might be the next big step in AI.”

Here's why Karpathy recently said "AutoGPT is the next frontier of prompt engineering"

AutoGPT is the equivalent of giving GPT-based models a memory and a body. You can now give a task to an AI agent and have it autonomously come up with a plan, execute on it, browse the web, and use new data to revise the strategy until the task is completed.

It can analyze the market and come up with a trading strategy, customer service, marketing, finance, or other tasks that require continuous updates. There are four components to it:

1. Architecture: It leverages GPT-4 and GPT-3.5 via API.

2. Autonomous Iterations: AutoGPT can refine its outputs by self-critical review, building on its previous work and integrating prompt history for more accurate results.

3. Memory Management: Integration with @pinecone allows for long-term memory storage, enabling context preservation and improved decision-making.

4. Multi-functionality: Capabilities include file manipulation, web browsing, and data retrieval, distinguishing AutoGPT from previous AI advancements by broadening its application scope.

The LLM-enabled Agent Future

We can envision how we might apply Generative Agents capabilities to making a more powerful and useful AI Assistant:

Give the AI assistant long-term memory: It can remember and recall every interaction with its owner/user and can be tuned to the user’s needs. It can be an extra memory assistant for the user, helping in remembering prior interactions.

Give the AI assistant planning capabilities: When given agent-level capabilities, it can be tasked to plan specific activities, like review the user’s calendar and emails, make low-level responses, review for importance and

Give the AI assistant access to plug-ins and tools: When asked to do a task, it can utilize those tools to complete the task. This may include requesting responses from other LLMs as subroutines.

Give the AI assistant ability to review and reflect: When given a task, it can review the output and then decide (or call on an outside LLM to judge) on the quality of the result. It can try again or improve the result if deemed not good enough.
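The review-and-reflect capability in the list above can be sketched as a bounded retry loop. The names here (`attempt_with_review`, `generate`, `judge`), the 0.8 quality threshold and the three-attempt limit are all illustrative assumptions; in practice `generate` and `judge` would each wrap a language model call.

```python
from typing import Callable, Optional, Tuple


def attempt_with_review(
    task: str,
    generate: Callable[[str, Optional[str]], str],
    judge: Callable[[str, str], Tuple[float, str]],
    threshold: float = 0.8,
    max_attempts: int = 3,
) -> Tuple[str, float, int]:
    """Generate, judge, and retry with feedback; return (best output, score, attempts used)."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    feedback: Optional[str] = None
    best_output, best_score, attempts = "", float("-inf"), 0
    for attempts in range(1, max_attempts + 1):
        output = generate(task, feedback)
        score, feedback = judge(task, output)  # feedback is fed into the next attempt
        if score > best_score:
            best_output, best_score = output, score
        if score >= threshold:
            break  # good enough, stop retrying
    return best_output, best_score, attempts


def generate(task: str, feedback: Optional[str]) -> str:
    """Toy generator: produces a revised draft once it has received feedback."""
    return "draft" if feedback is None else "draft, revised"


def judge(task: str, output: str) -> Tuple[float, str]:
    """Toy judge: only revised drafts pass the threshold."""
    return (0.9, "ok") if "revised" in output else (0.4, "add detail")


result = attempt_with_review("summarize my inbox", generate, judge)
print(result)
```

Keeping the best-scoring output, rather than the last one, means a worse retry never replaces a better earlier attempt.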

Developing the AI for robots working in a real environment is perhaps the hardest AI problem there is: It’s open-ended and requires hierarchical planning, reasoning, intelligence and world understanding in a multimodal, open-ended world. It will likely be the last domino to fall in creating what we’d consider true AGI.

The path to AGI will not be through LLMs alone, but through these more robust and complete AI architectures that combine LLMs with dynamic memory, planning, reflection and more. Generative Agents are pointing the way.