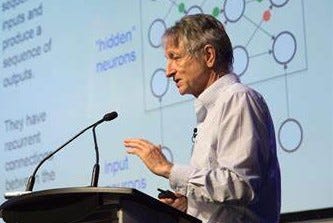

Geoff Hinton, Godfather of AI, Quits Google

The man who got AI this far doesn't want to go further

I was readying another article to post today, but this news hit:

‘Godfather of AI’ quits Google with regrets and fears about his life’s work

Geoff Hinton, a pioneer in artificial intelligence who had worked at Google since 2013, has resigned from Google, explaining that he wants to “freely speak out about the risks of AI.” Now is as good a time as any to review Geoff Hinton’s many contributions to AI and to ask what his quitting Google to warn about AI means.

A late-breaking update from Hinton himself, responding to NYT’s slant on why he left Google:

Hinton’s Contributions to AI

Prof Geoff Hinton was educated at King’s College, Cambridge, earned his PhD in artificial intelligence at the University of Edinburgh, and has had an academic career spanning more than three decades.

One of Hinton’s early contributions was the 1986 paper co-authored with David Rumelhart and Ronald Williams, “Learning representations by back-propagating errors.” Back-propagation “adjusts the weights of the connections in the network so as to minimize a measure of the difference between the actual output vector of the net and the desired output vector.” Repeated over the training data, this procedure updates a neural network’s weights to reduce its output errors, and it is what enables the ‘learning’ in neural-network machine learning. The paper has been cited thousands of times.
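To make the quoted description concrete, here is a minimal Python sketch of back-propagation. It is an illustration under stated assumptions, not code from the 1986 paper: it assumes a tiny network with one hidden layer of sigmoid units, a squared-error measure E = ½Σ(y − d)² of the difference between actual output y and desired output d, plain gradient descent, and the XOR problem as a toy dataset. The function names (`forward`, `loss`, `backprop`, `step`, `train`) and hyperparameters are hypothetical choices for illustration. `backprop` runs the network forward, computes an error signal at the output, and sends it backward through the weights with the chain rule to get each weight’s gradient; `step` then nudges every weight against its gradient.

```python
import math
import random

# Illustrative sketch only: the XOR data, network size and learning rate are
# assumptions for this example, not taken from the 1986 paper.
DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init(n_in, n_hidden, seed=0):
    """Small random weights, zero biases."""
    rng = random.Random(seed)
    W1 = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    W2 = [rng.uniform(-1.0, 1.0) for _ in range(n_hidden)]
    return W1, b1, W2, 0.0


def forward(params, x):
    """Forward pass: one hidden layer of sigmoid units, one sigmoid output unit."""
    W1, b1, W2, b2 = params
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    y = sigmoid(sum(w * hj for w, hj in zip(W2, h)) + b2)
    return h, y


def loss(params, data):
    """E = 1/2 * sum over training cases of (actual - desired)^2."""
    return 0.5 * sum((forward(params, x)[1] - d) ** 2 for x, d in data)


def backprop(params, data):
    """Gradient of E w.r.t. every weight, found by propagating errors backward."""
    W1, b1, W2, b2 = params
    gW1 = [[0.0] * len(row) for row in W1]
    gb1 = [0.0] * len(b1)
    gW2 = [0.0] * len(W2)
    gb2 = 0.0
    for x, d in data:
        h, y = forward(params, x)
        # Error signal at the output unit: dE/dz_out = (y - d) * sigmoid'(z_out).
        delta_out = (y - d) * y * (1.0 - y)
        gb2 += delta_out
        for j, hj in enumerate(h):
            gW2[j] += delta_out * hj
            # Send the error back through weight W2[j] to hidden unit j.
            delta_h = delta_out * W2[j] * hj * (1.0 - hj)
            gb1[j] += delta_h
            for i, xi in enumerate(x):
                gW1[j][i] += delta_h * xi
    return gW1, gb1, gW2, gb2


def step(params, grads, lr):
    """Gradient-descent update: move each weight against its gradient."""
    W1, b1, W2, b2 = params
    gW1, gb1, gW2, gb2 = grads
    W1 = [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(W1, gW1)]
    b1 = [b - lr * g for b, g in zip(b1, gb1)]
    W2 = [w - lr * g for w, g in zip(W2, gW2)]
    return W1, b1, W2, b2 - lr * gb2


def train(data, n_hidden=4, epochs=5000, lr=0.5, seed=0):
    """Repeat forward pass, backward pass and update; record E after each epoch."""
    params = init(len(data[0][0]), n_hidden, seed)
    history = [loss(params, data)]
    for _ in range(epochs):
        params = step(params, backprop(params, data), lr)
        history.append(loss(params, data))
    return params, history


if __name__ == "__main__":
    params, history = train(DATA)
    print(f"loss before: {history[0]:.4f}, after: {history[-1]:.4f}")
    for x, d in DATA:
        print(x, d, round(forward(params, x)[1], 3))
```

Running the script prints the loss before and after training and the network’s output for each input. A finite-difference check (nudging one weight up and down and comparing the change in E with the gradient from `backprop`) is a simple way to confirm the backward pass is correct. Whether this small network fully solves XOR depends on the random initialization and learning rate.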

Prof Hinton also co-developed some of the key ideas in deep learning and co-authored some of its most-cited papers, including these seminal ones:

1985: A learning algorithm for Boltzmann machines, with David Ackley and Terry Sejnowski.

2012: ImageNet classification with deep convolutional neural networks, with Alex Krizhevsky and Ilya Sutskever.

2013: Speech recognition with deep recurrent neural networks, with Alex Graves and Abdel-rahman Mohamed.

2014: Dropout: a simple way to prevent neural networks from overfitting, with Nitish Srivastava, Alex Krizhevsky, Ilya Sutskever and Ruslan Salakhutdinov.

The famous “AlexNet” paper, presented at NIPS 2012 (the conference now called NeurIPS), is perhaps the most important paper in the field of deep learning. Its result took the world by storm: a deep convolutional network trained on GPUs won the 2012 ImageNet challenge by a wide margin, showing a path to solving image classification and kicking off the drive to apply deep learning across many domains. That drive has led to today’s state-of-the-art foundation models built on deep learning.

In total, Hinton has authored or co-authored more than 200 papers on deep learning and artificial intelligence, with many more important contributions beyond those mentioned above.

What gives Geoff Hinton “Godfather” status, beyond his direct contributions, is the pedigree of the researchers who studied under him over the years. Early in his career, Yann LeCun worked as a postdoc in his group. Other notable AI researchers who were PhD students or postdocs in his lab include Peter Dayan, Sam Roweis, Max Welling, Richard Zemel, Brendan Frey, Radford M. Neal, Yee Whye Teh, Ruslan Salakhutdinov, Ilya Sutskever, Alex Graves, and Zoubin Ghahramani.

Geoff Hinton, together with Yann LeCun and Yoshua Bengio, won the 2018 ACM Turing Award. In announcing it, ACM stated:

Working independently and together, Hinton, LeCun and Bengio developed conceptual foundations for the field, identified surprising phenomena through experiments, and contributed engineering advances that demonstrated the practical advantages of deep neural networks. In recent years, deep learning methods have been responsible for astonishing breakthroughs in computer vision, speech recognition, natural language processing, and robotics—among other applications.

While the use of artificial neural networks as a tool to help computers recognize patterns and simulate human intelligence had been introduced in the 1980s, by the early 2000s, LeCun, Hinton and Bengio were among a small group who remained committed to this approach. Though their efforts to rekindle the AI community’s interest in neural networks were initially met with skepticism, their ideas recently resulted in major technological advances, and their methodology is now the dominant paradigm in the field.

It’s important to point out that deep neural networks were not considered a very promising approach to artificial intelligence for most of Hinton’s career. From the 1980s to the 2000s, Hinton pursued an approach that most saw as too hard and that had only been able to solve “toy problems.”

ACM notes that “Geoffrey Hinton, who has been advocating for a machine learning approach to artificial intelligence since the early 1980s, looked to how the human brain functions to suggest ways in which machine learning systems might be developed.” This was the original underlying belief in neural nets:

If you want to get computers to think like humans, then build a computing architecture that works like the human brain.

Sticking to that intuition with conviction, the AI pioneers developed the key ideas over those decades, even when computers could only train these nets on small or trivial tasks. Those decades of research bore fruit once GPUs became capable enough to train large deep neural nets: the AlexNet breakthrough showed that GPU-based deep learning could scale up and solve real problems. Thus, Hinton’s ideas planted the seeds of the deep learning that has changed AI and the world.

After the 2012 AlexNet breakthrough, Big Tech took notice of the promise of deep learning and began investing more in deep learning research. In 2013 Google acquired DNNresearch, the startup Hinton had formed with Alex Krizhevsky and Ilya Sutskever, and Hinton divided his time between Google and his position at the University of Toronto.

Hinton Hits the Pause Button

Today’s news reports quote Hinton as worried that the accelerating “AI race” may have bad consequences:

Hinton cautioned that generative AI could be used to flood the internet with large amounts of false photos, videos and text. He believes the phenomenon may lead to a situation where users will “not be able to know what is true anymore.” Hinton also expressed concerns about AI’s long-term impact on the job market.

“The idea that this stuff could actually get smarter than people — a few people believed that,” Hinton told the Times. “But most people thought it was way off. And I thought it was way off. I thought it was 30 to 50 years or even longer away. Obviously, I no longer think that.”

None of Hinton’s concerns is particularly novel; they mirror the concerns expressed in the “Pause letter” a little over a month ago. Yes, misinformation, deepfake images and deepfake video will be easier than ever to produce. Yes, AI will disrupt every industry and upend the job market, displacing many careers.

The fact that Geoff Hinton, the Godfather of AI, is telling us this should serve as a wake-up call.

It’s interesting that even Hinton is surprised by the pace of progress. He has toiled in the field for decades while AI plodded along for most of that time, so it has caught him by surprise that AI progress recently turned into a rocket ship and went supersonic. If AGI is nearer than even he thought, it is probably nearer than you think.

I’ve stated before that penny-per-insight mundane AI (say, at GPT-4 level) is enough to change the world. We don’t need AGI, or even anything beyond GPT-4-level AI, to replace people’s jobs or restructure many industries.

Hinton is leaving Google because Google is now determined to respond to the competitive threat that OpenAI and Microsoft created by launching ChatGPT.

Until last year, Hinton detailed, Google acted as a “proper steward” of its internally developed AI technology. Since then, the search giant and Microsoft Corp. have both introduced advanced chatbots powered by large language models. Hinton expressed concerns that the competition between the two companies in the AI market may prove “impossible to stop.”

He’s right about that. The Pandora’s box of AI chatbots has been opened. ChatGPT and Bing Chat are an innovation superior to the two-decades-old search engine paradigm that Google dominates, and they are here to stay. Google has to respond, but even if it doesn’t, the march of AI progress won’t be stopped.

Too many players have too much invested in AI technology progress to stop. As I’ve said, the Singularity is inevitable.

Geoff Hinton quitting Google reminds me of the Anthropic founders, who left OpenAI out of concern that it was moving too fast and without enough guardrails, and started their own company to focus on AI safety. But they too have joined the race, with their Claude AI offering and a pitch to investors about plans for a next-generation Claude 10x bigger than GPT-4.

Will Prof Hinton continue creating the future with AI research, while doubting his efforts’ ultimate impact on humanity?

I hope Hinton doesn’t doubt his positive contribution to the world. For all the risks and problems AI may bring, the positive and beneficial outcomes of artificial intelligence will outweigh them a hundredfold. I also hope that Hinton’s final contribution to AI will be making sure we get AI’s benefits while mitigating those risks.

The world is too much with us; late and soon,

Getting and spending, we lay waste our powers;

Little we see in Nature that is ours;

We have given our hearts away, a sordid boon!

- William Wordsworth