Google I/O '24: A Gaggle of AI Updates

Many updates: Gemini 1.5 Pro gets a 2M-token context, Gemini 1.5 Flash, PaliGemma, Gemma 2 27B, context caching, Gems, AI Overviews, NotebookLM audio, Project Astra, Imagen 3, Veo, Trillium.

Top Announcements at Google I/O

Google I/O was perhaps the antithesis of OpenAI’s event. Instead of a focused live demo of a single product, it was a sprawling multi-hour event that jumped around, sharing dozens of AI models, features and applications.

AI was so central to the event that CEO Sundar Pichai joked about how many times it had been mentioned: 120 times, oops, now it’s 121.

Let’s go through the top Google I/O AI announcements by category: Gemini and Gemma model updates; AI in Search; AI in apps and tools; AI agents and assistants; generative AI for images, music and video; and hardware.

Model Updates - Gemini and Gemma

Google announced updates to both Gemini and Gemma, with a focus on their key selling points: native multimodality and long context.

Gemini 1.5 Pro updates: “We made a series of quality improvements across key use cases, such as translation, coding, reasoning and more.” Its 1-million-token context window is now available to all users, and Google is expanding the Gemini context window to 2 million tokens in private preview: a 'full hour of video' in a single context window.

Gemini 1.5 Flash: This new Gemini model is lighter weight, low latency, and fully multimodal (video, audio, and text inputs), and it comes with a 1-million-token context window. It will be available via API in Google AI Studio and Vertex AI, and it’s cost-efficient, with an API price of only $0.35 per 1M tokens.
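To put that price in perspective, here is a back-of-the-envelope calculator. It's a sketch that assumes the quoted $0.35 per 1M tokens applies flat to every token; actual billing may distinguish input from output tokens and vary with prompt length. The function name `estimate_cost_usd` is my own.

```python
# Back-of-the-envelope API cost estimate for Gemini 1.5 Flash.
# Assumption: the $0.35 per 1M tokens quoted at I/O applies uniformly to
# every token; real pricing may differ by token type, prompt length, and time.
PRICE_PER_MILLION_TOKENS_USD = 0.35


def estimate_cost_usd(tokens: int, price_per_million: float = PRICE_PER_MILLION_TOKENS_USD) -> float:
    """Return the estimated cost in USD for a given number of tokens."""
    if tokens < 0:
        raise ValueError("token count must be non-negative")
    return tokens / 1_000_000 * price_per_million


if __name__ == "__main__":
    # One prompt that fills the entire 1M-token context window.
    print(f"1M tokens:  ${estimate_cost_usd(1_000_000):.2f}")
    # 10,000 requests of 2,000 tokens each (20M tokens in total).
    print(f"20M tokens: ${estimate_cost_usd(10_000 * 2_000):.2f}")
```

Even a prompt that fills the whole context window comes out to well under a dollar at that rate.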

PaliGemma: This is a 3B open Gemma model with vision. It’s available on Hugging Face as well as in the Vertex AI Model Garden.

Gemma 2: The Gemma 2 27B open model follows Google’s earlier 2B and 7B Gemma models and will come out in June. The 27B size is designed to fit on GPUs or a single Google TPU; a 4-bit quantized version could fit on my 24GB RTX 4090 GPU. Gemma 2 27B scores 75 on MMLU, close to Llama 3 70B.
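Here is the rough arithmetic behind the 4-bit claim, as a sketch: weight memory is the parameter count times bits per parameter, divided by 8 to get bytes. It assumes exactly 27 billion parameters and counts only the weights, so the KV cache, activations, and runtime overhead come on top.

```python
# Rough weight-memory estimate for a 27B-parameter model at several precisions.
# Assumption: only the weights are counted; KV cache, activations and framework
# overhead need extra memory, so treat these numbers as lower bounds.
PARAMS = 27e9       # Gemma 2 27B, taken as exactly 27 billion parameters
GPU_MEMORY_GB = 24  # e.g. an RTX 4090


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory needed for the weights alone, in decimal GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    for bits in (16, 8, 4):
        gb = weight_memory_gb(PARAMS, bits)
        verdict = "weights fit" if gb < GPU_MEMORY_GB else "weights do not fit"
        print(f"{bits:>2}-bit: {gb:5.1f} GB -> {verdict} in {GPU_MEMORY_GB} GB")
```

At 16-bit the weights alone need about 54 GB and at 8-bit about 27 GB, so only the 4-bit version (about 13.5 GB) leaves headroom on a 24GB card.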

The Gemini API's new context caching feature is designed to reduce the cost of requests that repeatedly send the same content with high input token counts. Context caching may become a substitute for RAG or fine-tuning in some cases, for example, when you are working on the same code base or repeatedly using the same technical documentation to answer queries.
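To see why caching matters, here is a toy cost model, not Google's billing formula. The `Pricing` class and both of its price fields are hypothetical placeholders, and the model ignores any fee for keeping a cache alive; it only shows the shape of the trade-off.

```python
# Toy cost model: "resend the whole document every time" vs. "cache it once".
# All prices are HYPOTHETICAL placeholders, not Google's actual rates, and
# any storage fee for keeping the cache alive is deliberately ignored.
from dataclasses import dataclass


@dataclass
class Pricing:
    input_per_million: float   # hypothetical price per 1M normal input tokens
    cached_per_million: float  # hypothetical discounted price per 1M cached tokens


def cost_without_cache(doc_tokens: int, query_tokens: int, n_queries: int, p: Pricing) -> float:
    # Every request re-sends the full document plus the new question.
    total_tokens = n_queries * (doc_tokens + query_tokens)
    return total_tokens / 1e6 * p.input_per_million


def cost_with_cache(doc_tokens: int, query_tokens: int, n_queries: int, p: Pricing) -> float:
    # Assumption: creating the cache bills the document once at the normal rate;
    # each later request pays the cached rate for the document and the normal
    # rate for its own question.
    create = doc_tokens / 1e6 * p.input_per_million
    per_query = (doc_tokens / 1e6 * p.cached_per_million
                 + query_tokens / 1e6 * p.input_per_million)
    return create + n_queries * per_query


if __name__ == "__main__":
    prices = Pricing(input_per_million=1.0, cached_per_million=0.25)
    for n in (1, 100):
        print(n, cost_without_cache(500_000, 500, n, prices),
              cost_with_cache(500_000, 500, n, prices))
```

With these made-up prices, 100 questions about a 500K-token document cost about $50.05 without a cache and $13.05 with one, while a single question is actually cheaper without caching. Caching pays off only when the same large context is reused, which is exactly the code base or documentation scenario described above.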

Gems: This new feature lets you customize Gemini for a specific use case by presetting system prompts and custom instructions for the interaction. They mentioned examples of “your peer reviewer, yoga bestie, chef, mentor.” This sounds like OpenAI's custom GPTs or Meta’s AI personas.
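Conceptually, a Gem is a saved system prompt that rides along with every conversation. The sketch below illustrates only that idea; the `Gem` class, the role/content message format, and the method names are hypothetical and not part of any Gemini API.

```python
# Sketch of the idea behind Gems: a saved persona whose instructions are
# prepended to every request. The Gem class and the role/content message
# format are hypothetical illustrations, not Gemini's actual API.
from dataclasses import dataclass, field


@dataclass
class Gem:
    name: str
    instructions: str
    history: list[dict[str, str]] = field(default_factory=list)

    def build_request(self, user_message: str) -> list[dict[str, str]]:
        """Return the message list a model would receive for this turn."""
        system = {"role": "system", "content": self.instructions}
        user = {"role": "user", "content": user_message}
        return [system, *self.history, user]

    def record_turn(self, user_message: str, model_reply: str) -> None:
        """Store a completed exchange so later turns keep the context."""
        self.history.append({"role": "user", "content": user_message})
        self.history.append({"role": "model", "content": model_reply})


if __name__ == "__main__":
    reviewer = Gem("Peer reviewer", "You are a constructive peer reviewer.")
    for message in reviewer.build_request("Review my abstract."):
        print(message)
```

Keeping the instructions separate from the conversation history means the persona can be edited without touching past turns.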

AI in Search

Google recognizes that AI is a "platform shift," to use their term, and they clearly want to make the search paradigm work with AI. Their pitch:

"With generative AI, search will do more for you than ever"

Google uses AI in Search as both a researcher and an orchestrator of information. These AI-enabled search features should help Google maintain its dominance in search, or at minimum help it compete with similar features from Perplexity.

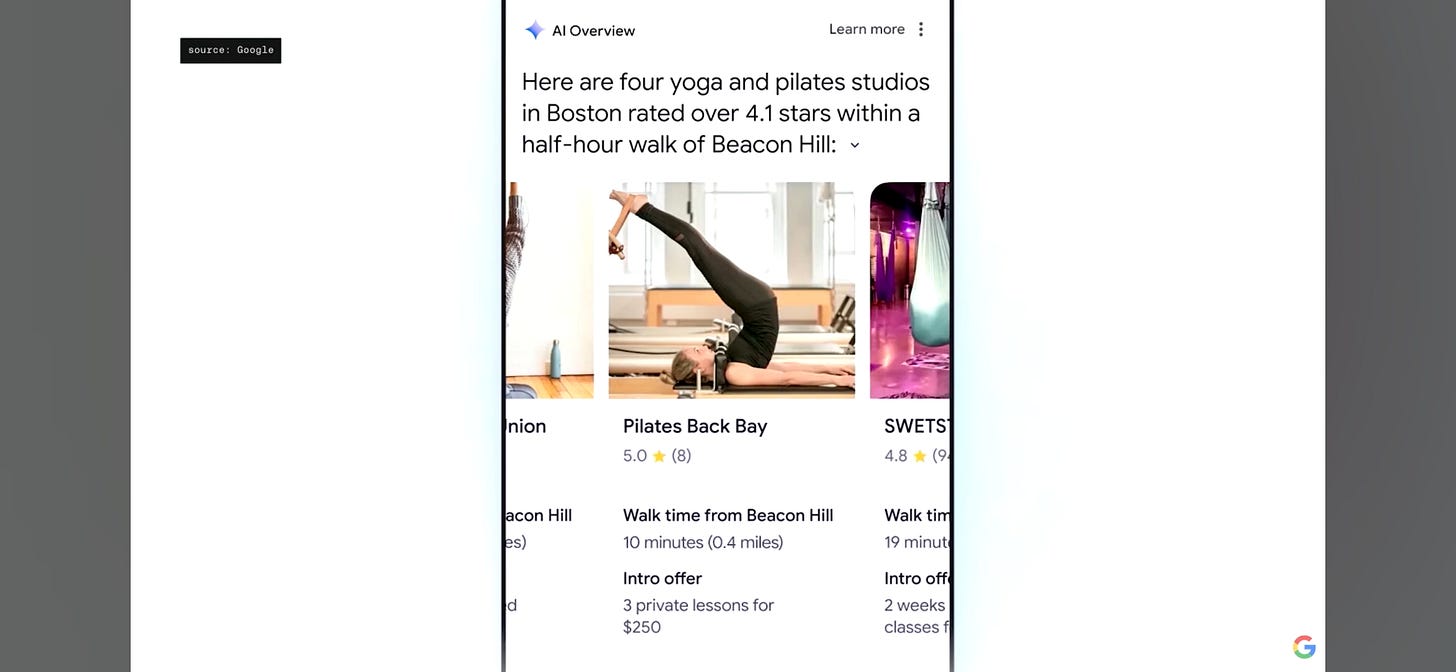

AI Overviews: Google Search has expanded AI Overviews and added advanced planning capabilities and AI-organized search results. Users can also now ask questions with video: AI in Search analyzes the visual content and provides a response in AI Overviews. This update is rolling out to US users now.

Search multi-step reasoning: This lets Gemini break down complex questions and speed up research. Gemini will also let Search execute steps through agents, such as planning, maintaining, and updating trip itineraries. As they put it, “Google will do the googling for you.”
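The plan-then-execute pattern behind multi-step reasoning can be sketched in a few lines. This is a toy: `toy_planner`, `toy_answerer`, and the lookup table are hypothetical stubs standing in for Gemini and a search backend, and nothing here reflects how Google Search is actually implemented.

```python
# Toy plan-then-execute loop: split a question into sub-questions, answer
# each one in order, and combine the findings. The stubs below stand in for
# a language model (planner) and a search backend (answerer).
from typing import Callable

Planner = Callable[[str], list[str]]
Answerer = Callable[[str], str]


def multi_step_answer(question: str, planner: Planner, answerer: Answerer) -> str:
    """Decompose a question, answer each step in order, and join the findings."""
    findings = []
    for i, step in enumerate(planner(question), start=1):
        findings.append(f"{i}. {step}: {answerer(step)}")
    return "\n".join(findings)


# Hypothetical planner: a real system would ask a model to decompose the query.
def toy_planner(question: str) -> list[str]:
    return [
        f"flights for: {question}",
        f"hotels for: {question}",
        f"day-by-day activities for: {question}",
    ]


# Hypothetical search index with made-up entries.
TOY_INDEX = {
    "flights for: 3 days in Tokyo": "2 nonstop options found",
    "hotels for: 3 days in Tokyo": "5 hotels near Shinjuku",
}


def toy_answerer(step: str) -> str:
    return TOY_INDEX.get(step, "no results yet")


if __name__ == "__main__":
    print(multi_step_answer("3 days in Tokyo", toy_planner, toy_answerer))
```

Passing the planner and answerer in as functions keeps the orchestration loop independent of whichever model or search service fills those roles.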

AI in Apps and Tools

Google is incorporating Gemini and AI features into virtually every one of its offerings, far beyond just search, including Android apps, Workspace, Photos, Gmail and more.

Ask Photos: To highlight AI features in Google Photos, CEO Sundar Pichai gave a demo of asking Google Photos "What's my license plate number?" and "When did Lucia learn to swim?", with the AI intelligently searching through your photos to find the answer.

NotebookLM: They showed off an impressive audio feature in NotebookLM, their research and writing tool, which uses Gemini to generate a spoken discussion of the topics in your documents. It’s like a speaking AI tutor, or like having a podcast host for your documents.

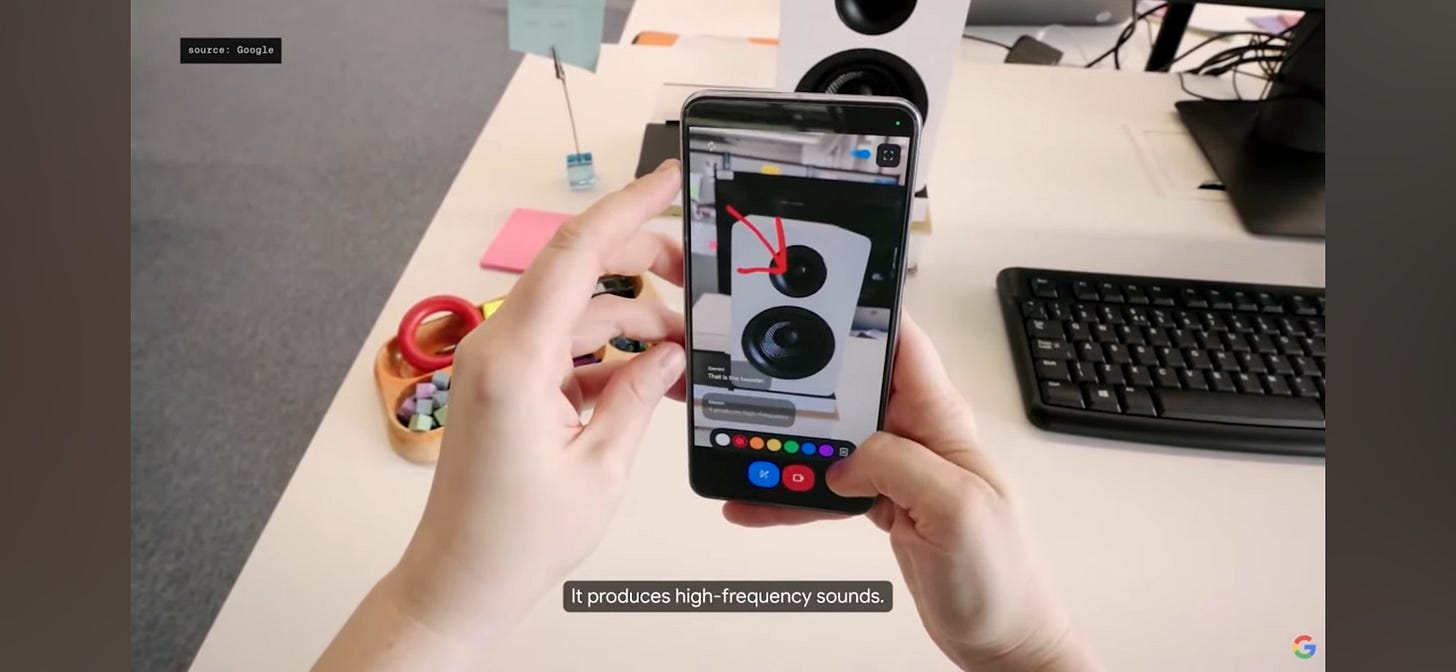

Ask with video: Multiple demos showed how you can ask a query from visual input, such as Circle to Search or a snapshot from a video. Multimodal AI is becoming a feature embedded across Google's applications.

AI Agents And Assistants

They brought out “Sir Demis” Hassabis to speak about Google DeepMind's work on AI agents and models. One important project they are working on is Project Astra.

Project Astra is Google’s approach to AI assistants. They want it to be proactive and teachable, and able to see and hear what you do, live and in real time. They spoke of the challenge of getting more natural responses, the same challenge GPT-4o has overcome (lower latency, the ability to interrupt, etc.). They shared a demo of a woman interacting with the AI through a video app on an iPhone, with the AI speaking in real time, recognizing code and everyday objects, and even remembering where she left her glasses.

Google’s Gemini mobile apps for iOS and Android will get video and speaking features from Project Astra later this year.

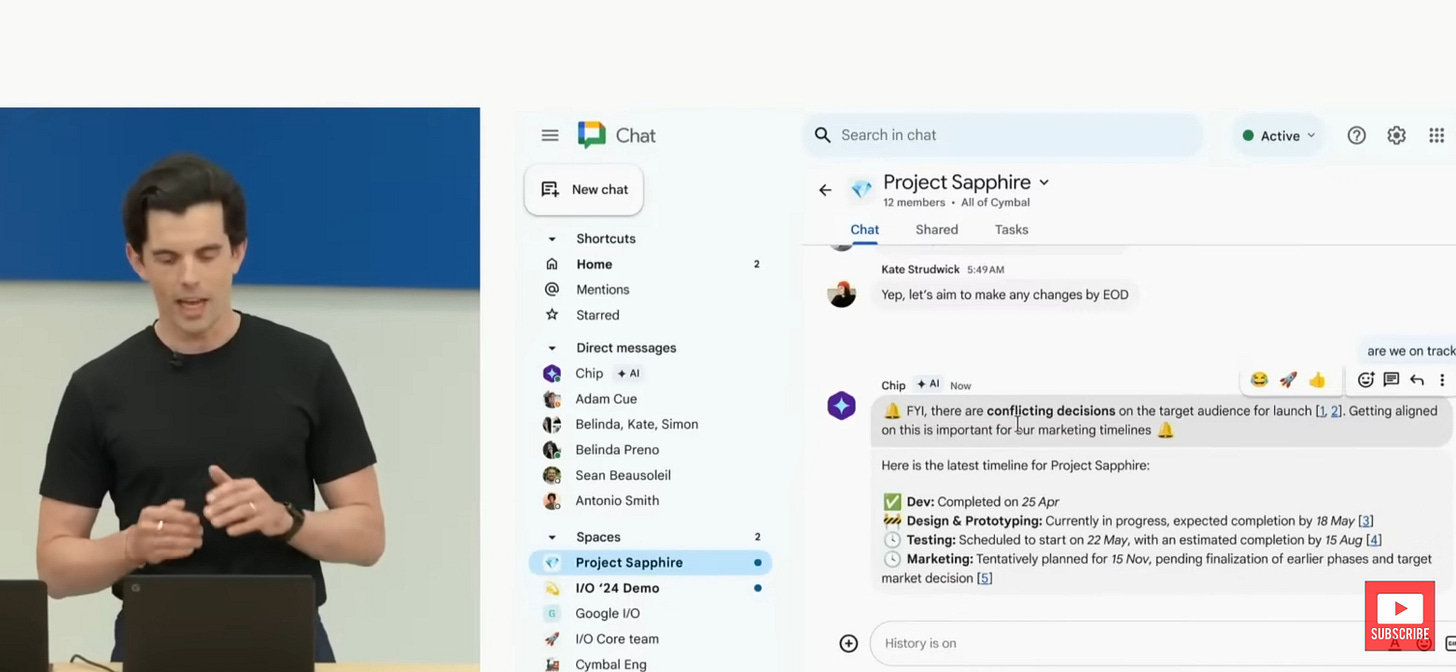

AI Teammates: AI agents that can answer questions about emails, meetings, and other data within Workspace. One example showed an agent continuously organizing every receipt that lands in your inbox into a spreadsheet. Of all the features, this seems the most likely to boost productivity. They literally showed how an AI agent named “Chip” could act as the team PM (program manager).
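A rule-based stand-in for the receipts workflow shows its basic shape: filter incoming mail, extract a field, append a row. The `Email` type, the "Total: $X" body format, and `receipts_to_csv` are hypothetical, and the demoed agent uses Gemini to read messages rather than a regular expression.

```python
# Rule-based stand-in for the "receipts to spreadsheet" demo: pick out
# receipt emails and write one CSV row per receipt. The Email type and the
# "Total: $X" body format are hypothetical assumptions for illustration.
import csv
import io
import re
from dataclasses import dataclass

TOTAL_RE = re.compile(r"Total:\s*\$(\d+(?:\.\d{2})?)")


@dataclass
class Email:
    sender: str
    subject: str
    body: str


def receipts_to_csv(emails: list[Email]) -> str:
    """Return CSV text with a header plus one row per email that looks like a receipt."""
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(["sender", "subject", "total_usd"])
    for email in emails:
        if "receipt" not in email.subject.lower():
            continue  # skip mail that is not a receipt
        match = TOTAL_RE.search(email.body)
        if match:
            writer.writerow([email.sender, email.subject, match.group(1)])
    return out.getvalue()


if __name__ == "__main__":
    inbox = [
        Email("shop@example.com", "Your receipt", "Thanks! Total: $42.50"),
        Email("friend@example.com", "Lunch?", "Total: $10.00 if we split it"),
    ]
    print(receipts_to_csv(inbox))
```

Swapping the regular expression for a model call is what would let a real agent cope with messy, varied receipt formats.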

Generative AI for Images, Music, and Video - Imagen3, Veo

This is an area where Google has been quietly making a lot of progress. Maybe “quietly” is the wrong term, since some of their loudest demos were around music generation.

Imagen 3: Imagen 3 is Google’s "most capable and photo-realistic" image generation model yet; it renders text more faithfully and understands prompts the way people write them. Imagen 3 seems to hold its own against Midjourney 6. It’s available now in ImageFX and coming soon to Vertex AI.

In generative AI for music, they showed off Music AI Sandbox, which can create new sections and styles, and showcased various musicians using AI in their music. MusicFX is available in Google’s AI Test Kitchen.

Veo: Google’s first major foray into video generation products, Veo is an AI model that generates 1080p videos from text, image, and video prompts. Sora is the benchmark these days, and Veo is not as good, but it is based on years of Google research in the field:

Veo builds upon years of our generative video model work, including Generative Query Network (GQN), DVD-GAN, Imagen-Video, Phenaki, WALT, VideoPoet and Lumiere — combining architecture, scaling laws and other novel techniques to improve quality and output resolution.

You can get on the waitlist for Veo / VideoFX at labs.google.

AI Hardware - Trillium

Google’s TPUs, an alternative to Nvidia GPUs, have been an important part of Google’s ML and AI stack for many years. This year, they announced their 6th-generation TPU, Trillium, with a 4.7x increase in peak compute per chip over TPU v5e.

Recap

I came away from this event somewhat confused. Google made dozens of announcements, a jumble of existing features with slight improvements, new product releases, groundbreaking tech coming soon, and vaporware demos with no firm release date. This mix made it harder to assess how much real progress Google is making.

For all the tech they shared, they could have been better at marketing it. One critic put it this way: “Google should feed their Google I/O video to their own AI and ask how to make their demo better.”

Several demos were really impressive, but the real quality and capabilities behind some of them are unclear. We will have to evaluate and benchmark these new releases to determine how good they really are.

Where’s the Beef, er, Ultra? I was disappointed to not hear anything about Gemini 1.5 Ultra (are they even working on it?). Project Astra is inspiring, but it’s just promises and demos until they launch. No announcement took us past GPT-4 in reasoning capabilities.

However, as I review the breadth and sheer number of new applications, models, and features announced, I feel a lot more positive. Google’s great contribution to AI has been its long-standing depth of research. Google has been slow and cautious, but it is now turning that research depth into AI products and features across its product line.

Google is firmly betting on AI and delivering. Gemini and Google’s AI capabilities across the board are good and keep getting better.