Google I/O 25 - Google Delivers!

Releases: Gemini 2.5 Deep Think, Gemini 2.5 Flash audio, Gemini Diffusion, Veo 3, Imagen 4, Flow filmmaking tool, Lyria 2, Agent Mode, Gemini Live, Project Jules, Google Beam, AI Mode in Search.

Google I/O Recap

Google delivered both fundamental and incremental AI updates across models, agents, and applications at Google I/O 2025. Sizzle came along with the steak, literally: Google’s Veo 3 video generation model generates sound along with video.

This year’s Google I/O had a much bigger emphasis on AI products you can use, not just AI models or demonstration projects. CEO Sundar Pichai headlined his keynote “from research to reality,” noting rapid AI model improvements and massive increases in adoption of Gemini models and apps:

What all this progress means is that we’re in a new phase of the AI platform shift. Where decades of research are now becoming reality for people, businesses, and communities all over the world.

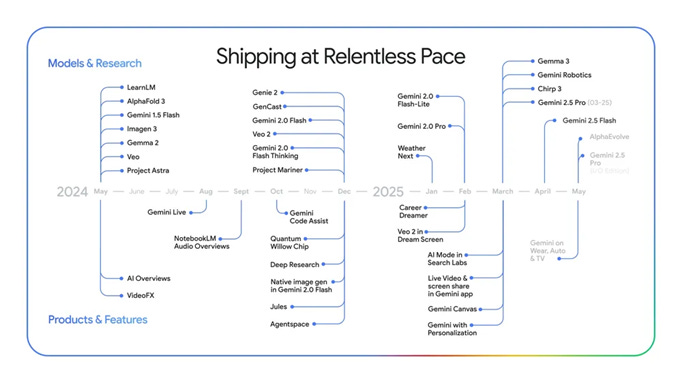

The TL;DR for Google I/O: Google’s momentum is continuing. Google is delivering competitive AI models and products across the board and is not slowing down. Gemini models are SOTA and are improving incrementally. Google is productizing its AI agent experiments. Gemini is going everywhere. AI usage is up, and so is the price of the top Google AI subscription, at $249 a month.

We will break it down below.

Gemini Model Updates

Gemini 2.5 Pro has reached the top of the Chatbot Arena leaderboard across all categories. It has had several updates since its initial version, with significant advances in coding and a significant increase in Elo scores, and the latest Gemini 2.5 Pro Preview 05-06 version holds the number one position on WebDev Arena.

Gemini 2.5 Pro is adding Deep Think, making Gemini 2.5 Pro reasoning more capable than ever. This new mode for Gemini 2.5 Pro maximizes model performance through advanced reasoning techniques. It will initially be accessible to trusted testers via the Gemini API.

Thinking Budgets have been added for Gemini 2.5 Pro, allowing users to control the number of tokens the model uses to think, bringing a feature previously launched with 2.5 Flash to the Pro model.

Thought summaries have been added to the Gemini API, organizing Gemini reasoning output into a clear format with headers and key details and providing increased transparency into model thinking.
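As a rough illustration of how a thinking budget and thought summaries might be requested together, the sketch below builds a JSON request body in Python without sending it. The field names `thinkingConfig`, `thinkingBudget`, and `includeThoughts` follow the Gemini API's REST naming as we understand it, and the helper `build_request` is hypothetical, so check the current API reference before relying on any of them.

```python
import json


def build_request(prompt: str, thinking_budget: int, include_thoughts: bool = True) -> dict:
    """Build a generateContent-style request body (field names are assumptions)."""
    return {
        "contents": [{"parts": [{"text": prompt}]}],
        "generationConfig": {
            "thinkingConfig": {
                # Cap on the number of tokens the model may spend thinking.
                "thinkingBudget": thinking_budget,
                # Ask for thought summaries alongside the final answer.
                "includeThoughts": include_thoughts,
            }
        },
    }


if __name__ == "__main__":
    body = build_request("Explain text diffusion in two sentences.", thinking_budget=1024)
    print(json.dumps(body, indent=2))
```

A smaller budget trades reasoning depth for lower latency and cost, while a larger one does the reverse. The value 1024 here is only a placeholder.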

Google added native audio output to Gemini 2.5 Flash, with text-to-speech support for expressive and nuanced conversation, including first-of-their-kind multi-speaker support for two voices as well as seamless language switching.

Google announced an upgraded Gemini 2.5 Flash model with improvements on coding and other benchmarks, making it even more compelling as a low-cost, high-performance model. The new Gemini 2.5 Flash achieves 82% on GPQA Diamond and 63.9% on LiveCodeBench. You can test Gemini 2.5 Flash Preview 05-20 and the native audio dialogue in Google AI Studio.

The new experimental text diffusion model, Gemini Diffusion, generates text five times faster than 2.0 Flash-Lite, at almost 1500 tokens per second, while maintaining comparable performance. Diffusion models are widely used in image generation, where they can be slow, so achieving such fast text generation is surprising.
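To put those speed figures in perspective, here is a small back-of-the-envelope calculation in Python. It assumes "five times faster" means five times the token throughput, which implies a baseline of about 300 tokens per second for 2.0 Flash-Lite. That baseline is derived from the two numbers above, not a measured figure, and the function name is ours.

```python
DIFFUSION_TPS = 1500.0   # "almost 1500 tokens per second" (from the text)
SPEEDUP = 5.0            # "five times faster" (from the text)

# Implied baseline throughput, assuming the speedup is a throughput ratio.
BASELINE_TPS = DIFFUSION_TPS / SPEEDUP


def seconds_to_generate(num_tokens: int, tokens_per_second: float) -> float:
    """Time to emit num_tokens at a steady rate, ignoring startup latency."""
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    for n in (500, 1500, 6000):
        fast = seconds_to_generate(n, DIFFUSION_TPS)
        slow = seconds_to_generate(n, BASELINE_TPS)
        print(f"{n} tokens: {fast:.2f}s at diffusion speed vs {slow:.2f}s at baseline")
```

Under these assumptions, a 1500-token answer takes about one second instead of five.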

Audio and Video Models

Google announced Veo 3, the latest version of its AI video generation model, and it is state-of-the-art. Veo 3 features enhanced visual quality, a stronger understanding of physics, and a significant advancement: native audio generation, including sound effects, background sounds, and dialogue.

Imagen 4, Google's latest AI image generation model, is capable of producing richer images with refined colors, intricate details, and improved text rendering, at up to 2K resolution. It is on par with GPT-4o image generation for rendering text faithfully. A high-speed option is also available.

Google also announced Flow, a new AI filmmaking tool for creatives that integrates Veo 3, Imagen 4, and Gemini. With a scene-builder interface and asset management, it enables users to generate video with consistent characters. MattVidPro reviewed Flow and Veo 3. He found the Flow interface to be buggy, but Veo 3 video generation is high-quality with good audio. Flow is available on Google Labs for Pro and Ultra subscribers.

Lyria 2 is Google’s latest music generation model, capable of generating high-fidelity music and professional-grade audio, including vocals and choirs.

Gemini Live

Gemini Live, formerly Project Astra's camera and screen sharing feature, is an AI assistant that interprets the user's surroundings through camera and screen sharing, allowing real-time conversation with AI about the user's environment. Gemini Live can read and interpret text and signs and identify objects that you share on camera or screen.

Gemini Live is being integrated with applications like Calendar, Maps, Keep, and Tasks, and it is part of the Gemini app on Android and iOS.

Project Mariner and Agent Mode in Gemini

The Project Mariner computer interaction capabilities, designed to interact with the web and perform virtual tasks for users autonomously, are being made available to developers via the Gemini API and integrated into the Gemini app. It now includes multitasking for up to 10 tasks at a time and a "teach and repeat" function.

Agent Mode in the Gemini app is an experimental feature that allows the app to operate in the background to find information and execute actions for the user, such as locating apartments and scheduling tours. It relies on Project Mariner and the Model Context Protocol (MCP).

For AI developers, Google enhanced its Agent Development Kit (ADK). Google is also updating and promoting its Agent2Agent (A2A) protocol. The Gemini SDK is now compatible with MCP tools.

Jules Coding Assistant

The Jules AI Code Assistant is now in public beta. The Jules asynchronous coding agent can manage complex tasks in large codebases autonomously. It allows users to submit coding tasks, and Jules handles the rest, including bug fixes and updates, with integration into platforms like GitHub.

Personalization

You can add your sources and files to Deep Research to guide the research agent.

Personalized Smart Replies in Gmail are AI-powered suggestions that can be tailored to mimic the user's style by leveraging personal context from prior emails and other Google applications. This feature will be available in Gmail for subscribers.

Google Beam and Real-time Translation in Google Meet

Project Starline has become Google Beam. Google Beam is a new AI-first video communications platform that converts 2D video into a realistic 3D experience using an array of six cameras and AI for merging and rendering.

Utilizing technology from Starline, Google Meet now provides real-time English and Spanish translation for subscribers, with plans to add more languages in future releases.

AI Mode in Search

AI Mode in Google Search offers a reimagined search experience with advanced reasoning, supporting longer, more complex queries and conversational follow-ups. Google announced several enhancements to the AI Mode experience:

Search Live integrates Project Astra's live capabilities into AI Mode.

Personal Context in AI Mode provides personalized suggestions based on past searches and connected Google apps like Gmail.

Deep Search in AI Mode uses a scaled-up query fan-out, issuing many related searches for a single question, to conduct extensive searches and generate expert-level, fully cited reports.

Complex Analysis and Data Visualization in AI Mode allows search to generate easy-to-read tables and graphs. This feature is coming this summer for sports and financial questions.

Agentic Capabilities in AI Mode integrates Project Mariner's computer use capabilities to take action on the user's behalf, for example, making restaurant reservations or service appointments.

Agentic Checkout in Google Search assists users in purchasing items found in search, including features like price tracking and automated checkout.

Visual Shopping in AI Mode provides visual inspiration from Google Images and product listings from the Shopping Graph.

Virtual Try-On in AI Mode allows users to virtually try on clothes using a custom image generation model trained for fashion; this is available in Labs beginning today.
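Of the features above, Deep Search's query fan-out is the easiest to picture in code: one question is expanded into several sub-queries, the sub-queries run concurrently, and the results are merged. The Python sketch below illustrates that general pattern only. It is not Google's implementation, and `expand_query`, `fake_search`, and the facet list are all hypothetical stand-ins.

```python
from concurrent.futures import ThreadPoolExecutor


def expand_query(question: str) -> list[str]:
    """Hypothetical fan-out step: derive several sub-queries from one question."""
    facets = ["overview", "pricing", "reviews", "alternatives"]
    return [f"{question} {facet}" for facet in facets]


def fake_search(query: str) -> list[str]:
    """Stand-in for a real search backend; returns made-up result labels."""
    return [f"{query} :: result {i}" for i in (1, 2)]


def fan_out_search(question: str, max_workers: int = 4) -> list[str]:
    """Run all sub-queries concurrently and merge results without duplicates."""
    sub_queries = expand_query(question)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() keeps results in sub-query order even though calls overlap.
        batches = list(pool.map(fake_search, sub_queries))

    merged: list[str] = []
    seen: set[str] = set()
    for batch in batches:
        for item in batch:
            if item not in seen:
                seen.add(item)
                merged.append(item)
    return merged


if __name__ == "__main__":
    for line in fan_out_search("best e-bike"):
        print(line)
```

A real system would presumably generate sub-queries with a model and rank the merged results, but the fan-out and merge steps would keep roughly this shape.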

Gemini Everywhere

Gemini in Chrome integrates Gemini into Chrome to assist users while navigating the web.

Beyond the desktop, Gemini is being integrated across various platforms, including Android 16, Wear OS 6, watches, car dashboards, and TVs, to provide a helpful AI assistant experience on the go. Android XR is a new Android platform designed for XR devices like headsets and glasses, optimized for different use cases.

Google Raises Pricing with Ultra

Google is introducing new AI subscription plans. Google AI Pro offers a full suite of AI products with higher rate limits, including the pro version of the Gemini app (what used to be Gemini Advanced).

Google AI Ultra is the pricey $249 a month premium plan with the highest rate limits and earliest access to new Google AI features and products. Only Ultra plan users get immediate access to the Flow video-making tool with Veo 3 and to Gemini 2.5 Pro Deep Think.

CEO Sundar Pichai touted the massive increase in Google AI model use in the past year: Monthly tokens processed grew from 9.7 T (trillion) tokens in April 2024 to 480 T tokens in April 2025, roughly a 50-fold increase. The Gemini app has over 400 million monthly active users. This surge aligns with our observations in “The Era of AI Adoption.”
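The growth figure is easy to check. The short Python sketch below recomputes the fold increase from the two token counts and, as a simplifying assumption of ours, converts it into an equivalent steady month-over-month growth rate across the 12 months. Actual growth was almost certainly not that smooth.

```python
START_TOKENS_T = 9.7    # trillions of tokens per month, April 2024 (from the text)
END_TOKENS_T = 480.0    # trillions of tokens per month, April 2025 (from the text)
MONTHS = 12             # April 2024 to April 2025


def fold_increase(start: float, end: float) -> float:
    """How many times larger the end value is than the start value."""
    return end / start


def implied_monthly_growth(start: float, end: float, months: int) -> float:
    """Constant monthly growth rate that would compound from start to end."""
    return (end / start) ** (1 / months) - 1


if __name__ == "__main__":
    fold = fold_increase(START_TOKENS_T, END_TOKENS_T)
    rate = implied_monthly_growth(START_TOKENS_T, END_TOKENS_T, MONTHS)
    print(f"Fold increase: {fold:.1f}x")
    print(f"Implied steady monthly growth: {rate:.1%}")
```

The ratio comes out just under 50, and the equivalent compounding rate is somewhere between 38% and 39% per month.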

Given that, will people sign up for Ultra? Some will. Power AI users are willing to pay up for extra usage or access to the best AI models.

The Future is … no, the Present is Agentic

There are a lot of new AI tools and features to try out from Google. So much was announced at Google I/O that we have skipped details and left important news out, like Gemini Robotics, Gemma 3n that can run on phones, and much more.

What’s most important is Google’s foundation AI models. The core of Google’s AI offerings is its Gemini models, and Google’s steady updates are keeping the Gemini 2.5 models state-of-the-art.

Google is using the strength of its Gemini models to deliver AI agents and agentic AI features, and most of the new announcements were in this category: Agent Mode, Project Jules, Project Mariner, Gemini Live, and the AI Mode features in Search.

Delivering these agentic AI products and features is a clear part of Google’s vision, as expressed by Demis Hassabis:

We’re extending Gemini to become a world model that can make plans and imagine new experiences by simulating aspects of the world. …

Our ultimate vision is to transform the Gemini app into a universal AI assistant that will perform everyday tasks for us, take care of our mundane admin and surface delightful new recommendations — making us more productive and enriching our lives. – Demis Hassabis

We have been expecting “a flood of new AI agents” in 2025, so Google releasing AI agents and agentic AI features is not a surprise. What is surprising is the speed and volume of Google’s releases, with fast iteration cycles that mark a significant speedup from its prior record. Well played, Google.