Grok 3 is a Colossus

X.AI releases Grok-3 with a live demo, showing off SOTA math benchmarks using reasoning, coding abilities, and DeepSearch.

X.AI and Elon Demo the New Grok 3

“Grok-3 … smartest AI on Earth” – Elon Musk

Elon Musk and the team at X.AI announced the release of Grok 3, their latest and most advanced AI model, with a casual late-night dark-mode demo on February 17th.

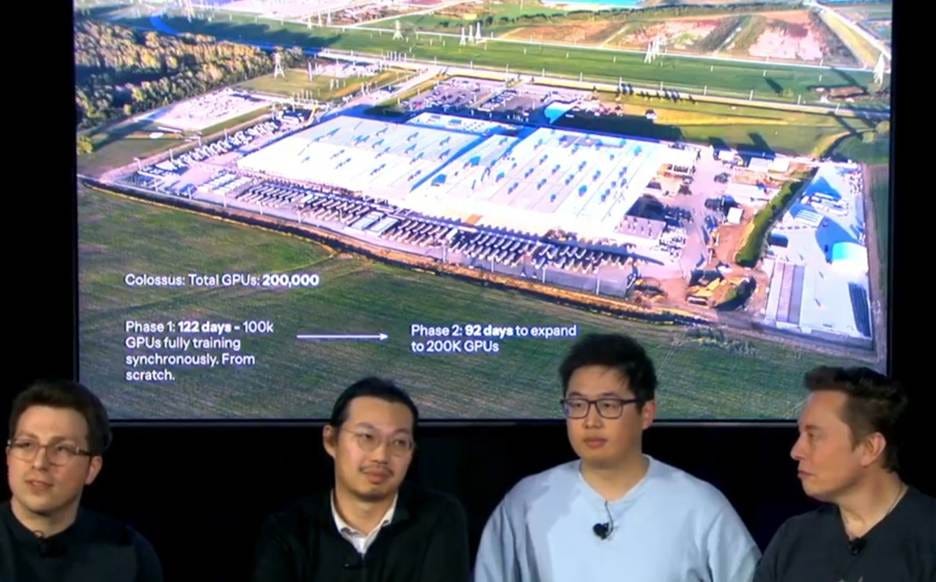

The quartet hyped the power of Grok-3, showed off its very impressive benchmarks, demonstrated code it could generate – a mission-to-Mars simulation and some creative games – and discussed the achievement of building Colossus, the massive compute cluster of 200,000 GPUs used to train Grok-3.

Grok-3 Features and Benchmarks

Grok-3 is a powerful SOTA frontier AI model that ranks number one on the LMSYS Chatbot Arena, with an Elo score of 1400, and scores impressively on pretraining and reasoning evals.
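To put an Elo score in context: Elo-style ratings turn a rating gap into an expected head-to-head win rate. The sketch below uses the textbook Elo formula with a 400-point scale, which is an assumption here; Chatbot Arena's actual rating fit may differ, and the opponent ratings are hypothetical, chosen only for illustration.

```python
def elo_expected_score(rating_a: float, rating_b: float, scale: float = 400.0) -> float:
    """Expected score of A against B under the textbook Elo formula.

    A score of 1.0 is a win, 0.0 a loss, and ties count as 0.5.
    """
    return 1.0 / (1.0 + 10.0 ** ((rating_b - rating_a) / scale))


# Hypothetical opponents rated 0, 50, and 100 points below a 1400-rated model.
for opponent in (1400, 1350, 1300):
    p = elo_expected_score(1400, opponent)
    print(f"1400 vs {opponent}: expected score {p:.2f}")
```

Under these assumptions, a 100-point lead works out to roughly a 64% expected win rate, so the small gaps at the top of a leaderboard correspond to preferences that are close to a coin flip.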

Grok-3 was trained as a base LLM, comparable to GPT-4o. The team then further trained it to reason with test-time compute, creating Grok-3 Reasoning (beta).

From a user perspective, there will be Grok-3, and then a Grok-3 “Thinking” mode that invokes the reasoning model. They shared benchmarks on both.

In addition to taking the number one position on Chatbot Arena with an Elo of 1400, Grok 3 (without thinking) comes out ahead of Gemini-2 Pro, GPT-4o, and Claude 3.5 Sonnet on the AIME, Science GPQA, and coding benchmarks.

Grok 3 Reasoning (beta) was trained for better reasoning on math and coding problems. Like other reasoning models, it can review its own output and correct mistakes to achieve logical consistency. It shows superior results on logical reasoning, math, and coding, matching or exceeding o3-mini and DeepSeek-R1 on the benchmarks presented: AIME 2024, GPQA, and LiveCodeBench (LCB).

It’s incredible that the highly challenging AIME 2024 math benchmark is near saturation, while GPQA results are now above expert human-level performance. Yet the Grok-3 reasoning training is still ongoing, so more improvements are expected.

Since benchmarks can be cherry-picked and I don’t have a Premium Plus subscription to try Grok 3 myself, I did a “vibe check” on X and on YouTube channels:

Grok-3 without reasoning will fail on simple queries like “Is 9.8 < 9.11?” but with the reasoning mode, Grok-3 will reason correctly on many logic questions.

Feedback from the bouncing-ball-in-a-hexagon coding test is that it’s not that good at coding.

The All About AI channel on YouTube found good results on coding and logic from the base Grok 3, not even using reasoning.

Andrej Karpathy gave Grok 3 Thinking mode a try for reasoning and found:

The overall impression I got here is that this is somewhere around o1-pro capability, and ahead of DeepSeek-R1, though of course we need actual, real evaluations to look at.

Grok-3 also has a DeepSearch feature, similar to Deep Research on Perplexity. Karpathy tried it as well:

The impression I get of DeepSearch is that it's approximately around Perplexity DeepResearch offering (which is great!), but not yet at the level of OpenAI's recently released "Deep Research", which still feels more thorough and reliable.

The overall reactions are a mix of hype, solid results and some fails, but the balance of impressions is that Grok 3 is a SOTA AI model, on par if not better than the best reasoning models out there.

Building the Grok 3 Beast with Colossus

Grok 3 was trained on the massive data-center computing cluster called Colossus, which started with 100,000 H100s and was then expanded to 200,000 GPUs. It’s incredible to consider that as recently as mid-2024, the data center used to train this model didn’t exist.

Starting last summer, they built the Colossus data center in Memphis, Tennessee. X.AI built the data center themselves because the cloud providers quoted lead times of 18 months or more. They first deployed 100,000 H100s in 122 days, then immediately turned around and doubled the data center’s size in another 92 days. The result is a 0.25 GW cluster.
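As a quick sanity check on those numbers, here is a back-of-the-envelope sketch of the power budget per GPU and the deployment pace. It uses only the figures quoted above, plus the assumption that the doubling added another 100,000 GPUs; the function names are made up for illustration.

```python
# Figures quoted in the announcement.
TOTAL_GPUS = 200_000
SITE_POWER_WATTS = 0.25e9                   # 0.25 GW
PHASE_1_GPUS, PHASE_1_DAYS = 100_000, 122
PHASE_2_GPUS, PHASE_2_DAYS = 100_000, 92    # assumption: the doubling added 100,000 GPUs


def watts_per_gpu(site_power_watts: float, gpus: int) -> float:
    """All-in site power divided by GPU count (covers hosts, networking, cooling too)."""
    return site_power_watts / gpus


def gpus_per_day(gpus: int, days: int) -> float:
    """Average number of GPUs brought online per day during a build phase."""
    return gpus / days


print(f"Power per GPU (all-in): {watts_per_gpu(SITE_POWER_WATTS, TOTAL_GPUS):,.0f} W")
print(f"Phase 1 pace: {gpus_per_day(PHASE_1_GPUS, PHASE_1_DAYS):,.0f} GPUs/day")
print(f"Phase 2 pace: {gpus_per_day(PHASE_2_GPUS, PHASE_2_DAYS):,.0f} GPUs/day")
print(f"Total build time: {PHASE_1_DAYS + PHASE_2_DAYS} days")
```

That comes to about 1.25 kW per GPU all-in. This looks plausible given that an H100 SXM is rated at up to roughly 700 W before counting host servers, networking, and cooling (the 700 W figure is an outside number from NVIDIA's published specs, not from the demo).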

Deploying this cluster and then using it to train an AI model required overcoming many challenges. They used an existing building in Memphis to get set up more quickly. They used mobile cooling and mobile generators until utility power and built-in cooling were installed. Power fluctuations for a GPU cluster are dramatic during AI model training, so they used Tesla Megapacks to smooth them out.

Beyond the building and hardware challenges was the challenge of coordinating 100,000 GPUs for training. As they put it:

“We had to get every single detail of the training right. You have to be good at the details …”

They trained Grok 3 for 200 million GPU-hours, about 15 times more compute than was used to train Grok 2. While pre-training finished in early January, training on reasoning and features continues.

Access to Grok 3

Grok-3 and all of its features are released to Premium Plus subscribers on X. There will also be a separate subscription called SuperGrok for early and extensive access to Grok models and features. At least for now, Premium (non-plus) X subscribers will not get access to Grok 3, nor is there any free-tier access to Grok 3.

They are also hosting their latest models in a chatbot interface on the new website grok.com, and a Grok app is in the iOS App Store. For developers, the Grok 3 API will be delivered in the coming weeks.

What’s Missing – Model details, Voice, and Multimodality

Model size and training details: The announcement left out many details, such as the number of tokens used for training and the number of parameters in the model. Proprietary AI model builders are leaving that obscure, but other AI labs produce a thorough technical report or model card to establish their models’ real capabilities.

Voice Interaction: They do not have a voice-mode model yet, but they are developing a single model (variant of Grok 3) that will have voice capabilities and will release it soon.

Multimodality: Also missing from the demos was any presentation of visual understanding or related multi-modal features, similar to what you can get in, say, Gemini 2.0.

Open source: Grok 3 is not open source, which undermines Elon Musk’s complaints about OpenAI not being open; he’s just another commercial AI model competitor. However, they will open-source last year’s Grok 2 when Grok 3 is fully released, and will open-source previous generation models as a general rule:

“We will open source the last version when the next version is full out.”

Final Thoughts - Grok 3 Busts a Moat

They scaled compute massively: The Grok 3 pre-training required 200 million H100 GPU-hours, achieved by running a 100,000-GPU supercomputing cluster round the clock for 80 days. Converted into total floating-point operations, that equates to as much as 2.85 x 10^27 FLOPs, almost two orders of magnitude more than was used to train GPT-4.

This is likely the largest pre-training run (in terms of compute used) yet conducted.
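The arithmetic behind that compute figure can be sketched as follows. The GPU-hours, cluster size, and run length come from the numbers above; the per-GPU peak throughput values are assumptions taken from NVIDIA's published H100 SXM specs, not from the announcement, and real training runs achieve only a fraction of peak.

```python
SECONDS_PER_HOUR = 3600

# Assumed H100 SXM peak Tensor Core throughput in FLOP/s (NVIDIA spec-sheet values,
# not stated in the announcement). Sustained training throughput is well below peak.
ASSUMED_H100_PEAK_FLOPS = {
    "BF16 dense": 989e12,
    "FP8 dense": 1979e12,
    "FP8 with sparsity": 3958e12,
}


def gpu_hours_from_cluster(gpus: int, days: int) -> int:
    """GPU-hours accumulated by running `gpus` GPUs around the clock for `days` days."""
    return gpus * days * 24


def total_flops(gpu_hours: float, peak_flops_per_gpu: float) -> float:
    """Upper bound on total floating-point operations, assuming 100% of peak."""
    return gpu_hours * SECONDS_PER_HOUR * peak_flops_per_gpu


# 100,000 GPUs for 80 days is close to the quoted 200 million GPU-hours.
print(f"GPU-hours for 100,000 GPUs x 80 days: {gpu_hours_from_cluster(100_000, 80):,}")

for mode, peak in ASSUMED_H100_PEAK_FLOPS.items():
    print(f"{mode:>18}: {total_flops(200e6, peak):.2e} FLOPs at peak")
```

Under these assumptions, the 2.85 x 10^27 figure corresponds to the most optimistic case (FP8 with sparsity at 100% utilization), which fits the "as much as" wording; the dense BF16 peak gives a figure about four times lower.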

There is no moat: The xAI team went from practically nothing in early 2023 to releasing a SOTA AI model, and it moved extremely quickly. This accomplishment shows that a cracked team of good AI researchers and a massive amount of compute (which costs billions to set up and consumes gigawatt-hours of power) is all you need.

Pretraining diminishing returns: While Grok 3 is impressive, it was the Grok 3 Reasoning model results they touted most in their demo; the Grok 3 base model itself doesn’t compete with reasoning models. Combining this fact with the massive compute used on pre-training shows that pre-training is suffering from diminishing returns, while RL for reasoning delivers better ROI, at least for now.

While Grok 3 is a great SOTA AI model, it’s not unique in its capabilities. As with everything AI, wait a few weeks, and a newer, better model will show up.