Meta’s Vision and OpenAI’s Voice

Google updates Gemini 1.5 Flash and Pro; OpenAI rolls out ChatGPT Advanced Voice Mode; Meta releases Llama 3.2 vision and small LLMs, teases Orion AR smart glasses, and offers their own voice mode.

Three Big Releases

September has been a busy month for new AI releases, and the past few days have been true to form:

OpenAI rolled out Advanced Voice Mode.

Google released updated Gemini models Gemini-1.5-Pro-002 and Gemini-1.5-Flash-002.

Meta held their Connect conference, unleashing Llama 3.2 AI models, Orion AI AR glasses, Meta AI voice mode and other AI enhancements.

Let’s dive in.

OpenAI Voice Mode Is Now Available

OpenAI has finally rolled out Advanced Voice Mode to ChatGPT Plus and Team users, offering more natural “Her”-like conversations with features like interruptions and new voices. They also added Custom Instructions. But the rollout is for the US, not the EU. On X, a Community Note reads:

OpenAI clarifies in the same thread that this release will not include "the EU, the UK, Switzerland, Iceland, Norway, and Liechtenstein."

As VentureBeat notes in its article “OpenAI finally brings humanlike ChatGPT Advanced Voice Mode to U.S. Plus, Team users,” OpenAI is no longer alone in having an AI voice interface. There is also Gemini Live and Kyutai’s Moshi, and Meta announced Meta AI voice mode this week.

OpenAI faced controversy (and a threat of legal action) over its “Her”-like voice, and it was so slow to roll out Advanced Voice Mode, over concerns about misuse, that the novelty has now worn off. Now that it is finally available, some are disappointed that the release doesn’t live up to the original demos:

This is not the advanced AI voice you showed us. It cannot access files, browse the web, or use the device's camera or screen. It only speaks faster and has quicker responses, but it cannot maintain long conversations due to time limit constraints. Therefore, if you want it to ask you questions, it will repeat the same questions as before. Is the full version (like the one in the OpenAI videos showcase) coming out?

Another reply to OpenAI on X adds that “this isn't the model we were shown, it's no better than Google's.” Others report more positive experiences, such as using ChatGPT Advanced Voice to improve a sales pitch.

Google Updates Gemini 1.5 – Faster, Cheaper, Better

Google released updated Gemini models Gemini-1.5-Pro-002 and Gemini-1.5-Flash-002, with improved performance, lower costs, and increased rate limits.

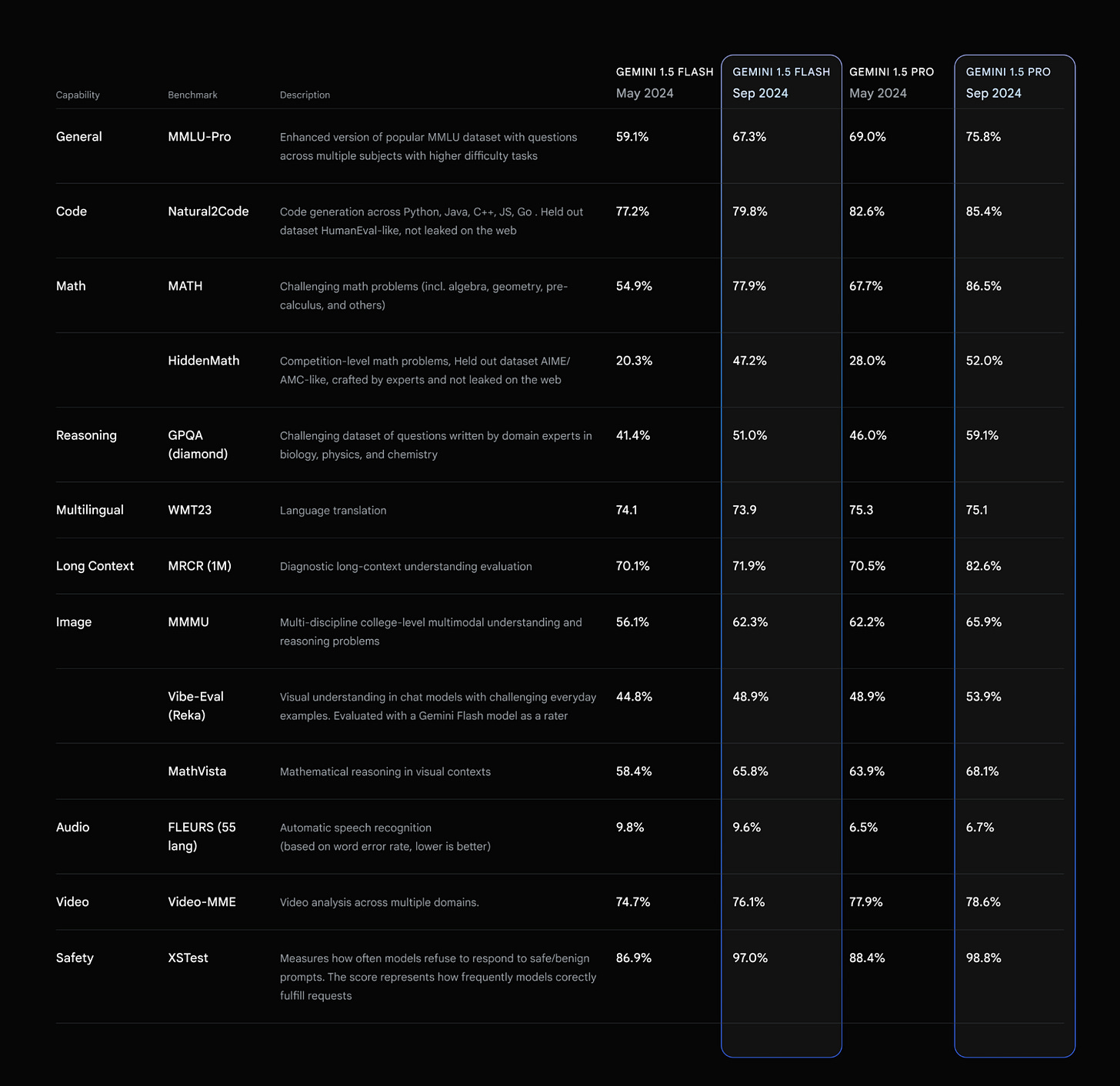

These are the production releases of the experimental Gemini 1.5 updates from last month, so the improved benchmarks are not a surprise, but they are very good. The figure below shows the benchmark improvements, and it confirms what I’ve experienced with the latest Gemini 1.5 Pro: it’s an exceptionally good AI model.

At the same time, Google made these models twice as fast, reduced latency, raised rate limits, and cut prices. Prices on 1.5 Pro dropped by more than 50%: input now costs $1.25 / 1M tokens and output costs $5.00 / 1M tokens, for prompts under 128K tokens.
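To make per-million-token pricing concrete, here is a minimal Python sketch of a per-request cost estimate. The function name, the 128,000-token tier boundary constant, and the choice to reject longer prompts (rather than model the higher tier) are assumptions for illustration, not part of any Google SDK. Rates are passed in as arguments so you can plug in whatever Google currently publishes.

```python
# Minimal per-request cost estimate at per-million-token prices.
# Assumption: the rates passed in apply only to prompts within the
# 128K-token tier; longer prompts are billed at a different tier that
# this sketch does not model, so it rejects them instead.

PROMPT_TIER_LIMIT = 128_000  # assumed tier boundary, in tokens


def estimate_cost_usd(
    input_tokens: int,
    output_tokens: int,
    input_price_per_m: float,
    output_price_per_m: float,
) -> float:
    """Return the estimated cost in US dollars for a single API request."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    if input_tokens > PROMPT_TIER_LIMIT:
        raise ValueError("prompt exceeds the 128K tier; rates differ above it")
    # Prices are quoted per 1M tokens, so scale each count down before multiplying.
    input_cost = input_tokens / 1_000_000 * input_price_per_m
    output_cost = output_tokens / 1_000_000 * output_price_per_m
    return input_cost + output_cost


if __name__ == "__main__":
    # A 100K-token prompt with no output, at $1.25 per 1M input tokens.
    print(f"${estimate_cost_usd(100_000, 0, 1.25, 0.0):.4f}")
```

For example, a 100,000-token prompt with no output at $1.25 per million input tokens comes to $0.125, so even long-context prompts cost fractions of a dollar at these rates.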

Together, these changes make Gemini 1.5 Pro and 1.5 Flash even more competitive. If you are not an AI developer but use Gemini Advanced, you will soon be able to use a chat-optimized version of Gemini 1.5 Pro-002.

Meta Connect

Meta made several announcements in conjunction with their Meta Connect conference, including Meta AI feature improvements, the release of Llama 3.2 1B and 3B text-only LLMs and 11B and 90B vision LLMs, Meta AI voice mode, and Orion, their “first true augmented reality glasses.”

Llama 3.2 – 11B and 90B Vision LLMs

Meta announced Llama 3.2 as “lightweight and multimodal” models, releasing four models: 11B and 90B multimodal (vision) LLMs, and 1B and 3B text-only LLMs. This is Meta’s first release of open multimodal LLMs.

The 11B and 90B vision LLMs are drop-in replacements for their corresponding text-only equivalents, while adding image understanding. The Llama 3.2-Vision model card says the instruction-tuned models are intended for:

“visual recognition, image reasoning, captioning, and assistant-like chat with images, whereas pretrained models can be adapted for a variety of image reasoning tasks.”

On vision benchmarks, Llama 3.2 90B scores 60.3 on MMMU, a good but not state-of-the-art result that puts it slightly ahead of GPT-4o-mini but behind GPT-4o, Claude 3.5 Sonnet, Gemini 1.5 Pro, and Qwen2-VL. Llama 3.2 11B scores 50.7 on MMMU, on par with Claude 3 Haiku.

Llama 3.2 – 1B and 3B Edge Models

In Llama 3.2, Meta released 1B and 3B text-only LLMs, small models designed to run on edge devices such as smartphones. Meta says they are well suited to instruction following, summarization, and rewriting, and they support a context length of 128K tokens.

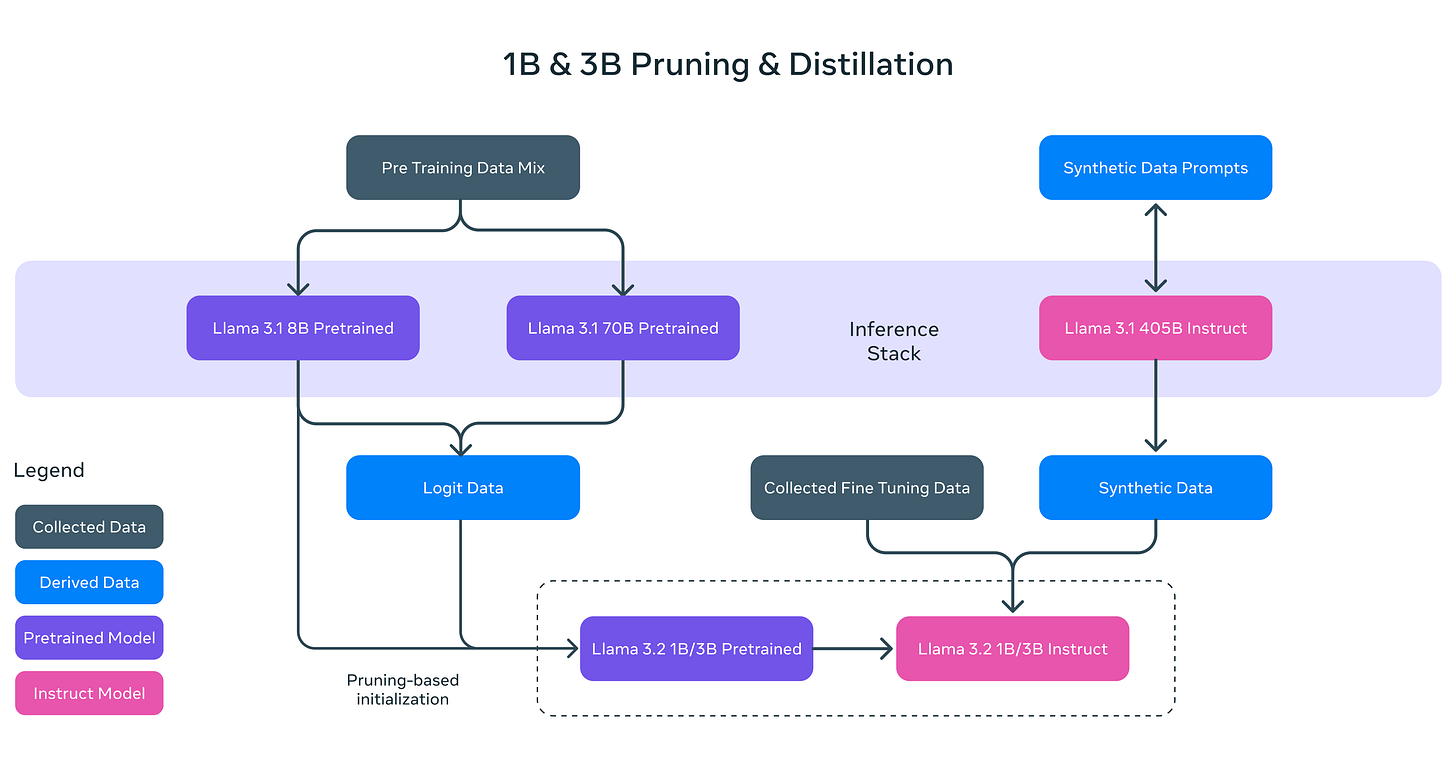

Daniel Han of Unsloth shared his analysis of Llama 3.2 on X, noting that the 1B and 3B text-only LLMs were trained on 9 trillion tokens using both pruning and distillation from the 8B and 70B models. This may account for their impressive performance for their size: 1B scores 49.3 on MMLU and 3B scores 63.4, competitive with high-quality small AI models like Gemma 2 and Phi 3.5.
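Meta has not published its exact distillation recipe, so the following is a generic sketch of token-level knowledge distillation, written in plain Python: the student is penalized by the KL divergence between the teacher's and its own temperature-softened next-token distributions. The function names, the default temperature of 2.0, the temperature-squared scaling, and averaging over positions are illustrative assumptions; a real training run would compute this on GPU tensors over a full vocabulary and would likely mix it with an ordinary next-token loss.

```python
import math


def log_softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Numerically stable log-softmax of temperature-scaled logits."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    log_z = m + math.log(sum(math.exp(x - m) for x in scaled))
    return [x - log_z for x in scaled]


def token_distillation_loss(
    student_logits: list[float],
    teacher_logits: list[float],
    temperature: float = 2.0,
) -> float:
    """KL(teacher || student) between softened next-token distributions.

    Multiplied by temperature**2, a common convention that keeps the gradient
    scale roughly independent of the temperature.
    """
    if len(student_logits) != len(teacher_logits):
        raise ValueError("student and teacher must share one vocabulary")
    log_p_t = log_softmax(teacher_logits, temperature)
    log_p_s = log_softmax(student_logits, temperature)
    # Sum over the vocabulary of p_teacher * (log p_teacher - log p_student).
    kl = sum(math.exp(lt) * (lt - ls) for lt, ls in zip(log_p_t, log_p_s))
    return kl * temperature ** 2


def sequence_distillation_loss(
    student_seq: list[list[float]],
    teacher_seq: list[list[float]],
    temperature: float = 2.0,
) -> float:
    """Average the token-level loss over every position in a sequence."""
    if len(student_seq) != len(teacher_seq) or not student_seq:
        raise ValueError("sequences must be non-empty and equally long")
    losses = [
        token_distillation_loss(s, t, temperature)
        for s, t in zip(student_seq, teacher_seq)
    ]
    return sum(losses) / len(losses)


if __name__ == "__main__":
    teacher = [[2.0, 0.5, -1.0], [0.0, 3.0, 0.1]]  # toy logits, 3-token vocab
    student = [[1.0, 1.0, 0.0], [0.2, 2.5, 0.3]]
    print(sequence_distillation_loss(student, teacher))
```

The loss is zero only when the student reproduces the teacher's distribution exactly, which is why soft targets carry more signal per token than a single correct-token label.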

Pruning to Make Efficient Small Models

One interesting aspect of these models is that the pre-trained 1B and 3B models were initialized by pruning the Llama 3.1 8B base model, then further fine-tuned on synthetic prompt-response instruction pairs distilled from Llama 3.1 405B Instruct. This yielded extremely efficient models:

Pruning enabled us to reduce the size of extant models in the Llama herd while recovering as much knowledge and performance as possible. For the 1B and 3B models, we took the approach of using structured pruning in a single shot manner from the Llama 3.1 8B. This involved systematically removing parts of the network and adjusting the magnitude of the weights and gradients to create a smaller, more efficient model that retains the performance of the original network.
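Meta does not say which importance criterion it used, so this sketch illustrates structured pruning in general rather than Meta's method. It removes whole hidden units from a single MLP layer in one shot: each unit's row in the input projection, its bias, and its column in the output projection go together, so the matrices genuinely shrink. The weight-norm importance score and the function name are assumptions for illustration, and Meta's follow-up adjustment of weights to recover performance is not modeled here.

```python
import math

Matrix = list[list[float]]


def l2(values) -> float:
    """Euclidean norm of any iterable of numbers."""
    return math.sqrt(sum(v * v for v in values))


def prune_mlp_hidden_units(
    w_in: Matrix,        # shape (hidden, d_in): one row per hidden unit
    b_in: list[float],   # shape (hidden,)
    w_out: Matrix,       # shape (d_out, hidden): one column per hidden unit
    keep: int,
) -> tuple[Matrix, list[float], Matrix, list[int]]:
    """One-shot structured pruning of an MLP layer's hidden units (hypothetical)."""
    hidden = len(w_in)
    if not 0 < keep <= hidden:
        raise ValueError("keep must be between 1 and the hidden size")
    # Assumed importance score: norm of incoming weights times norm of outgoing weights.
    scores = [l2(w_in[i]) * l2(row[i] for row in w_out) for i in range(hidden)]
    # Single shot: rank once, keep the top `keep` units, preserve their original order.
    ranked = sorted(range(hidden), key=lambda i: scores[i], reverse=True)
    kept = sorted(ranked[:keep])
    new_w_in = [list(w_in[i]) for i in kept]
    new_b_in = [b_in[i] for i in kept]
    new_w_out = [[row[i] for i in kept] for row in w_out]
    return new_w_in, new_b_in, new_w_out, kept


if __name__ == "__main__":
    w_in = [[1.0, 0.0], [0.1, 0.1], [2.0, 2.0]]  # 3 hidden units, 2 inputs
    b_in = [0.0, 0.5, 1.0]
    w_out = [[1.0, 0.01, 1.0]]                    # 1 output
    _, _, new_w_out, kept = prune_mlp_hidden_units(w_in, b_in, w_out, keep=2)
    print(kept, new_w_out)
```

Here the weakly connected middle unit is dropped, leaving units 0 and 2 and an output matrix of `[[1.0, 1.0]]`. Unlike unstructured pruning, which zeroes individual weights, removing whole units yields smaller dense matrices that run faster on ordinary hardware, which matters for on-device models.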

Llama Stack

Another important part of Meta’s announcement was Llama Stack, which defines and standardizes the building blocks and support tooling for creating AI applications, with the aim of simplifying enterprise adoption and making AI applications easier to build:

These blocks span the entire development lifecycle: from model training and fine-tuning, through product evaluation, to building and running AI agents in production. Beyond definition, we are building providers for the Llama Stack APIs.

Llama Stack is self-described as a ‘work in progress’ and is open source on GitHub (MIT license). It should help the Llama-based AI ecosystem grow and thrive in an open way, reducing vendor lock-in for parts of the AI toolchain. This is a win for AI developers and AI users.
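The lock-in argument rests on a general design pattern: application code targets a standard API, and interchangeable providers implement it. The Python sketch below illustrates that pattern only. `InferenceProvider`, the two stub providers, and `summarize_ticket` are hypothetical names that do not reflect the actual Llama Stack API or its client libraries, and the stubs return canned strings instead of calling a model.

```python
from typing import Protocol


class InferenceProvider(Protocol):
    """Hypothetical interface: any backend that can complete a prompt."""

    def complete(self, prompt: str) -> str: ...


class LocalStubProvider:
    """Toy stand-in for an on-device backend (no real model is called)."""

    def complete(self, prompt: str) -> str:
        return f"[local] {prompt}"


class HostedStubProvider:
    """Toy stand-in for a hosted backend (no network call is made)."""

    def complete(self, prompt: str) -> str:
        return f"[hosted] {prompt}"


def summarize_ticket(provider: InferenceProvider, ticket: str) -> str:
    """Application code written once against the interface, not a vendor SDK."""
    return provider.complete(f"Summarize: {ticket}")


# Registry of available backends; adding a provider does not touch app code.
PROVIDERS: dict[str, InferenceProvider] = {
    "local": LocalStubProvider(),
    "hosted": HostedStubProvider(),
}

if __name__ == "__main__":
    for name, provider in PROVIDERS.items():
        print(name, "->", summarize_ticket(provider, "printer is jammed"))
```

Switching backends means passing a different provider object; `summarize_ticket` itself never changes, which is the sense in which a shared, open API reduces lock-in.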

Meta Voice Mode

Meta has rolled out a voice mode for Meta AI, giving voice access to the AI built into Messenger, Instagram, WhatsApp, and Facebook. They have a lineup of famous voices to choose from, including Dame Judi Dench, John Cena, Kristen Bell, Awkwafina, and Keegan-Michael Key.

Orion

Meta also announced Orion, their next-generation AR glasses, which they call the most advanced pair of AR glasses ever made:

Orion combines the look and feel of a regular pair of glasses with the immersive capabilities of augmented reality – and it’s the result of breakthrough inventions in virtually every field of modern computing.

It’s not yet available to consumers, but it offers a tantalizing promise: Apple Vision Pro-like functionality in a glasses form factor weighing less than 100g.

This is a big step toward a more usable AR experience. Putting all the AR hardware on your face would be too bulky, so Meta moved the processing into a compute puck that goes in your pocket. They also created an innovative wristband that detects hand gestures for AR controls. Tethering to your smartphone and smartwatch might shrink the hardware even further, but in the meantime, this is a very manageable form factor.

Orion has the hardware that AI assistance needs: a camera, a microphone, and near-ear audio output for natural, hands-free AI interaction.

While you wait for Orion to become a consumer product, you can try the new AI features on Ray-Ban Meta glasses, which already have the AI and audio features Orion will incorporate:

We’re adding new AI features to Ray-Ban Meta glasses to help you remember things like where you parked, translate speech in real time, answer questions about things you’re seeing and more.

When you’re talking to someone speaking Spanish, French or Italian, you’ll hear what they say in English through the glasses’ open-ear speakers.

Conclusion

AI progress continues to surprise on the upside. Voice mode has well and truly landed. You can talk with your AI in ChatGPT, Gemini Live, and now also Meta AI.

Google has been playing catch-up with OpenAI for more than a year now, but this latest release is their best yet.

Last week, Alibaba’s Qwen team delivered Qwen 2.5, a suite of state-of-the-art open AI models. This week, Meta filled out their own lineup with Llama 3.2, adding smaller models for the edge and vision LLMs to complement their text-only Llama 3.1 models. We now have two great open AI model providers competing with the other top-tier AI makers.

Llama Stack provides an open, standard ecosystem for AI application development. This, along with Meta AI’s commitment to open-source AI, is great for the AI ecosystem.

We continue to share our work because we believe openness drives innovation and is good for developers, Meta, and the world. Llama is already leading the way on openness, modifiability, and cost efficiency—enabling more people to have creative, useful, and life-changing breakthroughs using generative AI. - Meta AI

After many iterations across the industry since the days of Oculus and Google Glass, Meta might finally have the design and feature set for mass-market AR glasses with Orion.

The AI future is so bright, you gotta wear shades …
