Microsoft Builds the AI Platform Shift

Microsoft Build recap, all in on AI: Phi-3, Copilot + PCs, Azure AI Studio, GitHub Copilot Extensions, Copilot Studio, Copilot for Windows as an app, Team Copilot.

Microsoft Builds the AI Hype

Microsoft kicked off its Build conference on Tuesday and debuted a host of new AI features, models, and products. The AI-related announcements at Microsoft Build boil down to “Copilots everywhere,” with the following highlights:

Copilot + PC, reinventing the PC for AI.

The small-but-capable Phi-3 language models: the 4.2B Phi-3-vision multimodal model, plus Phi-3-mini, Phi-3-small, and Phi-3-medium, a 14B LLM.

LLM scaling and efficiency hints tell us GPT-5 will be a whale.

AI developers get a better Azure AI Studio to build with.

Copilot everywhere: improvements and extensions to Copilot for Windows, Copilot Studio, and GitHub Copilot, plus access to OpenAI’s new GPT-4o, the best frontier AI model.

Copilot + PCs

Microsoft announced Copilot + PCs, designed for the AI era, just before Build. These PCs include an NPU (Neural Processing Unit) capable of more than 40 trillion operations per second (TOPS), enough to run advanced AI models efficiently on the device itself. This supports generative AI features in tools like Adobe Photoshop, as well as new features like:

Recall: A feature that records the user’s actions and screens on the PC so they can later search for and reuse them. Some complain that this sounds intrusive and ‘big brother,’ but the feature is optional and all data stays local. Is it useful? We’ll see.

Cocreator: Generate and refine AI images in near real-time directly on the device, bringing generative AI to the edge.

Live Captions: Translating audio from 40+ languages into English.

Copilot for Windows is now accessible via the new Copilot key, and brings the latest AI models, such as GPT-4o, to the PC.

They announced new Microsoft Surface devices to showcase the Copilot + PC, built on the ARM-based Qualcomm Snapdragon X Elite, which provides the CPU, GPU and NPU. Apple’s M3-powered MacBooks are already the obvious point of comparison. Other PC makers such as Dell are offering their own Copilot + PCs.

The “Copilot + PC” branding is awkward, but perhaps “AI PC” was too generic. Still, that’s what it is: a PC designed for AI workloads, with an NPU powerful enough to run AI models locally.

Phi-3

Microsoft released the Phi-3 AI models, their latest small-but-efficient LLMs, which are powerful, cost-effective and optimized for personal devices. They introduced four models in the open Phi-3 family and made them available on Hugging Face and via Azure APIs:

Phi-3-vision is a 4.2B-parameter multimodal model with language and vision capabilities; it takes image and text input and outputs text responses. It comes in a version with a 128K context window. For example, users can ask questions about a chart or ask open-ended questions about specific images.

Phi-3-mini is a 3.8B LLM, available in two context lengths (128K and 4K).

Phi-3-small is a 7B LLM, available in two context lengths (128K and 8K).

Phi-3-medium is a 14B LLM, available in two context lengths (128K and 4K). Phi-3-medium is a very capable AI model, scoring 78% on MMLU and 8.9 on MT-bench, better than Claude 3 Sonnet and Mixtral 8x22B on those benchmarks.

The Phi-3 models have pushed the efficiency frontier of LLMs further than any other model. The Phi-3 Technical Report has more details.
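To make “optimized for personal devices” concrete, here is a back-of-the-envelope sketch of how much memory the Phi-3 weights need at different precisions. The parameter counts come from the list above; the precision choices, the helper name `weight_memory_gb`, and the weights-only simplification are our own assumptions, so real memory use will be higher once the KV cache, activations and runtime overhead are included.

```python
# Rough estimate of the memory needed just to hold Phi-3 model weights.
# Assumptions (ours, not Microsoft's): weights only, ignoring the KV cache,
# activations, and runtime overhead; 1 GB = 1e9 bytes.

PHI3_PARAMS_BILLIONS = {
    "Phi-3-mini": 3.8,
    "Phi-3-vision": 4.2,
    "Phi-3-small": 7.0,
    "Phi-3-medium": 14.0,
}

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billions: float, precision: str) -> float:
    """Hypothetical helper: approximate weight memory in GB.

    Billions of parameters times bytes per parameter gives billions of
    bytes, which is GB under the 1 GB = 1e9 bytes convention.
    """
    return params_billions * BYTES_PER_PARAM[precision]


if __name__ == "__main__":
    # Print one row per model with the estimate at each precision.
    for name, size in PHI3_PARAMS_BILLIONS.items():
        row = ", ".join(
            f"{p}: {weight_memory_gb(size, p):.1f} GB" for p in BYTES_PER_PARAM
        )
        print(f"{name:13s} {row}")
```

By this rough estimate, Phi-3-mini’s weights take about 7.6 GB at 16-bit precision and about 1.9 GB at 4-bit, while Phi-3-medium needs about 28 GB at 16-bit. That arithmetic is why the smallest models, especially when quantized, are the natural fit for laptops and phones.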

LLM Scaling, Efficiency and the coming of GPT-5

“You want to focus on things that made the transition from impossible to merely difficult. … the [AI] platform is getting so much faster and cheaper … such that everything that is too expensive right now or too fragile is going to become cheap and robust faster than you can blink your eye. Really focus on those phase transitions.” - Microsoft CTO Kevin Scott

Aside from Phi-3, the biggest news around foundation AI models was hints of what’s to come. The message from Microsoft CTO Kevin Scott was that AI is getting faster and cheaper, and that capability scaling is far from over.

On the inference side, GPT-4o today is 6 times faster and 12 times cheaper than the original GPT-4 was just over a year ago.

On the training side, Kevin Scott wanted to convey scale without giving away details and numbers, so he used marine animals as an analogy. If the compute used to train GPT-3 was a shark, and GPT-4 was trained on an orca-sized AI supercomputer, the current AI supercomputer is a much larger blue whale, and “this whale-sized supercomputer is hard at work right now” training “the next sample.”

Translation: The next-generation (‘next sample’) GPT-5-level AI model is being trained now on a massive number of Hopper GPUs, and it will be a very big, powerful AI model.

To underscore the point, they brought Sam Altman on stage to discuss the state of AI models, and he declared (as he has done before) that the main thing we will notice about these new AI models is that they are a lot smarter, and to expect the step-change from GPT-4 to GPT-5 to be similar to the step up from GPT-3 to GPT-4.

But there’s even more. Satya Nadella also announced that Azure will offer NVIDIA Blackwell-based instances by next year; these deliver roughly 5x the performance of Hopper-class GPUs and can serve inference on much larger AI models. That is why Kevin Scott said exponential AI capability scaling is far from over.

Azure AI Studio

Microsoft’s Azure cloud service has offered machine learning and AI capabilities for some time; in recent years it has served foundation AI models from Mistral, Meta, and OpenAI, as well as the catalog of models on Hugging Face.

As their “models-as-a-service” offerings show, they are spreading their bets. They tout their partnership with OpenAI as most important, but they are also offering their own Phi models and thousands of other AI models.

Microsoft AI development support is evolving. Azure AI Studio brings together their Azure AI capabilities into a unified platform for developers to build and manage AI models and applications.

This week, they announced that Azure AI Studio is generally available and that GPT-4o is available on it via an API. They want AI application developers in the Azure ecosystem:

Developers can experiment with these state-of-the-art frontier models in the Azure AI Playground, then build with and customize them in Azure AI Studio.

To support building generative AI on Azure, they announced developer tools for orchestrating prompts, adding AI guardrails and protection against prompt injection, integrating with Visual Studio Code, and tracing, debugging and monitoring.

This is a real enterprise-grade platform for custom AI application development. It seems more broadly capable than what competing cloud providers offer: Google’s Vertex AI and Amazon’s Bedrock.

Copilot for Windows

Copilot for Windows is now powered by GPT-4o, and Copilot will become a regular resizable and movable Windows app. They showed off Copilot’s ability to observe the screen with a demo in which Copilot verbally coached a player through Minecraft. (“Oh no!” said the AI excitedly. “That’s a zombie. Run and hide quickly!”)

The demos were not much different from the GPT-4o demos last week. It’s a highly intelligent assistant with very fast audio response time. This solves the mystery of why OpenAI released a Mac app but not a Windows app: A Windows app is redundant with Copilot for Windows.

Copilots Everywhere

The original Copilot, GitHub Copilot, gained GitHub Copilot Extensions. Copilot Extensions from development partners broaden Copilot’s applicability to answer tool-specific questions, and enable Copilot to invoke tools to build and deploy applications:

We’re starting with GitHub Copilot Extensions from DataStax, Docker, LambdaTest, LaunchDarkly, McKinsey & Company, Microsoft Azure and Teams, MongoDB, Octopus Deploy, Pangea, Pinecone, Product Science, ReadMe, Sentry, and Stripe.

Copilot Studio, originally announced last November, is a “low-code comprehensive conversational AI solution that unlocks new Copilot capabilities.” Strip away the marketing-speak, and it’s a framework to create and manage custom AI models and applications (Copilots) with little or no coding.

OpenAI has custom GPTs, Google has Gems, and other AI players are working to customize AI for specific use cases. Copilot Studio, while not unique, might end up more powerful, because Microsoft can connect it to its many existing business applications.

For example, they announced Copilot connectors, more than 1,400 of them:

Copilot connectors include more than 1,400 Microsoft Power Platform connectors, Microsoft Graph connectors, and Power Query connectors—with Microsoft Fabric integrations coming soon.

With Copilot in Power Apps, Microsoft is reimagining their Power Platform apps (such as Power BI) by going “Copilot first.” The future for all their products is to build Copilot into them, making them AI-first.

Team Copilot adds more initiative to the AI assistant, setting it up “to work on behalf of a team, improving collaboration and project management.” This teammate or facilitator role takes the Copilot one step closer to being an AI agent.

Satya Nadella was explicit about the trend toward AI agents, saying that by next year there would be more AI agent offerings.

Conclusion - All in on the AI Platform Shift

Microsoft has gone all-in on AI. They see AI as a “platform shift” as big as, if not bigger than, the internet, and they are embracing that shift by centering their company’s offerings on AI and branding all of it with “Copilot.”

Copilot was introduced by Microsoft as an application last year, initially just a front end to ChatGPT. Then Copilot became the AI features added to Microsoft products like Office, and Microsoft brought it into the OS layer with Copilot for Windows. Now, Copilot has become their brand for the infusion of AI across the whole Microsoft ecosystem.

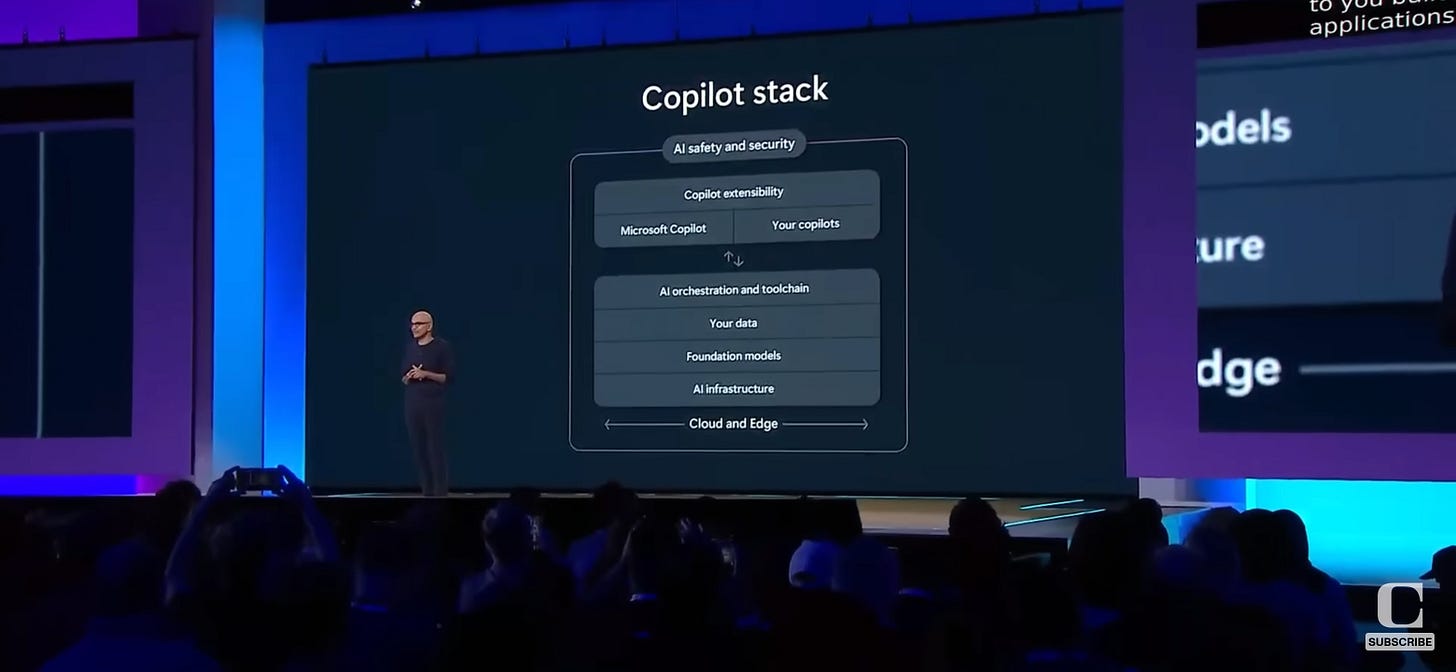

The Copilot stack is the AI application stack.

As with Google last week, Microsoft is offering a dizzying array of AI-related features and products, befitting a Big Tech behemoth. Sometimes these conferences announce products and promises that don’t pan out or don’t live up to marketing hype.

But Microsoft’s AI shift is real, and their Copilot products, starting with GitHub Copilot, are truly groundbreaking. They worked with great partners like OpenAI and Hugging Face to offer the best and the most models. They oriented their business lines and application platforms to meet this AI moment.

Microsoft’s AI bet is paying off.

AI keeps getting more capable, more efficient, faster, and cheaper, improving at a fast clip. The message from Microsoft’s CTO is that capability scaling has a long way to go. Our final takeaway, then, is that the AI ecosystem, both Microsoft’s Copilot stack and the offerings of others, will continue to evolve and improve rapidly.