New AI Models Drop

Google's Gemini 1.5 Pro for all, Code Assist, Agents & Google Vids, OpenAI's GPT-4 upgrade, Mistral's new Mixtral 8x22B

TL;DR - This week, Google opened up Gemini 1.5 Pro to the world and announced Gemini Code Assist, Agent Builder, Google Vids, and other new tools and features at their Cloud Next conference. OpenAI announced GPT-4 Turbo upgrades that bring Vision to their API and improve GPT-4 reasoning. Finally, Mistral brought the largest and best open source AI model yet, Mixtral 8x22B. Let’s dig in!

Google releases Gemini 1.5 to the world

We’ve had a whirlwind of new AI model releases already this month, and this week just amped it up. Google announced Gemini 1.5 Pro is now available in 180+ countries, pretty much everywhere. Gemini 1.5 Pro features include:

Gemini 1.5 Pro has a huge 1 million token context window, which equates to 700,000 words, 1 hour of video, 30,000 lines of code, or 11 hours of audio. This expands use cases, allows for fast digestion of large amounts of data, and may displace the need for RAG in some cases.

Native Audio Understanding: Gemini 1.5 Pro understands audio and video as direct input, yielding many multimodal analysis capabilities. It can process speech, music or audio in videos directly, so it can transcribe conversations easily and be spoken to directly without needing speech-to-text conversion.

System Instructions: Users can guide the model’s overall responses to queries.

JSON Mode: Instruct the model to only output JSON objects. This helps with structured data extraction and AI applications chaining AI model responses.

Function calling improvements: You can now select modes to limit the model’s outputs, improving function-calling reliability. Choose text, function call, or just the function itself.

Gemini 1.5 Pro is a highly capable model, on par with GPT-4 Turbo, and adding system instructions, JSON mode and function calling makes it very useful for building agents and AI apps. This is a major step forward in Gemini’s utility.
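To make the JSON mode and function calling features more concrete, here is a minimal sketch of what an app might do with a JSON-only model reply that names a function to call. It does not use the Gemini API: the reply is a hard-coded string, and the `get_weather` tool, the `TOOLS` table and the `{"name": ..., "args": ...}` shape are all hypothetical, chosen only for illustration.

```python
import json


# Hypothetical tool the model is allowed to call (not a real API).
def get_weather(city: str) -> str:
    return f"Sunny in {city}"


# Allow-list of callable tools, keyed by the name the model must use.
TOOLS = {"get_weather": get_weather}


def handle_model_output(raw: str) -> str:
    """Parse a JSON-only model reply and run the function it names."""
    try:
        call = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"model did not return valid JSON: {exc}") from exc
    if not isinstance(call, dict):
        raise ValueError("expected a JSON object")
    name = call.get("name")
    if name not in TOOLS:
        raise ValueError(f"unknown function: {name!r}")
    return TOOLS[name](**call.get("args", {}))


# Simulated model reply; a real one would come from the model's API.
reply = '{"name": "get_weather", "args": {"city": "Las Vegas"}}'
print(handle_model_output(reply))
```

Constraining the model to JSON is what makes the `json.loads` step dependable, and limiting it to known function names is what makes the allow-list check meaningful.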

The Gemini 1.5 Pro system prompt was also helpfully leaked on X.

Google Cloud Next: Agents, Code Assist, Vids and More

Google’s Cloud Next 2024 in Las Vegas this week brought out Gemini 1.5 Pro news, but Google had a lot more to say. One of the themes at this conference was agents. Lots of different agents.

Google announced Vertex AI Agent Builder, a platform for building AI agents. The Vertex AI Agent Builder aims to make it easy to build agents using Google’s Gemini AI models and other Vertex features in a no-code environment. Thanks to Gemini 1.5 Pro’s new capabilities in function calling, system prompts and JSON outputs, Google has a strong no-code platform for building useful AI agents.

“Vertex AI Agent Builder allows people to very easily and quickly build conversational agents. … You can build and deploy production-ready, generative AI-powered conversational agents and instruct and guide them the same way that you do humans to improve the quality and correctness of answers from models.” - Google Cloud CEO Thomas Kurian

This agent builder can utilize RAG APIs and vector search to provide grounding and reduce hallucinations. They shared multiple agent demos, including an agent that analyzes marketing campaigns, as well as an agent to handle your health insurance plan enrollment.
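As a rough illustration of how retrieval can ground an agent's answers, the sketch below picks the most relevant document for a question and places it in the prompt. It is a toy: word counts stand in for real embeddings, and the documents, function names and prompt wording are made up for this example rather than taken from Vertex AI.

```python
import math
from collections import Counter


def embed(text):
    """Toy 'embedding': a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(question, documents):
    """Put the retrieved text in front of the question to ground the answer."""
    context = "\n".join(retrieve(question, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical knowledge base for illustration only.
DOCS = [
    "Open enrollment for health insurance plans ends on the last day of the month.",
    "The spring marketing campaign targets new newsletter subscribers.",
]

print(build_prompt("When does health insurance enrollment end?", DOCS))
```

A production system would swap `embed` for a learned embedding model and the sorted list for a vector index, but the flow of retrieve, then prompt, is the same.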

Google launched Gemini Code Assist, which uses the latest Gemini 1.5 Pro and takes advantage of its huge context window. This makes it a strong contender with GitHub’s Copilot and others in this space. Gemini Code Assist’s main role is AI-powered coding assistance in the IDE (VS Code, IntelliJ, Cloud Workstations, Cloud Shell Editor): completing code, generating functional code or unit tests, helping debug, etc. It can also help troubleshoot issues in Google Cloud.

Google also announced Gemini in Databases, a bundle of AI-powered tools and co-pilots for developing and monitoring application databases. It can generate SQL code, explain database configurations, migrate databases and more.

Google Vids is a new AI-powered video generation tool that generates video presentations from your own content: Outlines, slides, and documents. You can prompt it to write scripts, pull in b-roll clips, have it do AI-generated voice-overs or add your own. It’s more of a video editing tool with shareability (like other Google docs) than a Sora or Runway-like AI video generation tool. It’s been added to Google Workspace apps and is targeting business use cases like marketing, webinars, product demos, etc.

Other news items:

Imagen 2.0 has new features including text-to-live that turns images into short videos, digital watermarking, and image editing.

Google Meet now has AI meeting notes/summaries.

Google unveiled an Arm-based data center processor and new TPU v5p chip, designed to run AI workloads in Google Cloud.

With their full release of Gemini 1.5 Pro and these other updates, Google is finally bringing their A-game to AI.

GPT-4 Update

Not to be upstaged, OpenAI also released an improved version of GPT-4 Turbo with Vision for all developers to use via the API. They say it is “majorly improved” in speed and on reasoning, and “Vision requests can now also use JSON mode and function calling.”

They mention several AI tools that are already using GPT-4 Vision capabilities:

Cognition Labs’ AI agent for software development, Devin, used GPT-4 Vision for coding tasks.

“The healthifyme team built Snap using GPT-4 Turbo with Vision to give users nutrition insights through photo recognition of foods from around the world.”

“Make Real, built by Tldraw, lets users draw UI on a whiteboard and uses GPT-4 Turbo with Vision to generate a working website powered by real code.”

OpenAI has touted overall improvements, and there have been reports on the benchmark performance of the latest GPT-4 Turbo version. However, the reporting from different users has been scattershot, and OpenAI hasn’t released specifications or benchmark claims themselves.

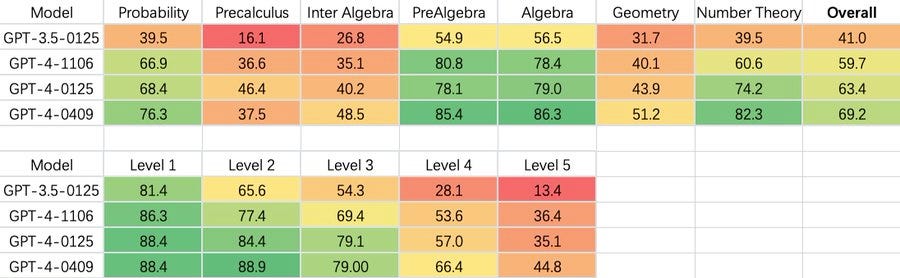

Zheng Yuan on X tested GPT-4-0409-turbo against the previous GPT-4-turbo on the MATH 500 test set and found a big performance improvement on the hardest reasoning problems.

Wen-Ding Li shared that GPT-4 04-09 shows a big jump in math and reasoning on the LiveCodeBench coding benchmark, with a Pass@1 of 66.1, putting it ahead of the prior GPT-4 Turbo (55.7) and outperforming all other LLMs (Claude-3 Opus is at 58.7). Bindu Reddy says “At least in coding, it is heads and shoulders above anyone else.”

Pietro Schirano on X did a comparison between the latest version of gpt-4-turbo and the previous one, 0125-preview for coding. He found the new version “less verbose and it goes directly into code,” also saying:

From a full day of testing with the new gpt-4-turbo, a few things stand out: It's much less lazy and more willing to output large chunks of complete code. It seems better at reasoning. Plus, function calling with vision is going to be a huge unlock.

On the other hand, there are claims that GPT-4 Turbo is a “lazy coder” and that it performs worse on aider’s coding benchmark. Kyle Corbitt found the new GPT-4 update a wash (for non-coding tasks): “Conclusion: The GPT-4 April release is pretty comparable on most things, but much worse on guided summarization.”

More use will determine its real performance, and that use is now cheaper. gpt-4-turbo-2024-04-09 input tokens are now three times cheaper, at $10.00 per 1 million tokens, while output tokens are half the previous price, at $30.00 per 1 million tokens.
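For a sense of what those prices mean per request, here is a small back-of-the-envelope calculator. The two prices are the ones quoted above; the function name and the example token counts are just illustrative.

```python
# Prices quoted above for gpt-4-turbo-2024-04-09, in USD per 1 million tokens.
INPUT_PRICE_PER_M = 10.00
OUTPUT_PRICE_PER_M = 30.00


def estimate_cost(input_tokens, output_tokens):
    """Estimated cost in USD for one request with the given token counts."""
    total = input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M
    return total / 1_000_000


# Example: a 2,000-token prompt that produces a 500-token reply.
print(f"${estimate_cost(2_000, 500):.4f}")
```

Because output tokens cost three times as much as input tokens at these rates, long generations dominate the bill faster than long prompts do.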

Mistral’s New 8x22B MoE model

Mistral dropped a new Mixtral-8x22B MoE model via a torrent link on April 9th, releasing an open AI model in their own understated way and making April 9th a triple-header AI model release day.

Mixtral-8x22B is an 8-way mixture-of-experts with 141B total parameters, of which about 39B are active at a time. The Mixtral-8x22B model weights have been put on HuggingFace. What was released is a pretrained base model that does not have any moderation mechanisms; those would come with the instruct-tuning and fine-tuning used to make refined Instruct models.
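To see why only a fraction of the parameters are active at a time, here is a toy sketch of mixture-of-experts routing. It assumes the top-2 routing Mistral described for the earlier Mixtral 8x7B; the scalar "experts" and gate scores are invented for illustration and have nothing to do with the real model's weights.

```python
import math


def top_k_route(gate_logits, k=2):
    """Return [(expert_index, weight)] for the k highest-scoring experts."""
    top = sorted(range(len(gate_logits)),
                 key=lambda i: gate_logits[i], reverse=True)[:k]
    # Softmax over only the selected logits, so the weights sum to 1.
    peak = max(gate_logits[i] for i in top)
    exps = [math.exp(gate_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_forward(x, experts, gate_logits, k=2):
    """Run only the selected experts and mix their outputs by gate weight."""
    return sum(w * experts[i](x) for i, w in top_k_route(gate_logits, k))


# Eight toy experts: expert n simply multiplies its input by n + 1.
experts = [lambda x, scale=n + 1: scale * x for n in range(8)]

# Hypothetical gate scores for one token.
gate_logits = [0.1, 2.0, -1.0, 0.5, 2.0, 0.0, -0.5, 1.0]

print(top_k_route(gate_logits))
print(moe_forward(2.0, experts, gate_logits))
```

In this sketch six of the eight experts are never evaluated for the token, which is the sense in which a 141B-parameter model can run with roughly 39B parameters active.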

How does Mixtral-8x22B perform? With the caveat that this is a base model, and instruct models will be better and more reliable, some early benchmarks show that this model may be comparable to Claude 3 Sonnet, and one user found Mixtral-8x22B base model somewhat comparable to GPT-4 pre-release base model of a year ago. This is the new open AI model leaderboard king.

The open AI model community is excited by what we can do with this AI model. Quantized local versions, fine-tunes and more have been and will be developed by the fast-moving open-source AI model community.

Quantizing it can make it smaller. Quantized versions of Mixtral-8x22B have already been developed; it’s even been quantized down to 1-bit.
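A quick way to see what quantization buys is to estimate the size of the weights at different precisions. The sketch below uses the 141B parameter count given above and plain arithmetic; it counts weights only and ignores activations, the KV cache and any per-block overhead that real quantization formats add, so treat the numbers as lower bounds.

```python
# Total parameter count reported above for Mixtral-8x22B.
TOTAL_PARAMS = 141e9


def weight_size_gb(num_params, bits_per_weight):
    """Approximate size of the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


for bits in (16, 8, 4, 1):
    print(f"{bits:>2}-bit: ~{weight_size_gb(TOTAL_PARAMS, bits):.1f} GB")
```

By this estimate, 16-bit weights need roughly 282 GB, while 4-bit weights come to about 70 GB, which is why quantized versions are the ones people run on local hardware.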

This model is available to try via API on Together and in their AI model playground. Other services will host it shortly.

If you have a Mac with an M2 Ultra chip and 96GB of memory, the new Mixtral 8x22B runs nicely in MLX. “You can run inference locally on your Mac (+96GB URAM).”

A fine-tune of Mixtral-8x22B was released on HuggingFace almost immediately: Zephyr-orpo-141B-A35B. The team turned it around within a single day, thanks to an efficient instruction fine-tuning dataset called Capybara, a new alignment technique called ORPO, and access to 32 H100s:

Zephyr is a series of language models that are trained to act as helpful assistants. Zephyr 141B-A35B is the latest model in the series, and is a fine-tuned version of mistral-community/Mixtral-8x22B-v0.1 that was trained using a novel alignment algorithm called Odds Ratio Preference Optimization (ORPO) with 7k instances for 1.3 hours on 4 nodes of 8 x H100s.

Gemma Updates

Also this week, Google expanded the Gemma family with Instruct Gemma, Code Gemma and Recurrent Gemma. These are all small open AI models (2B and 7B sizes), available to run and use locally.

Summary

Google upped their game with Gemini 1.5 Pro and AI agent tools, Mistral’s Mixtral 8x22B advanced open AI models as the best-performing open AI model, and OpenAI gave us major upgrades with GPT-4 Turbo with Vision. A huge news week for AI, and it’s still Thursday.