Nvidia GTC - Nvidia revs up the AI revolution

Nvidia GTC 2024: Blackwell B200, 40-petaflop GB200, new DGX AI supercomputer, NIMs, NeMo microservices, Project GR00T, DRIVE Thor. Nvidia is the indispensable company for the AI era.

Intro

Nvidia’s annual technology showcase, GTC, has become all about AI, and GTC 2024 was a pretty big deal, with hype as big as Nvidia’s market cap. Jensen Huang took the stage for his keynote in a leather jacket and announced ‘this is not a concert’ to a concert-sized audience in San Jose’s massive SAP Center. He then delivered a two-hour keynote touting Nvidia’s next-generation Blackwell AI chips and systems, along with a number of other AI-related technologies and products. Nvidia is now built entirely around generative AI.

Nvidia’s Big Bets Pay Off

How did Nvidia become the AI star they are now?

Nvidia made some really smart bets long ago to become the dominant AI chip and system hardware supplier, the pick-and-shovel seller in the AI gold rush. It started with the company’s founding around ‘accelerated computing’: the idea of parallelizing computation, a valuable but difficult challenge.

For its first dozen or so years, Nvidia’s niche was applying parallel computing to graphics, and it became the leading seller of graphics chips and cards. But in 2006, Nvidia introduced CUDA, a platform that lets developers program its GPUs for general-purpose parallel applications beyond graphics.

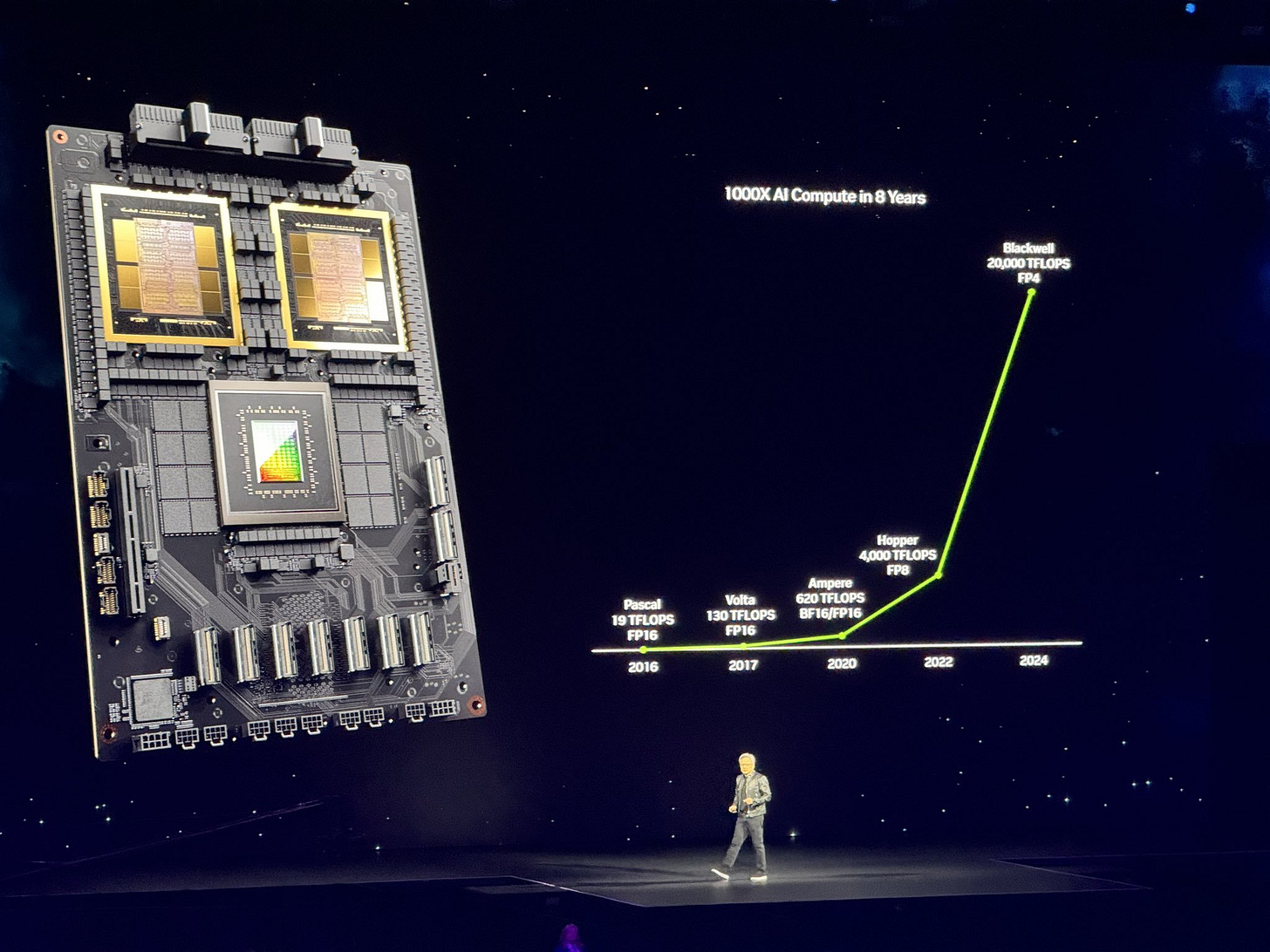

Their development and support for general parallel programming led to the use of GPUs in training deep learning neural networks. Nvidia embraced this growing market for using GPUs to train AI models, and in 2016 they delivered the first DGX-1, an “AI supercomputer in a box,” to a startup called OpenAI.

As scaled-up training of AI models started using larger networks of GPUs in the late 2010s, Nvidia saw that it required ever more interconnect bandwidth. So Nvidia bought Mellanox Technologies (announced in 2019, completed in 2020) to integrate Mellanox’s high-performance InfiniBand and Ethernet networking with its own GPUs. Together with Nvidia’s own NVLink and NVLink Switch interconnects, this networking is a key component in their higher-performance systems.

Lastly, for over a decade Nvidia has been betting on the AI market by building a supporting software stack and conducting research in AI, autonomous vehicles, robotics, simulation, and more. Their research seeds the new applications that drive demand for their chips. Pretty smart.

All of Nvidia’s bets since their founding have come together into a massive payoff: AI has exploded in importance, activity and impact. They sell the highest-performing GPUs and systems for AI model training and inference, and they are leading the competition by so much they have a near-monopoly on the trillion-dollar AI opportunity at a time when demand far outstrips supply. Twenty years to an overnight success.

GTC 2024 Key Announcements

Nvidia GTC 2024 showed us that Nvidia is not slowing down but doubling down. Nvidia brought updates on its product line and roadmaps, and shared trends and applications of AI across many verticals. They fully expect the AI revolution to keep going, and they intend to make it happen by supplying the chips, systems and infrastructure for AI and related verticals such as self-driving cars, robotics and healthcare.

Their GPU roadmap is aggressive, but even more aggressive are the systems, software and other elements they are bringing to bear on AI. It’s a mistake to call Nvidia a chip company. Nvidia is making itself the AI hardware, software and systems supplier.

To make the point, the top takeaways from the Jensen Huang GTC 2024 keynote were:

Blackwell platform

NIMs - Nvidia Inference Microservice

NeMo and Nvidia AI Foundry

Omniverse and Isaac Robotics

We will go through each one in detail below, but none of these is ‘just a chip’.

Blackwell

The centerpiece of Jensen Huang’s GTC keynote was the announcement of Blackwell.

The Blackwell B200 is the largest GPU Nvidia has built, with 208 billion transistors across two dies, more than two and a half times the 80 billion of its predecessor, Hopper. Blackwell’s two dies behave as one (extremely large) chip, linked by 10 terabytes per second of die-to-die bandwidth.

Blackwell is built on TSMC’s 4NP process, a refined version of the 4N process used for Hopper. As Jensen himself has noted, Moore’s Law is running out of steam; Blackwell gained little in raw transistor speed or density over Hopper. So Nvidia found other ways to improve: higher memory bandwidth, a next-generation NVLink that doubles interconnect capacity, and FP4, a lower-precision 4-bit floating-point format.

FP4 in particular roughly doubles LLM inference throughput relative to FP8 by halving the bits per value, while 4-bit quantized LLMs often perform almost as well as their FP8 counterparts. Because fewer bits means more efficiency, FP4 is likely to become popular for LLM inference.
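To make the bits-versus-precision trade-off concrete, here is a minimal Python sketch of block-scaled 4-bit quantization. It assumes the E2M1 layout (1 sign, 2 exponent, 1 mantissa bit) from the Open Compute Project’s Microscaling (MX) specification and a single shared scale per block; Nvidia’s actual Blackwell FP4 scaling scheme may differ, and the function names are hypothetical.

```python
# Hypothetical sketch: block-scaled FP4 (E2M1) quantization.
# Assumption: the FP4 grid below follows the OCP Microscaling (MX) E2M1 format;
# Nvidia's exact Blackwell scaling scheme may differ.

# Non-negative magnitudes an E2M1 value (1 sign, 2 exponent, 1 mantissa bit) can hold.
FP4_E2M1_MAGNITUDES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
FP4_MAX = FP4_E2M1_MAGNITUDES[-1]


def quantize_block_fp4(values):
    """Quantize a block of floats to FP4 codes sharing one scale factor.

    Returns (scale, codes); each code is a signed FP4 grid value and the
    dequantized value is scale * code.
    """
    max_abs = max(abs(v) for v in values)
    # Map the block's largest magnitude onto FP4's largest value (6.0).
    scale = max_abs / FP4_MAX if max_abs > 0 else 1.0
    codes = []
    for v in values:
        target = abs(v) / scale
        # Round to the nearest representable FP4 magnitude, then restore the sign.
        nearest = min(FP4_E2M1_MAGNITUDES, key=lambda m: abs(m - target))
        codes.append(-nearest if v < 0 else nearest)
    return scale, codes


def dequantize_block_fp4(scale, codes):
    """Recover approximate floats from a scale and FP4 codes."""
    return [scale * c for c in codes]


def storage_bytes(num_values, bits_per_value):
    """Bytes for the values themselves, ignoring the per-block scale."""
    return num_values * bits_per_value / 8


if __name__ == "__main__":
    weights = [0.12, -0.5, 0.33, 0.9, -0.07, 0.61, -1.2, 0.02]
    scale, codes = quantize_block_fp4(weights)
    for w, q in zip(weights, dequantize_block_fp4(scale, codes)):
        print(f"{w:+.3f} -> {q:+.3f}")
    print("FP8 bytes:", storage_bytes(len(weights), 8))
    print("FP4 bytes:", storage_bytes(len(weights), 4))
```

Each weight snaps to one of only eight magnitudes (plus sign), so some precision is lost; the per-block scale is what keeps that rounding error proportional to each block’s own range, while storage is half that of FP8.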

Put that all together, and the Blackwell B200 GPU delivers up to 20 petaflops of FP4 compute. At rack scale, Nvidia claims up to 30 times the LLM inference performance of the same number of Hopper H100 GPUs. This huge leap in efficiency comes almost entirely from system-level optimizations rather than raw transistor gains.

The Grace Blackwell GB200 Superchip connects two B200 GPUs to one Grace CPU. In FP4 mode, it delivers 40 petaflops of performance.

Nvidia is selling not just GPU chips and boards, but whole AI supercomputers. Jensen Huang proudly pointed out that with Blackwell GPUs, they can deliver a single-rack DGX GB200 system (with 36 GB200s) that achieves 1.44 exaflops of FP4 performance. It’s a one-ton, 600,000-part beast.

Altogether, the GB200 NVL72 has 36 Grace CPUs and 72 Blackwell GPUs, with 720 petaflops of FP8 and 1,440 petaflops of FP4 compute. It has 130 TB/s of multi-node bandwidth, and Nvidia says the NVL72 can handle LLMs of up to 27 trillion parameters. The remaining rack units are for networking and other data center elements.
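The rack-level figures line up with the per-chip numbers quoted for the B200 (20 petaflops) and GB200 (40 petaflops). A quick Python check, using only the numbers quoted in this article:

```python
# Figures for the GB200 NVL72 rack, as quoted in the article.
GPUS_PER_RACK = 72
GRACE_CPUS_PER_RACK = 36
RACK_FP8_PFLOPS = 720
RACK_FP4_PFLOPS = 1440

# Two Blackwell GPUs pair with each Grace CPU in a GB200.
gpus_per_gb200 = GPUS_PER_RACK // GRACE_CPUS_PER_RACK

fp4_pflops_per_gpu = RACK_FP4_PFLOPS / GPUS_PER_RACK
fp8_pflops_per_gpu = RACK_FP8_PFLOPS / GPUS_PER_RACK
fp4_pflops_per_gb200 = fp4_pflops_per_gpu * gpus_per_gb200

print(f"GPUs per GB200:       {gpus_per_gb200}")
print(f"FP4 PFLOPS per B200:  {fp4_pflops_per_gpu:.0f}")
print(f"FP8 PFLOPS per B200:  {fp8_pflops_per_gpu:.0f}")
print(f"FP4 PFLOPS per GB200: {fp4_pflops_per_gb200:.0f}")
```

The FP8 figure being exactly half the FP4 figure reflects the same halve-the-bits, double-the-throughput trade that makes FP4 attractive for inference.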

To put the DGX GB200 in perspective, its 1.44 exaflops of FP4 performance dwarfs the 170 teraflops (FP16) of the original 2016 DGX-1 system by roughly 8,500 times. On low-precision AI workloads, it outperforms all but a handful of the most powerful supercomputers.
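The 8,500x figure is simple unit arithmetic. The sketch below assumes the DGX-1’s 170 teraflops is its FP16 peak, so part of the gap comes from the lower precision, not just faster hardware.

```python
# Compare peak throughput of the 2016 DGX-1 with a 2024 GB200 NVL72 rack.
# Note: the DGX-1 figure is FP16 and the NVL72 figure is FP4, so part of the
# gap comes from the lower precision, not just faster hardware.
TERA = 1e12
EXA = 1e18

dgx1_flops = 170 * TERA    # DGX-1, FP16
nvl72_flops = 1.44 * EXA   # GB200 NVL72, FP4

speedup = nvl72_flops / dgx1_flops
print(f"Speedup: {speedup:,.0f}x")  # prints "Speedup: 8,471x"
```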

Much of the magic behind this system’s performance is the interconnect. Nvidia’s 50-billion-transistor NVLink Switch chip provides enough interconnect capacity that “every single GPU talks to every single other GPU at full speed at the same time.” A single-rack DGX has “enough interconnect to carry the internet.”
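Why a switch? With 72 GPUs there are thousands of distinct GPU pairs, far too many to wire point to point, so each GPU instead connects to the switch fabric and reaches any peer through it. The sketch below counts the pairs and, assuming the rack’s 130 TB/s figure is the sum of per-GPU NVLink bandwidth split evenly across GPUs, estimates each GPU’s share.

```python
from math import comb

GPUS = 72
NVLINK_TB_PER_S = 130  # rack-level bandwidth quoted for the NVL72

# Distinct GPU pairs that must be able to communicate in an all-to-all pattern.
gpu_pairs = comb(GPUS, 2)

# Assumption: the 130 TB/s is the sum of per-GPU NVLink bandwidth, split evenly.
per_gpu_tb_per_s = NVLINK_TB_PER_S / GPUS

print(f"GPU pairs: {gpu_pairs:,}")                                 # 2,556
print(f"NVLink bandwidth per GPU: {per_gpu_tb_per_s:.1f} TB/s")  # 1.8
```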

This last point is critical for serving larger AI models that must run inference across many GPUs. The system is built to serve GPT-5 or some other AI model of roughly 20 trillion parameters.
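Serving a model of 20 to 27 trillion parameters is first a memory problem: the weights alone must fit across the rack’s GPUs. This back-of-envelope sketch computes the weight footprint at different precisions; it deliberately ignores KV cache, activations and quantization scale factors, which add to the real total.

```python
def weight_terabytes(num_params, bits_per_param):
    """Memory for the model weights alone, in terabytes (10**12 bytes).

    Ignores KV cache, activations and quantization scale factors.
    """
    return num_params * bits_per_param / 8 / 1e12


PRECISIONS = {"FP16": 16, "FP8": 8, "FP4": 4}

for params in (20e12, 27e12):
    for name, bits in PRECISIONS.items():
        tb = weight_terabytes(params, bits)
        print(f"{params / 1e12:.0f}T params @ {name}: {tb:.1f} TB")
```

A 27-trillion-parameter model needs 54 TB of weights at FP16 but only 13.5 TB at FP4, which is one reason low precision and a rack-wide NVLink domain go hand in hand.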

You can scale further, to a full DGX SuperPOD AI supercomputer with 11.5 exaflops of FP4 compute, and then to the data center level, building a full “AI factory.”

AWS is building out CUDA-enabled Bedrock for their data centers, and other cloud providers will be lining up to buy these systems. In January, Meta announced plans to have 350,000 Nvidia H100 GPUs by the end of 2024. That is more than 10 times the GPU count of the 32,000-GPU, 645-exaflop Blackwell data center Nvidia described, which alone is enough compute to train a GPT-4-class model in less than a day.
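The scale-out numbers can be cross-checked against the single-rack figures. This sketch uses only the figures quoted in this article; note that the Meta comparison is a ratio of GPU counts, not of compute, since fleets built from different GPU generations deliver different throughput per GPU.

```python
# Scale-out figures quoted in the article (FP4 throughput).
RACK_EFLOPS = 1.44          # one DGX GB200 NVL72 rack
SUPERPOD_EFLOPS = 11.5      # DGX SuperPOD
DATACENTER_GPUS = 32_000
DATACENTER_EFLOPS = 645
META_GPUS = 350_000

# Roughly how many NVL72 racks a SuperPOD's throughput corresponds to.
racks_per_superpod = SUPERPOD_EFLOPS / RACK_EFLOPS

# Per-GPU throughput implied by the data-center figure (1 EF = 1,000 PF).
pflops_per_gpu = DATACENTER_EFLOPS * 1000 / DATACENTER_GPUS

# Ratio of GPU counts only; it says nothing about relative compute
# if the two fleets use different GPU generations.
meta_gpu_ratio = META_GPUS / DATACENTER_GPUS

print(f"Racks per SuperPOD:   {racks_per_superpod:.1f}")
print(f"PFLOPS per GPU:       {pflops_per_gpu:.1f}")
print(f"Meta GPUs vs. 32,000: {meta_gpu_ratio:.1f}x")
```

The implied ~20 petaflops per GPU matches the B200’s FP4 rating, and the SuperPOD works out to about eight NVL72 racks’ worth of throughput.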

NIMs and NeMo Microservices

NIM stands for Nvidia Inference Microservice, a microservice focused on serving AI models. For developers, think of NIMs as Docker containers for generative AI. Nvidia is launching specific generative AI microservices for healthcare to support drug discovery, digital health and medical technology.

The new suite of Nvidia healthcare microservices includes optimized NIM AI models and workflows with industry-standard APIs, or application programming interfaces, to serve as building blocks for creating and deploying cloud-native applications. They offer advanced imaging, natural language and speech recognition, and digital biology generation, prediction and simulation.
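Because NIMs are packaged services behind standard APIs, calling one can look like calling any web API. As a hedged sketch, assume an LLM NIM is running locally and exposes an OpenAI-style `/v1/chat/completions` endpoint on port 8000; check the documentation for the specific microservice, since healthcare NIMs may expose different APIs. The helper functions and the model name are hypothetical, and only the Python standard library is used.

```python
import json
import urllib.request


def build_chat_request(base_url, model, prompt, max_tokens=128):
    """Build (but do not send) a POST to an OpenAI-style chat completions API.

    Assumption: the NIM exposes /v1/chat/completions; check the docs for the
    specific microservice you deploy.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat_request(request, timeout=30):
    """Send the request and return the first reply's text (needs a running service)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With a service running, usage would be `send_chat_request(build_chat_request("http://localhost:8000", "example/hypothetical-model", "Summarize this note."))`. Keeping request construction separate from sending makes the payload easy to inspect without a live endpoint.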

They demonstrated a collaboration with Hippocratic AI, showing AI “nurses” assisting with patient queries and interactions.

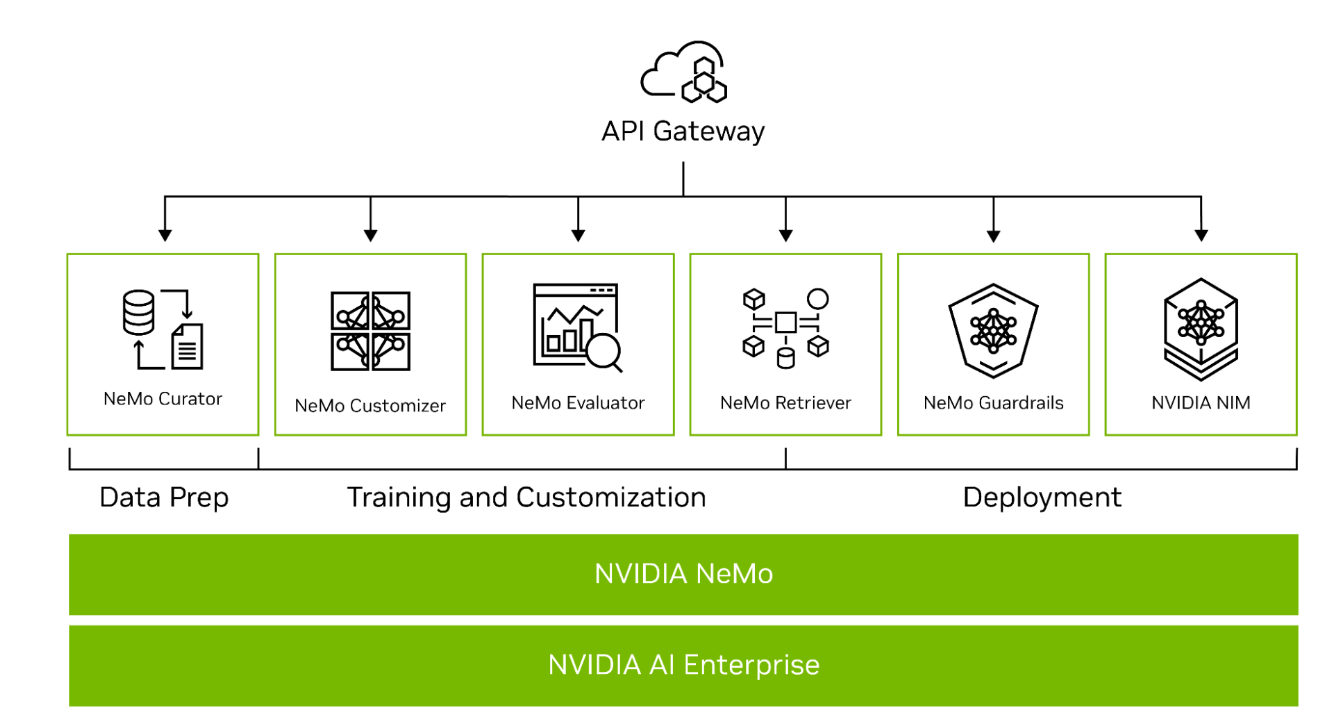

AI developers can simplify custom generative AI development with Nvidia’s NeMo Microservices. The suite of NeMo tools helps with AI model training, customization, security and deployment.

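Nvidia’s AI Foundry service ties together their foundation models, NeMo tools and DGX Cloud infrastructure, so enterprises can build and deploy custom models on Nvidia’s hardware and services.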

Project GR00T and Isaac Robotics

“Building foundation models for general humanoid robots is one of the most exciting problems to solve in AI today. The enabling technologies are coming together … to take giant leaps toward artificial general robotics.” - Nvidia CEO Jensen Huang

Project GR00T, a multimodal AI to power the humanoid robots of the future, could be a path toward embodied AGI.

Project GR00T provides a general-purpose foundation model that lets humanoid robots take text, speech, videos or even live demonstrations as input and process them to take specific actions.

The project comes out of Nvidia’s GEAR robotics research lab, co-led by Dr. Jim Fan. He explains more about GR00T on X:

GR00T is born on NVIDIA’s deep technology stack. We simulate in Isaac Lab (new app on Omniverse Isaac Sim for humanoid learning), train on OSMO (new compute orchestration system to scale up models), and deploy to Jetson Thor (new edge GPU chip designed to power GR00T).

GR00T attacks the critical issue for robotics: the intelligence that controls the robot, and how to train an AI model to provide it.

Other Announcements

Nvidia announced Earth-2, a climate digital twin cloud platform that uses AI and digital twin technology to forecast climate change and weather at high resolution (2-kilometer scale) and high speed. Used by scientists and multiple startups, it will be a massive win for predicting extreme weather faster and more accurately, helping to mitigate its impacts.

Nvidia’s Omniverse APIs are now available on the Apple Vision Pro. Developers can build Omniverse applications that stream to the Apple Vision Pro, so users can immerse themselves in simulation environments built with Universal Scene Description (OpenUSD).

GTC 2024 previewed automotive tech offerings: DRIVE Thor is an in-vehicle AI computer that combines advanced driver assistance and infotainment. Multiple level 4 self-driving solutions will be built on it, and Nvidia will deliver its own self-driving software in coming years.

The Big Picture

The scaling of transistors via Moore’s Law is on its last legs as transistors shrink to atomic scale, but Nvidia and others are finding ways to keep improving computer performance, as Blackwell shows. The industry will continue to improve AI chips to take us to AGI levels and beyond.

Nvidia is not just a chip supplier, they are selling generative AI supercomputers. Their Blackwell DGX systems are best-in-class AI supercomputers that will sell very well in coming years.

Nor is Nvidia just a hardware vendor. They are offering AI software and services, such as NIM microservices and software frameworks like CUDA, TensorRT-LLM, Omniverse and Isaac Sim, all of which serve the generative AI market and ecosystem. This makes Nvidia an indispensable company for the AI era.