o3-pro, the AI That Thinks Too Much

OpenAI’s o3-pro is smart and slow. It gobbles tokens and thinks at length, and it needs plenty of context and the right use cases to show its strength in deep analysis.

OpenAI Releases o3-pro

OpenAI’s o3-pro was released on June 10th, with OpenAI announcing the release in a tweet and model release notes. The announcement was relatively understated for what is arguably the most intelligent AI model yet.

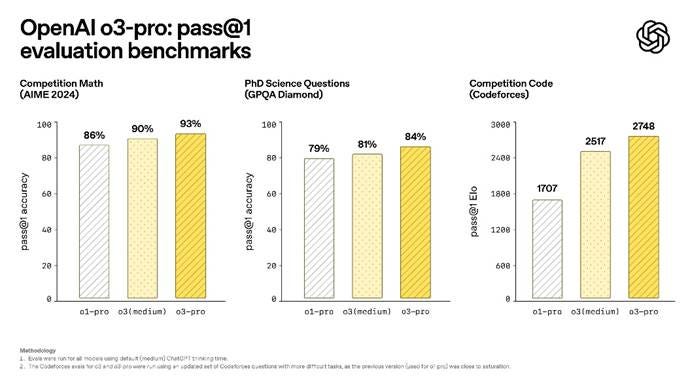

o3-pro is an advanced reasoning model built on the same foundation as o3, but it is designed to think deeper and harder. It’s (incrementally) better than o3 and state-of-the-art (SOTA) on several coding, math, and reasoning benchmarks.

On coding – Codeforces: o3-pro earns a stellar 2748 rating on Codeforces, beating out o3 and placing o3-pro 159th among Codeforces competitors.

On math – AIME 2024: o3-pro gets 94% on the AIME 2024 math exam, edging out Google’s Gemini 2.5 Pro (92%) and o3 (90%) and practically saturating that hard math benchmark.

On PhD-level science – GPQA Diamond: o3-pro scores 84% on GPQA Diamond, which assesses advanced scientific reasoning. That score roughly matches Gemini 2.5 Pro (84.0%) and Grok-3 thinking (84.6%) and slightly surpasses Anthropic’s Claude 4 Opus (83.3%).

The latest AI reasoning models are starting to saturate these challenging benchmarks (AIME 2024, GPQA, Codeforces). With improvements this incremental, o3-pro doesn’t really stand out on benchmarks alone. However, OpenAI shared a comparison of o3-pro with o3 as rated by human testers, and o3-pro was preferred over o3 64% of the time:

In expert evaluations, reviewers consistently prefer o3-pro over o3 in every tested category and especially in key domains like science, education, programming, business, and writing help. Reviewers also rated o3-pro consistently higher for clarity, comprehensiveness, instruction-following, and accuracy.

OpenAI also touted o3-pro’s improvement over o3 on "4/4 reliability" evaluations of the same benchmarks; under 4/4 reliability, a model passes a question only if it answers correctly in all four of four independent attempts.
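To make the 4/4 rule concrete, here is a minimal Python sketch comparing it with ordinary single-attempt accuracy. The function names and the three-question result set are hypothetical illustrations, not OpenAI’s evaluation code or data.

```python
from typing import Sequence

# Hypothetical helpers illustrating the "4/4 reliability" rule; not OpenAI's eval harness.


def passes_4_of_4(attempts: Sequence[bool]) -> bool:
    """A question counts as solved only if all four independent attempts are correct."""
    if len(attempts) != 4:
        raise ValueError("4/4 reliability needs exactly four attempts per question")
    return all(attempts)


def reliability_4_of_4(results: Sequence[Sequence[bool]]) -> float:
    """Fraction of questions answered correctly on every one of the four attempts."""
    return sum(passes_4_of_4(r) for r in results) / len(results)


def mean_accuracy(results: Sequence[Sequence[bool]]) -> float:
    """Ordinary accuracy: fraction of all individual attempts that were correct."""
    total = sum(len(r) for r in results)
    correct = sum(sum(r) for r in results)
    return correct / total


# Made-up results for three questions, four attempts each.
results = [
    [True, True, True, True],    # correct every time -> passes 4/4
    [True, True, True, False],   # one miss -> fails 4/4
    [False, True, True, True],   # one miss -> fails 4/4
]
print(f"4/4 reliability: {reliability_4_of_4(results):.3f}")  # 0.333
print(f"mean accuracy:   {mean_accuracy(results):.3f}")       # 0.833
```

A model that is right most of the time can still score poorly under 4/4, so gains on this metric point to more consistent answers rather than just higher peak accuracy.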

The o3-pro model understands how to use tools in the reasoning process:

Like o3, o3-pro has access to tools that make ChatGPT useful—it can search the web, analyze files, reason about visual inputs, use Python, personalize responses using memory, and more.

As for limitations, o3-pro cannot generate images and doesn’t support Canvas.

Availability and Cost

The o3-pro model is now available to ChatGPT Pro and Team users, replacing the previous o1-pro model. Enterprise and Edu users are set to receive access shortly. Developers can use o3-pro via the OpenAI API. ChatGPT Plus users currently have no access, and there is no word yet on future access for them.

The API pricing for o3-pro is $20 per million input tokens and $80 per million output tokens.

Alongside the o3-pro launch, OpenAI cut o3 API pricing by 80%, bringing costs down to $2 input and $8 output per million tokens. This brings the per-token cost of o3 in line with Gemini 2.5 Pro. Since o3 usually burns fewer reasoning tokens than Gemini 2.5 Pro, this may make o3 cheaper in practice for some developers and users.
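A quick Python sketch of the per-request arithmetic, using the per-million-token prices quoted above. The token counts are hypothetical; in practice o3-pro tends to spend more reasoning tokens than o3 on the same prompt, which would widen the gap further.

```python
# Per-million-token API prices in USD, as quoted in this article at launch.
PRICES_PER_MILLION = {
    "o3-pro": {"input": 20.00, "output": 80.00},
    "o3": {"input": 2.00, "output": 8.00},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one API call.

    output_tokens should include hidden reasoning tokens, since OpenAI bills
    reasoning tokens at the output-token rate.
    """
    price = PRICES_PER_MILLION[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


# Hypothetical workload: 10k tokens of context in, 20k tokens (answer + reasoning) out.
for model in ("o3-pro", "o3"):
    cost = request_cost(model, input_tokens=10_000, output_tokens=20_000)
    print(f"{model}: ${cost:.2f}")
# o3-pro: $1.80
# o3: $0.18
```

At identical token counts, o3-pro costs ten times as much as o3 per request, matching the 10x price ratio.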

The AI IQ Test

Artificial Analysis shows o3-pro is the smartest AI in the world overall, although it’s a close race with Gemini 2.5 Pro and o4-mini for the top spot.

The ARC-AGI test is the closest thing to an actual intelligence test for AI models. Even though o3-pro chews up more tokens thinking longer than o3, it does no better than o3 on the ARC-AGI test. On price-performance, o3-pro is far worse than o3 or o4-mini; it costs 10 times as much per task for no better results.

Vibe Check - Slow Speed, Deep Thoughts

Because o3-pro has access to tools, responses typically take longer than o1-pro to complete. We recommend using it for challenging questions where reliability matters more than speed, and waiting a few minutes is worth the tradeoff. - OpenAI

The overall impression is that the o3-pro model is "extremely slow" but also "very thorough" and excels at research.

One of the immediate reactions to o3-pro was how slow it is, taking as long as 15 minutes to respond to some queries: “and very, very, very slow. 15 minute responses.”

The Feature Crew’s YouTube review shared this mixed view of o3-pro: it has powerful and useful capabilities, especially in business analysis, but its slow thinking process makes it impractical for interactive use cases:

o3-pro spent 15 minutes thinking on their SimCity coding challenge but produced a result similar to that of o3 and other leading models.

o3-pro failed to find online data about recent AI models, but its market analysis was thoughtful and practical, “potentially the most useful analysis we've seen.”

Their conclusion is that o3-pro is insightful, but it may not be worth the $200-per-month Pro subscription for most users.

Everyone was hyped about o3-pro. But honestly? It didn’t live up to the buzz especially when it comes to coding tasks that need some autonomy. o3-high still holds its ground better there. Sometimes, more “reasoning” just leads to overcomplication.

Hao AI Lab at UCSD notes that o3-pro is much better at playing Tetris than o3.

Superficial notes that o3-pro is more hallucination-prone than other leading models:

“o3 Pro hallucinates 18% of the time, compared to 8.5% for Gemini 2.5 Pro and 9.5% for Claude Opus 4.”

The Cost of Over-Analyzing

On a personal note, please excuse the delay in publishing this article. AI moves so fast that the Tuesday release of o3-pro is old news by Friday. I had this article mostly written, but a personal matter distracted me from completing it. I was researching options for a car purchase, but I spent so much time analyzing alternatives that I mismanaged my time rather than optimizing the purchase.

My distracting activity is a great metaphor for the challenge with o3-pro. The o3-pro model thinks long and hard, sometimes too much for its own good.

Best Use Cases for o3-pro

The o3-pro model is not a chat model; o3-pro is a slow, deep thinker that takes a long time to respond. It’s clear o3-pro is not the best AI model for every use case, so the question is how and where to use o3-pro for the best results.

Use it for general thinking about hard problems, and give it plenty of context and information to support a deep dive.

For example, Riley Coyote (Riley Ralmuto) gave o3-pro a code repository to analyze, and it ripped through it:

I sent o3-pro a link to a random repo I have with a whole bunch of random things, simulators, chatbot apps, custom cli's, papers, etc. I asked it to review the codebase and make sense of it.

I wasn’t even sure it would listen and actually visit the link. It not only visited the link, it analyzed the code of the two main artifacts in there and even gave me tips on how to improve one of the sims based on what it saw in its code (it even listed the particle count).

Ben Hylak’s review of o3-pro, “God is hungry for Context: First thoughts on o3 pro,” describes the strengths and challenges of o3-pro. He had trouble distinguishing o3-pro from o3 on tasks, and he found that o3-pro could be prone to ‘overthinking’ without sufficient context.

However, he found a real-world use case where o3-pro excelled. He had o3-pro analyze his company’s business by giving it the company’s business planning meetings as context:

We were blown away; it [o3-pro] spit out the exact kind of concrete plan and analysis I’ve always wanted an LLM to create — complete with target metrics, timelines, what to prioritize, and strict instructions on what to absolutely cut.

The plan o3 gave us was plausible, reasonable; but the plan o3 Pro gave us was specific and rooted enough that it actually changed how we are thinking about our future.

The key lesson Ben Hylak gleaned is that o3-pro needs context, and the more the better, to give detailed, thoughtful, grounded responses. That prompting and context matter shouldn’t surprise us. Not long ago, we needed to tell AI models to “think step by step” to get them to think. o3-pro can think deeply, but it needs the right context to have something to think deeply about.

Julian Goldie suggests using o3-pro for complex mathematical problems, advanced scientific questions, and other tasks requiring deep reasoning.

Examples of what not to use o3-pro for: fact-based queries are better handled by something like AI Mode in Google Search, which combines AI with fast, strong search. While o3-pro can single-shot generate some programs at a high level, code generation in an interactive coding assistant is better done with faster and cheaper models such as Claude 4 Sonnet, Devstral, or Gemini 2.5 Pro.

Conclusion

Even though o3-pro is arguably the smartest AI model out there, it’s not for everyone or every task. You may want o3-pro to architect your systems or develop business strategies, but you’ll want a different AI model for interactively implementing code, systems, and marketing content.

o3-pro is strong at thinking deeply about the toughest research and analysis questions. If you do give it such questions, feed it enough context to get a thorough, detailed, grounded result. That said, its output may differ only marginally from o4-mini and o3 on most research questions, since all three are almost equally strong.

While o3-pro is an interesting and powerful AI model offering unique insights for some in-depth queries, there is no strong reason to get a ChatGPT Pro account just to access o3-pro. Those without access to o3-pro can make do with o4-mini and o3 for research questions, leveraging Deep Research for more extended queries and problems.