OpenAI releases GPT-4.1, o3 and o4-mini

OpenAI refreshes its AI model lineup with GPT-4.1, GPT-4.1 mini, and GPT-4.1 nano, and releases its smartest AI reasoning models yet: o3 and o4-mini.

Introducing GPT-4.1, o3 and o4-mini

OpenAI’s CEO Sam Altman has been hinting at upcoming releases in recent weeks, and mystery models that looked like OpenAI models have been floating around. When OpenAI released three GPT-4.1 models in its API (GPT-4.1, GPT-4.1 mini, and GPT-4.1 nano), the release was both expected and surprising.

The surprise was that the GPT-4.1 models are not the AI reasoning models OpenAI had hinted at, and that they are available only through the API. The GPT-4.1 models are a step up from GPT-4o, aimed at being a competitive drop-in replacement for the enterprise users and developers who rely on GPT-4o via the API in their AI applications.

Then, two days later, OpenAI released the o3 and o4-mini AI reasoning models. The release itself was expected, but the stellar performance of both o3 and o4-mini on a range of reasoning, math, and coding benchmarks surprised us. The o3 and o4-mini AI models are not just state-of-the-art; they have saturated key hard benchmarks and set new standards in combining AI reasoning, multimodality, and tool use.

OpenAI has refreshed their AI model lineup to be as competitive as ever.

GPT-4.1 Performance and Features

The three GPT-4.1 models (GPT-4.1, GPT-4.1 mini, GPT-4.1 nano) all support a context window of up to 1 million tokens and show near-SOTA performance at a reasonable cost and latency. Both GPT-4.1 and GPT-4.1 mini improve over GPT-4o on overall benchmarks, with GPT-4.1 significantly outperforming GPT-4o on GPQA (66%) and AIME 2024 (48.1%).

The GPT-4.1 models were trained to follow instructions more reliably. GPT-4.1 is also strong in visual understanding, scoring 74.8% on MMMU and setting a new SOTA on long video understanding.

OpenAI shared various benchmarks in detail and also touted real-world domain-specific and enterprise use case results from various companies, including results in coding, code reviews, tax scenarios, and long context understanding.

Compared with competitors’ frontier AI models, GPT-4.1 and GPT-4.1 mini are competitive with the best but are not SOTA. The GPT-4.1 models improve over GPT-4o in coding, closing the gap with competitor AI models like Claude 3.7 Sonnet and Gemini 2.5 Pro. Still, Gemini 2.5 Pro, a reasoning model, retains its lead over non-reasoning AI models like GPT-4.1.

Better instruction following, longer context, stronger coding chops, and better pricing make GPT-4.1 far more competitive as an API model than GPT-4o was.

GPT-4.1 has flaws. It lacks audio understanding. And like all non-reasoning AI models, it struggles with hard math, coding, reasoning, and complex multi-step queries.

GPT-4.1 – Responding to Competition

In his YouTube review of GPT-4.1, Sam Witteveen said:

“These models are not for you. They are the catchup models.”

He’s right. These models are not for you; they are for OpenAI’s corporate user base.

Enterprise AI users are OpenAI’s bread and butter. OpenAI built up a huge lead in enterprise LLM use thanks to GPT-4’s dominance in 2023 and 2024, but Google’s Gemini and Anthropic’s Claude are chipping away at that lead with each new release. Gemini 2.5 Pro and Claude 3.7 Sonnet are superior to GPT-4o.

OpenAI’s release of GPT-4.1 is a response to that competition, delivering an AI model with better price-performance and coding ability for the enterprise. This is why it has been released as an API-only model.

The GPT-4.1 models are attractive options if you use AI models through an API, for example in AI coding assistants like Roo Code or Cline. In particular, GPT-4.1 mini provides excellent coding performance at a competitive price, putting it in the same class as cost-effective AI models like Gemini 2.0 Flash.

The new state-of-the-art: o3 and o4-mini

OpenAI o3 is our most powerful reasoning model that pushes the frontier across coding, math, science, visual perception, and more. It sets a new SOTA on benchmarks including Codeforces, SWE-bench (without building a custom model-specific scaffold), and MMMU. It’s ideal for complex queries requiring multi-faceted analysis and whose answers may not be immediately obvious. It performs especially strongly at visual tasks like analyzing images, charts, and graphics. - OpenAI

OpenAI’s newly released o3 and o4-mini set a new standard of stellar AI reasoning performance.

The o3 model is a large-scale AI reasoning model tailored for high-precision tasks in coding, mathematics, and scientific analysis. o3 achieves state-of-the-art performance across a range of benchmarks: 91.6% on AIME 2024 and 83.3% on GPQA Diamond. On coding, o3 reaches a Codeforces Elo rating of 2706, 69.1% on SWE-bench Verified, and 81.3% on Aider Polyglot.

In visual understanding, it scores 82.9% on MMMU. It also excels at instruction following, function calling, and agentic browsing. This is not just a math, coding, and reasoning whiz, but an all-around agentic AI model.

The o4-mini model likewise offers stellar performance in math, code, and vision tasks in a smaller, more efficient model. While o3 is superior overall, o4-mini is consistently close to o3 and occasionally outperforms it. For example, o4-mini achieves a SOTA score of 93.4% on AIME 2024.

In evaluations by external experts, o3 makes 20 percent fewer major errors than OpenAI o1 on difficult, real-world tasks — especially excelling in areas like programming, business/consulting, and creative ideation. Early testers highlighted its analytical rigor as a thought partner and emphasized its ability to generate and critically evaluate novel hypotheses—particularly within biology, math, and engineering contexts.

Agentic Reasoning with Tools

OpenAI’s o3 and o4-mini mark a new milestone in AI reasoning. AI reasoning models are designed to think longer, deliberating internally before producing output. Since OpenAI introduced the o1 reasoning model, we have seen a new scaling law at work: reasoning ability improves as both reinforcement learning (RL) training for reasoning and test-time compute are scaled up.

These releases have scaled RL-based reasoning training further than ever. There is no wall yet, and it’s likely there is more headroom to advance reasoning further.

Yet o3 and o4-mini don’t just advance raw intelligence in processing tokens. Their thinking now extends to using tools, running code, pulling in external information from the web, and even working with images:

For the first time, our reasoning models can agentically use and combine every tool within ChatGPT, including web search, Python, image analysis, file interpretation, and image generation. - OpenAI

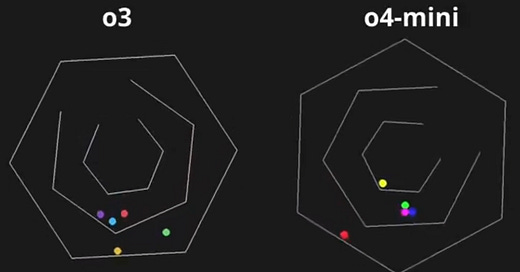

When reasoning about images, o3 and o4-mini can transform them (cropping, zooming, and rotating) as part of their visual chain-of-thought reasoning.

Both o3 and o4-mini have full tool access, including web browsing, Python code execution, file and image analysis, and image generation. With these tools, they can perform complex multi-step mathematical derivations, solve software engineering problems, and visualize data.

The models also reason with tools: they autonomously sequence tool calls, plan steps, run code for verification, and iterate on intermediate results to produce high-quality answers, invoking tools dynamically based on the prompt’s context. OpenAI used RL to train this capability:

We also trained both models to use tools through reinforcement learning—teaching them not just how to use tools, but to reason about when to use them. Their ability to deploy tools based on desired outcomes makes them more capable in open-ended situations — particularly those involving visual reasoning and multi-step workflows. - OpenAI

By thinking agentically, o3 and o4-mini can tackle increasingly complex tasks, unlocking AI agent capabilities that earlier models lacked.
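OpenAI has not published how o3 and o4-mini orchestrate tools internally, so the following is only a generic sketch of the kind of loop described above: the model picks an action, the runtime executes the tool, and the result is fed back until the model commits to an answer. `scripted_model`, `run_agent`, and the `multiply`/`floordiv` tools are hypothetical; a real reasoning model decides its own steps rather than following a hard-coded script.

```python
# Illustrative tool-use loop. NOT OpenAI's implementation: the "model" is a
# hypothetical scripted stub, and both tools are invented for this example.
from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class ToolCall:
    tool: str
    args: tuple[int, int]


@dataclass
class FinalAnswer:
    text: str


Action = Union[ToolCall, FinalAnswer]
Message = tuple[str, str]  # (role, content)

# Hypothetical tools standing in for something like a Python sandbox.
TOOLS: dict[str, Callable[[int, int], int]] = {
    "multiply": lambda a, b: a * b,
    "floordiv": lambda a, b: a // b,
}


def scripted_model(history: list[Message]) -> Action:
    """Hypothetical stand-in for the model: picks the next step from the transcript."""
    results = [int(content) for role, content in history if role == "tool"]
    if not results:
        return ToolCall("multiply", (17, 23))          # plan: compute the product
    if len(results) == 1:
        return ToolCall("floordiv", (results[0], 23))  # verify: divide back out
    verified = results[1] == 17
    return FinalAnswer(f"17 * 23 = {results[0]} (verified: {verified})")


def run_agent(model: Callable[[list[Message]], Action], task: str,
              max_steps: int = 5) -> tuple[str, list[Message]]:
    """Let the model call tools until it returns a final answer."""
    history: list[Message] = [("user", task)]
    for _ in range(max_steps):
        action = model(history)
        if isinstance(action, FinalAnswer):
            history.append(("assistant", action.text))
            return action.text, history
        result = TOOLS[action.tool](*action.args)  # runtime executes the tool
        history.append(("tool", str(result)))      # result is fed back to the model
    raise RuntimeError("no final answer within max_steps")


if __name__ == "__main__":
    answer, history = run_agent(scripted_model, "What is 17 * 23?")
    print(answer)  # 17 * 23 = 391 (verified: True)
```

The second tool call plays the role of "running code for verification": the stub divides the product back out before answering. The `max_steps` cap is a simple guard against a model that never commits to an answer.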

Pricing and Efficiency – o4-mini shines

ChatGPT users can already use o3 and o4-mini. OpenAI is replacing older reasoning models with the more capable o3 and o4-mini models across its paid ChatGPT tiers:

ChatGPT Plus, Pro, and Team users will see o3, o4-mini, and o4-mini-high in the model selector starting today, replacing o1, o3-mini, and o3-mini-high. ChatGPT Enterprise and Edu users will gain access in one week. Rate limits across all plans remain unchanged from the prior set of models. We expect to release o3-pro in a few weeks with full tool support.

For API usage, o4-mini is priced at $1.10 per million input tokens and $4.40 per million output tokens, well below o3, positioning it as an economical choice. o3 costs almost 10 times as much as o4-mini yet delivers only marginal reasoning gains, so o3 may be worth it only for the hardest tasks.
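To make the price gap concrete, here is a minimal cost-estimate sketch. The o4-mini rates are the ones quoted above; the o3 rates ($10 input and $40 output per million tokens) are an assumption based on OpenAI’s launch list prices, which this article does not state and which may have changed. The workload (10,000 input tokens, 2,000 output tokens) is made up, and the sketch assumes hidden reasoning tokens are billed as output tokens.

```python
# Sketch: per-request API cost from per-million-token prices.
# o4-mini prices are from the article; o3 prices are an ASSUMPTION
# (launch list prices, not stated in the article) and may have changed.
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    input_per_m: float   # USD per 1M input tokens
    output_per_m: float  # USD per 1M output tokens (assumed to include reasoning tokens)


O4_MINI = Pricing(input_per_m=1.10, output_per_m=4.40)
O3 = Pricing(input_per_m=10.00, output_per_m=40.00)  # assumption, see above


def request_cost(p: Pricing, input_tokens: int, output_tokens: int) -> float:
    """USD cost of one request at the given per-million-token prices."""
    return (input_tokens * p.input_per_m + output_tokens * p.output_per_m) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 10,000 prompt tokens, 2,000 output tokens.
    for name, p in [("o4-mini", O4_MINI), ("o3", O3)]:
        print(f"{name}: ${request_cost(p, 10_000, 2_000):.4f}")
    ratio = request_cost(O3, 10_000, 2_000) / request_cost(O4_MINI, 10_000, 2_000)
    print(f"o3 / o4-mini cost ratio: {ratio:.2f}x")
```

Under these assumed prices, both o3 rates are about 9.1 times the o4-mini rates, so the cost ratio stays the same for any mix of input and output tokens, consistent with the "almost 10 times" figure. In practice, reasoning models can emit many hidden reasoning tokens, so output-token counts for the same task may differ between the two models.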

The Coding CLI

Complementing these models, OpenAI released Codex CLI, a lightweight open‑source terminal‑based coding agent that leverages o3 and o4‑mini for local software development. This can be viewed as an alternative and competitor to Anthropic’s Claude Code.

Additionally, o3 and o4-mini are integrated into GitHub Copilot and GitHub Models, allowing developers to experiment with and deploy AI features alongside other leading models. OpenAI may be getting into AI coding assistant development more directly, as it is reportedly in talks to buy AI coding assistant maker Windsurf for $3B.

Conclusion – On to GPT-5

GPT-4.5, a non-reasoning AI model, dropped two months ago as a placeholder for the long-awaited GPT-5. It didn’t fare well. Last month, Sam Altman announced a delay for GPT-5, but he promised to release o3 in the meantime. This week, OpenAI delivered: three GPT-4.1 models (GPT-4.1, GPT-4.1 mini, and GPT-4.1 nano) as well as the o3 and o4-mini AI reasoning models.

o3 and o4-mini are great SOTA AI models. By combining advanced chain-of-thought reasoning, full tool integration, and multimodal capabilities, they set a new standard for AI reasoning model performance and versatility. The o4-mini model in particular balances incredible AI reasoning performance with reasonable cost and extensibility.

The combination of reasoning and agentic behavior, including tool use, is the most important new capability in these AI models, perhaps the most important milestone in the evolution of AI models since o1 itself.

The GPT-4.1, o3, and o4-mini AI models all have a knowledge cutoff of May 31, 2024. We can only speculate on what’s under the hood, since OpenAI is opaque about its AI training process, model sizes, and model lineage. However, it’s likely these new AI models are based on GPT-5 pre-training checkpoints and that OpenAI is releasing iteratively improved AI models as stepping-stones to the full GPT-5.

We can anticipate that GPT-5 will further scale pre-training and RL-for-reasoning to support better reasoning and AI agentic capabilities, getting AI ever closer to AGI.