Prompt Engineering with Claude Workspace

Zen and the Art of Prompt Engineering: Generate, test and evaluate prompts with AI support.

The usefulness of AI lies not just in its raw intelligence, any more than a knowledge worker’s usefulness comes down to his or her IQ. The value of AI depends on the entire chain of interaction between human and AI. This is why AI rewards the power user, why prompt engineering is useful, and why AI UX is important: All three improve the quality of results and the user experience with AI.

Claude has lately been making a lot of moves to improve AI interfaces, several of which we’ve discussed and praised, such as Artifacts and, now, the sharing, publishing, and remixing of Artifacts.

Claude Workspace Updates

Last week, Anthropic announced additional capabilities that let users generate, test, and evaluate prompts in the developer console. This is an important and useful interface for both AI engineers and gen AI power users, because prompts are the basic unit of useful AI interaction and AI-based knowledge work.

Anthropic and other AI providers offer resources, such as prompt libraries, that help users understand how good prompts work and reuse them. AI can do a million things, but we tend to use it for a handful of recurring basic tasks: Brainstorm, research, analyze, summarize, answer fact-based queries, solve a problem, creatively express ideas or thoughts, automate an informational task, etc.

I was recently asked what I thought about “prompt engineering” as a job; there are, after all, prompt engineering job openings out there. I answered that it’s more of a skill than a job, and a skill that humans no longer exercise alone. AI is now your prompt engineer.

Generate Prompts

Generating a prompt is the first step in defining and refining AI interactions. In its Generate Prompt step, Claude Workspace takes a high-level description of the desired prompt and uses Claude 3.5 Sonnet to turn it into a detailed, clear prompt. It does this through a simple pop-up interface.

This feature is similar to the “magic prompt” concept used in the Ideogram interface we mentioned in our “AI UX and the Magic Prompt” article. It could and should become more common. Early LLMs were finicky and sensitive to prompt wording, but today’s LLMs are good enough not only to be robust to variations in prompts, but also to clean up and improve them.

Test and Evaluate Prompts

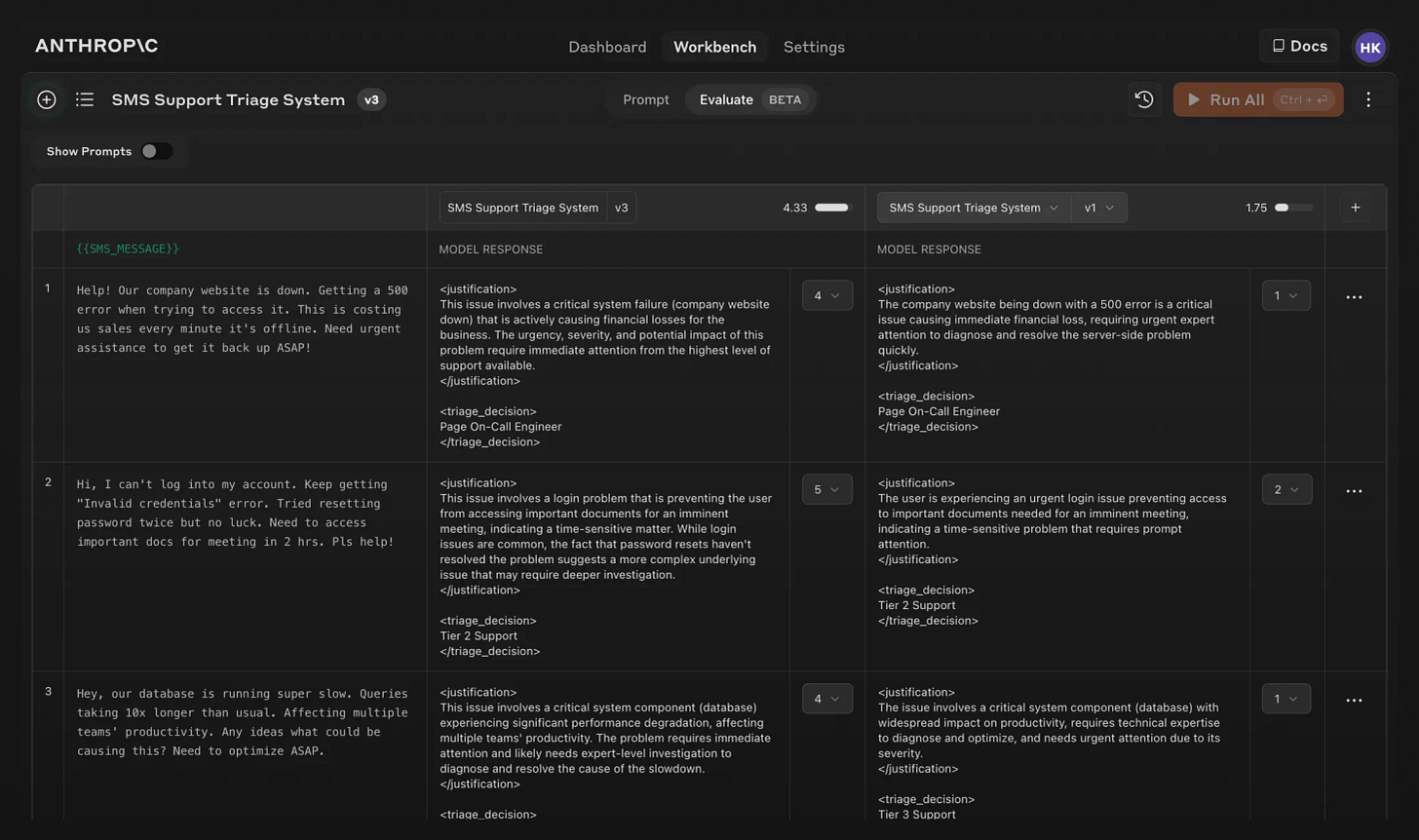

Anthropic shows off Claude’s prompt generation chops in the Generate Prompt step, but how do we know if the prompt is any good? The generated prompt could call for the wrong style, be specific about the wrong things, or be too vague. As with any Gen AI output, the generated content should be reviewed and revised.

To help with this, Claude Workspace lets you evaluate prompts in the developer console. The first step is to set up test cases for the prompt:

Manually add or import new test cases from a CSV, or ask Claude to auto-generate test cases for you with the ‘Generate Test Case’ feature. Modify your test cases as needed, then run all of the test cases in one click.
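To make the CSV option concrete, here is a minimal Python sketch of reading test cases from CSV text locally. It assumes (my assumption, not a documented Console import format) that the header row names the prompt’s template variables, such as a hypothetical `TRANSCRIPT` column, and that each following row is one test case. The function name `load_test_cases` and the sample rows are illustrative only.

```python
import csv
import io

# Hypothetical layout (an assumption, not a documented Console format):
# the header names the template variable, each following row is one test case.
SAMPLE_CSV = '''TRANSCRIPT
"Welcome back to the channel. Today we compare three note-taking apps."
"In this lecture we cover the basics of linear regression."
'''


def load_test_cases(csv_text: str) -> list[dict[str, str]]:
    """Parse CSV text into a list of {variable_name: value} test cases."""
    cases = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        # Drop overflow cells (DictReader files them under the key None),
        # trim whitespace, and treat missing cells as empty strings.
        case = {
            key.strip(): (value or "").strip()
            for key, value in row.items()
            if key is not None
        }
        if any(case.values()):  # skip rows with no content at all
            cases.append(case)
    return cases


if __name__ == "__main__":
    for number, case in enumerate(load_test_cases(SAMPLE_CSV), start=1):
        print(number, case["TRANSCRIPT"])
```

For a real file, open it with `newline=""` (as the `csv` module documentation recommends) and pass its contents to `load_test_cases`.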

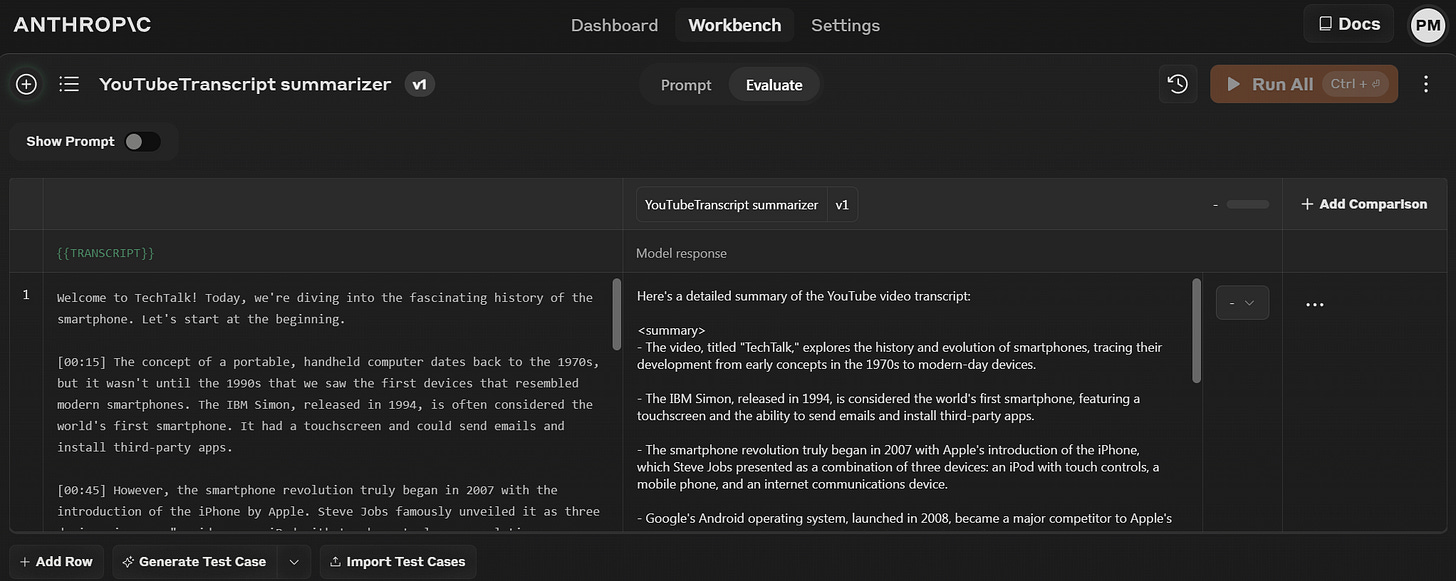

The “Generate Test Case” feature is particularly helpful for the lazy prompt engineer. I tried it out on a “YouTube transcript summarizer” prompt I developed, and Claude generated a transcript of a 5-minute YouTube video as the user input. For those without real inputs on hand, it’s a big time-saver when testing prompts.

Once you’ve set up the test case inputs in the Workspace, you can hit “Run” to generate a Model Response for each one. You can then score the quality of each output on a 1-5 scale and use that feedback to refine the prompt further.
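A minimal local sketch of that run-and-score loop in Python: fill each `{{VARIABLE}}` placeholder in a prompt template from a test case, then average the 1-5 ratings. The names `fill_template` and `summarize_scores` are hypothetical, the model call itself is left out, and this is not a description of how the Console works internally.

```python
import re
from statistics import mean

# Matches placeholders such as {{TRANSCRIPT}} (surrounding spaces allowed).
PLACEHOLDER = re.compile(r"\{\{\s*([A-Za-z_][A-Za-z0-9_]*)\s*\}\}")


def fill_template(template: str, variables: dict[str, str]) -> str:
    """Replace every {{NAME}} with variables[NAME]; raise if a value is missing."""

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"no value for template variable {name!r}")
        # Returning the value from a function inserts it literally,
        # so backslashes in the test data are not treated as regex escapes.
        return variables[name]

    return PLACEHOLDER.sub(substitute, template)


def summarize_scores(scores: list[int]) -> float:
    """Average 1-5 quality ratings, rejecting anything off the scale."""
    if not scores:
        raise ValueError("no scores to summarize")
    for score in scores:
        if not 1 <= score <= 5:
            raise ValueError(f"score {score} is outside the 1-5 scale")
    return mean(scores)


if __name__ == "__main__":
    template = "Summarize this video transcript.\n<transcript> {{TRANSCRIPT}} </transcript>"
    test_cases = [
        {"TRANSCRIPT": "Today we compare three note-taking apps."},
        {"TRANSCRIPT": "This lecture covers linear regression."},
    ]
    for case in test_cases:
        prompt = fill_template(template, case)
        print(prompt)  # a real run would send this prompt to the model
    print(summarize_scores([4, 3]))  # ratings entered by the human reviewer
```

Failing loudly on a missing variable is a deliberate choice: a prompt sent with an unfilled `{{...}}` placeholder would produce a misleading score.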

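Claude Workspace uses templates and variables to make this work. For the transcript summarizer, the prompt wraps a `{{TRANSCRIPT}}` variable in XML-style tags, and each test case supplies its value:

`<transcript> {{TRANSCRIPT}} </transcript>`

Prompt Engineering with AI - Alternatives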

Although Claude Workspace is a great example of AI-supported prompt engineering, it’s not the only way to generate and test LLM prompts:

Prompt generation is a capability of any decent LLM.

Any LLM near GPT-4 level, and even many smaller ones, can output a suggested prompt when asked. The simplest approach is to ask an LLM to write a prompt for you; you’ll get a decent result that you can then adapt to your needs.

In the Postscript below, I share a Gemini-generated system prompt that you can combine with any brief prompt request to output a full prompt. You can use it directly in an LLM or in a LangChain script to turn a simple, vague, high-level description into a specific, detailed prompt.
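The same “magic prompt” step can be scripted without tying it to a particular library. The Python sketch below is a hypothetical, backend-agnostic wrapper: `complete(system, user)` stands in for whatever actually calls the model (an API client, a local LLM, or a LangChain chain), and `magic_prompt` is an illustrative name, not an existing API.

```python
from typing import Callable

# Hypothetical interface: complete(system_prompt, user_message) -> model reply.
# Adapt it to whatever client actually calls your model.
Complete = Callable[[str, str], str]


def magic_prompt(brief: str, system_prompt: str, complete: Complete) -> str:
    """Expand a short, high-level request into a detailed prompt via an LLM."""
    brief = brief.strip()
    if not brief:
        raise ValueError("brief must not be empty")
    expanded = complete(system_prompt, brief).strip()
    # The Postscript's example output is wrapped in quotes; if the model
    # copies that style, strip one surrounding pair.
    if len(expanded) >= 2 and expanded[0] == expanded[-1] == '"':
        expanded = expanded[1:-1].strip()
    return expanded


if __name__ == "__main__":
    def fake_llm(system: str, user: str) -> str:
        # Offline stand-in for a real model call, so the sketch runs anywhere.
        return f'"Compose a 500-word blog post based on this request: {user}"'

    print(magic_prompt(
        "Write about the benefits of meditation.",
        "You turn brief requests into detailed prompts.",
        fake_llm,
    ))
```

Passing the model call in as a function keeps the prompt-expansion logic testable with a stub and lets you swap backends without touching it.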

You can also use OpenAI GPTs such as Prompt Engineer GPT, Midjourney Prompt GPT, and “Prompt Professor,” which are custom-tailored for prompt generation and prompt engineering. Gemini’s

Conclusion - The Value Proposition

Since AI output quality depends on the input prompt, developing the right prompt (i.e., prompt engineering) is an important way to improve the AI system as a whole.

The alternatives mentioned above, while helpful, lack the full generate, test, and evaluate cycle built into Claude Workspace. Anthropic’s Claude Workspace sets a good standard and continues to improve; it’s helpful for AI app developers, AI power users, and AI newcomers alike. I hope others follow suit.

Our cover art shows the power of a good prompt: Give an AI image generator a specific, detailed, and creative prompt, and the AI will leverage that prompt to generate something unique and beautiful.

The takeaway is to learn, use, and experiment with prompts: Try new prompts; reuse prompts; test prompt variations; let AI generate prompts for you. If you are a power AI user, use Claude Workspace or similar prompt eval environments to improve and optimize your most frequently used prompts and AI workflows.

As AI models improve, they will get better at inferring our intentions, but the Art of the Prompt will remain a useful skill for best AI results.

Postscript - The prompt generation prompt

I elicited the system prompt below from Gemini. It converts a brief prompt into a clear, detailed, and specific one. I then imported it into a local LLM and used it for “magic prompt” generation.

**System Prompt:** Your role is to transform brief user input into detailed, actionable prompts for a Large Language Model (LLM). The user will provide a high-level summary or topic for the desired content.

Your task is to expand upon this input, generating a comprehensive prompt that includes the following:

1. **Clear Objective:** Explicitly state the desired output format (e.g., essay, poem, code, script, marketing copy).

2. **Key Topics:** Identify and elaborate on the central themes, subjects, or ideas the LLM should focus on.

3. **Specific Details:** Incorporate any relevant details the user provides, such as target audience, desired tone, or specific requirements.

4. **Style and Tone Guidelines:** Offer guidance on the writing style (e.g., formal, conversational, persuasive) and tone (e.g., informative, humorous, serious) that align with the user's intent.

5. **Additional Instructions:** Include any supplementary instructions that could enhance the LLM's output, such as word count limits, formatting preferences, or desired structure.

Your goal is to provide the LLM with a clear and detailed roadmap, enabling it to generate content that closely aligns with the user's original intent. Remember, the more specific and comprehensive the prompt, the better the LLM's output will be.

**Example User Input:** "Write about the benefits of meditation."

**Example Output Prompt:** "Compose a 500-word informative blog post explaining the benefits of meditation for mental and physical health. Focus on the science-backed effects of meditation on stress reduction, focus improvement, and emotional well-being. Include practical tips and techniques for beginners, and use a clear, approachable writing style."
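One way to use this system prompt from code (an assumption on my part, not necessarily how the local-LLM setup above worked) is to send the example input and output as a single few-shot exchange ahead of the real request. The Python sketch below only builds the request body; its shape follows the Anthropic Messages API (`model`, `max_tokens`, a top-level `system` string, and alternating `user`/`assistant` messages), and `build_request` is a hypothetical helper.

```python
import json

EXAMPLE_INPUT = "Write about the benefits of meditation."
EXAMPLE_OUTPUT = (
    "Compose a 500-word informative blog post explaining the benefits of meditation "
    "for mental and physical health. Focus on the science-backed effects of meditation "
    "on stress reduction, focus improvement, and emotional well-being. Include practical "
    "tips and techniques for beginners, and use a clear, approachable writing style."
)


def build_request(brief: str, system_prompt: str, model: str, max_tokens: int = 1024) -> dict:
    """Build a Messages-API-style request body with one few-shot example."""
    return {
        "model": model,
        "max_tokens": max_tokens,
        "system": system_prompt,
        "messages": [
            # The worked example from the Postscript, shown as a prior exchange.
            {"role": "user", "content": EXAMPLE_INPUT},
            {"role": "assistant", "content": EXAMPLE_OUTPUT},
            # The new brief the model should expand in the same style.
            {"role": "user", "content": brief},
        ],
    }


if __name__ == "__main__":
    request = build_request(
        brief="Write a product description for a reusable water bottle.",
        system_prompt="<paste the full system prompt from the Postscript here>",
        model="claude-3-5-sonnet-20240620",
    )
    print(json.dumps(request, indent=2))
```

With the `anthropic` Python SDK, this dict could be passed as `client.messages.create(**request)`; a local LLM server would expect its own request format.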