Qwen Goes to the Max

Alibaba’s Qwen team has earned its status as a leading AI lab with a broad array of high-performing AI model releases, including their latest flagship, Qwen3-Max.

AI Goes Big

Lately there’s been a race to announce the biggest AI infrastructure bets. Nvidia struck a partnership with OpenAI to build 10 gigawatts of AI compute infrastructure, with plans to invest up to $100 billion in OpenAI. This complements the Stargate partnership OpenAI has with Oracle and others, which plans $500 billion in AI infrastructure.

Meanwhile, Elon Musk has drawn a line in the sand, claiming that xAI is getting there first.

While the US labs grab a lot of headlines and generate investor excitement, the Chinese AI labs have been making progress more quietly. One Chinese AI player in particular has been a steady workhorse: Alibaba’s Qwen team.

The Qwen team has been on a tear this summer, producing release after release of strong AI models: the Qwen3 suite of models, image editing models, a coding model, text-to-speech models, and this week, Qwen3-Max, a flagship AI model.

Alibaba’s Qwen team has been an underrated, overperforming AI lab for some time, but they are finally getting some notice. I suggested in my “Is AI a bubble?” article earlier this month:

“I’d bet on two under-rated AI companies: Alibaba, the maker of the Qwen AI models; and Google, about to reclaim the top AI model crown when they release Gemini 3.”

The call was timely. Both Google’s and Alibaba’s stock prices have surged this month on optimism around their AI businesses, as Wall Street investors have come around to seeing Alibaba as a real player in AI.

Alibaba Apsara Conference

Alibaba got the opportunity to showcase their AI and cloud infrastructure technology this week at Alibaba’s Apsara Conference 2025 in Hangzhou, China. This is their annual technology conference, similar to Google I/O or AWS re:Invent, where they highlighted Alibaba’s progress in cloud and AI to technology leaders, developers, customers, and investors.

CEO Eddie Wu and others at the conference positioned AI as central to Alibaba’s future, promoting their latest AI models, and detailing how AI demand has outstripped expectations.

Driving home this message, Alibaba announced key AI infrastructure initiatives, in particular an RMB 380 billion (about $53 billion) three-year investment in AI development and deployment. They also announced a strategic partnership with Nvidia to strengthen their compute capacity for model training and simulation, plus plans to expand their global data centers into Brazil, France, the Netherlands, and other countries.

More importantly for us, they announced their newest and best AI model yet, Qwen3-Max, as well as a host of other AI model releases and advances.

Qwen3-Max

The newly unveiled Qwen3-Max is a significant leap for Qwen AI models. It boasts over 1 trillion parameters, was pre-trained on 36 trillion tokens, and achieves remarkable scores on industry benchmarks, especially in code generation and agentic tool calling.

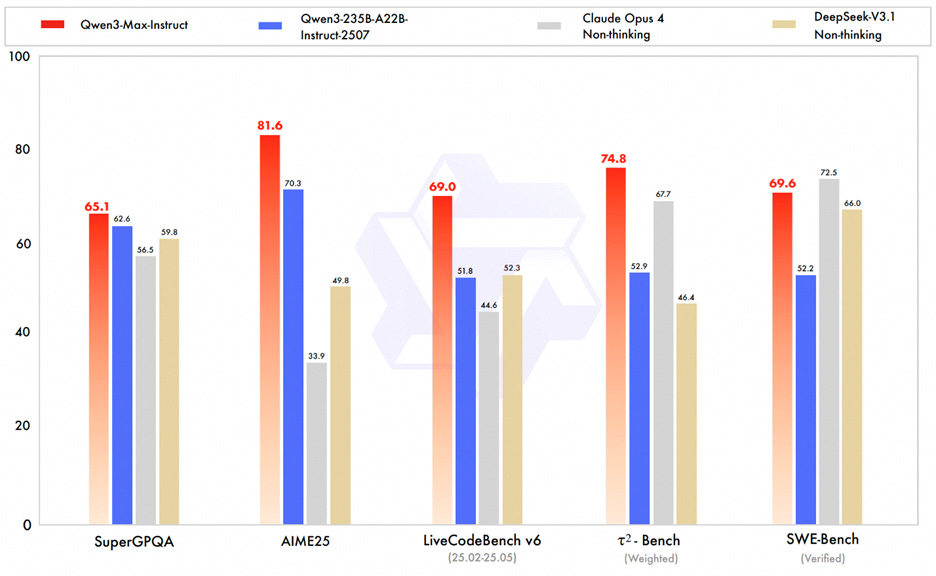

Qwen3-Max-Instruct is near SOTA on many measures. It scores 1430 on the LMArena leaderboard, on par with GPT-5 and just behind Claude Opus 4.1 and Gemini 2.5 Pro (at 1454). On the SWE-Bench Verified coding benchmark, it scores a near-SOTA 69.6%, and it posts a SOTA 74.8% on Tau2-Bench, a benchmark of agentic tool calling.

It performs near SOTA despite not being an AI reasoning model! Qwen3-Max-Instruct appears to be the most advanced non-thinking AI model yet, besting DeepSeek-V3.1 and non-thinking Claude Opus 4 on benchmarks.

Their reasoning model, Qwen3-Max-Thinking, is still in training and not yet released, but the Qwen team eagerly shared pre-release results: Qwen3-Max-Thinking scored 100% on AIME 2025 and HMMT 2025, saturating these difficult math benchmarks. On GPQA, Qwen3-Max-Thinking scores 85.4, close to the SOTA GPT-5 (at 89.4).

Qwen Pushes a Broad AI Lineup

While Qwen3-Max is their flagship new release, Qwen’s real strength is how they ship product after product and model after model. They seem relentless in providing a broad and deep lineup of AI capabilities. Here’s what they delivered this week during the Apsara Conference:

Qwen3-TTS-Flash is their top-of-the-line text-to-speech model, supporting multi-timbre, multilingual (10 languages), and multi-dialect speech synthesis. It achieves SOTA word error rates and highly expressive, human-like timbre, and Qwen claims superiority over competitors like MiniMax, ElevenLabs, and GPT-4o-Audio.

Qwen3Guard is Qwen’s safety guardrail model, their first model of this kind. Its purpose:

“Qwen3Guard ensures responsible AI interactions by delivering precise safety detection for both prompts and responses, complete with risk levels and categorized classifications for accurate moderation.”

The Qwen3Guard models come in 0.6B, 4B, and 8B sizes, are offered in generative and streaming variants, and are open models available via Hugging Face.
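To make “risk levels and categorized classifications” concrete, here is a minimal sketch of how an application might turn a Qwen3Guard verdict into a moderation decision. It assumes the generative model replies with lines such as `Safety: Unsafe` and `Categories: Violent`, modeled on the format described in the Qwen3Guard-Gen model card (check the current card before relying on it). The `GuardVerdict` class, `parse_guard_output`, `moderation_action`, and the allow/review/block policy are all hypothetical, and running the model itself is out of scope.

```python
import re
from dataclasses import dataclass, field
from typing import List

# ASSUMED output format, modeled on the Qwen3Guard-Gen model card:
#   "Safety: <Safe|Unsafe|Controversial>\nCategories: <category>[, <category>...]"
SAFETY_RE = re.compile(r"Safety:\s*(Safe|Unsafe|Controversial)")
CATEGORIES_RE = re.compile(r"Categories:\s*(.+)")  # '.' stops at end of line


@dataclass
class GuardVerdict:
    """Hypothetical container for one parsed guard verdict."""
    risk_level: str
    categories: List[str] = field(default_factory=list)


def parse_guard_output(text: str) -> GuardVerdict:
    """Extract the risk level and category labels from the guard's text reply."""
    safety = SAFETY_RE.search(text)
    if safety is None:
        raise ValueError("no 'Safety:' label found in guard output")
    cats = CATEGORIES_RE.search(text)
    raw = [c.strip() for c in cats.group(1).split(",")] if cats else []
    # Treat an explicit "None" category as no categories at all.
    return GuardVerdict(safety.group(1), [c for c in raw if c and c != "None"])


def moderation_action(verdict: GuardVerdict) -> str:
    """Hypothetical policy: allow Safe, send Controversial to review, block Unsafe."""
    return {"Safe": "allow", "Controversial": "review", "Unsafe": "block"}[verdict.risk_level]
```

For example, `moderation_action(parse_guard_output("Safety: Unsafe\nCategories: Violent"))` returns `"block"`. Mapping the middle “Controversial” level to human review, rather than to allow or block, is one reasonable policy choice; stricter or looser deployments could map it differently.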

Qwen also launched the Qwen3-VL series of vision-language models, leading with the open-source release of Qwen3-VL-235B-A22B in both non-thinking Instruct and Thinking versions. Qwen’s comprehensive benchmarks for both versions show the models achieving SOTA performance on visual benchmarks, outperforming even Gemini 2.5 Pro and GPT-5 on many visual question answering (VQA), document understanding, and spatial reasoning metrics.

Finally, Qwen released an updated Omni model, Qwen3-Omni, a natively multimodal model that understands text, images, and audio across multiple languages and outputs both text and audio. Qwen3-Omni uses the “Thinker-Talker” architecture, which first generates text (the Thinker) and then converts it to streaming speech tokens (the Talker).
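The streaming hand-off in a Thinker-Talker design can be illustrated with a toy Python generator pipeline. This is a conceptual sketch only, not Qwen3-Omni’s implementation: `thinker` and `talker` are hypothetical stand-ins, the “speech tokens” are fake strings, and the real model’s internals are far more involved. What it shows is the key property of a streaming design: the Talker can emit its first chunk of speech before the Thinker has finished generating text.

```python
from typing import Iterable, Iterator, List


def thinker(prompt: str) -> Iterator[str]:
    """Toy stand-in for the Thinker: streams text tokens (here, words of a canned reply)."""
    reply = f"You asked about {prompt}. Here is a short answer."
    for word in reply.split():
        yield word


def talker(text_tokens: Iterable[str], chunk_size: int = 3) -> Iterator[List[str]]:
    """Toy stand-in for the Talker: maps each text token to a fake speech token and
    emits a chunk as soon as it is full, without waiting for the Thinker to finish."""
    buffer: List[str] = []
    for token in text_tokens:
        buffer.append(f"<speech:{token}>")
        if len(buffer) == chunk_size:
            yield buffer
            buffer = []
    if buffer:  # flush any partial chunk once the Thinker is done
        yield buffer
```

Because both stages are generators, `next(talker(thinker("Qwen")))` pulls only three text tokens from the Thinker before yielding the first speech chunk; that lazy, chunked hand-off is what lets audio playback start early.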

Accessing Qwen AI Models

Qwen3-Max-Instruct can be accessed from Qwen Chat, which besides Qwen3-Max offers a variety of great AI tools: Qwen3-Coder; the Qwen3-VL-235B-A22B vision model; image generation and editing; and a deep research mode and web search similar to ChatGPT’s.

Developers and others who access AI models via APIs have more options. Amazon Bedrock now carries Qwen AI models, and OpenRouter offers many of the Qwen3 models, including Qwen3-Coder.
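Since OpenRouter exposes an OpenAI-compatible chat completions endpoint, calling a Qwen model there is a plain HTTPS request. The sketch below uses only Python’s standard library. It assumes the model ID `qwen/qwen3-coder` and an API key stored in an `OPENROUTER_API_KEY` environment variable; check both against OpenRouter’s documentation and model list, since IDs and free tiers change. The helper names `build_request` and `ask_qwen` are illustrative, not part of any SDK.

```python
import json
import os
import urllib.request

# OpenRouter's OpenAI-compatible chat completions endpoint.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_request(prompt: str, model: str = "qwen/qwen3-coder") -> urllib.request.Request:
    """Build (but do not send) a chat completion request for a Qwen model.

    The default model ID is an assumption; check OpenRouter's model list."""
    api_key = os.environ.get("OPENROUTER_API_KEY", "")
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask_qwen(prompt: str, model: str = "qwen/qwen3-coder") -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With a valid key set, `print(ask_qwen("Write a Python one-liner that reverses a string."))` should print the model’s reply. Keeping request construction separate from sending makes it easy to inspect the payload, or to swap in another Qwen model ID, without making a network call.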

If you’d rather not send sensitive data to China, you might avoid the Qwen Chat interface for serious work, but there are US-based API options for most Qwen models. However, Qwen3-Max specifically is, for now, only available via Qwen Chat or Alibaba Cloud.

Conclusion

The markets have reacted enthusiastically to Alibaba’s latest AI moves, sending Alibaba’s shares up 10% during the conference. This is overdue recognition of Alibaba’s strength in AI and the prospects for its future business.

Alibaba’s Qwen team has released more advanced and capable models than Amazon. They have a broader AI model portfolio than Anthropic, Mistral, and Chinese competitor DeepSeek. Qwen has totally eclipsed Meta in producing high-performing open AI models. Their flagship Qwen3-Max model competes with Grok 4, GPT-5, and Gemini 2.5 Pro.

I haven’t even mentioned the other Alibaba team, the Tongyi lab, which is developing advanced AI agents such as WebWatcher, an AI agent for Deep Research.

All this proves that Alibaba is the strongest overall AI competitor in China, and that the Alibaba Qwen team is a top-five global AI lab.

US users might only want to use Qwen Chat to kick the tires on Qwen models, and won’t need it where Gemini or ChatGPT are better. But many Qwen models, especially open models such as Qwen3-Coder (currently free on OpenRouter), are worth attention via an API or for local use. Qwen’s steady stream of model releases is a win for AI consumers.