Adapting Large Language Models to UI Interactions

LLMs reading HTML in UIs for HCI

There is a reason why ChatGPT has taken the world by storm since late last year in a way that other (arguably technically superior) large language models (LLMs), such as PaLM, Chinchilla, and several other groundbreaking LLMs of 2022, did not: accessibility. Call it ease of use or interface design: the ability to simply ‘try it out’ was made just easy enough, and its capabilities were impressive enough, that ChatGPT ‘went viral’.

In recent years, AI researchers at the top AI labs have been in an arms race to scale up LLMs: more training data, longer training runs, and bigger models. As scale went up, new qualitative features and capabilities emerged. LLMs are large, powerful, and general, and the term foundation models has been applied to them. Now comes the challenge, and the opportunity, of leveraging these LLMs. They do one thing really, really well: string words together in response to a prompt. Using that capability to solve many different problems will require additional adaptation.

Creating interface capabilities that include, but go beyond, the chatbot or conversational interface will be one important avenue for extending the utility of LLMs. That’s where the recent paper “Enabling Conversational Interaction with Mobile UI using Large Language Models” [1], to be presented at CHI 2023, comes in. As the authors state:

This paper investigates the feasibility of enabling versatile conversational interactions with mobile UIs using a single LLM. We designed prompting techniques to adapt an LLM to mobile UIs.

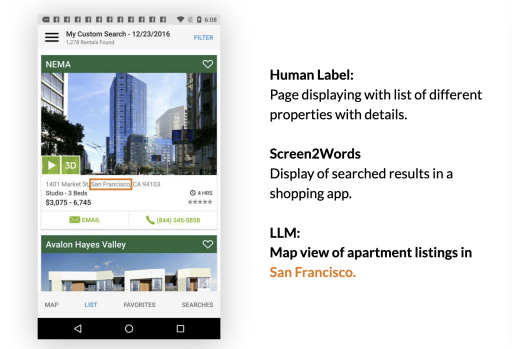

By reading a text representation of a mobile UI screen, the LLM can understand the UI and act as an intermediary between it and the user. The authors identified four specific tasks where the LLM is an enabler: screen summarization, screen question generation, screen question answering, and mapping instructions to UI actions. They feed the UI itself, represented as HTML, into the prompts, following the few-shot prompt structure of “Language Models are Few-Shot Learners” [2]: a preamble, then example pairs of screen HTML and desired output, then the HTML of the input screen. The model’s continuation of this prompt is the desired response. (Chain-of-thought prompting was tried for all tasks but kept only for screen question generation; for the other tasks it did not improve performance.) The authors report performance competitive with prior task-specific methods such as Screen2Words, without requiring dedicated datasets or training.
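The prompt structure described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors’ code: the `UIElement` type, the `screen_to_html` and `build_few_shot_prompt` helpers, the `Screen:`/`Summary:` labels, and the toy screens are all hypothetical, and the paper’s actual HTML conversion and prompt wording may differ. It shows the three parts in order: a preamble, exemplar pairs of screen HTML and output, and the new screen’s HTML with an empty output slot for the model to complete.

```python
from dataclasses import dataclass
from html import escape

# Hypothetical sketch: names, labels, and HTML layout are illustrative assumptions.


@dataclass
class UIElement:
    """Simplified stand-in for one node of a mobile UI screen."""
    element_id: int
    kind: str  # "text", "input", or "button" in this sketch
    text: str


def screen_to_html(elements: list[UIElement]) -> str:
    """Render a screen as compact HTML, one tag per element, with numeric ids."""
    lines = []
    for el in elements:
        label = escape(el.text)
        if el.kind == "input":
            # <input> has no body, so its label goes into the placeholder attribute.
            lines.append(f'<input id="{el.element_id}" placeholder="{label}">')
        elif el.kind == "button":
            lines.append(f'<button id="{el.element_id}">{label}</button>')
        else:
            lines.append(f'<p id="{el.element_id}">{label}</p>')
    return "\n".join(lines)


def build_few_shot_prompt(preamble: str,
                          exemplars: list[tuple[str, str]],
                          query_html: str,
                          output_label: str = "Summary") -> str:
    """Preamble, then (screen HTML, output) exemplar pairs, then the new screen."""
    parts = [preamble]
    for screen_html, output in exemplars:
        parts.append(f"Screen:\n{screen_html}\n{output_label}: {output}")
    # Leave the last output slot empty; the LLM's continuation is the answer.
    parts.append(f"Screen:\n{query_html}\n{output_label}:")
    return "\n\n".join(parts)


login = [UIElement(0, "text", "Welcome back"),
         UIElement(1, "input", "Email"),
         UIElement(2, "input", "Password"),
         UIElement(3, "button", "Sign in")]
settings = [UIElement(0, "text", "Settings"),
            UIElement(1, "button", "Notifications"),
            UIElement(2, "button", "Privacy")]

prompt = build_few_shot_prompt(
    "Below are mobile app screens written in HTML. Summarize each screen in one sentence.",
    [(screen_to_html(login), "A sign-in screen asking for an email and password.")],
    screen_to_html(settings),
)
print(prompt)
```

Sending `prompt` to an LLM (the client call is deliberately left out) and reading its continuation after the final `Summary:` would yield the summary. Swapping the preamble, the exemplars, and `output_label` retargets the same scaffold to another of the four tasks.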

One of their key takeaways is that the approach is easy to generalize and apply:

prototyping novel language interactions on mobile UIs can be as easy as designing a data exemplar
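To make “designing a data exemplar” concrete, here is a hedged sketch of one hand-written exemplar for screen question generation, rendered in a chain-of-thought style (the one task for which chain-of-thought prompting was kept). The reasoning template (“The screen has N input field(s)”), the `QuestionGenExemplar` type, and the helper functions are illustrative assumptions, not the paper’s exact prompt; the point is that the new interaction is specified as data, with no model training.

```python
import re
from dataclasses import dataclass

# Hypothetical sketch: the exemplar format and reasoning template are assumptions.


@dataclass
class QuestionGenExemplar:
    """One hand-written exemplar: a screen and the questions it should elicit."""
    screen_html: str
    questions: list[str]


def input_labels(screen_html: str) -> list[str]:
    """Return the placeholder text of each <input> tag (a simplifying assumption)."""
    return re.findall(r'<input[^>]*\bplaceholder="([^"]*)"', screen_html)


def render_exemplar(ex: QuestionGenExemplar) -> str:
    """Render the exemplar with a reasoning line placed before the answer."""
    labels = input_labels(ex.screen_html)
    # Chain-of-thought step: state what the screen asks for, then give the questions.
    reasoning = (f"The screen has {len(labels)} input field(s): {', '.join(labels)}. "
                 "So I should ask about each of them.")
    return (f"Screen:\n{ex.screen_html}\n"
            f"Reasoning: {reasoning}\n"
            f"Questions: {' '.join(ex.questions)}")


def question_gen_prompt(preamble: str, exemplars: list[QuestionGenExemplar],
                        query_html: str) -> str:
    """End on 'Reasoning:' so the model writes its reasoning before its questions."""
    parts = [preamble] + [render_exemplar(ex) for ex in exemplars]
    parts.append(f"Screen:\n{query_html}\nReasoning:")
    return "\n\n".join(parts)


booking = QuestionGenExemplar(
    screen_html=('<p id="0">Book a table</p>\n'
                 '<input id="1" placeholder="Party size">\n'
                 '<input id="2" placeholder="Date">\n'
                 '<button id="3">Reserve</button>'),
    questions=["How many people are in your party?", "Which date would you like?"],
)
prompt = question_gen_prompt(
    "Given a mobile screen in HTML, ask the user for the information it requires.",
    [booking],
    '<input id="0" placeholder="Email">\n<button id="1">Subscribe</button>',
)
print(prompt)
```

Ending the prompt on `Reasoning:` rather than `Questions:` is what makes this chain-of-thought: the model is nudged to describe the screen’s input fields first and only then produce the questions.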

Since LLMs are few-shot learners, the key skill in making them useful across a wide variety of tasks is finding the right prompts to adapt them to each specific task, rather than retraining them. Their experiments for this use case show:

first shot may be the most helpful, and additional examples may provide only marginal benefits in narrowing the model’s focus.
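One way to check the marginal value of extra examples on your own task is a shot-count ablation: build the same prompt with the first k exemplars for k = 1, 2, and so on, then score the model’s outputs on a small evaluation set. The sketch below assumes hypothetical helpers (`k_shot_prompt`, `shot_ablation`) and a toy yes/no task, and uses `stub_generate` as a placeholder for a real LLM call. Because the stub ignores the exemplars, its scores are flat by construction and say nothing about the paper’s findings.

```python
from typing import Callable

# Hypothetical sketch: (screen HTML, reference output) pairs; layout is an assumption.
Exemplar = tuple[str, str]


def k_shot_prompt(preamble: str, pool: list[Exemplar], query_html: str,
                  k: int, label: str = "Answer") -> str:
    """Build a prompt that uses the first k exemplars from the pool."""
    if not 0 <= k <= len(pool):
        raise ValueError(f"k must be between 0 and {len(pool)}")
    parts = [preamble]
    parts += [f"Screen:\n{html}\n{label}: {out}" for html, out in pool[:k]]
    parts.append(f"Screen:\n{query_html}\n{label}:")
    return "\n\n".join(parts)


def shot_ablation(preamble: str, pool: list[Exemplar], eval_set: list[Exemplar],
                  generate: Callable[[str], str],
                  metric: Callable[[str, str], float]) -> dict[int, float]:
    """Mean metric over eval_set for each shot count k = 1 .. len(pool)."""
    results = {}
    for k in range(1, len(pool) + 1):
        scores = [metric(generate(k_shot_prompt(preamble, pool, html, k)), ref)
                  for html, ref in eval_set]
        results[k] = sum(scores) / len(scores)
    return results


def exact_match(prediction: str, reference: str) -> float:
    """1.0 if the answers match after trimming and lowercasing, else 0.0."""
    return float(prediction.strip().lower() == reference.strip().lower())


def stub_generate(prompt: str) -> str:
    """Placeholder for a real LLM call: 'yes' if the query screen has an <input>."""
    query_screen = prompt.rsplit("Screen:", 1)[1]
    return "yes" if "<input" in query_screen else "no"


preamble = "Does this mobile screen ask the user to type something? Answer yes or no."
pool = [('<input id="0" placeholder="Email">', "yes"),
        ('<button id="0">OK</button>', "no")]
eval_set = [('<input id="1" placeholder="Search">', "yes"),
            ('<p id="0">Settings</p>', "no")]
results = shot_ablation(preamble, pool, eval_set, stub_generate, exact_match)
print(results)
```

With a real model behind `generate`, a curve that rises sharply from zero to one shot and then flattens would match the authors’ observation that the first shot helps most.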

Final thoughts: LLMs can and will play a big role as intermediaries between human and machine, in ways that go beyond the chatbot as UI. The right kind of prompt engineering for interfaces will lead to powerful, specific applications of LLMs in that space. And if UIs need to interface with LLMs, will they be redesigned to work better with them, the way websites are made SEO-friendly?

References:

[1] Bryan Wang, Gang Li, and Yang Li. 2023. Enabling Conversational Interaction with Mobile UI using Large Language Models. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (CHI ’23), April 23–28, 2023, Hamburg, Germany. ACM, New York, NY, USA, 17 pages. https://doi.org/10.1145/3544548.3580895 (preprint: https://arxiv.org/pdf/2209.08655.pdf)

[2] Tom B. Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared Kaplan, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, Sandhini Agarwal, Ariel Herbert-Voss, Gretchen Krueger, Tom Henighan, Rewon Child, Aditya Ramesh, Daniel M. Ziegler, Jeffrey Wu, Clemens Winter, Christopher Hesse, Mark Chen, Eric Sigler, Mateusz Litwin, Scott Gray, Benjamin Chess, Jack Clark, Christopher Berner, Sam McCandlish, Alec Radford, Ilya Sutskever, and Dario Amodei. 2020. Language Models are Few-Shot Learners. arXiv:2005.14165 [cs.CL]. https://arxiv.org/pdf/2005.14165.pdf