AI Cracks the Code and Solves Math

AI models from both OpenAI and Google DeepMind scored at gold-medal level on the International Mathematical Olympiad. Their wins tell us about AI progress that goes far beyond math.

Introduction

“Our latest experimental reasoning LLM has achieved a longstanding grand challenge in AI: gold medal-level performance on the world’s most prestigious math competition—the International Math Olympiad.” - Alexander Wei, OpenAI

Three wins and near wins by AI models in math and coding were reported in the last few days:

An experimental OpenAI reasoning model achieved gold medal-level performance on the 2025 IMO.

Google DeepMind also achieved gold medal-level performance on the 2025 IMO.

An OpenAI model came in second in an international coding competition.

This news is important in its own right, but it also tells us a lot about what innovations are arising to propel AI forward.

Google DeepMind’s Deep Think

“We can confirm that Google DeepMind has reached the much-desired milestone, earning 35 out of a possible 42 points - a gold medal score.” - IMO President, Prof. Dr. Gregor Dolinar

An advanced version of Google’s Deep Think, its highest-performing version of Gemini 2.5 Pro, was entered in the 2025 IMO. It solved 5 out of 6 problems, scoring 35 out of 42 points (each problem is worth 7 points) and reaching the gold-medal standard.

The model operated end-to-end in natural language, with no preprocessing, producing rigorous mathematical proofs directly from the official problem statements within the competition’s 4.5-hour time limit. This differs sharply from last year’s approach, which used the Lean formal proof language in AlphaProof and the geometry-specific AI model AlphaGeometry.

This year, the team leveraged Deep Think’s parallel thinking abilities, which let the model explore several candidate solutions before giving a final answer, and tuned the AI model for the IMO task:

“To make the most of the reasoning capabilities of Deep Think, we additionally trained this version of Gemini on novel reinforcement learning techniques that can leverage more multi-step reasoning, problem-solving and theorem-proving data. We also provided Gemini with access to a curated corpus of high-quality solutions to mathematics problems and added some general hints and tips on how to approach IMO problems to its instructions.”
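One simple way to picture parallel thinking is to sample several independent attempts and keep the answer they agree on most often. The sketch below only illustrates that idea and is not DeepMind’s method: `sample_answer` is a hypothetical stand-in for a model call, and its 60% success rate is an invented toy value.

```python
import random
from collections import Counter


def sample_answer(problem: str, rng: random.Random) -> str:
    """Hypothetical stand-in for one independent reasoning attempt.

    Toy behaviour: the attempt is right 60% of the time; otherwise it
    returns one of several different wrong answers.
    """
    if rng.random() < 0.6:
        return "42"
    return rng.choice(["41", "43", "44"])


def parallel_think(problem: str, n_attempts: int, seed: int = 0) -> str:
    """Run n_attempts independent attempts and return the most common answer."""
    rng = random.Random(seed)
    attempts = [sample_answer(problem, rng) for _ in range(n_attempts)]
    answer, _count = Counter(attempts).most_common(1)[0]
    return answer


if __name__ == "__main__":
    # With many attempts, scattered wrong answers rarely outvote the right one.
    print(parallel_think("toy problem", n_attempts=501))
```

Majority voting suits short final answers. Multi-page proofs, as required at the IMO, would need some other way of comparing or combining candidates, which this sketch does not attempt.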

This is the first time general-purpose AI models have matched the gold-medal bar at the IMO. In 2024, a far more specialized and less flexible AI system reached the silver-medal standard.

OpenAI’s Model

OpenAI used an experimental reasoning LLM to answer the 2025 IMO questions under the same rules as human contestants: two 4.5-hour sessions, no tools, and natural-language proofs. The model was not entered officially; instead, OpenAI had its answers graded by former IMO medalists.

Under this evaluation, OpenAI’s model solved 5 of the 6 problems on the 2025 IMO (the same as Google DeepMind’s model) and earned 35 out of 42 points, achieving gold medal-level performance. Alexander Wei of OpenAI posted details on X:

“Why is this a big deal? First, IMO problems demand a new level of sustained creative thinking compared to past benchmarks.

“Second, IMO submissions are hard-to-verify, multi-page proofs. Progress here calls for going beyond the RL paradigm of clear-cut, verifiable rewards. By doing so, we’ve obtained a model that can craft intricate, watertight arguments at the level of human mathematicians.”

Submissions were independently reviewed by IMO medalists and found to be “clear, precise and…easy to follow.” OpenAI shared the model’s proofs on GitHub.

As with DeepMind, this gold-medal achievement is all the more impressive because it came from a general AI model rather than a specialized AI system. Unlike DeepMind’s model, OpenAI’s model is still in the research stage. OpenAI noted that a broader release will come after further evaluation and preparation.

OpenAI was criticized for jumping the gun in claiming gold-medal credit: it was not an official entrant, its answers were not officially scored, and it reportedly did not respect the IMO organizers’ request to hold off on announcing results.

The AI company Harmonic entered a math reasoning model called Aristotle into the IMO. We will have to wait a few more days to learn how well they did.

OpenAI Wins Second Place at the World Coding Championship

“Humanity has prevailed (for now!)” - Przemysław “Psyho” Dębiak

Alongside the IMO news, OpenAI made headlines again when its advanced AI model competed in the prestigious AtCoder World Tour Finals in Tokyo, one of the world’s hardest coding competitions.

Twelve humans, each among the absolute top global programmers, plus one AI entry (“OpenAIAHC”) from OpenAI, competed in a grueling 10-hour coding marathon centered on tackling a single, NP-hard optimization problem.

Former OpenAI engineer Przemysław “Psyho” Dębiak of Poland edged out the AI, scoring 9.5% more points than the AI model. The AI surpassed all the other human finalists. Despite the AI’s remarkable capability, human ingenuity and creativity still prevailed in the final ranking.

The gold-level success of reasoning models in mathematics and their top-tier performance in programming mark another milestone in AI problem-solving. AI is close to parity with the best human minds in complex, creative problem-solving domains.

Math Benchmark Progress

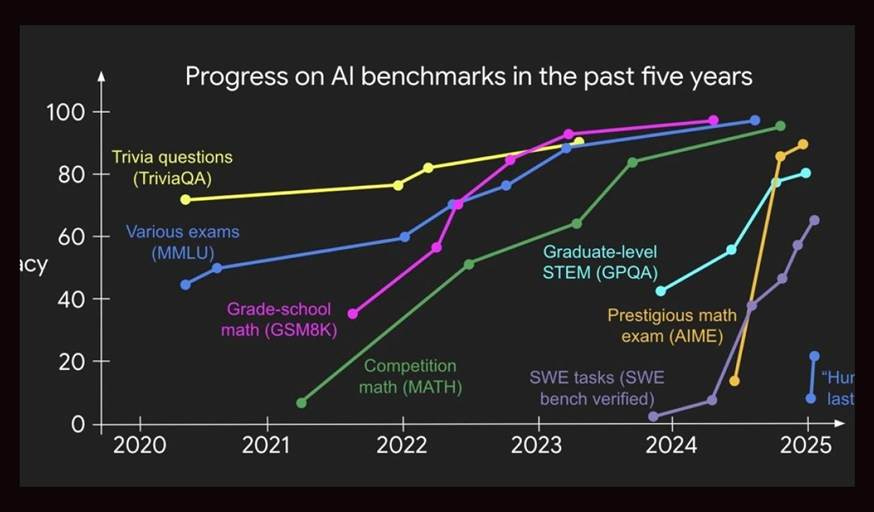

AI models keep saturating benchmarks. In less than three years, they have saturated three of the four major math benchmarks listed below (GSM8K, MATH, and AIME), leaving only the IMO:

GSM8K: This benchmark focuses on grade-school-level math problems. GPT‑4, released in March 2023, scored 92% on this benchmark.

MATH: This benchmark includes questions ranging from high school to early undergraduate levels. While GPT‑4’s initial release in March 2023 scored only 42% on the MATH benchmark, GPT‑4 Code Interpreter (GPT4‑Code) boosted that to 69.7%. In December 2024, Gemini 2.0 Flash Experimental scored 89.7% accuracy. OpenAI o3-mini high scores 97.9%.

AIME 2024 / 2025: The American Invitational Mathematics Examination (AIME) is a challenging math competition held yearly for top U.S. high school students. While GPT-4o scored a mere 13% on AIME, AI reasoning models started doing very well on it: o1 scored 78%; DeepSeek R1 79%; and Gemini 2.5 Pro 92%.

Note that in many cases this is without tools. When given access to a Python interpreter, o4-mini achieved 99.5% on AIME 2025, although these results shouldn't be compared directly to models without tool access.

IMO: This leaves us with the International Mathematical Olympiad (IMO), the most esteemed mathematics competition for high school students worldwide, renowned for its rigorous problems. Achieving a gold medal at the IMO is a testament to mathematical creativity, problem-solving, and rigorous proof-writing.

It’s an extremely high bar for AI models. The AI must engage in sustained thinking (roughly 100 minutes per problem; each 4.5-hour session covers three problems, or 90 minutes apiece on average) to construct intricate proofs, combining extreme feats of logic, mathematical understanding, and creativity.

The 2025 IMO questions have been turned into a benchmark on MathArena. Gemini 2.5 Pro achieved the highest score among the AI models tested there, averaging 31% (13 of 42 points). That is a strong showing given the extreme difficulty of the IMO, but it is not enough even for a bronze medal.
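The arithmetic behind these scores is easy to check. The sketch below assumes the standard 7 points per IMO problem and 2025 medal cutoffs of 35, 28, and 19 points. The cutoffs are not stated in this article and should be treated as assumptions, and `medal_for` is a hypothetical helper written for illustration.

```python
POINTS_PER_PROBLEM = 7
NUM_PROBLEMS = 6
MAX_SCORE = POINTS_PER_PROBLEM * NUM_PROBLEMS  # 42

# Assumed 2025 cutoffs (gold, silver, bronze); not taken from this article.
MEDAL_CUTOFFS = [("gold", 35), ("silver", 28), ("bronze", 19)]


def medal_for(score: int) -> str:
    """Return the medal earned by a total score under the assumed cutoffs."""
    for name, cutoff in MEDAL_CUTOFFS:
        if score >= cutoff:
            return name
    return "no medal"


if __name__ == "__main__":
    # Five fully solved problems, as reported for the tuned models.
    tuned_score = 5 * POINTS_PER_PROBLEM
    print(tuned_score, medal_for(tuned_score))

    # A 31% average on the MathArena version of the exam.
    released_score = round(0.31 * MAX_SCORE)
    print(released_score, medal_for(released_score))
```

Under these assumptions, 35 points lands exactly on the gold cutoff, while 13 points falls short of bronze.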

Beyond the IMO exam, mathematics becomes rarefied and specialized enough to be indistinguishable from mathematical innovation and discovery. Perhaps the next math benchmark will be to solve unsolved math problems or write original math papers.

Scoring Gold on IMO with Innovations in RL

Today’s best released AI models (o3 and Gemini 2.5 Pro) don’t match the results of the tuned AI models used to obtain the gold medal-level scores. However, it won’t take long for the innovations in those AI models to filter down, for several reasons.

First, DeepMind announced that their mathematical Deep Think model will be released:

“We will be making a version of this Deep Think model available to a set of trusted testers, including mathematicians, before rolling it out to Google AI Ultra subscribers.”

Second, although neither OpenAI nor DeepMind released details, it’s clear that they both advanced their AI models with innovations in RL for reasoning. DeepMind said:

“We additionally trained this version of Gemini on novel reinforcement learning techniques that can leverage more multi-step reasoning, problem-solving and theorem-proving data.”

OpenAI’s Alexander Wei on X said:

“We reach this capability level not via narrow, task-specific methodology, but by breaking new ground in general-purpose reinforcement learning and test-time compute scaling.”

Given that the real challenge is to handle problems with either very sparse rewards or no rewards, the “new ground” is likely a bootstrap-reward method or possibly improved self-reflection. Others will reverse-engineer how they did this, as happened with DeepSeek R1, yielding competitive solutions.
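To make the contrast concrete, here is a minimal sketch of the two kinds of reward signal. It is an illustration only, not OpenAI’s or DeepMind’s method: the function names are hypothetical, and the keyword-based graders are toy stand-ins for human or learned graders who would score a proof from 0 to 7.

```python
from typing import Callable, List

# A grader maps a proof's text to a score from 0 to 7 (hypothetical interface).
Grader = Callable[[str], int]


def verifiable_reward(model_answer: str, reference: str) -> float:
    """Clear-cut reward: 1.0 if the final answer matches exactly, else 0.0."""
    return 1.0 if model_answer.strip() == reference.strip() else 0.0


def graded_proof_reward(proof: str, graders: List[Grader], max_points: int = 7) -> float:
    """Hard-to-verify reward: no reference answer exists, so graders score the proof.

    Taking the minimum is a conservative choice made for this sketch: the
    proof earns only as much credit as every grader is willing to give.
    """
    scores = [grade(proof) for grade in graders]
    return min(scores) / max_points


def strict_grader(proof: str) -> int:
    """Toy grader: full marks only for a case analysis with a conclusion."""
    text = proof.lower()
    return 7 if "case" in text and "therefore" in text else 2


def lenient_grader(proof: str) -> int:
    """Toy grader: full marks for any proof that states a conclusion."""
    return 7 if "therefore" in proof.lower() else 0


if __name__ == "__main__":
    graders = [strict_grader, lenient_grader]
    print(verifiable_reward(" 204 ", "204"))
    print(graded_proof_reward("Case 1: ... Case 2: ... Therefore the claim holds.", graders))
    print(graded_proof_reward("Therefore the claim holds.", graders))
```

The first function works for benchmarks such as AIME, where each problem has a single checkable answer. The second shows why proofs are harder to learn from: the reward depends on judgment, which may be noisy or expensive to obtain.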

The wild thing is that this is not even GPT-5, so any innovations that carry over from this experimental AI model into released AI models will go above and beyond GPT-5:

To be clear: We’re releasing GPT-5 soon, but the model we used at IMO is a separate experimental model. It uses new research techniques that will show up in future models—but we don't plan to release a model with this level of capability for many months.

Conclusion – Coding, Math and Beyond

The progress of AI is not slowing down. Last year, a specialized AI system scored silver at the IMO; this week, tuned AI models scored gold. By this time next year, a released AI model or agent will likely get a perfect score on the IMO.

As AI advances towards AGI, we keep running out of benchmarks. We could invent ever-harder benchmarks, but even the super-hard Humanity’s Last Exam is quickly being scaled. We’ll know we’ve gone past AGI when AI has saturated every benchmark we can throw at it.

It’s worth remembering that while AI models reached gold-medal scores, five humans achieved perfect scores of 42 in the 2025 IMO; team humanity still has an edge over AI. Even as AI advances, AI plus humans will for some time be more powerful than either one alone.

The most important part of this story is how OpenAI and DeepMind improved their AI models to reach IMO gold. They used general, not specialized, models and approaches. It appears that OpenAI used general RL to improve with sparse or no rewards, which opens up AI reasoning to a much wider set of problems and could accelerate AI progress far beyond coding and mathematics. We can expect AI models released in the next six months to take a big step up in reasoning based on this research.