AI for Coding – Models

The best current models for AI coding, including Claude 3.5 Sonnet and the Gemini 1.5 Pro 8/27 release.

Introduction

We will cover the AI for coding topic in two parts: AI coding models, and then AI coding assistants. Since all AI coding assistants are only as good as the AI model they use under the hood, I first need to set the table by reviewing the best current AI coding models. I will review the current best-in-class AI coding assistants in a follow-up.

The AI Coding Ecosystem

Ever since the first LLM-based coding assistant, GitHub Copilot, programming and software development support has been a leading use case for generative AI. You might even call it the killer app for generative AI. As we noted in prior articles, “AI is eating the software world.” It’s worth repeating the top-line takeaway from our year-ago article:

If the only practical application of today’s Foundational AI Model-based Artificial Intelligence was a doubling of software productivity, AI would still be one of the most important technologies ever developed.

As AI has advanced, the AI ecosystem for coding has been rapidly improving. Our latest update on AI for coding in June, “AI keeps eating the software world,” mentioned many leading AI models for coding: Claude 3 Opus, Gemini 1.5 Pro (May release), Code Llama, Code Qwen, and GPT-4o. All were released in 2024.

AI advances this summer have continued these positive trends:

AI models keep improving on coding tasks. Both leading frontier AI models and local open AI models are more reliable at generating good code, score better on coding benchmarks, take in more context, and have better usability features, such as Claude Artifacts. The best AI models for coding are all recent releases from the past three months: Claude 3.5 Sonnet, Mistral’s Codestral, DeepSeek Coder V2, Gemini 1.5 Pro (8/27 experimental release), and Grok-2.

The best AI coding assistants rely on the best AI coding models. As AI models have improved, so have AI coding assistants.

We've transitioned from AI coding chatbots to intelligently integrated AI applications. The features of AI coding assistants have improved. These new and improved tools seamlessly blend AI with user interfaces, resulting in a genuinely valuable AI-first user experience. For example, Cursor has become a leading AI-first coding environment thanks to its great AI UX.

AI agents are becoming more common, more diverse, and more useful. AI-powered assistants now excel at more complex software development tasks, including code generation, refactoring, debugging, and optimization.

The distinction between AI coding assistants and AI agents for software development is getting erased, as AI assistants add more capable AI code generation features and AI agents allow for more ‘human-in-the-loop’ features.

AI Coding Model Benchmarks

We know AI models have gotten steadily better at coding from both their improvement across various benchmarks and the strides made by those using AI for coding. We evaluate the AI models across several coding-related benchmarks: Lmsys Arena scores, HumanEval and HumanEval+ scores, and BigCodeBench.

Lmsys Arena coding scores are based on human ratings of AI models’ responses to coding-related prompts. Figure 3 below shows the current leaderboard standings.

Any individual benchmark for AI models can be flawed or incomplete, or its validity can be undermined when evaluation data leaks into training datasets. HumanEval has been the standard benchmark for coding, but it is flawed, and the best AI models now score around 90% on it and are starting to outgrow it.

The authors of the paper “Is Your Code Generated by ChatGPT Really Correct? Rigorous Evaluation of Large Language Models for Code Generation” noted limits of this coding benchmark: “prior popular code synthesis evaluation results do not accurately reflect the true performance of LLMs for code synthesis.”

They developed HumanEval+ to improve upon the HumanEval benchmark; see the EvalPlus leaderboard. However, HumanEval is still by far the most common benchmark, and for some models it is the only one we have for comparison. Likewise, the recently developed BigCodeBench has been measured on many but not all AI models. BigCodeBench leaderboard rankings are published on GitHub. A further complication is that some of the recent releases, like the Gemini 1.5 Pro 8/27 release, don’t have any benchmarks beyond their Lmsys ranking.
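To make the difference between a thin and a thorough test suite concrete, here is a minimal sketch of how functional-correctness benchmarks of this kind score a model: the generated code is executed against unit tests and counts as solved only if every test passes. The task, the candidate solution, and both test lists are hypothetical and are not taken from HumanEval or HumanEval+; the point is only that a small test suite can accept code that a larger one rejects.

```python
# Hypothetical model output for the task "return the median of a list of numbers".
# It is wrong for even-length lists, where the two middle values should be averaged.
CANDIDATE = """
def median(values):
    ordered = sorted(values)
    return ordered[len(ordered) // 2]
"""

# A thin test suite (illustrative): only odd-length inputs.
BASE_TESTS = [
    (([3, 1, 2],), 2),
    (([5],), 5),
]

# A more thorough suite (illustrative): adds an even-length input.
EXTENDED_TESTS = BASE_TESTS + [
    (([1, 2, 3, 4],), 2.5),
]


def passes(candidate_source, entry_point, tests):
    """Run the candidate and report whether it passes every test case."""
    namespace = {}
    try:
        exec(candidate_source, namespace)  # real harnesses sandbox this step
        func = namespace[entry_point]
        return all(func(*args) == expected for args, expected in tests)
    except Exception:
        return False  # a crash counts as a failure


base_result = passes(CANDIDATE, "median", BASE_TESTS)
extended_result = passes(CANDIDATE, "median", EXTENDED_TESTS)
print("thin suite:", base_result)
print("thorough suite:", extended_result)
```

The same buggy function is marked correct by the thin suite and incorrect by the thorough one, which is the kind of gap the quoted paper describes.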

We collated multiple coding benchmark results on leading AI models, and share them below.

Best AI Coding Models

Despite these gaps, the benchmarks collectively tell a consistent story about the leading AI models for coding:

Leading frontier AI models are all performing well on coding benchmarks; Claude 3.5 Sonnet and GPT-4o are the leaders.

Recent releases Grok-2 and Gemini 1.5 Pro 8-27 are also highly rated on Lmsys, and Grok-2 also scores 88.4% on HumanEval, near SOTA.

Grok-2 mini and GPT-4o mini also perform well.

Llama 3.1 405B is the best performing open AI model for coding.

Open AI models Qwen 2 72B and DeepSeek Coder V2 Instruct models are competitive. Good AI models for coding you can run locally (depending on your system) include DeepSeek Coder V2 ‘lite’ 16B, Codestral, and Qwen 2 7B (79.9 on HumanEval).

These 4 frontier AI models are all excellent for coding: Claude 3.5 Sonnet, GPT-4o (latest, 8-08), Gemini 1.5 Pro (experimental version of 8-27), and Grok-2. Claude 3.5 Sonnet seems favored by most developers, and it is viewed as the best AI model to work with Zed AI, Aider, Cursor, and other AI coding assistants.

The Gemini AI Studio Deal

The cost for using the top AI models via their APIs is:

Claude 3.5 Sonnet: $3/M input tokens, $15/M output tokens.

GPT-4o: $5/M input tokens, $15/M output tokens.

Both can be accessed via their chatbot interfaces as well.
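To put the per-million-token prices in perspective, the arithmetic for a single request can be sketched as follows. The prices are the ones listed above; the token counts are a made-up example of a mid-sized coding prompt, not a measurement.

```python
# Per-million-token prices quoted above, in US dollars: (input, output).
PRICES = {
    "Claude 3.5 Sonnet": (3.00, 15.00),
    "GPT-4o": (5.00, 15.00),
}


def request_cost(model, input_tokens, output_tokens):
    """Return the dollar cost of one request at the listed prices."""
    input_price, output_price = PRICES[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Hypothetical request: 20,000 tokens of code and instructions in, 2,000 tokens out.
for name in PRICES:
    print(f"{name}: ${request_cost(name, 20_000, 2_000):.3f}")
```

At these prices the sample request costs about 9 cents on Claude 3.5 Sonnet and 13 cents on GPT-4o, so the cost of an iterative coding session depends mostly on how much context is resent with each prompt.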

Because Google wants its latest Gemini AI model tested, it offers a generous Gemini API “free tier” for testing (and also for general light use). It allows 50 RPD (requests per day) and 32,000 TPM (tokens per minute). Free-tier data is “Used to improve our products,” so you don’t get privacy. However, the deal gets even better: You can run Gemini’s latest and greatest AI model for free in Google’s AI Studio.

Testing Gemini 1.5 Pro 8-27 for Coding Tasks

Given that opportunity to try out the latest Gemini 1.5 Pro, I decided to test it on coding and also answer the question: How far can you go just using the AI model itself for coding?

I tested the latest Gemini 1.5 Pro experimental release of 8/27 on several simple and then more complex tasks. First, I prompted Gemini 1.5 Pro to code up various calculator apps, then other web-scraping and summarization tasks and apps.

Given a simple request prompt, Gemini 1.5 Pro generated a working GUI-based arithmetic calculator in Python in one shot. A more complex calculator was similarly successful: after one prompt resulted in a blocked output, a re-prompt yielded a flawless scientific calculator written in Python with the Tkinter library for the GUI.

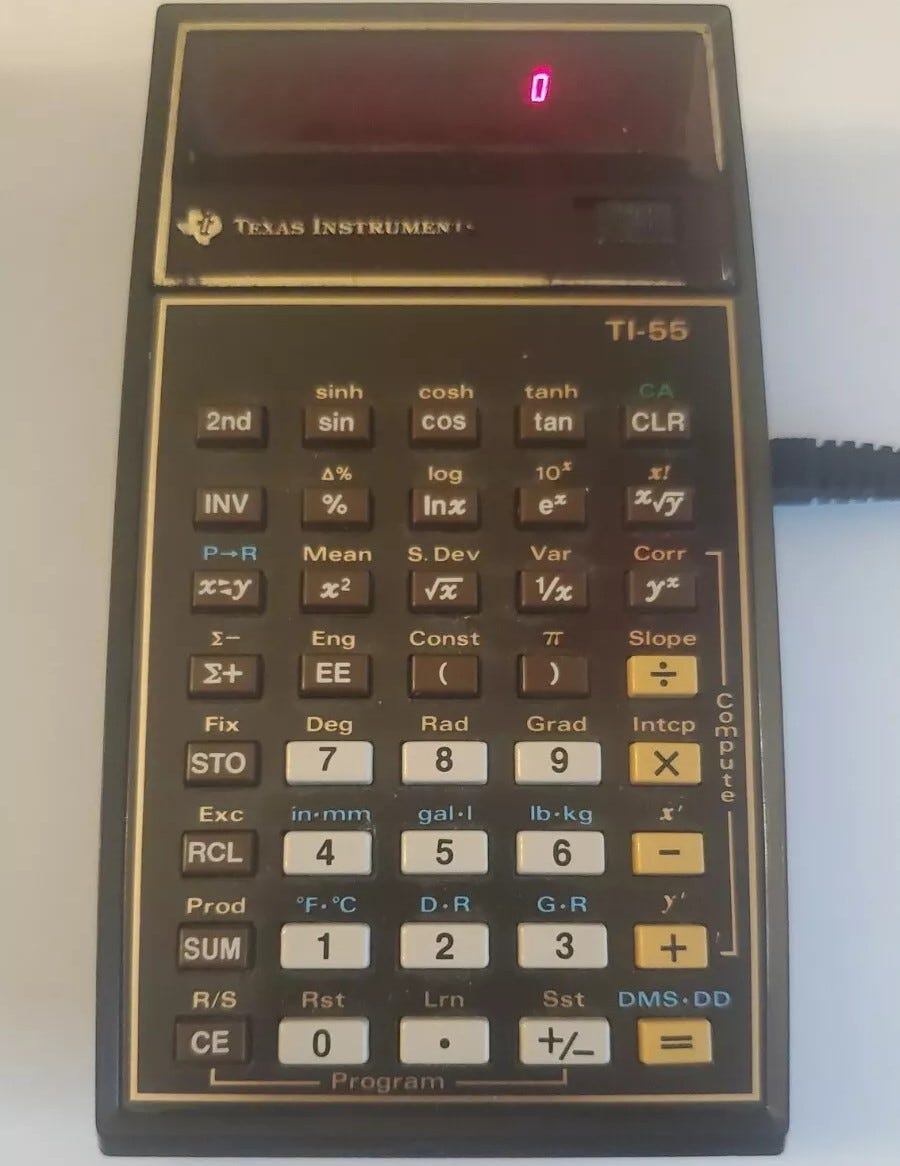

I then shared a picture of a TI-55 with Gemini to make a TI-55 replica calculator app. With over 100 total functions, including complex alt and 2nd functions, this proved too complex and ran into several problems. It yielded a partly working calculator with layout issues and many functions and buttons not mapped correctly. I got into editing cycles to fix the layout, the functions, and other issues, so I bowed out of completing it; it was showing the limits of the prompt-result-cut-paste-run iteration loop with AI.

Next, a utility to find and report weblinks on a webpage was a one-shot success. A task to summarize web content using Gemini Flash took a few iterations, but overall it was quick and successful.
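For a sense of scale, a link-reporting utility of this kind fits in a few dozen lines of standard-library Python. The sketch below is not the code Gemini generated; the class and function names are illustrative, and it parses an inline HTML string so that it runs without network access.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag, resolved against a base URL."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href:
            self.links.append(urljoin(self.base_url, href))


def find_links(html, base_url):
    """Return the absolute URLs linked from an HTML document, in page order."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    return collector.links


# Illustrative input; a real run would fetch the page first,
# for example with urllib.request.urlopen(url).read().decode().
SAMPLE_HTML = """
<html><body>
  <a href="/about">About</a>
  <a href="https://example.org/post">Post</a>
  <a name="anchor-without-href">No link</a>
</body></html>
"""

links = find_links(SAMPLE_HTML, "https://example.com/")
for link in links:
    print(link)
```

Relative links are resolved against the page URL, and anchors without an `href` are skipped.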

My next task was to combine weblink collection and webpage summarization into a web application. Using Flask for the web app kept the application simple (under 150 lines), but it needed the right libraries and prompts to call on an AI model (Gemini Flash) to do the summarization.

The generated code initially had errors. It used an obsolete LangChain LLM call (another library issue). I directed it to use Google’s own library to invoke the AI model, but that produced errors as well (“module 'google.generativeai' has no attribute 'text'”). Gemini recognized each error as it was pointed out, and after about six iterations to fix issues, the app worked.
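A “has no attribute” error like this is typical of generated code that targets an interface the installed package does not expose. As a hedged sketch, the call pattern documented for the `google-generativeai` Python package at the time of writing goes through `GenerativeModel` and `generate_content`. The prompt wording, the function names, the `GOOGLE_API_KEY` environment variable, and the `gemini-1.5-flash` model name are assumptions for illustration; this is not the code from the session described above.

```python
import os


def build_summary_prompt(page_text, max_chars=8000):
    """Build a summarization prompt, truncating very long pages (limit is arbitrary)."""
    return (
        "Summarize the following web page in three sentences.\n\n"
        + page_text[:max_chars]
    )


def summarize(page_text, generate):
    """Summarize text with any callable that maps a prompt string to a reply string."""
    return generate(build_summary_prompt(page_text)).strip()


def gemini_generate(prompt):
    """Send one prompt to Gemini Flash; needs google-generativeai and an API key."""
    import google.generativeai as genai  # imported lazily so the rest runs without it

    genai.configure(api_key=os.environ["GOOGLE_API_KEY"])  # assumed variable name
    model = genai.GenerativeModel("gemini-1.5-flash")  # assumed model name
    return model.generate_content(prompt).text


# Offline stand-in so the example runs without network access or credentials.
def fake_generate(prompt):
    return f"  (summary of {len(prompt)} characters of prompt)  "


print(summarize("Example page text.", fake_generate))
# With credentials set: print(summarize(page_text, gemini_generate))
```

Passing the model call in as a function keeps the summarization logic testable offline; in a Flask app, the route handler would call `summarize` with the real `gemini_generate`.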

Observations on Gemini

Gemini 1.5 Pro experimental token output on AI Studio is slow, taking 30 seconds to respond fully to some prompts.

Gemini 1.5 Pro has well-organized responses, with the code generated followed by detailed explanations of changes, helpful instructions to implement, and other reminders. For the right task and right user, it can be quite educational.

Gemini 1.5 Pro produced some errors in its code output, but it corrected its mistakes once they were pointed out. Some issues were due to gaps in its knowledge of certain libraries, which can be fixed by putting documentation into the context or by using a RAG system.
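One low-tech way to put documentation into the context is to prepend the relevant, current library documentation to the coding request. The following is a minimal sketch; the function name, the prompt wording, and the `example_lib` excerpt are placeholders, not a recommended template or a real library.

```python
def build_prompt_with_docs(task, doc_snippets):
    """Prepend current library documentation to a coding request."""
    sections = [
        "Use only the APIs shown in the documentation excerpts below.",
    ]
    for title, text in doc_snippets.items():
        sections.append(f"### Documentation: {title}\n{text.strip()}")
    sections.append(f"### Task\n{task.strip()}")
    return "\n\n".join(sections)


# Placeholder excerpt; in practice, paste the relevant page from the library's docs.
docs = {
    "example_lib quickstart": "client = example_lib.Client()\nclient.run(text)",
}
prompt = build_prompt_with_docs("Write a function that summarizes a URL.", docs)
print(prompt)
```

A RAG system automates the same idea by retrieving the excerpts that match the task instead of pasting them by hand.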

Overall, Google Gemini 1.5 Pro 8/27 was quite good, but not perfect. It may be close to Claude 3.5 Sonnet, as benchmarks attest.

General Learnings

The latest AI models are getting really good at coding. It is possible, and even easy, to build simple programs, web pages, dashboards, and applications with just an AI model chatbot. Some simple programs can be ‘one and done’, and that’s impressive.

When you go beyond simple apps, you end up iterating a lot. We need to iterate both to fix imperfect AI output and to implement variations or enhancements that evolve the application. This is the natural way to do software development, one git commit at a time. Software development is inherently iterative.

Current AI is more helpful to the unskilled than the skilled. It can 'level up' the newbie, creating code a novice might not know how to write, but for those already highly skilled, its best use is to speed up known tasks. Once the AI goes outside its skill-set, you are stuck with bugs or undone requirements, trying to fix and figure it out on your own. Iterating and cut-n-paste can be slower for a skilled programmer than simply doing it directly.

Conclusion and Recommendations

AI models are now good enough to generate simple software apps on the fly. The most popular AI model for coding is Claude 3.5 Sonnet, but several other AI models are at or near SOTA. Try out the Gemini 1.5 Pro 8-27 release for coding, and use Google’s free tier API while you are at it.

Smaller AI models such as Qwen 2 7B and Codestral can run locally and perform well on some coding tasks.

As a software task becomes more complex, the iterative coding process exposes the limits of the AI model chat interface. The constant switching between the AI chat window and your code editor, coupled with repetitive copying and pasting, quickly becomes tedious. Surely, there is a better way to edit and clean up an application than cut-n-paste?

There is, and that’s a great motivation for AI coding assistants, which streamline the process of integrating AI into your coding workflow. You can swiftly edit new lines and generate fresh code without resorting to copy-and-paste. The result is a more fluid and efficient coding experience that combines the strengths of AI with human expertise.

We will review the best AI coding assistants in a follow-up article.

Postscript

On the side, I used DeepSeek Coder V2 “lite” 16B (local install with Ollama) to write code that uses LangChain to summarize a weblink. Alas, DeepSeek Coder didn’t know about the LangChain library and so produced errors. It’s a reminder that AI models are better with older and common libraries, for which they have plenty of training data. AI model outputs for rapidly evolving libraries can be flawed if the models were trained on older, deprecated code. Since DeepSeek Coder V2 16B didn’t perform well on this, I dropped it and didn’t explore it thoroughly.

DeepSeek Coder V2 is a highly rated open AI model for coding, but it’s an MoE model with 236B parameters, which is not practical for local use. As for the ‘lite’ version, my own experience is such that I would use other AI models for coding.