AI Research Review 25.01.24 - Reasoning

Toward Large Reasoning Models, DeepSeek-R1, Kimi k1.5 and scaling RL, Mind Evolution for search in planning, improving Process Reward Models in math reasoning.

Introduction

The release of DeepSeek R1 and a similar AI reasoning model, Kimi k1.5, has put the spotlight on AI reasoning. This week, we review recent AI research results in that area:

Towards Large Reasoning Models: A Survey on Scaling LLM Reasoning Capabilities

DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning

Kimi k1.5: Scaling Reinforcement Learning with LLMs

Mind Evolution: Evolving Deeper LLM Thinking

The Lessons of Developing Process Reward Models in Mathematical Reasoning

Towards Large Reasoning Models: A Survey on Scaling LLM Reasoning Capabilities

The release of o1 and subsequent AI reasoning models has driven a surge in AI research on getting LLMs to perform complex reasoning. The paper “Towards Large Reasoning Models: A Survey on Scaling LLM Reasoning Capabilities” from Tsinghua University presents a comprehensive survey of recent progress in this area, calling this emerging class of models Large Reasoning Models.

The survey highlights the three key technical components driving this development: learning-to-reason techniques, automated data construction, and test-time scaling.

Getting LLMs to move from simple token-at-a-time linear (“System 1”) thinking to a complex reasoning process (“System 2”) requires training the LLM to generate token sequences representing reasoning steps or “thoughts”. Fine-tuning with Reinforcement Learning (RL) is applied to train LLMs to master reasoning processes:

This approach (RL-based training) enables the automatic generation of high-quality reasoning trajectories through trial-and-error search algorithms, significantly expanding LLMs' reasoning capacity by providing substantially more training data.

The survey covers the various RL policy and reward model approaches, including DPO (Direct Preference Optimization), and the use of Outcome Reward Models (ORMs) and Process Reward Models (PRMs) to provide the RL reward signal for reasoning. It also mentions the use of search, e.g., MCTS, to generate high-quality reasoning data.
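The difference between the two reward signals can be illustrated with a toy sketch. The function names and the arithmetic step checker below are hypothetical stand-ins for learned reward models, not anything taken from the survey: an ORM-style signal scores only the final answer, while a PRM-style signal scores each reasoning step.

```python
from typing import Callable, List

def outcome_reward(final_answer: str, reference: str) -> float:
    """ORM-style signal: one score for the whole trajectory, from the final answer."""
    return 1.0 if final_answer.strip() == reference.strip() else 0.0

def process_rewards(steps: List[str], step_scorer: Callable[[str], float]) -> List[float]:
    """PRM-style signal: one score per intermediate reasoning step."""
    return [step_scorer(step) for step in steps]

def toy_step_scorer(step: str) -> float:
    """Hypothetical scorer: verifies simple 'a + b = c' arithmetic steps."""
    left, right = step.split("=")
    a, b = left.split("+")
    return 1.0 if int(a) + int(b) == int(right) else 0.0

# Toy trajectory for computing 2 + 3 + 4 + 1 (reference answer: 10).
steps = ["2 + 3 = 5", "5 + 4 = 10", "10 + 1 = 11"]

orm = outcome_reward("11", "10")               # only says the answer is wrong
prm = process_rewards(steps, toy_step_scorer)  # flags the faulty second step
```

In this sketch the outcome reward reports only that the trajectory failed, while the per-step scores point at the step that contains the mistake.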

The survey highlights the importance of test-time scaling, where more tokens during inference can significantly boost reasoning accuracy, and the significance of OpenAI’s o1 in proving the value of combined train-time and test-time scaling. They review recent open-source projects aimed at building o1-like reasoning models, and they also mention three training-free test-time enhancing methods: verbal reinforcement search, memory-based reinforcement, and agentic system search.

Overall, this survey summarizes this increasingly active AI research area well, covering the techniques for LLM reasoning as well as the open challenges and future research directions.

DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning

In our prior article on the release of the DeepSeek-R1 model, we also covered DeepSeek’s concurrent publication of their technical report on R1, “DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning.”

As a recap: The R1 training pipeline interleaves Supervised Fine-Tuning (SFT) and RL-based fine-tuning, both to train the model for reasoning and to maintain its performance on other tasks. The RL steps used GRPO (Group Relative Policy Optimization) with rule-based rewards for reasoning, and used neither Monte Carlo Tree Search (MCTS) nor a Process Reward Model (PRM); both decisions simplify the pipeline.
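As a rough illustration of how a rule-based reward and a group-relative advantage fit together, here is a minimal sketch. The tag names, reward weights, and function names are illustrative assumptions rather than DeepSeek's implementation; it shows only the idea of scoring a group of sampled completions with simple rules and normalizing each reward against the group's mean and standard deviation, so that no learned value function is needed.

```python
import re
from statistics import mean, pstdev
from typing import List

def rule_based_reward(completion: str, reference: str) -> float:
    """Toy rule-based reward: a format check plus an exact-match answer check."""
    has_format = re.search(r"<think>.*?</think>", completion, re.DOTALL) is not None
    match = re.search(r"<answer>(.*?)</answer>", completion, re.DOTALL)
    is_correct = match is not None and match.group(1).strip() == reference
    # The 0.5 / 1.0 weights are assumptions chosen for illustration.
    return 0.5 * has_format + 1.0 * is_correct

def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Normalize each reward against the mean and std of its own sample group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]

# Four hypothetical completions sampled for the same prompt ("What is 6 * 7?").
group = [
    "<think>6*7=42</think><answer>42</answer>",  # correct, well formatted
    "<think>6*7=36</think><answer>36</answer>",  # well formatted, wrong answer
    "<answer>42</answer>",                       # correct, missing reasoning tags
    "no tags at all",                            # neither
]
rewards = [rule_based_reward(c, "42") for c in group]
advantages = group_relative_advantages(rewards)
```

Completions that beat the group average receive positive advantages and are reinforced; those below it are discouraged. The full GRPO objective also includes a clipped policy ratio and a KL penalty, which this sketch omits.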

The DeepSeek-R1 training pipeline is transparent and simple enough for other AI labs to replicate.

Kimi k1.5: Scaling Reinforcement Learning with LLMs

“Scaling reinforcement learning (RL) unlocks a new axis for the continued improvement of artificial intelligence, with the promise that large language models (LLMs) can scale their training data by learning to explore with rewards.” - Kimi k1.5 Technical Report

Another Chinese AI lab, Moonshot AI, has come out with a remarkably good AI reasoning model. They presented their model in “Kimi k1.5: Scaling Reinforcement Learning with LLMs,” sharing the technical details on how Kimi k1.5 was trained.

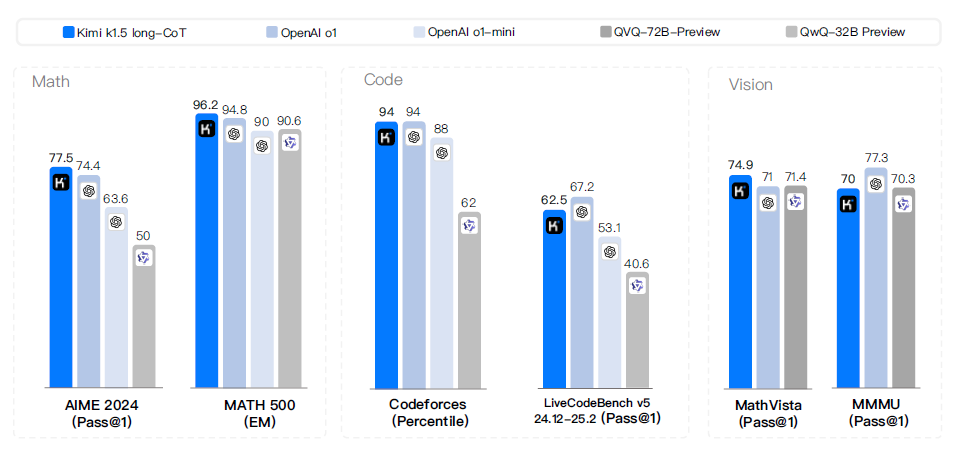

The model itself achieves excellent results on AI reasoning benchmarks, on par with OpenAI o1. It is also a multimodal model, trained jointly on text and vision data so that it can reason across both modalities.

Key elements of the training of Kimi k1.5, as described in the technical report:

• Long context scaling. We scale the context window of RL to 128k and observe continued improvement of performance with an increased context length.

• Improved policy optimization. We derive a formulation of RL with long-CoT and employ a variant of online mirror descent for robust policy optimization.

• Simplistic Framework. Long context scaling, combined with the improved policy optimization methods, establishes a simplistic RL framework for learning with LLMs. … strong performance can be achieved without relying on more complex techniques such as Monte Carlo tree search, value functions, and process reward models.

The training process itself involves three-stage post-training for AI reasoning: an initial supervised fine-tuning (SFT), followed by long-CoT supervised fine-tuning, and finally reinforcement learning (RL).

Their training approach bears marked similarities to the approach used in DeepSeek R1. They use an initial SFT step to control the AI model's performance, then apply a simpler RL framework built on direct and robust policy optimization. This lets them improve reasoning without complex search techniques or process reward models (PRMs).

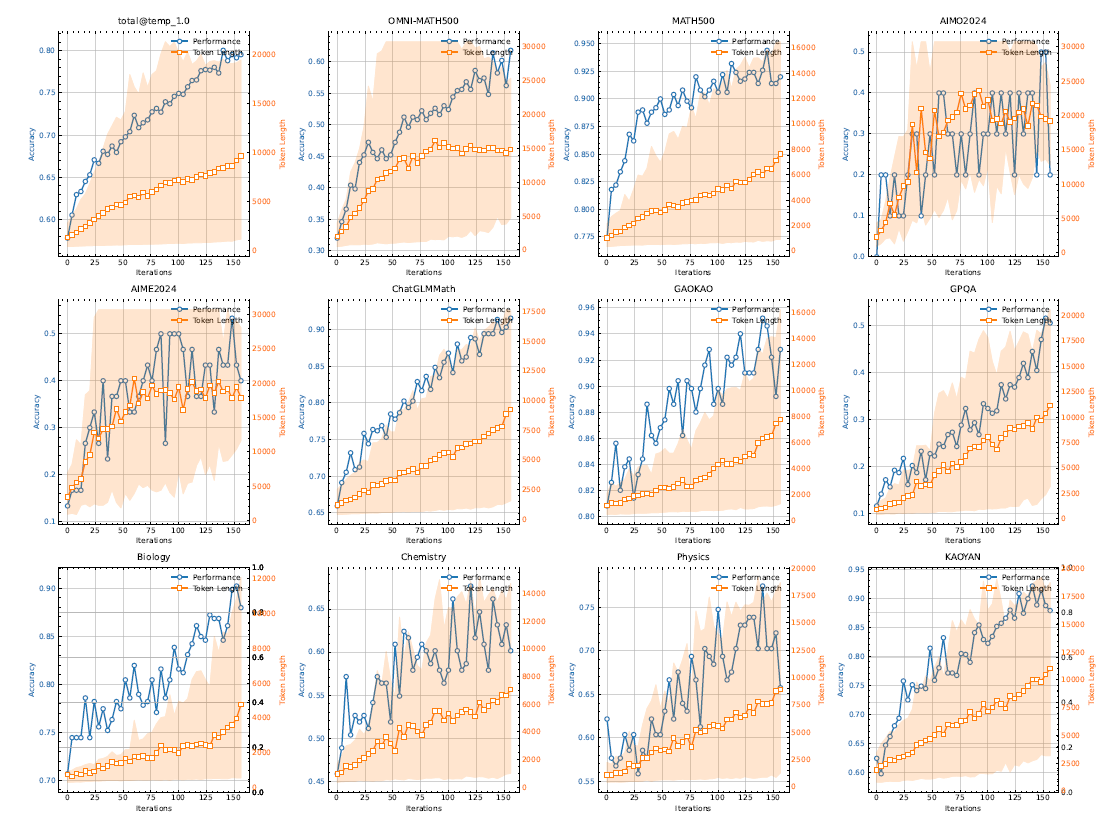

They are able to get strong results from this simpler RL approach because the search and reasoning behavior the AI model needs emerges naturally from the RL-directed optimization. This is similar to how the AlphaZero model learned to play Go through self-play. Reasoning performance improves as RL iterations increase, as shown in the figure above.

Unfortunately, Kimi k1.5 has not been released as an open-source AI model or made available for public use, so its actual performance cannot yet be verified. Still, the methods used suggest that general RL approaches, combined with the right policy optimization methods, may be sufficient to reach o1-level AI reasoning performance.

Mind Evolution: Evolving Deeper LLM Thinking

The paper “Evolving Deeper LLM Thinking” from Google DeepMind presents Mind Evolution, an approach to problem-solving with LLMs that uses evolutionary search to scale inference-time computation.

The Mind Evolution approach leverages an LLM to iteratively generate, recombine, and refine candidate solutions, guided by feedback from a solution evaluator. Unlike traditional methods like Best-of-N, which search broadly for independent solutions, Mind Evolution combines broad exploration with deep refinement, optimizing solutions globally rather than stepwise.

The results show Mind Evolution's superior performance on planning tasks such as travel planning:
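The generate, recombine, and refine loop can be sketched on a toy problem. This is a heavily simplified illustration, not the paper's implementation: the LLM calls are replaced by hypothetical random stand-ins (`propose`, `recombine`, `refine`), the "plan" is a list of digits, and the evaluator is a simple comparison against a hidden target that returns both a score and feedback on which slots are wrong.

```python
import random
from typing import List, Tuple

# Hidden "correct plan"; only the evaluator sees it (toy stand-in for task constraints).
TARGET = [3, 1, 4, 1, 5, 9, 2, 6]

def evaluate(candidate: List[int]) -> Tuple[int, List[int]]:
    """Return (score, feedback): minus the number of wrong slots, and their indices."""
    wrong = [i for i, (c, t) in enumerate(zip(candidate, TARGET)) if c != t]
    return -len(wrong), wrong

def score(candidate: List[int]) -> int:
    return evaluate(candidate)[0]

def propose(rng: random.Random) -> List[int]:
    """Stand-in for the LLM drafting a fresh candidate solution."""
    return [rng.randint(0, 9) for _ in TARGET]

def recombine(a: List[int], b: List[int], rng: random.Random) -> List[int]:
    """Stand-in for the LLM merging two parent solutions (one-point crossover)."""
    cut = rng.randrange(1, len(a))
    return a[:cut] + b[cut:]

def refine(candidate: List[int], rng: random.Random) -> List[int]:
    """Stand-in for the LLM revising a solution using evaluator feedback."""
    _, wrong = evaluate(candidate)
    if not wrong:
        return candidate
    revised = list(candidate)
    revised[rng.choice(wrong)] = rng.randint(0, 9)  # retry one flagged slot
    return revised

def evolve(pop_size: int = 16, generations: int = 200, seed: int = 0):
    rng = random.Random(seed)
    population = [propose(rng) for _ in range(pop_size)]
    history = []  # best score seen at the start of each generation
    for _ in range(generations):
        population.sort(key=score, reverse=True)
        history.append(score(population[0]))
        if history[-1] == 0:  # evaluator reports no remaining violations
            break
        parents = population[: pop_size // 2]  # keep the fitter half unchanged
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            children.append(refine(recombine(a, b, rng), rng))
        population = parents + children
    best = max(population, key=score)
    history.append(score(best))
    return best, history

best, history = evolve()
```

Because the fitter half of each generation is carried over unchanged, the best score never decreases. The contrast with Best-of-N is that candidates are not independent: each new candidate builds on earlier ones and on evaluator feedback about the whole solution.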

Controlling for inference cost, we find that Mind Evolution significantly outperforms other inference strategies such as Best-of-N and Sequential Revision in natural language planning tasks. In the TravelPlanner and Natural Plan benchmarks, Mind Evolution solves more than 98% of the problem instances using Gemini 1.5 Pro without the use of a formal solver.

This work demonstrates the potential of evolutionary search in optimizing LLM performance in complex, real-world scenarios.

The Lessons of Developing Process Reward Models in Mathematical Reasoning

The paper “The Lessons of Developing Process Reward Models in Mathematical Reasoning” addresses challenges in developing Process Reward Models (PRMs) for mathematical reasoning in LLMs. PRMs aim to improve the AI reasoning process by rewarding correct reasoning steps. However, PRMs face challenges in proper data annotation and evaluation accuracy.

The study identifies limitations in using Monte Carlo (MC) estimation for data annotation, which often leads to inferior performance compared to LLM-as-a-judge and human annotation methods. The authors attribute this to MC estimation's reliance on completion models, which can produce correct answers from incorrect steps, thereby introducing noise.
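To make the source of this noise concrete, here is a minimal sketch of MC-style step annotation. The `toy_rollout` function is a hypothetical canned stand-in for a completion model, and the soft/hard labeling rules are common conventions assumed for illustration: each step is labeled by rolling out completions from the prefix ending at that step and checking how many reach the reference answer.

```python
from typing import Callable, List

def mc_soft_labels(
    steps: List[str],
    rollout: Callable[[List[str], int], List[str]],
    reference: str,
    k: int = 4,
) -> List[float]:
    """Label each step by the fraction of k rollouts from its prefix that end correctly."""
    labels = []
    for i in range(1, len(steps) + 1):
        answers = rollout(steps[:i], k)
        labels.append(sum(a == reference for a in answers) / k)
    return labels

def to_hard_labels(soft: List[float]) -> List[int]:
    """Hard label: a step counts as correct if any rollout reached the right answer."""
    return [1 if p > 0 else 0 for p in soft]

def toy_rollout(prefix: List[str], k: int) -> List[str]:
    """Hypothetical completion model returning canned final answers per prefix length."""
    canned = {
        1: ["10", "10", "10", "10"],  # clean prefix: always finishes correctly
        2: ["10", "11", "10", "11"],  # wrong step, but the model sometimes recovers
        3: ["11", "11", "11", "11"],  # the wrong final answer is already written
    }
    return canned[len(prefix)][:k]

# Toy trajectory for 2 + 3 + 4 + 1 (reference answer: 10); step 2 is wrong.
steps = ["2 + 3 = 5", "5 + 4 = 10", "10 + 1 = 11"]
soft = mc_soft_labels(steps, toy_rollout, reference="10")
hard = to_hard_labels(soft)
```

In this toy case the second step is arithmetically wrong, yet it receives a positive hard label because the completion model recovers the correct answer in some rollouts. That mislabeling is the kind of annotation noise described above.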

The paper also highlights biases in Best-of-N (BoN) evaluation strategies, where unreliable policy models generate responses with correct answers but flawed processes, misaligning BoN evaluation with PRM objectives.
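A small sketch shows why answer-level BoN accuracy can hide process errors. The responses, step scores, and aggregation rules below are made-up illustrations, not data or methods from the paper: BoN picks the response with the highest aggregated PRM score, and two responses with the same final answer can differ in whether their reasoning is sound.

```python
from typing import Callable, Dict, List

def best_of_n(responses: List[Dict], aggregate: Callable[[List[float]], float]) -> Dict:
    """Pick the response whose aggregated per-step PRM score is highest."""
    return max(responses, key=lambda r: aggregate(r["step_scores"]))

# Hypothetical candidates for one problem whose reference answer is "10".
responses = [
    {"answer": "10", "step_scores": [0.9, 0.2, 0.8]},   # right answer, flawed middle step
    {"answer": "10", "step_scores": [0.9, 0.8, 0.7]},   # right answer, sound process
    {"answer": "11", "step_scores": [0.9, 0.85, 0.3]},  # wrong answer
]

picked_by_min = best_of_n(responses, min)                # judges the weakest step
picked_by_last = best_of_n(responses, lambda s: s[-1])   # judges only the final step
```

Both selections return the answer "10", so answer-level BoN accuracy treats them as equally good, even though scoring only the last step picks the response with a flawed intermediate step. Step-level metrics are needed to tell the two apart.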

Their solution to these PRM challenges:

To address these challenges, we develop a consensus filtering mechanism that effectively integrates MC estimation with LLM-as-a-judge and advocates a more comprehensive evaluation framework that combines response-level and step-level metrics. Based on the mechanisms, we significantly improve both model performance and data efficiency in the BoN evaluation and the stepwise error identification task.
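One plausible reading of consensus filtering can be sketched as follows. The data layout and field names are hypothetical, and the agreement rule (both annotators must locate the first erroneous step in the same place) is an assumption made for illustration: a training sample is kept only when MC estimation and the LLM judge agree.

```python
from typing import Dict, List, Optional

def first_error_from_mc(hard_labels: List[int]) -> Optional[int]:
    """Index of the first step MC estimation marks as wrong, or None if all pass."""
    for i, label in enumerate(hard_labels):
        if label == 0:
            return i
    return None

def consensus_filter(samples: List[Dict]) -> List[Dict]:
    """Keep samples where MC estimation and the LLM judge agree on the first error."""
    return [
        s for s in samples
        if first_error_from_mc(s["mc_hard_labels"]) == s["judge_first_error"]
    ]

# Hypothetical annotations; judge_first_error is the step index reported by the judge.
samples = [
    {"id": "a", "mc_hard_labels": [1, 1, 0], "judge_first_error": 2},     # agree: keep
    {"id": "b", "mc_hard_labels": [1, 1, 0], "judge_first_error": 1},     # disagree: drop
    {"id": "c", "mc_hard_labels": [1, 1, 1], "judge_first_error": None},  # both clean: keep
]
kept_ids = [s["id"] for s in consensus_filter(samples)]
```

Filtering this way trades dataset size for label quality: the sample on which the two annotators disagree is discarded rather than trusted to either one.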

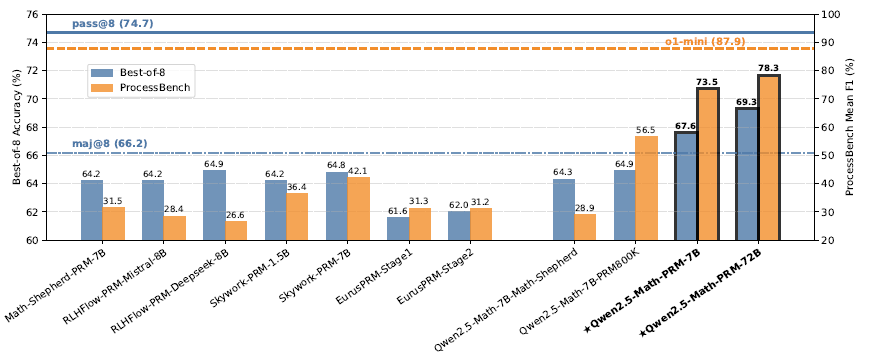

The authors demonstrate improved model performance and data efficiency using their consensus filtering mechanism and release a new state-of-the-art PRM, "Qwen2.5-Math-PRM," which outperforms existing open-source alternatives.

The study provides practical guidelines for future research in building better PRM-based AI reasoning models, and it shows the importance of stringent data filtering and comprehensive evaluation strategies when using Process Reward Models.