AI Research Review 25.04.25 – DAM and Reasoning

Describe Anything Model (DAM), Reasoning Without Thinking, Test-Time Reinforcement Learning (TTRL), Genius - Unsupervised Self-Training for Reasoning.

Introduction

This AI research update covers Nvidia’s Describe Anything Model (DAM) and three papers on making AI reasoning cheaper at test time and trainable without labeled data:

Nvidia’s Describe Anything Model (DAM)

Reasoning Models Can Be Effective Without Thinking

TTRL: Test-Time Reinforcement Learning

Genius: A Generalizable and Purely Unsupervised Self-Training Framework for Advanced Reasoning

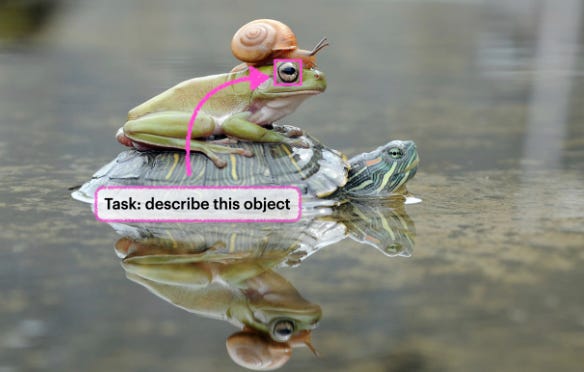

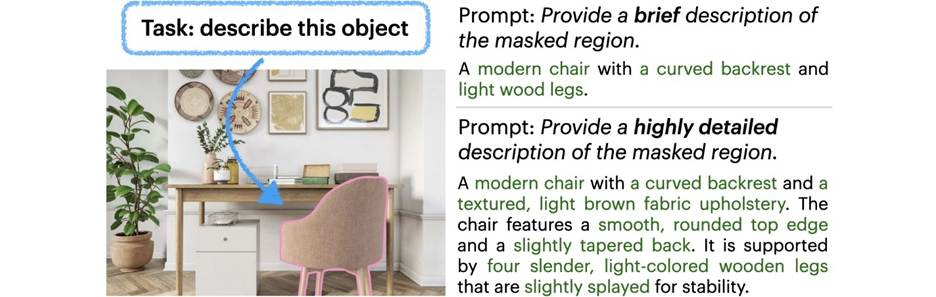

Describe Anything: Detailed Localized Image and Video Captioning

Nvidia released Describe Anything Model (DAM-3B), a 3B parameter multimodal LLM designed for detailed, localized image and video captioning by processing user-specified regions (points, boxes, scribbles, or masks) and generating descriptive text. They shared technical details in the paper “Describe Anything: Detailed Localized Image and Video Captioning.”

DAM uses focal prompting to combine a high-resolution crop of the selected region with global context, and gated cross-attention to blend regional and scene-level features. Together these enable precise, context-rich descriptions of the selected region or item.
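As a rough illustration of the cropping half of focal prompting, the sketch below expands a user-selected box around its center and clamps it to the image, so the crop keeps some surrounding context. The function name `focal_crop_box` and the default `scale` of 3.0 are assumptions made for this example, not values taken from the DAM code.

```python
from typing import Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def focal_crop_box(region: Box, image_size: Tuple[int, int], scale: float = 3.0) -> Box:
    """Expand `region` around its center by `scale`, clamped to the image bounds.

    Illustrative only: the expansion factor is an assumed parameter.
    """
    left, top, right, bottom = region
    width, height = image_size
    center_x, center_y = (left + right) / 2, (top + bottom) / 2
    half_w = (right - left) * scale / 2
    half_h = (bottom - top) * scale / 2
    return (
        max(0, int(center_x - half_w)),
        max(0, int(center_y - half_h)),
        min(width, int(center_x + half_w)),
        min(height, int(center_y + half_h)),
    )
```

The model would then see both the full image and this crop. How the two are encoded and fused is the job of the gated cross-attention, which this sketch does not cover.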

To address the scarcity of high-quality detailed localized captioning (DLC) data, the authors propose a semi-supervised learning (SSL)-based data pipeline, DLC-SDP. It starts from existing segmentation datasets and uses SSL to expand to unlabeled web images.

The authors also introduce DLC-Bench, a benchmark designed to evaluate DLC without relying on reference captions, using predefined positive and negative attributes for each region to assess caption quality.
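To make the reference-free idea concrete, here is a minimal sketch of scoring a caption against positive and negative attributes. The scoring rule (fraction of attributes handled correctly) and the substring-based `keyword_judge` are assumptions for illustration. A real evaluation would need a much stronger judge than keyword matching, and the exact DLC-Bench scoring rule may differ.

```python
from typing import Callable, Sequence


def keyword_judge(caption: str, attribute: str) -> bool:
    """Hypothetical stand-in for a judge: case-insensitive substring match."""
    return attribute.lower() in caption.lower()


def score_caption(
    caption: str,
    positives: Sequence[str],
    negatives: Sequence[str],
    judge: Callable[[str, str], bool] = keyword_judge,
) -> float:
    """Return the fraction of attributes the caption handles correctly.

    A positive attribute counts when it is mentioned; a negative attribute
    counts when it is NOT mentioned (the caption avoided that error).
    """
    total = len(positives) + len(negatives)
    if total == 0:
        return 0.0
    mentioned_positives = sum(judge(caption, attr) for attr in positives)
    avoided_negatives = sum(not judge(caption, attr) for attr in negatives)
    return (mentioned_positives + avoided_negatives) / total
```

Because the score depends only on the attribute lists, a caption can be long or short and phrased in any way, which is the point of dropping reference captions.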

DAM achieves state-of-the-art results on seven benchmarks spanning keyword-level, phrase-level, and detailed multi-sentence localized image and video captioning, demonstrating its ability to generate informative and precise descriptions.

The paper details the model architecture, the training data curation, and the benchmark evaluation methods.

Nvidia has released the code, model, data, and the DLC-Bench evaluation suite as open source on GitHub and Hugging Face, along with interactive demos for hands-on exploration.

Reasoning Models Can Be Effective Without Thinking

AI reasoning is remarkably effective, but scaling test-time compute is expensive, since every extra thinking token adds cost. Researchers are now exploring ways to make the reasoning process more efficient. One striking result is that you can ‘fake’ it and leave out test-time thinking tokens entirely!

The paper "Reasoning Models Can Be Effective Without Thinking" challenges the assumption that reasoning models must output explicit reasoning tokens. The authors introduce "NoThinking," a method that bypasses the explicit chain of thought (CoT) by prefilling a fabricated "Thinking" block and prompting the model to generate the final solution directly. As they report:

Using the state-of-the-art DeepSeek-R1-Distill-Qwen, we find that bypassing the thinking process via simple prompting, denoted as NoThinking, can be surprisingly effective. When controlling for the number of tokens, NoThinking outperforms Thinking across a diverse set of seven challenging reasoning datasets--including mathematical problem solving, formal theorem proving, and coding--especially in low-budget settings, e.g., 51.3 vs. 28.9 on AMC 23 with 700 tokens.
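The prefilling trick can be sketched as plain string construction. The `<think>` and `</think>` markers and the wording of the dummy thought below are assumptions for illustration. The real delimiters depend on the model's chat template, and the exact filler text should be checked against the paper.

```python
# Assumed markers and filler text; adjust to the model's actual chat template.
THINK_OPEN = "<think>"
THINK_CLOSE = "</think>"
DUMMY_THOUGHT = "Okay, I think I have finished thinking."


def build_prompt(formatted_question: str, thinking: bool = True) -> str:
    """Return the text the model is asked to continue.

    With thinking=True the model starts inside an open thinking block and
    writes its own chain of thought. With thinking=False the block is
    prefilled and already closed, so generation starts at the final solution.
    """
    prompt = f"{formatted_question}\n{THINK_OPEN}\n"
    if not thinking:
        prompt += f"{DUMMY_THOUGHT}\n{THINK_CLOSE}\n"
    return prompt
```

Calling `build_prompt(question, thinking=False)` and passing the result to any text-completion endpoint gives the NoThinking variant. The model weights and decoding settings stay the same.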

The effectiveness of NoThinking increases with parallel scaling, where multiple independent outputs are generated and aggregated using task-specific verifiers or best-of-N strategies. This parallel approach achieves comparable or better accuracy with significantly reduced latency, up to 9 times faster than Thinking methods.
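A minimal sketch of the aggregation step, assuming the N answers have already been sampled in parallel: use a task-specific verifier when one exists, and otherwise fall back to a majority vote. The fallback rule and the function name `aggregate` are assumptions made for this example. The paper's selection strategies for tasks without a verifier may differ.

```python
from collections import Counter
from typing import Callable, Optional, Sequence


def aggregate(
    answers: Sequence[str],
    verifier: Optional[Callable[[str], bool]] = None,
) -> str:
    """Pick one answer from N independently sampled outputs."""
    if not answers:
        raise ValueError("need at least one sampled answer")
    if verifier is not None:
        # Return the first sample the verifier accepts (e.g. a proof checker
        # or a unit-test runner for code).
        for answer in answers:
            if verifier(answer):
                return answer
    # No verifier, or nothing verified: take the most common answer.
    return Counter(answers).most_common(1)[0][0]
```

Since the samples are independent, wall-clock latency is roughly that of one short generation, not of N sequential ones, which is where the latency advantage comes from.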

The study suggests that lengthy thinking processes might not always be essential for strong reasoning performance. This challenges the conventional reliance on extensive chain-of-thought in reasoning models and offers a competitive baseline for achieving high accuracy with low latency and token usage.

TTRL: Test-Time Reinforcement Learning

The paper "TTRL: Test-Time Reinforcement Learning" presents a method that uses reinforcement learning (RL) to train LLMs on reasoning tasks using unlabeled test data. The central challenge is estimating rewards during inference when ground-truth labels are unavailable. TTRL addresses it with Test-Time Scaling (TTS) practices such as majority voting, which yield reward signals effective enough to drive RL training.

TTRL operates by repeatedly sampling outputs from the LLM given a prompt, then using majority voting on these outputs to estimate a pseudo-label. This pseudo-label is then used to compute rule-based rewards, which guide the RL training process. The RL objective is to maximize the expected reward, and the model parameters are updated through gradient ascent.
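The reward estimation step described above can be sketched in a few lines. This assumes the final answers have already been extracted from each sampled output as strings, and it uses a binary reward (1 for agreeing with the majority, 0 otherwise) as an assumed form of the rule-based reward. The RL update itself is omitted.

```python
from collections import Counter
from typing import List, Tuple


def majority_vote_rewards(answers: List[str]) -> Tuple[str, List[float]]:
    """Estimate a pseudo-label by majority vote and score each sample against it.

    `answers` holds the extracted final answers of N sampled outputs for one
    prompt. No ground-truth label is used anywhere.
    """
    if not answers:
        raise ValueError("need at least one sampled answer")
    pseudo_label = Counter(answers).most_common(1)[0][0]
    rewards = [1.0 if answer == pseudo_label else 0.0 for answer in answers]
    return pseudo_label, rewards


# Example: five samples for one unlabeled test question.
label, rewards = majority_vote_rewards(["42", "41", "42", "42", "7"])
```

These per-sample rewards would then feed a standard policy-gradient style update, so samples that agree with the consensus are reinforced.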

The authors' experiments show that TTRL consistently improves performance across a variety of tasks and models. Notably, it boosts the pass@1 performance of Qwen-2.5-Math-7B by approximately 159% on AIME 2024 using only unlabeled test data. Although TTRL is supervised only by the Maj@N metric, it consistently surpasses the upper limit of the initial model and approaches the performance of models trained directly on test data with ground-truth labels.

This approach builds on the ideas in Test-Time Training (TTT), published last November. It also points toward self-learning, i.e., RL from unsupervised (unlabeled) data, as well as test-time learning. As the authors conclude:

We view TTRL as a preliminary step toward RL with self-labeled rewards, marking an important direction of learning from continuous streams of experience.

Code for TTRL is available on GitHub.

Genius: A Generalizable and Purely Unsupervised Self-Training Framework for Advanced Reasoning

The paper "Genius: A Generalizable and Purely Unsupervised Self-Training Framework for Advanced Reasoning" introduces an approach to enhancing reasoning skills in LLMs without relying on labeled data or external reward models. Like TTRL, Genius addresses the limitations of existing post-training techniques that depend on labeled data and supervision, which can be costly and difficult to scale.

Genius employs a self-supervised training paradigm where the LLM generates responses to general queries, evaluates these responses, and then optimizes itself based on the evaluation. The framework introduces a stepwise foresight re-sampling strategy to explore potential steps in the reasoning process and identify the optimal ones. This involves simulating future outcomes to estimate the value of each step.
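The foresight idea can be illustrated with a simplified sketch: score each candidate step by averaging the scores of a few simulated continuations, then re-sample a step in proportion to a softmax over those scores. The `rollout_score` callback, the number of rollouts, and the temperature are hypothetical placeholders. In a real setup the score would come from the model itself, and the paper's exact scoring and sampling rules may differ.

```python
import math
import random
from typing import Callable, List, Sequence


def foresight_scores(
    candidates: Sequence[str],
    rollout_score: Callable[[str], float],
    num_rollouts: int = 4,
) -> List[float]:
    """Average the scores of simulated continuations for each candidate step."""
    return [
        sum(rollout_score(step) for _ in range(num_rollouts)) / num_rollouts
        for step in candidates
    ]


def softmax(scores: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax over the foresight scores."""
    peak = max(scores)
    exps = [math.exp((s - peak) / temperature) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def resample_step(
    candidates: Sequence[str],
    scores: Sequence[float],
    rng: random.Random,
    temperature: float = 1.0,
) -> str:
    """Sample one step, favoring candidates with higher foresight scores."""
    weights = softmax(scores, temperature)
    return rng.choices(list(candidates), weights=weights, k=1)[0]
```

Sampling, as opposed to always taking the top-scoring step, keeps some exploration in the search. A lower `temperature` makes the choice closer to greedy.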

To mitigate the inherent noise and uncertainty in the unsupervised setting, the authors propose an advantage-calibrated optimization (ACO) loss function. ACO penalizes inconsistencies between the foresight score and the step advantage, leading to more robust optimization.

The effectiveness of Genius is demonstrated through experiments on diverse reasoning benchmarks, showing significant performance improvements over baseline methods. For example, both Llama 3.1 8B and Qwen 2.5 7B improved by 6 points on AIME 2024 over the baseline with this method.

The authors present Genius, with these techniques combined, as an initial step toward LLMs that self-improve their reasoning from general queries and without supervision. They argue that the vast availability of general queries could change how reasoning performance scales.

Since Genius can effectively leverage general queries to enhance LLM reasoning abilities, this paves the way for more scalable and efficient post-training techniques. The code is available on GitHub.