AI Research Roundup 24.08.02

SAM2: Segment Anything in Images and videos, AFM: Apple Foundation Models, Meltemi: Greek LLMs. SeaLLMs 3: Southeast Asia language LLMs, SaulLM-54B & SaulL: LLMs for legal domain.

Introduction

Our AI research highlights for this week show that there’s a robust and healthy ecosystem of open AI models, with multi-lingual open models (Meltemi, SeaLLMs 3), legal open LLM (SaulLM), and Meta’s SAM 2 vision segmenting AI model:

SAM 2: Segment Anything in Images and Videos

AFM: Apple Intelligence Foundation Language Models

Meltemi: The first open Large Language Model for Greek

SeaLLMs 3: Open Foundation and Chat Multilingual Large Language Models for Southeast Asian Languages

SaulLM-54B & SaulLM-141B: Scaling Up Domain Adaptation for the Legal Domain

SAM 2: Segment Anything in Images and Videos

Meta released SAM 2, a visual segmentation model for video and images that sets a new state-of-the-art. Some of the key features that makes SAM 2 an important release:

Single fast, accurate model: SAM 2 is a single model for image and video that improves on Meta’s SAM for image segmentation and is 6x faster. SAM 2 has strong zero-shot performance for objects, images and videos not previously seen during model training.

Excellent video performance: SAM 2 outperforms existing video object segmentation models. Its efficient video processing enables fast inference for real-time, interactive results.

Simple object selection: Using SAM 2, you can select one or multiple objects in a video frame, track an object across any video interactively with as little as a single click, and use additional prompts to refine the model predictions.

This has a host of uses for classifying, understanding and manipulating images and video, such as creating fun effects. Users can try the demo.

Meta describes the SAM 2 model training and evaluations in the paper SAM 2: Segment Anything in Images and Videos. The model is a transformer architecture with streaming memory for real-time video processing:

The SAM 2 model extends the promptable capability of SAM to the video domain by adding a per session memory module that captures information about the target object in the video. This allows SAM 2 to track the selected object throughout all video frames, even if the object temporarily disappears from view, as the model has context of the object from previous frames. SAM 2 also supports the ability to make corrections in the mask prediction based on additional prompts on any frame.

There are many innovations that make SAM 2 state-of-the-art, but the biggest contributor is that SAM 2 was trained on a large set of videos and masklets (object masks over time) using “the largest video segmentation dataset to date,” called SA-V. Meta is open sourcing the SA-V dataset, which consists of consists of 51K diverse videos and 643K masklets. Users can explore the dataset here.

Apple Intelligence Foundation Language Models

Apple introduced their Apple Intelligence AI models at their June WWDC, and we noted then that Apple’s adaptive AI models that can run locally may be a “great strategy.”

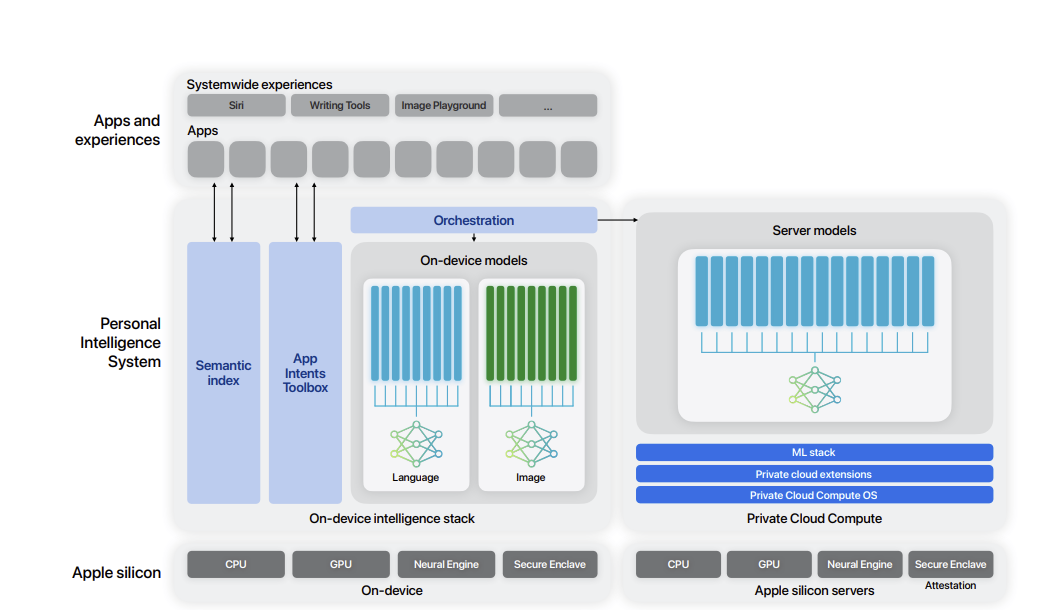

The paper Apple Intelligence Foundation Language Models shares technical details on Apple’s approach to building what they call AFM, Apple Foundation Models, which consist of two types of models:

AFM-on-device is a ~3B parameter model optimized for on-device deployment, while AFM-server is larger and server-based.

Both AFM-server and AFM-on-device are transformer models, with shared input/output embeddings, pre-normalization, query/key normalization, and grouped query attention for efficiency. RoPE positional embeddings are used for long-context support.

AFM-server is trained on 6.3T tokens from “high-quality publicly-available datasets” consisting of web, code, math and other data. To speed efficiency of training AFM-on-device, they use knowledge distillation and pruning, so they don’t have to start from scratch. Post-training uses human annotations and synthetic data to instill instruction following and perform RLHF for alignment.

While Apple’s AI model training process isn’t unique, their task-specific adapters added to the AFMs are a distinctive ecosystem suited for local deployment. These adapters use rank decomposition (LoRA) for efficiency, and are added on top of the AFM models to specialize them for users' everyday tasks while keeping the base model frozen.

It’s encouraging to see Apple sharing information on their AFM suite. However, the latest news is Apple Intelligence has been delayed, so we will have to wait to assess how well Apple’s AI model suite really performs.

Meltemi and SeaLLMs: LLMs for Greek and Southeast Asian Languages

The English-speaking world is privileged to have most LLMs based on a large corpus of internet and other data that is dominated by English. There are efforts to serve other languages: Leading LLMs such as Gemini 1.5 Pro and Mistral Large 2 have incorporated multi-lingual understanding; Chinese AI model makers serve up Chinese and bi-lingual LLMs.

There are still gaps for LLMs for other languages. Two recent papers show a ways to build languages for serve specific ‘low resource’ languages.

Our first example is Meltemi, The first open Large Language Model for Greek, which developed Meltemi 7B LLM as an adaptation of the Mistral 7B model.

The authors curated a corpus of 40 billion tokens of Greek corpus of text, then applied it to continuous pretraining on Mistral 7B to make Meltemi 7B. They also curated a Greek instruction corpus and used it for instruction-tuning of a chat model, named Meltemi 7B Instruct.

To evaluate its capabilities, they translated established benchmarks for language understanding and reasoning from English to Greek. Both Meltemi 7B and Meltemi 7B Instruct are on HuggingFace and are open source.

SeaLLMs 3: Multilingual LLMs for Southeast Asian Languages

The second multi-lingual LLM research effort to highlight is SeaLLMs 3: Open Foundation and Chat Multilingual Large Language Models for Southeast Asian Languages. SeaLLMs is an ongoing effort from DAMO Academy and Alibaba group.

Southeast Asia has rich linguistic diversity and many speakers, but lacks adequate language technology support:

SeaLLMs 3 aims to bridge this gap by covering a comprehensive range of languages spoken in this region, including English, Chinese, Indonesian, Vietnamese, Thai, Tagalog, Malay, Burmese, Khmer, Lao, Tamil, and Javanese.

As with Meltemi LLM, they apply continuous pre-training, but the SeaLLMs 3 model based on the Qwen2 model family, with further conducted language enhancement to augment its capability in SEA (South-East Asia) languages, through a continuous pre-training process followed by SFT. Data is translated high-quality English data to SEA languages with quality filtering, conduct self-instruction to automatically generate SFT data of certain types

They apply an approach to efficient multi-lingual support by training language specific neurons only based on observation that “certain language-specific neurons in language models are responsible for processing specific languages.” Training only language-specific neural weights and freezing others significantly reduces overall training cost, and it also ensures that overall performance on other languages remains unaffected.

Their evaluations show SeaLLMs 3 achieves high multi-lingual performance on knowledge, math and reasoning benchmarks.

“This work underscores the importance of inclusive AI, showing that advanced LLM capabilities can benefit underserved linguistic and cultural communities.” - SeaLLMs 3 authors

SaulLM-54B & SaulLM-141B: Scaling Adaptation for the Legal Domain

Researchers at Equall, a French AI legal firm developing frontier AI systems for legal work, partnering with MICS at CentraleSupélec (Université Paris-Saclay), asked the question:

How much can we improve the specialization of generic LLMs for legal tasks by scaling up both model and corpus size?

Their answer is to develop SaulLM, a family of open AI models for law. They shared further details in SaulLM-54B & SaulLM-141B: Scaling Up Domain Adaptation for the Legal Domain. SaulLM-54B and SaulLM-141B apply large-scale domain adaptation to a Mixtral base AI model (54B and 141B Mixtral models respectively) in 3 parts:

(1) the exploitation of continued pretraining involving a base corpus that includes over 540 billion of legal tokens, (2) the implementation of a specialized legal instruction-following protocol, and (3) the alignment of model outputs with human preferences in legal interpretations.

Just as we can adapt LLMs for different languages with continuous pre-training and specialized fine-tuning, we can also adapt LLMs for specific domains as well. What sets SaulLM apart is the large legal corpus, 520 billion tokens; much of it is web data with legal content, with a focus on US, UK and EU legal jurisdictions (see Figure).

SaulLM also differentiates itself with its synthetic generation of instruction data for legal instruction-following use-cases.

The base, instruct, and aligned versions on top of SaulLM-54B and SaulLM-141B are being released under the MIT License, and can be found on HuggingFace.