AI Week in Review 23.05.13

Google AI's everything, HF Transformer Agents, Claude expands context, EU regulates, Meta goes max multi-modal & more

Top AI Tools

I don’t have any Top AI Tools this week. Instead, I have the next 10,000 tools. Hugging Face’s Transformers Agents is all you need.

Hugging Face announced it on Twitter: “We just released Transformers' boldest feature: Transformers Agents. This removes the barrier of entry to machine learning. Control 100,000+ HF models by talking to Transformers and Diffusers. Fully multimodal agent: text, images, video, audio, docs...”

This makes it very easy to add tools, build agents, and reuse them all to build out the AI ecosystem. Because it provides an easy interface to thousands of models, and it’s all extensible and open source, it’s likely to become the basis of a thriving ecosystem.

AI Tech and Product Releases

This was another busy week!

As we covered in our article on PaLM 2 this week, Google made a slew of AI-related announcements at its Google I/O conference, including introducing PaLM 2 and upgrading Bard to run on it. It took Google execs saying “AI” hundreds of times, but Google I/O was a success if you go by the stock price: Alphabet’s shares jumped to their highest level since August and rallied 11% for the week. As always, there’s a (Google-engineer-created) meme for that:

Anthropic is “Introducing 100K Context Windows” for its Claude AI model. A 100K-token context holds about 75,000 words. Anthropic shared business use cases for it, such as analyzing an 85-page 10-K filing in one go, as well as this: “AssemblyAI put out a great demonstration of this where they transcribed a long podcast into 58K words and then used Claude for summarization and Q&A.”
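Anthropic’s own figures (100K tokens for about 75,000 words) work out to roughly 0.75 words per token. Below is a rough sketch of checking whether a document fits, assuming that ratio. Real token counts depend on the tokenizer and the text. The constant and function names are ours, not part of any Anthropic API.

```python
import math

# Assumed rule of thumb derived from Anthropic's figures: 100K tokens ~ 75,000 words.
# Actual token counts vary with the tokenizer and the kind of text.
WORDS_PER_TOKEN = 75_000 / 100_000
CONTEXT_TOKENS = 100_000


def estimate_tokens(word_count: int) -> int:
    """Estimate the token count for a given number of words (rounded up)."""
    return math.ceil(word_count / WORDS_PER_TOKEN)


def fits_in_context(word_count: int, reserve_tokens: int = 0) -> bool:
    """True if the estimated prompt plus tokens reserved for the reply fit."""
    return estimate_tokens(word_count) + reserve_tokens <= CONTEXT_TOKENS


# AssemblyAI's 58K-word podcast transcript, under the assumed ratio:
print(estimate_tokens(58_000))                          # 77334
print(fits_in_context(58_000))                          # True
print(fits_in_context(58_000, reserve_tokens=25_000))   # False
```

Reserving room for the model’s answer matters in practice, because the reply shares the same context window as the prompt.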

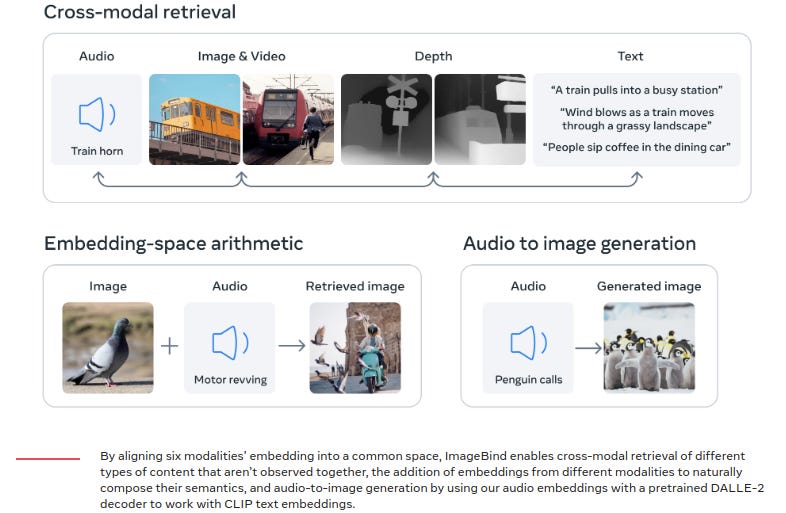

Meta’s ImageBind: Holistic AI learning across six modalities just made multi-modality go wild. “The model learns a single embedding, or shared representation space, not just for text, image/video, and audio, but also for sensors that record depth (3D), thermal (infrared radiation), and inertial measurement units (IMU), which calculate motion and position.” Because every modality lands in the same space, you can go from any modality to any other: give it an audio clip and get an image representing the same thing, and vice versa. This opens up a whole new set of possible generative AI modalities and extends multi-modal AI models into new dimensions.
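A shared embedding space means that cross-modal retrieval reduces to a nearest-neighbor search. You embed the query from one modality and find the closest item from another, for example by cosine similarity. The sketch below uses small hand-made vectors as hypothetical stand-ins for encoder outputs. It is not ImageBind’s API, and it illustrates only retrieval, not generation.

```python
import math

# Hypothetical, hand-made 3-D vectors standing in for image-encoder outputs.
# In a model like ImageBind each modality has its own encoder, but all of them
# map into one shared embedding space, so vectors are directly comparable.
image_embeddings = {
    "dog.jpg": [0.9, 0.1, 0.0],
    "beach.jpg": [0.1, 0.8, 0.3],
    "city.jpg": [0.0, 0.2, 0.9],
}


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve(query, candidates):
    """Return the key of the candidate embedding most similar to the query."""
    return max(candidates, key=lambda key: cosine(query, candidates[key]))


# Hypothetical audio embedding (say, a dog barking) used as a cross-modal query.
bark_audio = [0.85, 0.2, 0.05]
print(retrieve(bark_audio, image_embeddings))  # dog.jpg
```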

IBM is getting back in the AI game with watsonx. Once an AI leader and now a laggard, IBM is pitching a platform for building, tuning, and deploying customizable foundation AI models, along with a partnership with Hugging Face.

The latest entrant into the coding assistant space is BigCode’s StarCoder, available as an open-source model (released under an OpenRAIL license) on Hugging Face:

“StarCoder models are 15.5B parameter models trained on 80+ programming languages … it is not an instruction model and commands like "Write a function that computes the square root." do not work well. However, by using the Tech Assistant prompt you can turn it into a capable technical assistant.”

We’ve shared a ton of open-source LLM release announcements in the past two months, and the activity has been incredible. But how good are the models? Hugging Face has created an “Open LLM Leaderboard” Space to track the progress. Among the smaller (sub-20B-parameter) LLMs measured, StableVicuna 13B ranks best across the benchmarks.

AI Research News

Another way to prompt-tune LLMs: Residual Prompt Tuning: Improving Prompt Tuning with Residual Reparameterization. Residual Prompt Tuning reparameterizes the soft prompt embeddings through a shallow network with a residual connection. This simple, efficient change significantly improves the performance and stability of prompt tuning, with gains over standard prompt tuning on the SuperGLUE benchmark.
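As we read the paper, the core idea is to pass each soft-prompt embedding through a small bottleneck MLP (down-projection, ReLU, up-projection, LayerNorm) and then add the original embedding back. Below is a minimal sketch of that forward pass. The dimensions, the weights, and sharing one MLP across all prompt tokens are our own assumptions for illustration. Training, where the prompt and the MLP weights are learned from the task loss, is omitted.

```python
import math

# Sketch of residual reparameterization for soft prompts (our reading of the paper):
#   P' = LayerNorm(W_up · ReLU(W_down · P)) + P
# All sizes and weight values below are toy, hypothetical numbers.


def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def relu(v):
    """Element-wise ReLU."""
    return [max(0.0, x) for x in v]


def layer_norm(v, eps=1e-5):
    """Normalize v to zero mean and unit variance (learned scale/shift omitted)."""
    mean = sum(v) / len(v)
    var = sum((x - mean) ** 2 for x in v) / len(v)
    return [(x - mean) / math.sqrt(var + eps) for x in v]


def reparameterize(prompt_token, W_down, W_up):
    """Map one soft-prompt embedding through the bottleneck MLP, then add it back."""
    hidden = relu(matvec(W_down, prompt_token))   # down-project: 4 -> 2
    projected = layer_norm(matvec(W_up, hidden))  # up-project back: 2 -> 4
    return [r + p for r, p in zip(projected, prompt_token)]  # residual connection


# Two soft-prompt tokens with embedding size 4 and a bottleneck of size 2.
soft_prompt = [[0.5, -0.2, 0.1, 0.3], [0.0, 0.4, -0.3, 0.2]]
W_down = [[0.1, 0.2, 0.0, -0.1], [0.3, -0.1, 0.2, 0.0]]
W_up = [[0.5, 0.1], [-0.2, 0.3], [0.1, 0.1], [0.0, -0.4]]

new_prompt = [reparameterize(p, W_down, W_up) for p in soft_prompt]
print(new_prompt)
```

If `W_up` is all zeros, the function returns each prompt embedding unchanged. This shows what the residual path contributes: the MLP only adds a learned correction on top of the raw embedding.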

FrugalGPT: How to Use Large Language Models While Reducing Cost and Improving Performance. FrugalGPT implements a simple yet flexible way of calling LLMs in a cascade, using smaller and cheaper models if they are sufficient, in order to reduce cost and improve accuracy. “FrugalGPT can match the performance of the best individual LLM (e.g. GPT-4) with up to 98% cost reduction or improve the accuracy over GPT-4 by 4% with the same cost.”
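The cascade idea fits in a few lines. Query the cheapest model first, score its answer, and escalate to a pricier model only when the score falls below a threshold. Below is a minimal sketch in that spirit. The models, the scorer, the integer cost units, and the threshold are all hypothetical. The paper uses a learned scoring model and tuned thresholds rather than the hand-written rule here.

```python
# Sketch of an LLM cascade in the spirit of FrugalGPT. The models, the scorer,
# the costs (arbitrary integer units) and the threshold are hypothetical.


def cascade(query, stages, scorer, threshold=0.8):
    """Try models cheapest-first and stop at the first answer the scorer trusts.

    stages: list of (name, cost, model_fn), ordered from cheapest to priciest.
    Returns (answer, model_name, total_cost). The final stage's answer is
    accepted unconditionally, since there is nothing left to escalate to.
    """
    total_cost = 0
    for i, (name, cost, model) in enumerate(stages):
        answer = model(query)
        total_cost += cost  # every call is paid for, even if its answer is rejected
        is_last = i == len(stages) - 1
        if is_last or scorer(query, answer) >= threshold:
            return answer, name, total_cost
    raise ValueError("cascade needs at least one stage")


def small_model(query):
    # Hypothetical cheap model that only knows simple arithmetic.
    return "4" if query == "2+2" else "unsure"


def large_model(query):
    # Hypothetical expensive model with broader knowledge.
    return {"2+2": "4", "capital of France": "Paris"}.get(query, "unknown")


def toy_scorer(query, answer):
    # Hypothetical reliability score in [0, 1]; stands in for a learned scorer.
    return 0.0 if answer in ("unsure", "unknown") else 1.0


stages = [("small", 1, small_model), ("large", 30, large_model)]
print(cascade("2+2", stages, toy_scorer))                # ('4', 'small', 1)
print(cascade("capital of France", stages, toy_scorer))  # ('Paris', 'large', 31)
```

The savings come from the easy queries: whenever the cheap model’s answer is trusted, the expensive model is never called.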

Principle-Driven Self-Alignment of Language Models from Scratch with Minimal Human Supervision: “We propose a novel approach called SELF-ALIGN, which combines principle-driven reasoning and the generative power of LLMs for the self-alignment of AI agents with minimal human supervision.” The main benefit of this approach is that it trains for alignment while minimizing the overhead of human supervision and feedback.

OpenAI peeks into the “black box” of neural networks with the paper “Language models can explain neurons in language models.” OpenAI used GPT-4 to write and evaluate natural language explanations of the behavior of individual neurons in a smaller model, GPT-2, by examining and interpreting those neurons’ activations. This is helpful in making LLMs more interpretable.
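The evaluation half of that work can be pictured as follows. A simulator predicts a neuron’s activations from the explanation alone, and the explanation is scored by how well those predictions track the neuron’s real activations. The sketch below uses Pearson correlation as the match measure, which is, as we understand it, the main score in the paper. All activation values and the example explanation are invented for illustration.

```python
import math

# Sketch of scoring a neuron explanation by correlation between real and
# simulated activations. All numbers here are invented for illustration.


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length, non-constant sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    std_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    std_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (std_x * std_y)


# Hypothetical real activations of one GPT-2 neuron over six tokens.
real = [0.0, 0.1, 3.2, 0.0, 2.8, 0.2]
# Hypothetical activations a simulator predicted from a candidate explanation
# (e.g. "fires on words about dogs"), here on a 0-10 scale.
simulated = [0, 0, 10, 0, 8, 1]

score = pearson(real, simulated)
print(round(score, 3))
```

Correlation ignores scale, so the simulator only has to predict *where* the neuron fires strongly, not the exact activation magnitudes.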

AI Business News

Under the EU’s revised AI Act, AI tools will be categorized based on their perceived risk levels, ranging from low to unacceptable. The act imposes different obligations on governments and companies depending on the level of risk associated with the use of these tools. … One of the key provisions of the revised AI Act is the ban on the use of facial recognition technology in public spaces.

Not exactly news, but this article reviews how Google and OpenAI have been reining in the public sharing of their AI research as the competition to lead in AI intensifies.

Chai Research announces the Guanaco LLM Competition with a $1 Million Cash Prize, for an “open community challenge with real-user evaluations,” and with a goal of “accelerating community AGI.”

Alexis Ohanian, Reddit co-founder: The AI revolution is “bigger than the smartphone.” He said, “I have not seen anything like this before in my career.” Sorry, that’s not enough hype.

VC interest in AI is insatiable. AI2 Incubator’s new $30M fund triples down on early-stage AI startups.

AI Opinions and Articles

Mustafa Suleyman, DeepMind co-founder, warns that AI will create a ‘serious number of losers’ in job market: “Unquestionably, many of the tasks in white-collar land will look very different in the next five to 10 years.”

A Look Back …

JSTOR shares an annotated AI bibliography, made by AI: “ChatGPT generated this annotated bibliography for us. Don’t worry, we’ll ask (and pay) a human to write one too.” This list includes famous seminal works such as:

“Computing Machinery and Intelligence,” by Alan Turing (1950)

“A Proposal for the Dartmouth Summer Research Project on Artificial Intelligence,” by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon (1956)

“The Unreasonable Effectiveness of Mathematics in the Natural Sciences,” by Eugene Wigner (1960)

Perceptrons, by Marvin Minsky and Seymour Papert (1969)

Their final entry was “Generative Adversarial Networks,” by Ian J. Goodfellow et al. (2014):

This paper introduced the concept of generative adversarial networks (GANs), a type of neural network architecture that can generate new data samples that are similar to a given dataset. The authors discuss the theoretical foundations of GANs and provide several examples of their use in practice. GANs have been used in a wide range of applications, including image and video generation, and the production of deepfakes.
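The “theoretical foundations” mentioned in that annotation center on a two-player game. A generator G maps random noise z to samples. A discriminator D estimates the probability that a sample came from the real data rather than from G. For reference, here is the minimax value function from the 2014 paper:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]
```

D is trained to maximize V by telling real samples from generated ones. G is trained to minimize it by producing samples that D misclassifies as real.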

Given how important generative AI for images and audio is becoming, with profound impacts on our culture and society, it was appropriate to highlight this seminal paper.

If you liked this article, then please share it, like it, and restack it on Substack. Please share any thoughts and feedback on it with a comment.