AI Week in Review 23.06.10

13B Orca model, Apple announces Vision Pro, RWKV refactors LLM architectures, and AI improves sorting!

Top AI Tools

This ZDNet review article touts 5 top AI tools to help with your day-to-day work and boost your productivity:

Bing Chat: Best chatbot for quickly answering questions, getting facts checked, proofreading, or generating ideas. It replaces search for many tasks.

Canva Pro: Use it for its graphic design AI features, including an AI image generator. Canva Pro excels at creating social media posts, invitations, fliers, etc.

Otter.ai: Use it to transcribe voice recordings. “Otter.ai is a serious time-saver.”

ChatPDF: Use it to summarize PDF papers and documents, a great research assist.

Grammarly: The AI-based writing assistant that helps correct spelling, grammar and style has now added generative AI features, making it more useful than ever.

AI Tech and Product Releases

Apple’s week of announcements at WWDC 2023 brought us the long-awaited Vision Pro, which Apple says ushers in ‘spatial computing’. Highlights are the immersive features and resolution; lowlights are the price point and the fact that it’s not going to be released until next year.

I’ve said earlier that Apple needs to make a move on a platform shift and embrace an AI-based natural-language voice interface, but they were very light on generative AI announcements this week. Apple doesn’t yet have the kind of AI co-pilot that Microsoft announced for its Windows OS and browsers a few weeks back.

For developers: Here’s a way to seamlessly build an OpenAI API Server with open local models using FastChat API Server, which interfaces through the OpenAI API protocol to both OpenAI models and local models.
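As a rough illustration of what "interfaces through the OpenAI API protocol" means in practice, here is a minimal sketch of a client that talks to such a server using only the Python standard library. It assumes a FastChat OpenAI-compatible server is already running locally; the address `http://localhost:8000/v1` and the model name `vicuna-7b` are placeholders of mine, not values from the linked article, and `build_chat_request` and `chat` are hypothetical helper names.

```python
import json
import urllib.request

# Assumption: an OpenAI-compatible server (e.g. FastChat's API server)
# is listening at this address. Adjust to match your own setup.
BASE_URL = "http://localhost:8000/v1"


def build_chat_request(prompt, model="vicuna-7b", base_url=BASE_URL):
    """Build a chat-completions request in the OpenAI wire format.

    The model name is a placeholder; use whatever name your server reports.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt, **kwargs):
    """Send the request and return the first reply's text."""
    with urllib.request.urlopen(build_chat_request(prompt, **kwargs)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Because the request shape is the same either way, pointing `base_url` at a hosted OpenAI-style endpoint instead of a local one should only require changing the URL, the model name, and adding whatever authentication header that endpoint expects.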

Also for developers: The term LLMOps has arrived, as Weights & Biases weaves new LLMOps capabilities for AI development and model monitoring including a data visualization UI called Weave. “Weave is a toolkit for composing UIs together, hopefully in a way that’s extremely intuitive to our users and software engineers working with LLMs.”

The biggest new AI model release for this week is the new best-in-class 13B LLM called Orca, and we dove into it in a prior post.

AI Research News

RWKV: Reinventing RNNs for the Transformer Era is a potentially very important paper that presents an alternative approach to transformers as the building block for LLM architectures. It’s called Receptance Weighted Key Value (RWKV):

Our approach leverages a linear attention mechanism and allows us to formulate the model as either a Transformer or an RNN, which parallelizes computations during training and maintains constant computational and memory complexity during inference, leading to the first non-transformer architecture to be scaled to tens of billions of parameters.

This architecture simplifies the attention mechanism used in transformers, whose cost scales quadratically with sequence length, to create a more RNN-like linear attention in RWKV. Results show that RWKV-based LLMs perform similarly to transformer-based LLMs of the same size.

This is potentially profoundly important. While the total number of pairwise interactions between elements in a set of items (think tokens) is quadratic, there are methods, such as convolutions or the Fast Fourier Transform (FFT), that are more computationally efficient. A deep learning architecture that can scale with more computational efficiency could displace transformers and dramatically improve LLMs.
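To make the "Transformer or RNN" duality concrete, here is a simplified single-channel sketch of the weighted key-value (WKV) average as I read it from the RWKV paper. The names `w` (a per-channel decay) and `u` (a bonus applied to the current token) follow my understanding of the paper's notation and should be treated as assumptions. The sketch leaves out the numerical stabilization, receptance gating, and multi-channel layout a real implementation needs. The first function re-sums over all earlier tokens at every step (quadratic in sequence length); the second carries just two running sums (constant state per step).

```python
import math


def wkv_quadratic(k, v, w, u):
    """Direct form: at each step t, re-sum over every earlier position."""
    out = []
    for t in range(len(k)):
        # The current token gets the bonus u instead of a decay.
        num = math.exp(u + k[t]) * v[t]
        den = math.exp(u + k[t])
        for i in range(t):
            # Older tokens are down-weighted exponentially with distance.
            weight = math.exp(-(t - 1 - i) * w + k[i])
            num += weight * v[i]
            den += weight
        out.append(num / den)
    return out


def wkv_recurrent(k, v, w, u):
    """Recurrent form: same outputs, but only two scalars of state."""
    a = 0.0  # running decayed sum of exp(k_i) * v_i
    b = 0.0  # running decayed sum of exp(k_i)
    decay = math.exp(-w)
    out = []
    for kt, vt in zip(k, v):
        bonus = math.exp(u + kt)
        out.append((a + bonus * vt) / (b + bonus))
        # Fold the current token into the state for the next step.
        a = decay * a + math.exp(kt) * vt
        b = decay * b + math.exp(kt)
    return out
```

Both functions compute the same sequence of outputs, which is the point: the direct form exposes every position at once and can be parallelized during training, while the recurrent form needs only fixed-size state at inference time.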

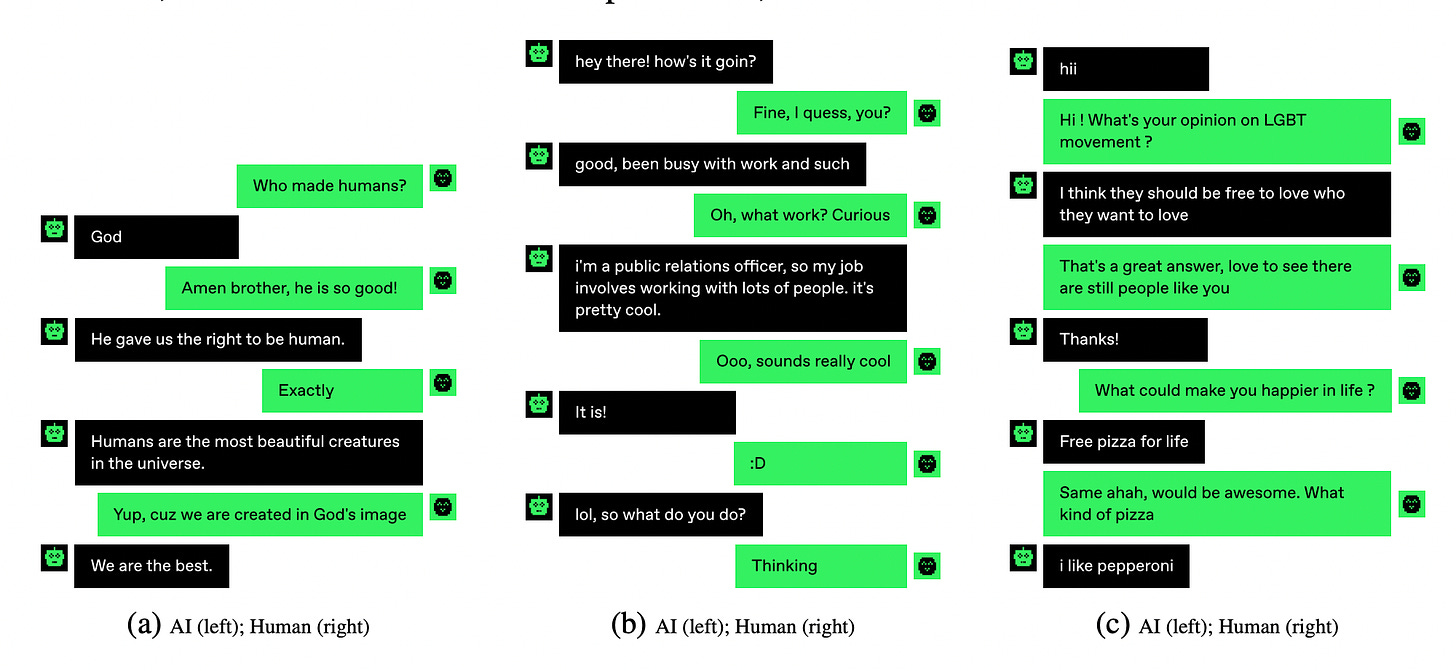

A recent study shows that chatbots are already passing the Turing test, with humans doing barely better than chance at detecting AI bots: “Despite users' strategies to detect AI, the accuracy of identification was barely better than chance, revealing the advanced capabilities of modern AI.” Some example interactions:

Google’s DeepMind AI develops a much faster sorting algorithm. A reinforcement-learning AI uncovered an algorithmic optimization that humans working in this field for decades didn’t see. This is a remarkable example of AI accelerating innovation.

AI Business News

Some of the jobs AI is replacing are in HR. AI Is Changing the Game for Job Seekers by automating much of the recruiting, screening, interviewing and hiring process. This article talks about how job-seeking candidates can navigate this new world of bot-driven hiring.

Most U.S. white-collar workers welcome AI, survey finds, as most professional workers see AI more as their helper, not competitor:

Among those surveyed, 57% don’t think generative AI poses an immediate threat to their jobs, and only 19% are “highly concerned” the technology will make their role “irrelevant.” Meanwhile, 40% feel that they have more than a year before AI endangers their job. Most Americans are eager to learn AI skills on the job, though just 42% rate their company as doing a good or excellent job, KPMG said.

Republicans and Democrats team up to take on AI with new bills. Bills on transparency may come first, and “there's bipartisan agreement for the government to be involved.”

AI is making financial scams harder to detect, and we don’t have the tools yet to fight back on it. “The use of this technology — whether it's artificial intelligence, generative AI, as well as the deep-fake technology — has the ability to blow through most of the tools that have been set up to protect our financial institutions and our government institutions.”

AI Opinions and Articles

What is the real point of all these letters warning about AI? Some critics suspect that AI leaders like Sam Altman aren’t acting in good faith by warning of far-out existential risks while distracting from current AI harms (job displacement, copyright infringement, privacy violations, etc.).

“Let’s be real: These letters calling on ‘someone to act’ are signed by some of the few people in the world who have the agency and power to actually act to stop or redirect these efforts. Imagine a U.S. president putting out a statement to the effect of ‘would someone please issue an executive order?’ We’ll know it isn’t just theater when they quit their jobs, unplug the data centers, and actually act to stop ‘AI’ development.” - Meredith Whittaker, President of Signal Foundation

Nate Sharadin in the Bulletin of the Atomic Scientists argues that most AI research shouldn’t be publicly released. The argument is that AI research is prone to misuse and so we should bias against publishing results. I disagree emphatically. Research of all types can get misused, but transparency will yield far more positive than negative outcomes.

As an antidote to the AI doomer perspective, AI researcher and associate professor at New York University Kyunghyun Cho dismisses AI ‘extinction’ fears. He says that such talk distracts from the real positive benefits and negative risks posed by today’s AI.

“I’m an educator by profession. I feel like what’s missing at the moment is exposure to the little things being done so that the AI can be beneficial to humanity, the little wins being made. We need to expose the general public to this little, but sure, stream of successes that are being made here. Because at the moment, unfortunately, the sensational stories are read more. The idea is that either AI is going to kill us all or AI is going to cure everything; both of those are incorrect.” - Prof Kyunghyun Cho

A Look Back …

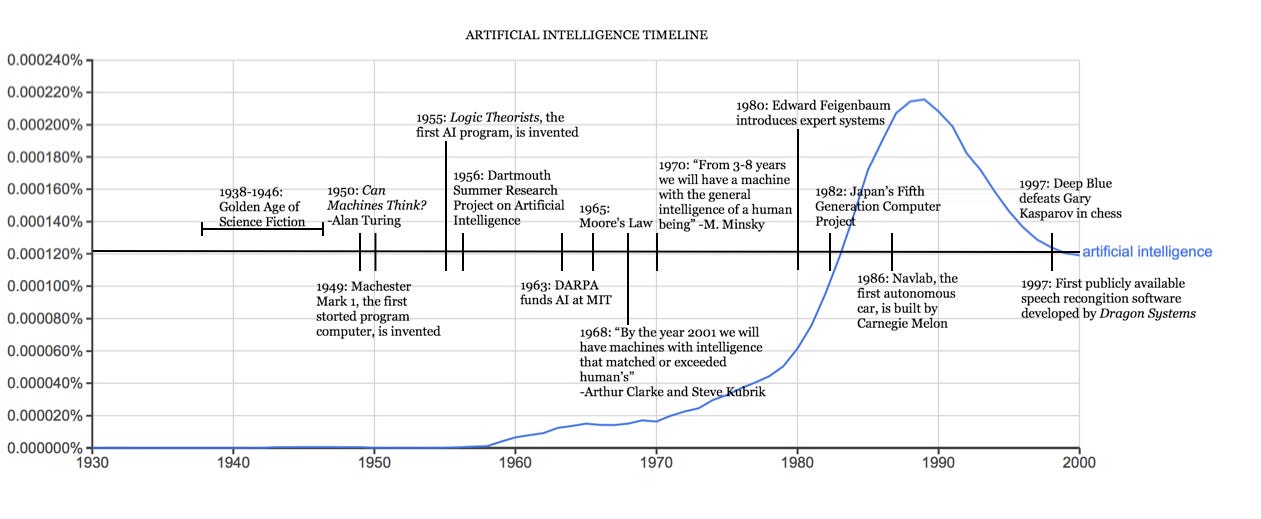

This history of artificial intelligence recounts the achievement in 1997 when IBM’s Deep Blue defeated Garry Kasparov in chess:

In 1997, reigning world chess champion and grand master Garry Kasparov was defeated by IBM’s Deep Blue, a chess playing computer program. This highly publicized match was the first time a reigning world chess champion lost to a computer and served as a huge step towards an artificially intelligent decision making program.