Orca, a new killer Fine-Tuned AI Model

Orca-13B uses Explanation Tuning to yield near-ChatGPT-level performance

We interrupt our series on AI Agents for a deep dive into an important AI research result just published.

Orca: Progressive Learning from Complex Explanation Traces of GPT-4 is a paper from Microsoft researchers that takes prior efforts in fine-tuning smaller AI models from outputs larger AI models to a new level. They present a best-in-class 13B parameter model, beating out prior leader 13B Vicuna. But more importantly, how they did it points to both the limitations of prior fine-tuning methods and a path forward for even better highly-parameter-efficient AI models.

Fine-Tuning from Teacher LFMs

The Orca authors used a term I’ve not seen before: Large foundation models or LFMs. Its appropriate to distinguish these foundation AI models, because we are using the term LLM to also refer to 7B and 13B models that are 10 times to 100 times smaller than the largest LFMs like GPT-4 and PaLM-2.

The concept of fine-tuning from a larger ‘teacher’ LFM to a smaller LLM was first presented a few months ago in Alpaca, the 7B model based on a LLaMA model that was fine-tuned with chatGPT outputs. Since then, there have been a number of efforts in instruction-following fine-tuning, using as the ‘teacher’ LFM ChatGPT and GPT-4. There have also been efforts to use human-written responses for training smaller LLMs; an example of that is the open source Dolly model from databricks.

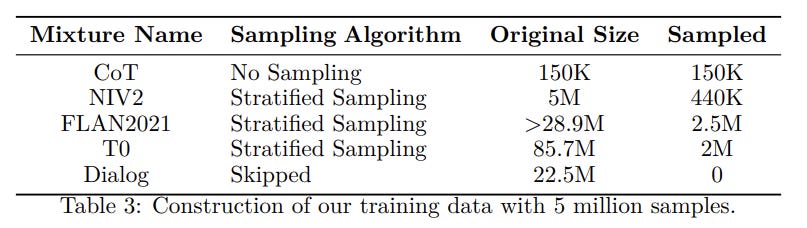

The original Alpaca model was fine-tuned on 52,000 prompt-response pairs, and other followup models did similar. The Orca model expanded this to a much larger set of prompts: 5 million prompt pairs run on chatGPT, with 1 million run on GPT-4 as well.

To get there, they used the Flan-2 collection of instruction-tuning prompts and other collections - NIV2, Chain-of-Thought, T0, Dialog - sampling from the prompt databases to obtain 5 million total queries.

Reasoning versus Style Imitation

Yet it wasn’t the size of the prompt training set alone that set the Orca work apart, but how they used chain-of-thought in prompts and responses.

The researchers in Orca addressed issues uncovered in a prior paper “The False Promise of Imitating Proprietary LLMs,” which highlighted the limitations of the fine-tuned smaller models that are trained on larger models. Specifically, they found that: “imitation models are adept at mimicking ChatGPT’s style but not its factuality.”

The small models suffer from hallucinations and factual gaps that are similar to but more serious than their larger teacher LFMs. How can we address this?

To boost the reasoning quality of GPT-4 and other AI model outputs, we can use review, reflection and producing step-by-step output. It stands to reason that instruction prompts that include these would yield superior instruction-tuning pairs.

The Orca authors guided reasoning in the prompt answer with system instructions that guide the response, including chain-of-thought reasoning steps, explain like I’m five, being helpful and informative, etc.

We hand-craft a total of 16 system messages designed to evoke different kinds of responses from the LFM. This allows us to train Orca to generate long and short answers; follow guidelines, instructions, and format; generate creative content as well as address information seeking queries; and most importantly, generate explanations and step-by-step reasoning for the responses, as prompted.

They call this approach Explanation Tuning. Here’s an example of a (GPT-4) prompt-response training pair:

System Instruction: You are an AI assistant. User will you give you a task. Your goal is to complete the task as faithfully as you can. While performing the task think step-by-step and justify your steps. User Instruction: Use the given data to calculate the median. Input: [7, 3, 8, 2, 10]

Output: To calculate the median, I will follow these steps:

Step 1: Arrange the data in ascending order [2, 3, 7, 8, 10]

Step 2: Determine if the data set has an odd or even number of values The data set has 5 values, which is an odd number.

Step 3: Find the middle value

Since the data set has an odd number of values, the median is the middle value in the ordered list. The middle value in the list [2, 3, 7, 8, 10] is 7.The result of this is to invoke prompting that yields higher quality responses from ChatGPT and GPT-4, as shown below:

The fine-tuning for Orca used ChatGPT as an intermediate teacher assistant. They first trained on 5 million FLAN queries on ChatGPT responses, followed by training on 1 million FLAN queries on GPT-4 augmentations. Using ChatGPT up-front lowers API token cost, and it also served as a form of ‘progressive learning’ to reduce the ‘capacity gap’. Think of it as starting with easier examples in learning first (ChatGPT) then going to harder ones (GPT-4).

Orca Benchmarks

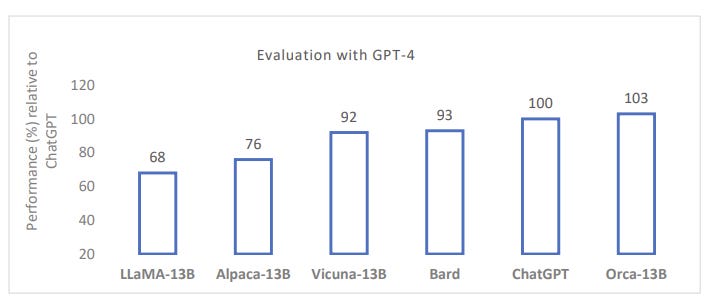

The resulting fine-tuned model shows remarkable improvements compared with similar fine-tuned open source models like Vicuna 13B. On a range of benchmarks, such as BigBench Hard (BBH) and AGIEval, a benchmark derived from human standardized tests, including the GRE, GMAT, SAT and LSAT.

Orca generally improved greatly on Vicuna 13B and got much closer to ChatGPT-level results overall:

On Big-Bench Hard: “Orca performs marginally better than ChatGPT on aggregate across all tasks; significantly lags GPT-4; and outperforms Vicuna by 113%.”

On open-ended generation: “Orca retains 95% of ChatGPT quality and 85% of GPT-4 quality aggregated across all datasets as assessed by GPT-4. Orca shows a 10-point improvement over Vicuna on an aggregate.”

On AGIEval: “Overall, Orca retains 88% of ChatGPT quality; significantly lags GPT-4; and outperforms Vicuna by 42%” with an overall score of 41.7 versus 47.2 for ChatGPT, 62 for GPT-4, and 29.3 for Vicuna-13B.

Overall, this is remarkable AI model performance. Now, it could well be that the prompts and the step-by-step approach may help with these specific areas that AGIEval and other reasoning-heavy benchmarks are concerned with. So, to be clear, some areas were not testing. But if there are gaps, it may well be the ‘step-by-step’ explanation training would be the way to extend to those as well.

The Capacity of AI Models

Now that this model is released, it sets a new standard for performance expectations. The main lesson of this research is to once more show the power of explanations (Explanation Tuning) in improving AI model quality.

“ … learning from step-by-step explanations (generated by humans or more powerful AI models) could significantly improve the quality of models regardless of their size.”

While not open sourced, I suspect it won’t be long before an open source version or imitator of this model is created, and other fine-tuning models use the ‘step-by-step’ Explanation Tuning approach and expand training examples into the millions as Orca has done. The fine-tuning training cost, even with 5 million instruction-tuning examples, remains very reasonable and far lower than building a new model.

Can we get even better at fine-tuning? The paper “Let’s verify step by step” that we discussed prior improves the reasoning capability of models like GPT-4 by having a reward model around the process. It seems logical that a process-based reward model can improve these teacher-student trained models in the same way.

There may be even more headroom left in the capabilities of a 13B parameter model. For example, if we trained it on 10 trillion tokens, could we get it to GPT-4 level, or is there a performance ceiling based on the parameter count? What’s interesting about this result is that the ceiling may be much higher that we imagined before.

Consider that if a 13B model can get to ChatGPT-level quality, effectively compressing a 175B model quality into a 13B imitation-student model, then other performant AI models could be similarly compressed by an other of magnitude.

The consequences of this are clear: There’s a lot more room to optimize AI models for parameter efficiency.

Postscript. The speed of AI research

“Let’s research this step-by-step.”

Science progresses step by step, result by result, and paper by paper, each researcher or scientist standing on the shoulders of those who came before. Pre-internet, science would progressed at the speed of the publication cycle. It would take years for results to disseminate in prior centuries.

More recently, different fields would iterate and progress on a regular cycle of conference or journal papers. Sometimes it would take months or even more than a year to publish. The pace sped up with the arrival of internet-era publishing and communication. Research evolved faster as results were shared more quickly.

Thanks to Arxiv, papers are uploaded, shared, digested and used by others instantaneously.

This Orca paper, released (submitted to Arxiv) on June 4, referred to a paper a mere two weeks old, which in turn had referred to several generations of papers citing prior papers, iterating on variations of the original LLaMA and Alpaca papers in February and March.

Progress is fast in this area because fine-tuning a smaller model is fast and cheap. We are not iterating in years or even months, we are iterating on research progress every week.