TL;DR Feedback drives learning and improvement generally, and LLMs can review and revise query responses to on-the-fly iteratively improve their final result. Advanced LLM models like GPT-4 have the ability to critique their own results.

To get most useful results out of chatGPT, you need to use the right prompts. Just as “garbage in, garbage out”, the right prompt can make for the best output. That art of giving the right input to obtain best results is called Prompt Engineering. Improving prompts can take the LLM from providing non-useful to great results.

But not always. There are times though that it’s clear the answer missed, but tweaking a prompt request won’t fix the LLM’s mis-steps.

One way is ask the LLM to judge it’s answer, to figure out where it went wrong. The simple way to nudge it back is to ask “Was the answer correct?” or “Did the response follow the instructions?”

Even that can fall flat - see my postscript about a failed attempt to get Bing Chat to write a non-rhyming poem, and failing thrice despite it acknowledging its failure. My attempt was inspired by an Eric Jang blog post “Can LLMs Critique and Iterate on Their Own Outputs?” He shares both success and failures at prompt engineering and asking LLMs to review their own output for correctness. He says:

The implications of this are that we have another tool beyond “prefix prompt engineering” and fine-tuning to get LLMs to do what we want.

This blog post shows that there is some preliminary evidence that GPT-4 possess some ability to edit own prior generations based on reasoning whether their output makes sense. Even when it is not able to provide corrections to incorrect outputs, it can still assert that its past solutions are incorrect.

Reflection in the LLM can help correct common cases of hallucination and poor instruction-following, if the LLM is powerful enough to ‘understand’ its own output. We suggested in our prior article on ground-truthing LLMs how to give LLMs a chance to iterate to improve their answer: “Prompt for "step-by-step” or chain-of-thought reasoning, or review, critique and revise the LLM response iteratively.”

What we have learned from recent AI research is that, indeed, LLMs are getting that powerful.

Review, Critique, Reflect, & Revise to Improve

Here are some of the results from recent AI research addressing the topic, some of which we have mentioned in prior AI Week in Review posts. The common thread is to use some of feedback to evaluate or critique and therefore improve the result.

The first is “DERA: Enhancing Large Language Model Completions with Dialog-Enabled Resolving Agents.”1 In DERA, or “dialog enabled resolving agents”, multiple LLM agents dialog to get better results: a Researcher, who processes information and identifies crucial problem components, and a Decider, who makes final output judgments. This approach yielded improvements over GPT-4 alone on MedQA questions and human evaluations.

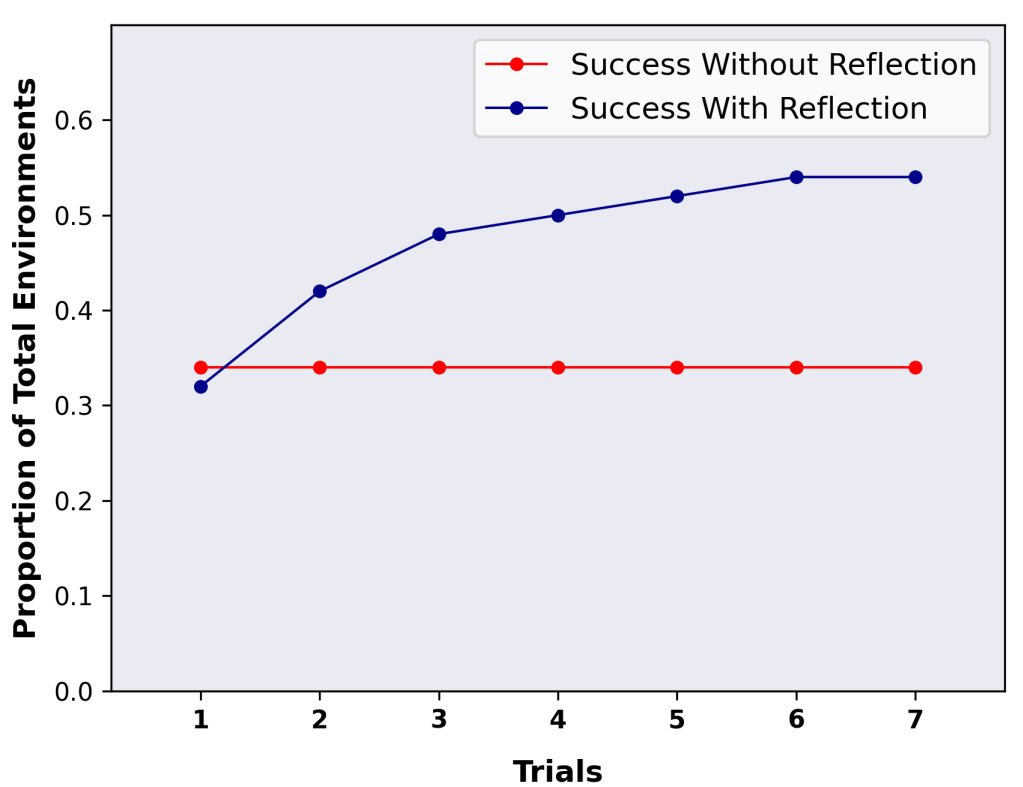

Likewise Reflexion2 creates an autonomous agent that uses a pattern of self-reflecting and iterating on answers to yield improved reasoning skills. Reflexion is based on the ReAct framework of an agent that uses the LLM (in this study GPT-3 and GPT-3.5 was used) and then takes an action, and it adds dynamic memory and a Reflection component that uses the LLM to reflect on its own reasoning and task-specific action choices performance.

Reflexion shows drastic improvements on the final results on some benchmarks: On the AlfWorld benchmark, the Reflexion agent achieved 97% success discovery rate in just 12 autonomous trials, outperforming the base ReAct agent’s 75% accuracy. Results on HotPotQA using reflexion improved from 34% to 54% “demonstrating that the growing, dynamic working memory promoted learning through trial.”

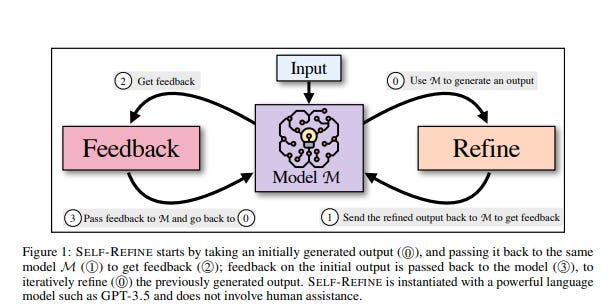

“Self-Refine: Iterative Refinement with Self-Feedback”3 introduces a framework that improves initial outputs from LLMs through iterative feedback and refinement. The main idea is to allow the same model to provide multi-aspect feedback on its own output, and then use that feedback to refine the output. They showed up to 20% improvement on GPT-4 output results on diverse tasks using this method.

In “REFINER: Reasoning Feedback on Intermediate Representations,”4 the authors propose a framework for applying LLM feedback via a separate critic model. Specifically, they fine-tune LLMs (generator model) to explicitly generate intermediate reasoning steps, then prompt the critic model to provides fine-grained structured feedback on intermediate representations of the response, which the generator LM uses to iteratively improve its intermediate arguments. See workflow below.

Getting the feedback to correct reasoning steps improves performance on a variety of reasoning tasks. Using GPT-3.5 with the REFINER critic model to iteratively improve results, they were able to show significant improvement on reasoning benchmarks like MWP (Math Word Problem) compared to GPT-3.5 with Chain-of-thought (CoT) as a baseline, as show in this table.

The Power of Feedback

What does this tell us? A few points:

The general principle that feedback drives learning and improvement can be applied effectively to the specific loop of analyzing and revising LLM responses to prompts.

This feedback can be a fine-grained evaluation of chain-of-thought or specific facts or elements of the response. More specific and fine-grained is more useful.

A separate ‘critic’ model could be the evaluation step, or if the LLM is powerful enough, it can construct its own evaluation on what it just created. This feedback can use a rubric to determine how close to correct the answer is.

As LLMs scale, they get not just better at generating answers, but having enough understanding to determine how good their answer is. GPT-3.5 and GPT-4 in particular are powerful enough to review their own work effectively. These LLMs can further express their own rubrics based on their world understanding.

Just as human need to concentrate to keep their chain-of-thought correct, and check their work to get the best results, so too do LLMs.

GPT-4-level LLMs are powerful enough to iterate through this loop:

Respond to a prompt with an answer.

Compare the answer to the prompt and evaluate it. ‘Is the answer correct?’ and determine flaws, errors, hallucinations or needed corrections.

Feed the answer critique into dynamic memory.

Reload answer critique with the prompt and generate a new response, with specific attention on correcting errors and flaws in prior response.

Consider how powerful this could be in the context of code development. An initial attempt at LLM coding often is a close-to-good but flawed program with some errors. It will give you the boiler-plate, but the human has to go in and do the specifics to get that last 5% right. However, if the model is powerful enough to find its own errors, it can flag them and then generate a modified result that removes those bugs. Even if some remain, it can then keep going until it’s done and there are no bugs left. That’s the entire code debugging process in a nutshell and it turns a ‘co-pilot’ level AI solution into a plausibly complete one.

Feedback is a powerful and general way to learn, and LLMs are built on feedback: RL is a feedback learning technique; so too is back-propogation; so too is fine-tuning. So it’s not surprising that putting an LLM response itself into a feedback loop makes it much more powerful. There is a “surprising ease and effectiveness of AI in a loop.”

We can expect “AI in the loop” to become the norm, with many types of feedback loops to improve AI, because the method is powerful and there is a lot of room for using it to improve LLM results.

PostScript: Bing Chat Cannot Stop Rhyming

This is an example where the word play just seems to be too much for the LLM. It’s writing a poem so it can’t help itself from making the poem rhyme, not matter how much it’s corrected and chastised on it. This may be an artifact of poor instruction-following due to the word “poem”.

Nair, V., Schumacher, E., Tso, G., & Kannan, A. (2023). DERA: Enhancing Large Language Model Completions with Dialog-Enabled Resolving Agents. arXiv preprint arXiv:2303.17071.

Shinn, N., Labash, B., & Gopinath, A. (2023). Reflexion: an autonomous agent with dynamic memory and self-reflection. arXiv preprint arXiv:2303.11366.

Madaan, A., Tandon, N., Gupta, P., Hallinan, S., Gao, L., Wiegreffe, S., Alon, U., Dziri, N., Prabhumoye, S., Yang, Y., Welleck, S., Majumder, B. P., Gupta, S., Yazdanbakhsh, A., & Clark, P. (2023). Self-Refine: Iterative Refinement with Self-Feedback. arXiv preprint arXiv:2303.17651.

Paul, D., Ismayilzada, M., Peyrard, M., Borges, B., Bosselut, A., West, R., & Faltings, B. (2023). REFINER: Reasoning Feedback on Intermediate Representations. arXiv preprint arXiv:2304.01904.