AI Week In Review 23.09.30

BIGGEST WEEK! Cloudflare AI, GPT-4V, ChatGPT Browser, Meta Emu & custom AIs, Qwen-14B, MistralAI 7B, Microsoft AutoGen, the iPhone for AI

TL;DR We say it most weeks, but this really has been a HUGE week in AI. Read on …

Top Tools

We wrote on OpenAI’s GPT-4V release this week in “ChatGPT Gets Vision and a Voice.” This is a profound advance on GPT-4 and we will give it our ‘top tool’ spot, on account of the amazing use cases already being reported:

Multi-modal educational tutor explains details from a screenshot of a human cell diagram.

Check homework from a screenshot.

Take a screenshot of Python code and GPT-4V will translate it into JavaScript.

GPT-4V-powered agent for front-end website building from Matt Shumer: “Autonomously designs webapps -- writes code, looks at the resulting site, improves the code accordingly, repeat.”

It can read obscure handwriting.

The combination of OCR, visual understanding, and LLM capabilities can fix so many issues with paperwork and document workflows that it is easy to see how this moves the needle on business productivity. Yet it will go far beyond this.

AI Tech and Product Releases

Cloudflare launches new AI tools to help customers deploy and run models. They are partnering with Hugging Face and offering several new products and services to help users leverage AI in simpler and lower-cost ways: Workers AI lets customers run AI models on a pay-as-you-go basis; Vectorize provides vector database storage for AI models; AI Gateway provides monitoring of AI apps.

In addition to GPT-4V, OpenAI this week brought back ChatGPT Browser mode: “OpenAI Enables ChatGPT Bing Web Browser Plugin After 3 Dark Months”

Meta made a slew of AI-related announcements, which we covered in “Meta Offers AI to Billions,” including AI image generation capabilities, custom AI chatbots on their messenger platforms, and AI-enabled smart sunglasses. Meta is driving AI features across their social-media platforms.

Alibaba released Qwen-14B, trained on 3 trillion tokens (more than Llama 2 and far beyond GPT-3). It is the most powerful open-source model for its size. It comes in 5 different versions: Base, Chat, Code, Math and Vision. They produced a Qwen Technical Report, and the Qwen-14B open models are available on Hugging Face.

Mistral AI, a recently formed, well-funded startup, dropped a startlingly good 7B AI model by simply releasing the parameter weights as a torrent link. The 7B punches well above its weight:

Mistral 7B’s performance demonstrates what small models can do with enough conviction. Tracking the smallest models performing above 60% on MMLU is quite instructive: in two years, it went from Gopher (280B, DeepMind, 2021), to Chinchilla (70B, DeepMind, 2022), to Llama 2 (34B, Meta, July 2023), and to Mistral 7B.

This shows AI model efficiency can get a lot better, and it will be exciting to see if Mistral AI is able to scale up these higher-quality AI models.

Spotify has introduced AI voice translations for podcasts. We have seen other AI companies roll out similar voice translation capabilities (translate the audio but keep the accent and inflection). This translation feature is going to be an embedded AI feature in many platforms.

AI hacker news: This guy got GPT-2 to run on an Apple Watch: “I bought an @Apple Watch Ultra 2 today decided to abuse its 4 core neural engine.”

AI Research News

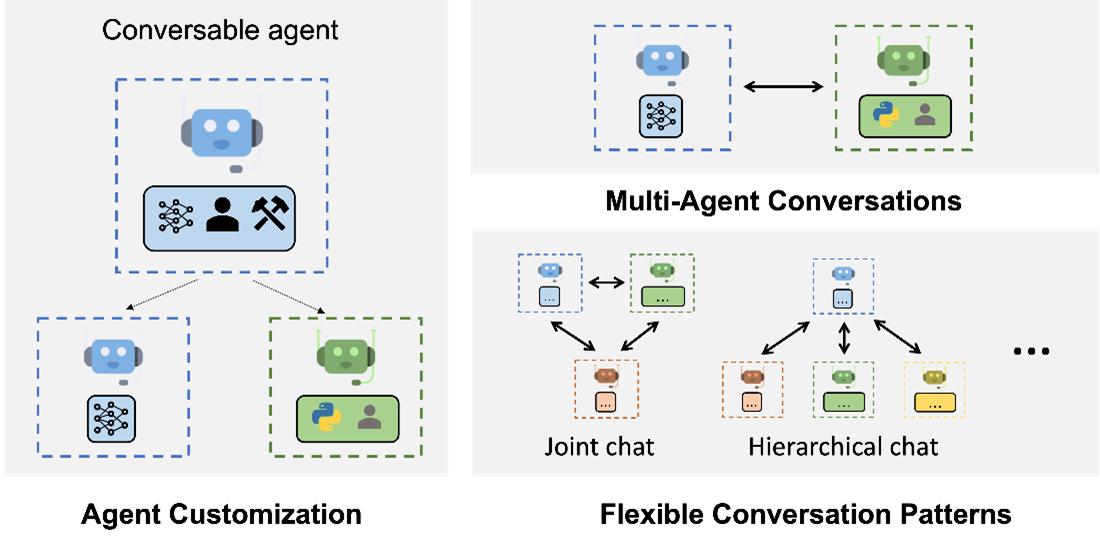

Microsoft has released AutoGen, a framework for AI agents, on GitHub: “AutoGen: Enabling next-generation large language model applications.” The framework helps with “simplifying the orchestration, optimization, and automation of workflows for large language model (LLM) applications.”

AutoGen supports workflows in which multiple AI models and AI agents converse with each other. As a sign of how much it has excited people building AI agents, it is the number two trending repo on GitHub.

The paper GPT-Fathom: Benchmarking Large Language Models to Decipher the Evolutionary Path towards GPT-4 and Beyond tries to improve how we evaluate LLMs and improve transparency of LLM performance:

We introduce GPT-Fathom, an open-source and reproducible LLM evaluation suite built on top of OpenAI Evals. We systematically evaluate 10+ leading LLMs as well as OpenAI's legacy models on 20+ curated benchmarks across 7 capability categories, all under aligned settings. Our retrospective study on OpenAI's earlier models offers valuable insights into the evolutionary path from GPT-3 to GPT-4.

The AI pioneer Ilya Sutskever has compared LLMs to compression algorithms as an analogy for understanding LLMs, but AI researchers have now made it explicit: Language Modeling Is Compression. Along the way, they show that the Chinchilla LLM, although trained mainly on text, compresses image and speech samples better than domain-specific compressors such as PNG and FLAC.
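The link between prediction and compression can be made concrete with a small sketch. A model that assigns probability p to the next symbol lets an ideal arithmetic coder spend about -log2(p) bits on it, so better prediction means a shorter encoding. The example below is only an illustration under stated assumptions: it uses a toy adaptive byte-frequency model (the function name `ideal_code_length_bits` and the sample text are made up here), not the paper's setup or an actual LLM.

```python
import math
from collections import Counter


def ideal_code_length_bits(data: bytes) -> float:
    """Bits an ideal arithmetic coder would need under a toy adaptive byte model.

    Hypothetical helper for illustration: the "model" is just running byte
    counts with add-one (Laplace) smoothing over 256 possible byte values.
    """
    counts = Counter()
    seen = 0
    total_bits = 0.0
    for byte in data:
        # Probability the model assigns to this byte, given the bytes so far.
        p = (counts[byte] + 1) / (seen + 256)
        # Cost of encoding one symbol with probability p is -log2(p) bits.
        total_bits += -math.log2(p)
        # Update the model after "seeing" the byte, as a decoder also could.
        counts[byte] += 1
        seen += 1
    return total_bits


text = b"abracadabra " * 50
model_bits = ideal_code_length_bits(text)
raw_bits = 8 * len(text)
print(f"raw: {raw_bits} bits, model: {model_bits:.0f} bits, "
      f"ratio: {model_bits / raw_bits:.2f}")
```

Swapping the byte counter for a stronger predictor, such as a large language model, lowers the assigned code lengths further, which is the sense in which a good language model is also a good compressor.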

AI Business and Policy

The Information reported that Sam Altman, Jony Ive, and Masa Son are working on an AI hardware device, and the FT reported “OpenAI and Jony Ive in talks to raise $1bn from SoftBank for AI device venture.” Some are calling it the iPhone of AI.

Humane’s ‘AI Pin’ debuts on the Paris runway. It’s unclear what the AI Pin actually does, other than look like a cool device.

The dumbest AI headline of the week: “Energy consumption 'to dramatically increase' because of AI.” There are many ways this is wrong. AI will help us improve productivity on many fronts, and energy is one of them.

Another story of AI book ripoffs annoying real authors: “Authors shocked to find AI ripoffs of their books being sold on Amazon.” This problem might shrink if Amazon were held liable for participating in this fraud.

US-China 'tech war' over AI leads US to restrict AI chips going to Middle East.

AI Opinions and Articles

Smerconish asks: “What if the biggest threat of all is to human relationships?” and suggests “AI girlfriends imperil generation of young men.” It’s certainly a valid concern, as the artificial is so convincingly real.

On a related note, some are concerned by the dangers posed by Meta’s AI celebrity lookalike chatbots and by social acceptance of 'counterfeit people'.

An interview with “The Princeton researchers calling out ‘AI snake oil’”, who publish at AI Snake Oil. They have a lot of interesting and valid perspectives on AI. Regarding the “snake oil” in AI, they say that “most of the snake oil is concentrated in predictive AI,” making predictions that don’t work:

You have AI hiring tools, for instance, which screen people based on questions like, “Do you keep your desk clean?” or by analyzing their facial expressions and voice. There’s no basis to believe that kind of prediction has any statistical validity at all. There have been zero studies of these tools, because researchers don’t have access and companies are not publishing their data.

They also have doubts about AI’s existential risk to humanity:

Narayanan: There are just so many fundamental flaws in the argument that x-risk [existential risk] is so serious that we need urgent action on it. We’re calling it a “tower of fallacies.” I think there’s just fallacies on every level. One is this idea that AGI is coming at us really fast, and a lot of that has been based on naive extrapolations of trends in the scaling up of these models. But if you look at the technical reality, scaling has already basically stopped yielding dividends. A lot of the arguments that this is imminent just don’t really make sense.

Another is that AI is going to go rogue, it’s going to have its own agency, it’s going to do all these things. Those arguments are being offered without any evidence by extrapolating based on [purely theoretical] examples. Whatever risks there are from very powerful AI, they will be realized earlier from people directing AI to do bad things, rather than from AI going against its programming and developing agency on its own.

So the basic question is, how are you defending against hacking or tricking these AI models? It’s horrifying to me that companies are ignoring those security vulnerabilities that exist today and instead smoking their pipes and speculating about a future rogue AI. That has been really depressing.

The above argument captures it so well: AI security is the real issue, not AI-will-kill-us-all. We humans will remain the weakest link, the biggest risk, and the biggest abusers of technology.

A Look Back …

If the future is wearing Meta’s smart AI Ray-Ban sunglasses that can help you live-stream hands-free while conversing with your AI assistant and look like Tom Cruise in Risky Business, I’m okay with that. Back to the Future!

I wouldn’t call what ChatGPT does vision or listening ... seems like a gross mischaracterization.