AI Week In Review 23.12.02

Amazon Q, Pika 1.0, Qwen-72B, Meta's Audiobox, Stability AI's SDXL Turbo, Perplexity's pplx-70B, and GNoME changes the material world.

Our cover photo comes from the story “Scientists 3D Print a Complex Robotic Hand With Bones, Tendons, and Ligaments.” The achievement is not so much creating the hand as 3D-printing it in one go, using a 3D printer that can ‘print’ multiple types of materials concurrently.

AI Tech and Product Releases

Amazon shared a few AI-related announcements at its re:Invent conference this week:

Amazon unveiled Q, an AI chatbot assistant that looks at a company’s information and generates content. “Q indexes all connected data and content, “learning” aspects about a business, including its organizational structures, core concepts and product names.” Q has an understanding of AWS, and it is tied in with Amazon products.

Amazon announced the Titan image generator for high-quality image generation with guardrails. It is aimed at developers building AI image apps, and the images it generates are watermarked.

Pika Labs released the Pika 1.0 text-to-video platform, and also announced via a blog post that they have raised $55 million.

The non-release of the week is OpenAI’s delay of the GPT Store until next year. I got this in an email from OpenAI:

“In terms of what’s next, we are now planning to launch the GPT Store early next year. While we had expected to release it this month, a few unexpected things have been keeping us busy!”

Alibaba’s Qwen team is adding a 72B model and a 1.8B model to its Qwen-7B and Qwen-14B LLMs. The 72B model was trained on 3T tokens and has a 32K context length. How well does it perform? They claim it outperforms Llama-2-70B and beats GPT-3.5 on many benchmarks.

Microsoft Paint now has DALL-E 3 integrated, available via a “Cocreator” button.

Stability AI released SDXL Turbo, a real-time text-to-image generation model. It achieves state-of-the-art performance by cutting a 50-step diffusion process to 1 step. AI image generators can now “generate as fast as you can type.”

Unsloth launched, announcing “30x faster LLM training.” This team of two open-sourced a version that makes fine-tuning 5x faster. That could be a game-changer for the fine-tuning community.

Perplexity AI unveiled ‘online’ LLMs that could dethrone Google Search, releasing the pplx-7B-online and pplx-70B-online LLMs. These are fine-tuned from the Mistral-7b and Llama2-70b models, and incorporate online capabilities so they remain “fresh” with respect to the latest online content.

RunwayML is showing off more camera moves and more control in Gen 2.

Top Tools & Hacks

Real-time image generation is getting amazing: tools now let you steer images from a canvas, see results as you work, and upscale the output to enhance its resolution.

You can access Stability AI’s new real-time image generation at clipdrop.co through its Stable Diffusion Turbo option.

LeonardoAI has released “live canvas,” where you paint freely on a canvas to control the image, see results in real time, then upscale to get better quality.

KREA AI is an AI-powered image generation tool that this week announced Upscale and Enhance. The X user TitusTeatus shows “Making hyperrealistic AI portraits in 4 mins. with the Krea real-time tool and enhancer.”

Magnific AI is also providing an enhancer with stunning results.

AI Research News

DeepMind used GNoME, a graph neural network model, to discover two million crystals and increase the number of known stable crystals by 10x. This is the biggest AI-based science advance since DeepMind solved protein folding with AlphaFold.

The new Multi-modal Large Language Model (MLLM) CoDi-2 provides in-context, interleaved, and interactive any-to-any generation. Trained on a dataset of in-context multi-modal instructions across text, vision, and audio, this MLLM can take inputs in any of these modalities and produce grounded, coherent multimodal outputs. The authors shared both a paper and code.

Meta’s Audiobox generates audio from voice and natural language prompts. This successor to Voicebox is “Meta’s new foundation research model for audio generation.” You can combine voice inputs and text prompts, making it easier to create custom audio.

GPT-4 with vision demonstrated great results on radiology, out-performing humans on some tasks.

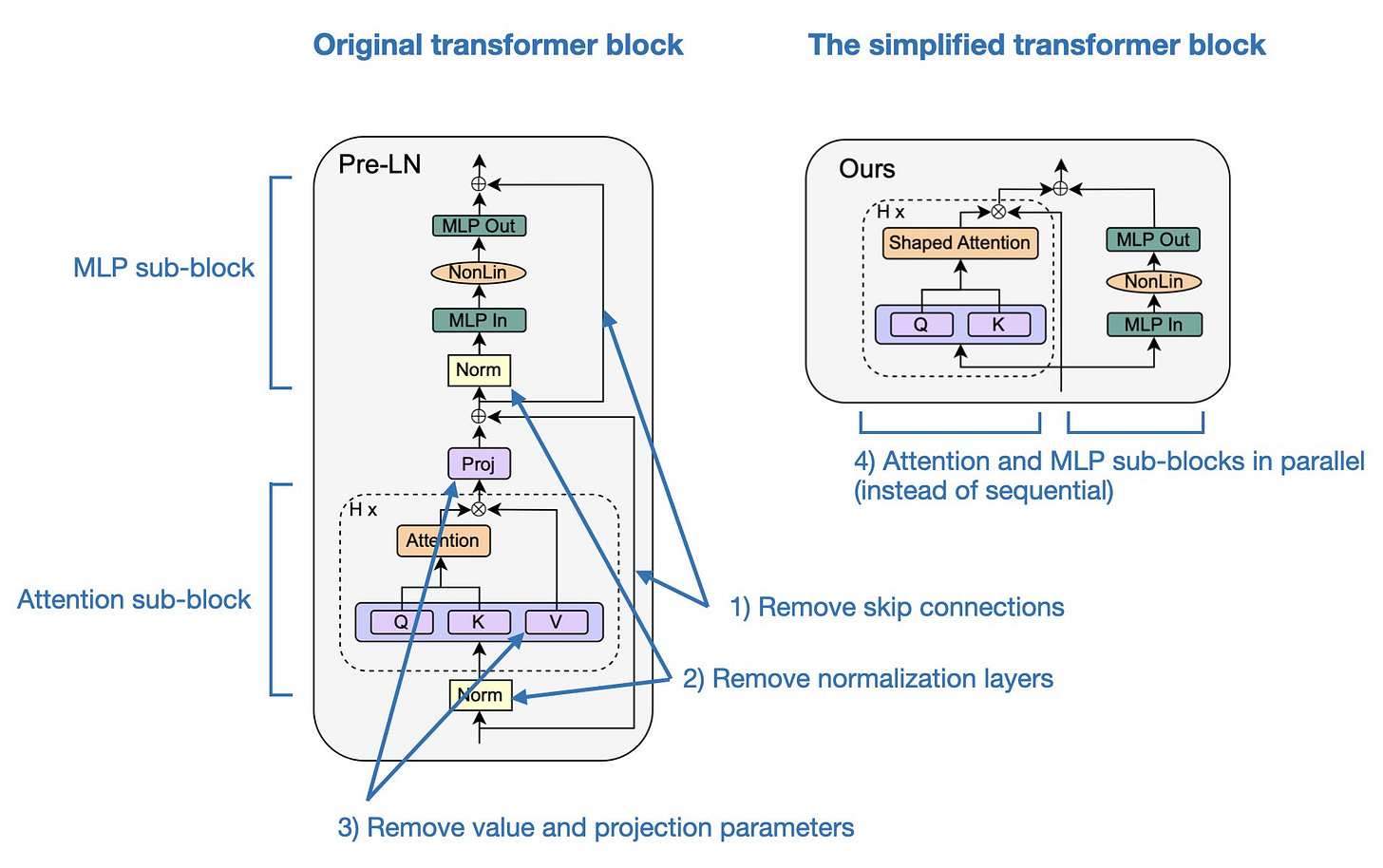

X user VigneshWarar shared “the ML paper 'Simplifying Transformer Blocks,' explained by stand-up comedian Andrew Schulz.” It’s a lighter-side presentation of a seriously helpful result. The paper “Simplifying Transformer Blocks” slims down the transformer architecture for LLMs by removing skip connections and normalization layers and by adjusting the value and projection parameters. This yields 15% fewer parameters and faster training throughput. The architecture was tested and works on smaller models, but it remains to be seen if it can scale up to larger LLMs.

Tiny robots made from human cells heal damaged tissue. The research “points the way to a ‘tissue engineering 2.0’ that synthetically controls a range of developmental processes”, says Alex Hughes, a bioengineer at the University of Pennsylvania in Philadelphia.

New from Microsoft is TaskWeaver, a code-first agent framework. This open-source framework “converts user requests into executable code and treats user-defined plugins as callable functions.” TaskWeaver provides an open-source equivalent to OpenAI’s Code Interpreter / data analysis capabilities, making it an extremely useful part of the agent ecosystem.

Nathan Lambert weighs in on the technical question of DPO (direct preference optimization) versus PPO/RL: “Is RL needed for RLHF?”

AI Business and Policy

In a blog post, OpenAI officially announced Sam Altman’s return and the new Board and leadership. Sam Altman and new board chair Bret Taylor made personal statements about the leadership, with Sam thanking practically everyone and using the word “love” repeatedly.

We also recently learned that OpenAI’s capped-profit rules were changed to allow the cap to increase by 20% per year starting in 2025. Not much of a cap! Charlie Munger (RIP) would consider that not a cap but a reach-out ROI target.
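To put that 20% figure in perspective, here is a rough back-of-the-envelope sketch. It assumes the increase compounds annually, which the reports do not spell out, and it only computes a multiplier relative to the starting cap rather than any actual dollar figure. The function name `cap_multiplier` is made up for illustration.

```python
def cap_multiplier(years: int, annual_growth: float = 0.20) -> float:
    """Factor by which a cap grows after `years` of annual compounding.

    Assumption: the 20% yearly increase compounds; the starting cap
    itself is not modeled here, only its relative growth.
    """
    return (1.0 + annual_growth) ** years


if __name__ == "__main__":
    for years in (1, 5, 10, 20):
        factor = cap_multiplier(years)
        print(f"after {years:>2} years: {factor:.2f}x the starting cap")
```

Under that assumption the cap is roughly 2.5x its starting level after five years and about 6.2x after ten, which is why it looks more like a growth target than a ceiling.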

Is Stability AI not so stable? Fortune dropped this bomb: Fresh off an Intel investment, Stability AI looks for buyers as investors pressure CEO to resign. Stability AI CEO Emad Mostaque responded on X, denying most of the report. No, they are not for sale, and the CEO is still CEO.

AI video generation startup HeyGen raised $5.6 million in funding and announced another feature, instant avatar.

US, Britain, other countries ink agreement to make AI ‘secure by design.’ This is a non-binding agreement for the countries to work on making AI safer.

AI-powered digital colleagues are here. Some 'safe' jobs could be vulnerable. This BBC article discusses AI job risks to white-collar workers: “While there is reason to think that AI may be a boon to workers, there are reasons that many people working in knowledge-work positions should be looking over their shoulders.”

This is disturbing: AI deepfakes are being used against teenage girls. Family fights for AI protections for women, girls after viral AI-generated nude images. “A mother and her 14-year-old daughter are advocating for better protections for victims after AI-generated nude images of the teen and other female classmates were circulated at a high school in New Jersey.” Lawmakers are looking to strengthen laws against this kind of abuse. They should.

AI Opinions and Articles

New AGI predictions: At the 2023 NYT DealBook Summit, Nvidia CEO Jensen Huang said AGI will be reached in five years. Huang defined AGI as tech that exhibits basic intelligence "fairly competitive" to a normal human, and said we aren’t there yet.

"There's no question that the rate of progress is high, but there's a whole bunch of things that we [AI] can't do yet. This multi-step reasoning that humans are very good at, AI can't do." - Nvidia CEO Jensen Huang

Elon Musk has a more aggressive timeline for that: AGI within three years.

While I still have 2029 as my target for AGI, every surprise about AI this year has been on the upside. The future is closer than you think.

A Look Back …

A year ago, on Nov 30, 2022, OpenAI released ChatGPT. Within about two months, it reached an estimated 100 million users, and it changed the world. Here are five things to know about ChatGPT on its first birthday.

When I look back on the incredible explosion in AI since ChatGPT was launched, it’s truly been The Year of AI. And yet, like a one-year-old human, ChatGPT at one has much more growing and learning to do. There is more, much more, yet to come.