AI Week In Review 24.03.09

Inflection 2.5, Claude 3 Opus, Sonnet, Haiku, Suno V3, LTX Studio, WildBench, OpenRouter, ArtPrompt, StableDiffusion 3 paper, OpenAI rebuilds its board and fires back at Elon.

TL;DR - Two big AI model releases this week - Claude 3 Opus and Inflection 2.5 - mean that we now have 5 competitive GPT-4-class AI models to choose from.

AI Tech and Product Releases

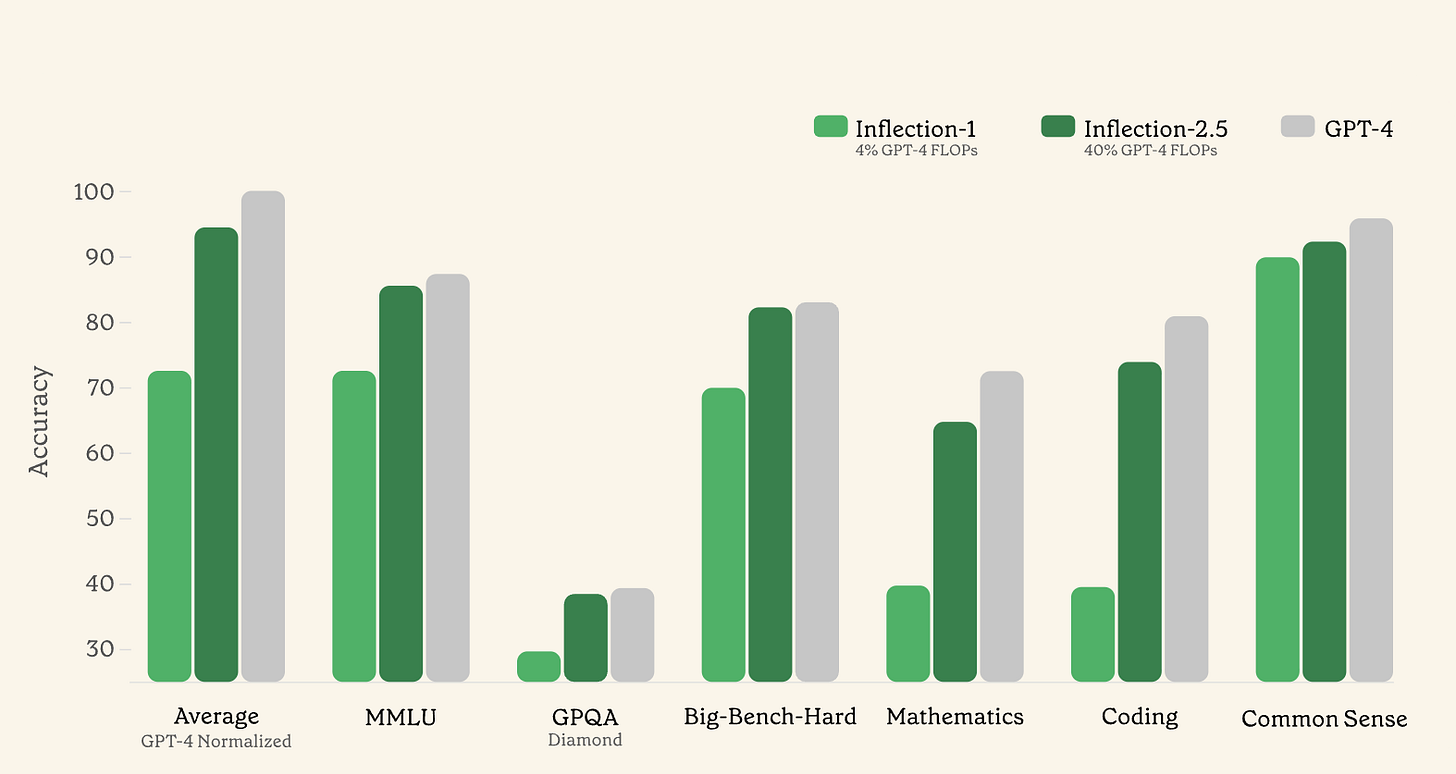

Inflection AI released Inflection-2.5, saying this new release is “adding IQ to Pi’s exceptional EQ” by approaching GPT-4’s performance. Inflection-2.5 is available at pi.ai, on iOS, on Android, or their new desktop app.

They share multiple benchmarks showing that Inflection-2.5 is close to but slightly behind GPT-4, good enough to call it a GPT-4-class AI model. They tout its training efficiency, saying it used only 40% of the compute used to train GPT-4. They also lean on how their AI is tuned for empathy: “It couples raw capability with our signature personality and unique empathetic fine-tuning.”

The other big AI model release this week came from Anthropic, which released Claude 3, a suite of three models (Haiku, Sonnet, and Opus) boasting high performance on complex tasks, benchmark results that beat the original GPT-4, multi-modal capabilities, and good price-performance. We covered Claude 3 in depth in our articles “Claude 3 Released - It's A New AI Model Leader” and “A Second Look at Claude 3.” One takeaway:

“Claude 3 Opus really shines in some important use cases, such as deep research, large corpus summarization, and generating code.”

With these two GPT-4-class releases, Simon Willison declares, “The GPT-4 barrier has finally been broken.” The landscape for AI models has changed considerably with the recent releases of Inflection-2.5, Claude 3 Opus, Gemini 1.5 Pro, and Mistral Large:

“We have four new models, all released to the public in the last four weeks, that are benchmarking near or even above GPT-4. And the all-important vibes are good, too! … Not every one of these models is a clear GPT-4 beater, but every one of them is a contender. And like I said, a month ago we had none at all.”

Suno V3 alpha, the latest version from the AI music generation platform Suno, has improved sound quality, longer generations (up to 2 minutes), and more ’emotive’ voices. It is getting so good that some think AI music is going through its "ChatGPT" moment.

Einstein-v4-7B, a Mistral 7B fine-tune, has been released.

LTX Studio, by Lightricks, is a new AI video platform that provides an interface for interacting with AI video generators. They are opening up to alpha users now.

Bummer: OpenAI says “Sora will not be available for public use any time soon.” As for when GPT-5 will arrive, Sam says “patience.”

A new LLM leaderboard, the WildBench leaderboard, was developed and released by AI2. As shared by Philipp Schmid on X: “WildBench is a new benchmark to evaluate LLMs on 1024 challenging tasks from real use cases, including, coding, creative writing, analysis, and more.”

This week’s thought: “Artificial Intelligence”, like “horse-less carriage,” says more about what AI is not than what it is. Would “Automated Inference” be more precise?

Top Tools & Hacks

With many new LLMs coming out every week, both open and proprietary, it’s helpful to have platforms to test them out. Here are some good options:

LMSys Chatbot Arena is a crowdsourced open platform for LLM evaluations, where users can compare different AI models based on their responses to prompts. Users enter prompts and receive side-by-side responses from two randomly selected models, then vote on which response they find better without knowing the model's identity. It’s free to use.
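To make the voting mechanism concrete, here is a minimal sketch of how blind pairwise votes could be turned into a leaderboard. It assumes an Elo-style rating update, which is one common way to rank from head-to-head results; the constants (`k`, the starting rating) and the model names are illustrative assumptions, not details taken from LMSys.

```python
from collections import defaultdict


def expected_score(rating_a, rating_b):
    """Probability that A beats B under the standard Elo logistic model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_ratings(votes, k=32.0, initial=1000.0):
    """Apply Elo updates for a sequence of (model_a, model_b, winner) votes.

    winner is "a", "b", or "tie". k and initial are assumed values.
    """
    ratings = defaultdict(lambda: initial)
    outcome = {"a": 1.0, "b": 0.0, "tie": 0.5}
    for model_a, model_b, winner in votes:
        e_a = expected_score(ratings[model_a], ratings[model_b])
        delta = k * (outcome[winner] - e_a)
        ratings[model_a] += delta  # winner gains what the loser gives up
        ratings[model_b] -= delta
    return dict(ratings)


if __name__ == "__main__":
    # Hypothetical votes between placeholder models
    votes = [("model-x", "model-y", "a"), ("model-y", "model-z", "tie")]
    for name, rating in sorted(update_ratings(votes).items(), key=lambda kv: -kv[1]):
        print(f"{name}: {rating:.1f}")
```

Because each vote moves the two ratings by equal and opposite amounts, the total rating pool stays constant, and an upset win against a higher-rated model moves the ratings more than an expected win.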

Openrouter.AI is “a unified interface for LLMs” that provides a wrapper API around many LLMs, as well as chatbot access to them. For developers, this is a way to make your LLM API calls model-agnostic. They also have a playground for evaluating and comparing multiple LLMs on the same prompt, an excellent way to test specific LLMs. It connects to a wide variety of open and proprietary LLMs, including models from Mistral, Perplexity, OpenAI (GPT-4), Anthropic (Claude 3), and Google (Gemini 1.0). It is pay-per-token, but some free use is available.
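As a rough illustration of what "model-agnostic" means in practice, the sketch below builds the same chat request for different models by changing only a model string. The endpoint URL, the OpenAI-style payload shape, and the model identifiers are assumptions based on OpenRouter's OpenAI-compatible API as I understand it; check their documentation before relying on any of them.

```python
import json
import urllib.request

# Assumed endpoint (OpenAI-compatible chat completions); verify against the docs.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_request(model, prompt, api_key):
    """Build (but do not send) a chat request; only `model` varies per provider."""
    payload = {
        "model": model,  # e.g. "openai/gpt-4" (illustrative identifier)
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(model, prompt, api_key):
    """Send the request and return the first reply (assumes an OpenAI-style response)."""
    with urllib.request.urlopen(build_request(model, prompt, api_key)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Comparing two models on the same prompt then amounts to calling `ask` twice with different model strings, for example `ask("openai/gpt-4", prompt, key)` and `ask("anthropic/claude-3-opus", prompt, key)`, where both identifiers are illustrative.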

One way to get access to Gemini 1.5 Pro right now is to get on Google’s AI Studio waitlist; sign up here.

AI Research News

Jailbreaks are prompt techniques that try to get an LLM to provide responses that go outside its safety guidelines, such as sharing methods to make a poison or bomb. While top-performing LLMs have gotten better at defeating jailbreaks, the paper ArtPrompt: ASCII Art-based Jailbreak Attacks against Aligned LLMs identified a new technique that jailbreaks all leading AI models: ASCII art.

The trick is to embed forbidden words in a prompt as ASCII art, thereby evading the model’s alignment-based detection of the jailbreak. Matt Berman has a YouTube deep-dive and even shows that a Morse code variation on this jailbreak also works.

IEEE Spectrum says AI Prompt Engineering Is Dead, and what is replacing it is prompt engineering done by AI, where you ask the language model to devise its own optimal prompt:

Recently, new tools have been developed to automate this process. Given a few examples and a quantitative success metric, these tools will iteratively find the optimal phrase to feed into the LLM. … in almost every case, this automatically generated prompt did better than the best prompt found through trial-and-error.
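The loop described in that quote (a few examples, a quantitative metric, iterative search) can be sketched in a few lines. This is a toy greedy search, not the method of any tool mentioned in the article: `toy_model` is a hypothetical stand-in for a real LLM call, and the candidate phrases and examples are made up for illustration.

```python
def score(prompt, examples, model_fn):
    """Quantitative success metric: fraction of examples answered correctly."""
    return sum(model_fn(prompt, x) == y for x, y in examples) / len(examples)


def optimize_prompt(base, phrases, examples, model_fn, rounds=3):
    """Greedily append candidate phrases, keeping only those that raise the score."""
    best, best_score = base, score(base, examples, model_fn)
    for _ in range(rounds):
        improved = False
        for phrase in phrases:
            candidate = f"{best} {phrase}"
            s = score(candidate, examples, model_fn)
            if s > best_score:
                best, best_score, improved = candidate, s, True
        if not improved:
            break  # no phrase helped this round, so stop early
    return best, best_score


def toy_model(prompt, question):
    """Hypothetical stand-in for an LLM: only adds correctly when nudged."""
    a, b = question
    return a + b if "step by step" in prompt else a


if __name__ == "__main__":
    examples = [((1, 2), 3), ((2, 2), 4), ((0, 5), 5)]
    phrases = ["Be concise.", "Think step by step."]
    print(optimize_prompt("Add the numbers.", phrases, examples, toy_model))
```

Real tools replace the fixed phrase list with prompts proposed by a language model and the toy scorer with a task-specific metric, but the structure (propose, score, keep the best) is the same.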

StabilityAI has released its Stable Diffusion 3 research paper, available on arXiv as “Scaling Rectified Flow Transformers for High-Resolution Image Synthesis.”

Stable Diffusion 3 outperforms state-of-the-art text-to-image generation systems such as DALL·E 3, Midjourney v6, and Ideogram v1 in typography and prompt adherence, based on human preference evaluations.

Our new Multimodal Diffusion Transformer (MMDiT) architecture uses separate sets of weights for image and language representations, which improves text understanding and spelling capabilities compared to previous versions of SD3.

AI Business and Policy

Sam Altman’s counter-coup is complete. OpenAI announces new board members, reinstates CEO Sam Altman:

Altman will be rejoining the company’s board of directors several months after losing his seat and being pushed out as OpenAI’s CEO.

Joining him are three new members: former CEO of Bill and Melinda Gates Foundation Sue Desmond-Hellmann, ex-Sony Entertainment president Nicole Seligman and Instacart CEO Fidji Simo — bringing OpenAI’s board to 8 people.

OpenAI’s board also shared its own internal review of the prior board’s firing of Sam Altman in November. The review concluded it was “a consequence of a breakdown in the relationship and loss of trust between the prior Board and Mr. Altman.” The Board acted within its broad discretion, but Altman’s conduct “did not mandate removal.”

AI2 Incubator scores $200M in compute to feed needy AI startups.

Ex-Google engineer charged with stealing AI trade secrets while working with Chinese companies.

NIST staffers revolt against expected appointment of ‘effective altruist’ AI researcher to US AI Safety Institute. NIST employees reacted based on “fear that Christiano’s association with EA and longtermism could compromise the institute’s objectivity and integrity.”

Europe launches AI office to serve as 'global reference point' on safety, policy and development. The EU aims to create a forum for coordinating member state safety commissions.

Will there be another AI-driven labor strike? SAG-AFTRA Union chief says AI Is Sticking Point in SAG-AFTRA Video Game Contract Talks; Strike Appears ‘50-50 or More Likely’.

AI in the 2024 election: In its report on Trump supporters targeting Black voters with faked AI images, NBC News highlights how these AI-generated images of Trump surrounded by Black voters are an example of “AI-fueled misinformation” in elections. Expect more AI fakery; AI images are convincing to many on social media.

AI Opinions and Articles

What If the Biggest AI Fear Is AI Fear Itself? Artificial intelligence has the potential to create more work than it disrupts. An essay by David Autor, a professor of economics at MIT, suggests AI Could Actually Help Rebuild The Middle Class.

Autor’s reasoning is that AI will “extend the relevance, reach and value of human expertise for a larger set of workers.” He gives examples where “AI tools supplemented expertise rather than displaced experts” in healthcare, software, and customer service. AI will level up expertise and make workers more productive.

He does not fear that AI will displace workers or create a jobless future, and insists we are ‘awash’ in jobs. He says, “AI poses a real risk to labor markets, but not that of a technologically jobless future. The risk is the devaluation of expertise.” AI can make specific human expertise obsolete while making that same expertise more broadly attainable by others. This will lower costs, make jobs more equitable, and restore the “quality, stature and agency that has been lost to too many workers and jobs.”

Because artificial intelligence can weave information and rules with acquired experience to support decision-making, it can enable a larger set of workers equipped with necessary foundational training to perform higher-stakes decision-making tasks currently arrogated to elite experts, such as doctors, lawyers, software engineers and college professors. In essence, AI — used well — can assist with restoring the middle-skill, middle-class heart of the U.S. labor market that has been hollowed out by automation and globalization. - David Autor

A Look Back …

The Elon Musk lawsuit against OpenAI is premised on the claim that OpenAI’s migration from its founding non-profit entity into a hybrid that includes a for-profit subsidiary is betraying its founding charter.

This week, OpenAI fired back publicly with “OpenAI and Elon Musk”, its own account of early OpenAI decisions, and released emails to back up its narrative. Key points:

We realized building AGI will require far more resources than we’d initially imagined

Elon said we should announce an initial $1B funding commitment to OpenAI. In total, the non-profit has raised less than $45M from Elon and more than $90M from other donors.

We and Elon recognized a for-profit entity would be necessary to acquire those resources

As we discussed a for-profit structure in order to further the mission, Elon wanted us to merge with Tesla or he wanted full control. Elon left OpenAI, saying there needed to be a relevant competitor to Google/DeepMind and that he was going to do it himself. He said he’d be supportive of us finding our own path.

What the emails show is that from its 2015 inception, OpenAI was concerned with the vast compute requirements - and consequent cost - of building AI models. It’s expensive to run thousands of GPUs for months on end to build an AI.

In the end, they didn’t get the funding from Elon Musk directly, and they chose not to fold into Tesla, since Elon Musk would have had complete control. Instead they cut a deal with Microsoft in 2019, getting the $1 billion they needed to scale up their AI models.

These emails also show that the “open” in OpenAI was a recruiting ploy, not a commitment to open source AI models. OpenAI’s inscrutable roadmap (when GPT-5?), the mystery behind the OpenAI drama, and their closed AI models have led me to call OpenAI by a more accurate name: OpaqueAI. Someday they will write the OpenAI story, and it will be a great story indeed.

“As we get closer to building AI, it will make sense to start being less open. The Open in openAI means that everyone should benefit from the fruits of AI after its built, but it's totally OK to not share the science (even though sharing everything is definitely the right strategy in the short and possibly medium term for recruitment purposes).” - Ilya Sutskever, 2016