AI Week In Review 24.03.30

Grok-1.5, DBRX, AI21's Jamba, Qwen1.5 MoE 2.7B, LTX Studio, Pinokio 1.3, Hume EVI, Adobe Firefly Services and Custom Models, AutoDev, OpenAI previews Voice Engine, $100B Stargate planned.

TL;DR - This week we’ve had a slew of new AI model releases: X.AI announced Grok-1.5, Databricks released DBRX, AI21 announced Jamba, Qwen team released Qwen1.5 MoE-A2.7B. The future is many AI models; the future is Mixture-of-Experts.

AI Tech and Product Releases

X.AI announced the release of Grok-1.5. It will be available soon to early testers and existing Grok users on X. Grok-1.5 is a big advance on the capabilities of Grok-1:

Improved reasoning: Grok-1.5 scores 81% on MMLU and 74% on HumanEval, better than Mistral Large and in the range of GPT-4-class AI models.

Context window length of 128,000 tokens. Further architecture and training details were not shared.

Databricks released DBRX and DBRX-Instruct, and its benchmarks show it to be a state-of-the-art open LLM. It uses a mixture-of-experts (MoE) 32x9B architecture with 132B total parameters, of which 36B are active on any input. Features of DBRX:

Training: DBRX was trained on 12 trillion tokens at an estimated cost of $10 million, and it has a context length of 32k tokens.

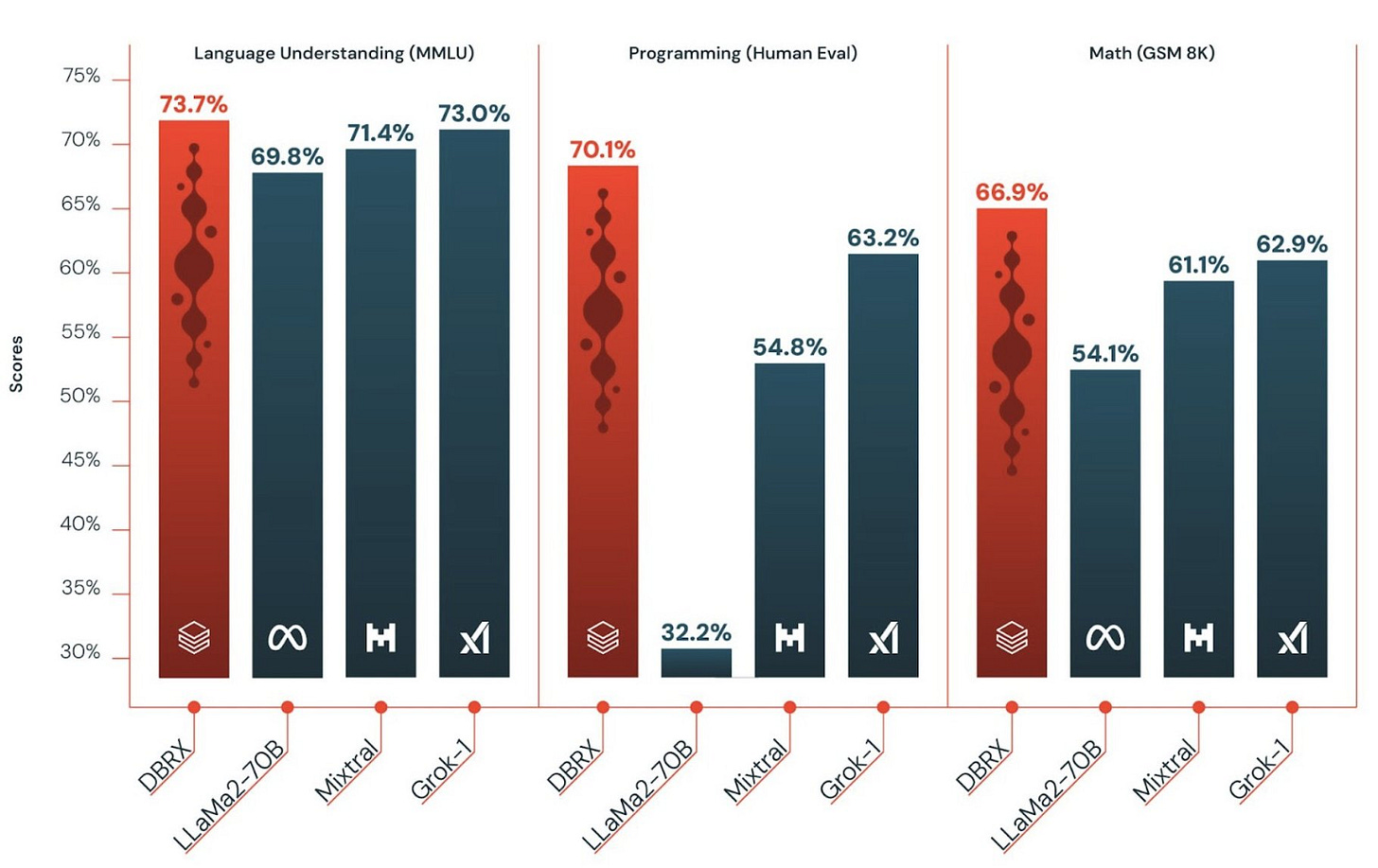

Benchmarks: The DBRX MMLU score of 73.7% and other scores show it to be slightly better than GPT-3.5 and leading open LLMs. Its HumanEval score of 70% is especially strong, putting it above even GPT-4.

License: It has a Llama-like license, so a (sort-of) open AI model.

Jamba has been released by AI21Labs. Jamba is a 52B parameter hybrid SSM-Transformer Mixture of Experts (MoE), rivaling open transformer-based LLMs in performance, released under Apache 2.0 as an open AI model. Some of its features:

High throughput on long contexts, 3 times that of Mixtral 8x7B.

Long context length: Supports 256K context length, and fits up to 140K context on a single A100 80GB, so it is very efficient in handling long context.

Benchmarks: It achieves 67% on MMLU, similar performance to Mixtral 8x7B.

Architecture: The Jamba architecture is quite novel. It interleaves transformer, Mamba, and Mamba+MoE layers. It has 52B parameters total, with 12B active during generation, due to 16 experts with 2 active in generation.
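To make "16 experts with 2 active" concrete, here is a minimal sketch of top-k expert routing in plain Python. It is an illustration only, not Jamba's implementation: the toy experts, the random router logits, and the choice to renormalize the router scores over just the selected experts are all assumptions made for the example.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits, k=2):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    Assumption: weights are a softmax over only the selected logits,
    which is one common variant of top-k gating.
    """
    order = sorted(range(len(router_logits)),
                   key=lambda i: router_logits[i], reverse=True)
    top = order[:k]
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))


def moe_layer(x, experts, router_logits, k=2):
    """Run only the k selected experts and mix their outputs."""
    routed = top_k_route(router_logits, k)
    return sum(w * experts[i](x) for i, w in routed)


NUM_EXPERTS = 16  # matches the expert count quoted for Jamba

# Hypothetical toy experts: expert i multiplies its input by (i + 1).
experts = [lambda x, s=i + 1: s * x for i in range(NUM_EXPERTS)]

# Hypothetical router scores for one token; a real router computes
# these from the token's hidden state.
random.seed(0)
logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

routed = top_k_route(logits, k=2)
output = moe_layer(1.0, experts, logits, k=2)
print("selected experts and weights:", routed)
print("layer output:", output)
```

Only 2 of the 16 expert functions are evaluated per token, which is why the active parameter count (12B) is a fraction of the total (52B), roughly 23%.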

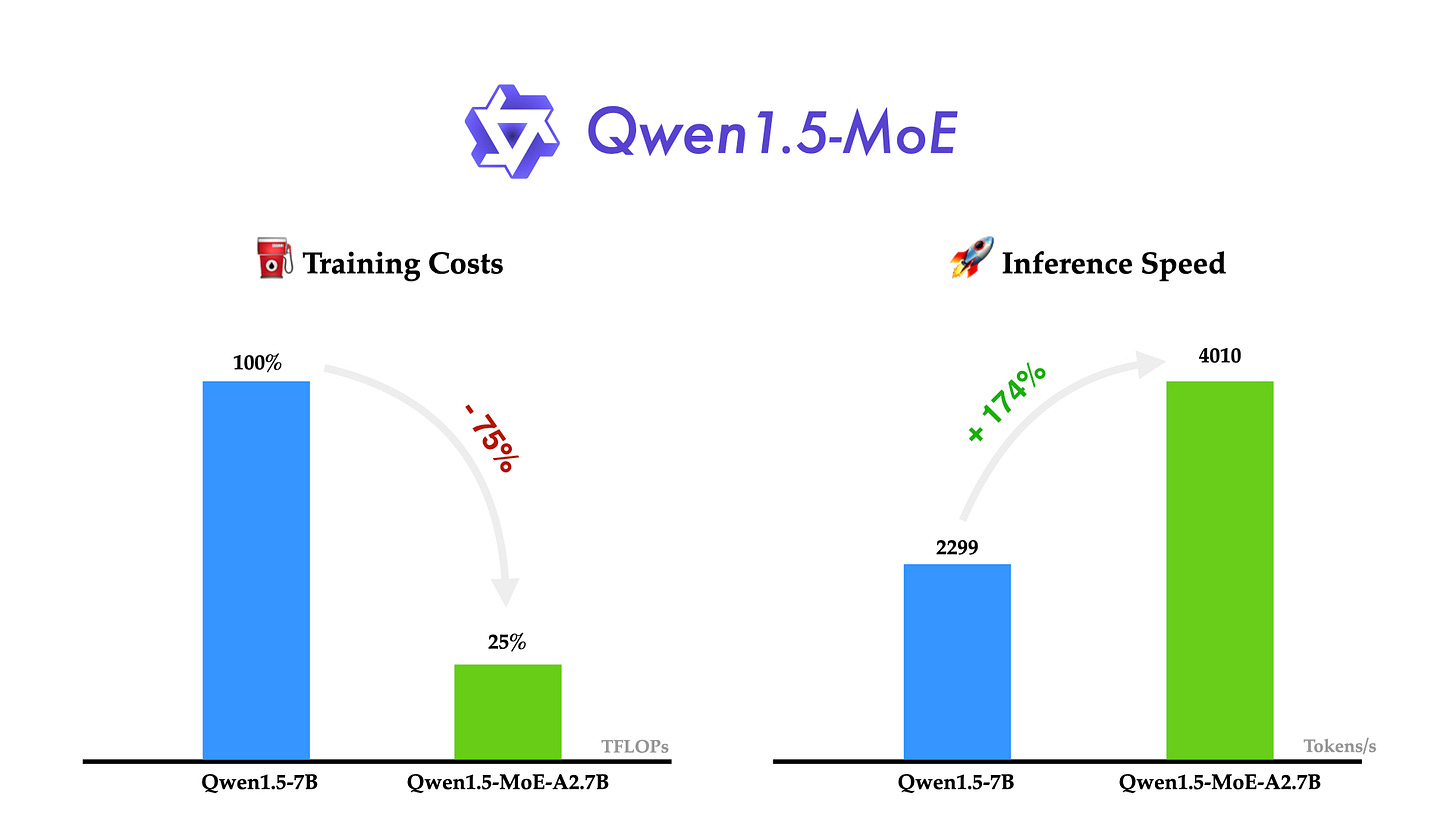

The Qwen team from Alibaba released their first MoE model, Qwen1.5-MoE-A2.7B. This is a small MoE model with 2.7B activated parameters, but achieves 7B model performance on benchmark evaluations. It shows significant advantages in training costs and inference speed over its dense counterparts, proving the value of MoE LLMs over dense LLMs.

The chart above summarizes why the future of LLMs is Mixture of Experts (MoE). Every LLM release mentioned this week (Grok-1.5, Jamba, DBRX, Qwen1.5-MoE-A2.7B) has an MoE architecture. It’s just more efficient for both training and inference. Sparse MoE LLM training is more efficient thanks to Sparse Upcycling: training a small dense model for early pre-training, then replicating it into an MoE to continue pre-training.
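The initialization step of Sparse Upcycling can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not any team's actual training code: the `upcycle` function, the weight dictionary layout, and the router initialization scale are hypothetical names and values chosen for the example.

```python
import copy
import random


def upcycle(dense_ffn_weights, num_experts, hidden_size, seed=0):
    """Turn one dense feed-forward block into an MoE block.

    Each expert starts as an independent copy of the dense weights,
    so the MoE begins from what the dense model already learned.
    The router has no dense counterpart, so it is freshly
    initialized with small random values (scale is an assumption).
    """
    rng = random.Random(seed)
    experts = [copy.deepcopy(dense_ffn_weights) for _ in range(num_experts)]
    router = [[rng.gauss(0.0, 0.02) for _ in range(hidden_size)]
              for _ in range(num_experts)]
    return {"experts": experts, "router": router}


# Hypothetical tiny dense checkpoint: a 2x2 input and output projection.
dense = {
    "w_in": [[0.1, 0.2], [0.3, 0.4]],
    "w_out": [[0.5, 0.6], [0.7, 0.8]],
}

moe = upcycle(dense, num_experts=4, hidden_size=2)
print("experts:", len(moe["experts"]))
print("router rows:", len(moe["router"]))
```

After this step, continued pre-training lets the copied experts drift apart and specialize, while the router learns which expert to send each token to.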

LTX Studio, a platform for AI video generation, is now released (though you need to join a waitlist). Their platform, pitched as “Storytelling Transformed,” goes from text to storyboard to video: you prompt a basic story concept; it generates a storyboard of multiple scenes and shots with consistent cast characters; then it generates the videos. This review of using LTX Studio to make AI short films shows it is impressive but not ready for production.

Pinokio 1.3 has been released. Pinokio is an “AI browser” that lets you install, run, and automate AI applications and models automatically in-browser. New updates with this release:

Pinokio script is now a full-fledged AI scripting language. Script anything AI: installing, launching, querying, automating, etc. Not just AI, any task.

Adobe introduced Firefly Services and Custom Models. Firefly, Adobe’s Generative AI platform for content creation, is adding APIs, tools and services for content generation, editing and assembly, to help organizations automate high-quality content creation. Over 20 new generative and creative APIs are available to developers in Firefly Services.

Adobe also introduced Custom Models, generative AI models trained on a company’s IP, product and brand styles, so companies can maintain brand consistency in generated content.

Top Tools & Hacks

Hume has previewed their new Empathic Voice Interface (EVI), an emotionally intelligent ChatBot that can both detect emotion and respond in a natural synthetic voice that conveys emotions.

Described on X, EVI has “unique empathic capabilities” for emotionally intelligent conversational AI: Responds with human-like tones of voice; reacts to your expressions with language that addresses your emotional state; learns to make you happy by applying your reactions to self-improve over time; understands when users are finished speaking.

This demo is worth playing with, at demo.hume.ai. One reviewer said: “I just had a conversation with an empathic AI chatbot — and it creeped me out.”

My own conversation with EVI was more engaging than creepy. I came away very impressed with the emotional realism of the voice, but I was less impressed that the chatbot asked questions or kept saying “I’m all ears!” and then continued to talk. It’s still got work to do detecting when to stop chatting.

My experience with the voicebot so far was one of amazement at the impressive display of technology and complete fear at the fact it correctly predicted I hadn’t eaten breakfast. - Tom’s Hardware review of Hume AI’s EVI

AI Research News

This week’s AI research roundup covered these AI research results:

VBC: Visual Whole-Body Control for Legged Loco-Manipulation

ViTAR, a Vision Transformer for Multiple Image Resolutions

Extreme Quantization - Towards 1-bit Machine Learning Models

The Unreasonable Ineffectiveness of the Deeper Layers

Long-Form Factuality in LLMs - Using Agents to Check Facts

InternLM2 open-source LLM - Technical Report

There have been open-source alternatives to Devin, the AI Agent software engineer. One of them is Devika, and another is OpenDevin. Another AI Agent software development framework is AutoDev from Microsoft, presented in the paper “AutoDev: Automated AI-Driven Development.” AutoDev gets 91% on HumanEval, a clear sign that the future of AI code generation is agentic.

AI Business and Policy

OpenAI and Microsoft reportedly planning $100 billion datacenter project for an AI supercomputer. A multi-gigawatt supercomputer sounds overly grandiose, but to put it in perspective, this is part of a larger long-term data-center capacity plan for AI: “Stargate would be the largest in a string of datacenter projects the two companies hope to build in the next six years, and executives hope to have it running by 2028.”

Amazon doubles down on Anthropic, completing its planned $4B investment. Amazon added $2.75 billion to the $1.25 billion it invested in September. Perhaps Anthropic’s Claude 3 results are confirming their money is getting used well.

Amazon, Anthropic, and Accenture also recently teamed up to build Generative AI solutions for businesses. “Enterprises will be able to deploy models to address their specific needs, while keeping their data private and secure.”

Google.org, Google’s charitable wing, launches $20M generative AI accelerator program. There are many accelerators out there, but this one has a twist: It’s helping non-profit organizations get on the AI bandwagon.

Cognition Labs, the Peter Thiel-Backed AI Startup behind the “Devin” AI Agent software engineer, seeks $2 Billion Valuation. This would be a six-fold increase in its value from six weeks ago, “reflecting AI frenzy” according to WSJ.

The emotionally-intelligent Chatbot maker Hume AI Raises $50M in Series B Funding. In case you want to know why fundraising news happens proximate to releases and demos: “In connection with the fundraise, Hume AI has released a beta version of its flagship product, an Empathic Voice Interface (EVI).”

Generative AI ‘FOMO’ is driving tech heavyweights to invest billions of dollars in startups. “In 2023, investors pumped $29.1 billion combined into nearly 700 generative AI deals, an increase of more than 260% in value from the prior year, according to PitchBook.” However, the bulk of that has come from outsized investments into some large recipients, such as Anthropic, mentioned above.

U.S. updates export curbs on AI chips and tools to China. The update clarifies restrictions issued in recent years to prevent China from accessing the latest AI chips:

They clarify, for example, that restrictions on chip shipments to China also apply to laptops containing those chips.

AI Opinions and Articles

A non-release and a concern about voice cloning: OpenAI has shared an update on Voice Engine, their technology for synthetic voice generation that can create natural-sounding speech from a 15-second audio clip. This technology was developed in 2022 and is behind OpenAI’s text-to-speech API and ChatGPT’s voices for audio output.

This has also been used in various applications by others, including reading assistance, translating audio for various purposes, audio assistance for those with communication disabilities, etc. In particular, HeyGen has used it for their audio translation feature.

Their final message on Voice Engine is about concerns over misuse of voice cloning AI. They are not releasing Voice Engine now due to those concerns: “We are choosing to preview but not widely release this technology at this time.”

Instead, they encourage steps to prepare for a world of voice cloning technology:

Phasing out voice based authentication as a security measure for accessing bank accounts and other sensitive information

Exploring policies to protect the use of individuals' voices in AI

Educating the public in understanding the capabilities and limitations of AI technologies, including the possibility of deceptive AI content

Accelerating the development and adoption of techniques for tracking the origin of audiovisual content, so it's always clear when you're interacting with a real person or with an AI

How to categorize this OpenAI article? It’s a non-release preview of technology that’s already out and not unique (ElevenLabs has similar). It’s not announcing policy but suggesting it. It’s informative and important nonetheless.

This Voice Engine preview highlights that OpenAI has decided, and will keep deciding, whether to release technology based on their internal compass on AI safety concerns.

This suggests OpenAI could be gating other technology releases as well. There are AI safety concerns around Sora and a future GPT-5. Will they hold Sora or GPT-5 until after the election due to concerns it could get abused? Perhaps. Sama doesn’t have “e/acc” in his X handle bio.

A Look Back …

The Hume EVI reminds me of the AI in the movie “Her”, made in 2013. Empathy and relatable conversation in a friendly female voice will bring its own set of issues when it gets good enough.