AI Week In Review 24.06.08

Qwen2 7B and 72B, KLING video gen, Stable Audio Open, Suno sound-to-song, Udio Audio Upload, Meta AI for WhatsApp, MSoft recalls Recall, AMD's MI325X, Nvidia reaches new highs.

AI Tech and Product Releases

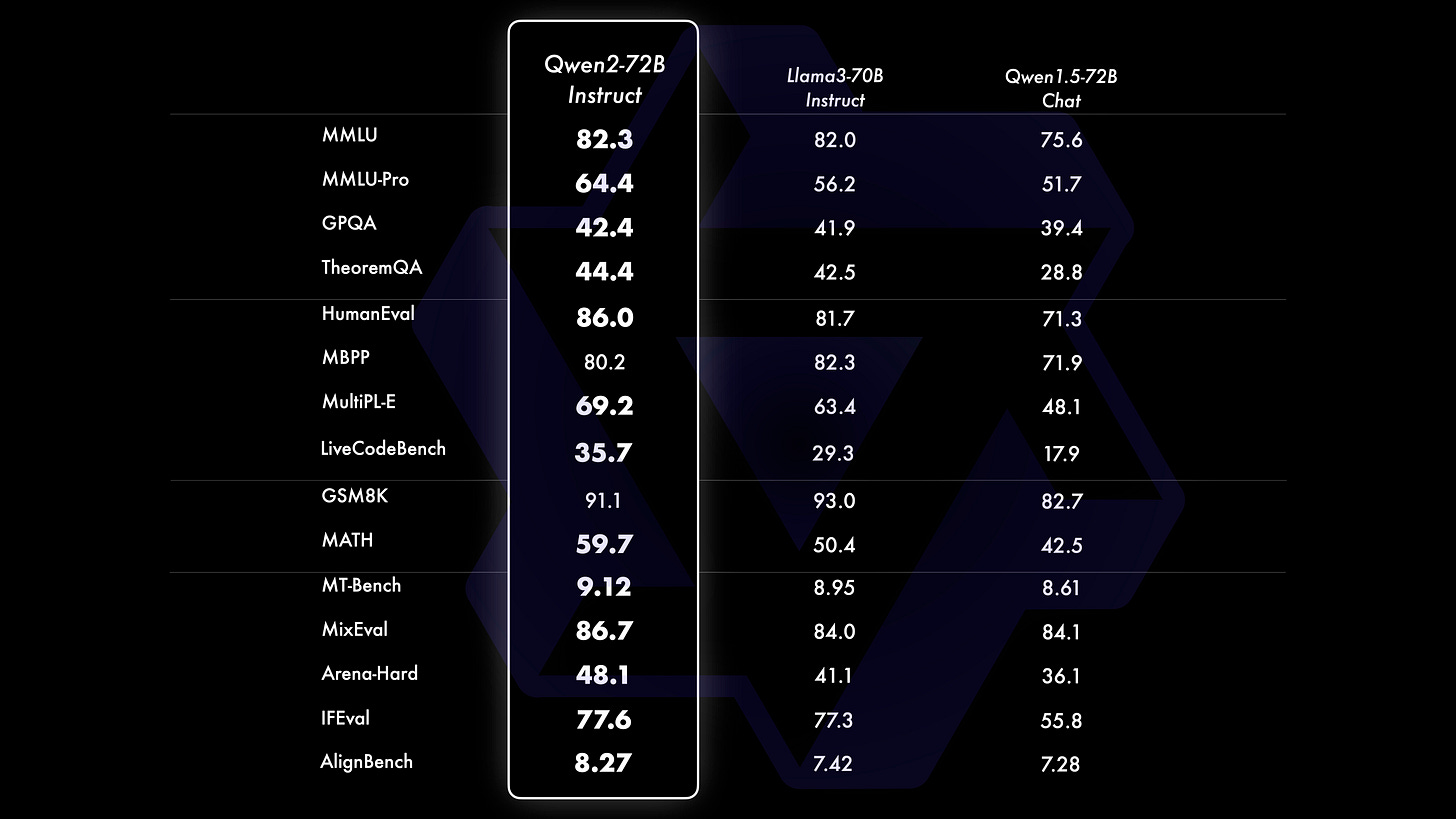

The Qwen Team from Alibaba has released Qwen2, a series of base and instruction-tuned models in 5 sizes: Qwen2-0.5B, Qwen2-1.5B, Qwen2-7B, Qwen2-57B-A14B (mixture-of-experts) and Qwen2-72B. The smaller models have 32K context, while the 7B and 72B models have a context length of 128K. Qwen2-72B beats Llama 3-70B and all prior open LLMs on major benchmarks:

Qwen2-72B: MMLU 82.3, MT-Bench 9.12, HumanEval 86.0, IFEval 77.6. On MMLU-Pro, Qwen2-72B scored 64.4, outperforming Llama 3-70B's 56.2.

Qwen2-7B: MMLU 70.5, MT-Bench 8.41, HumanEval 79.9. Its strong HumanEval score builds on the training work done for CodeQwen 1.5.

Multilingual capabilities: Qwen2 models are trained on 29 languages, including European, Middle Eastern and Asian languages, giving them strong multilingual performance.

While Qwen2-72B uses the original Qianwen License, all other models are released under the permissive open-source Apache 2.0 license.

Kuaishou has announced KLING, a new text-to-video AI model capable of generating high-quality 1080p videos up to 2 minutes long.

Shushant Lakhyani has an X thread showcasing 32 KLING video generations and their prompts, and other KLING videos have been shared on X. The quality is on par with OpenAI's Sora, with realistic physics and impressively life-like movement. For example, Figure 3 shows a cook chopping onions, where the motions, reflections and textures are all very realistic.

The Chinese maker of KLING requires a Chinese phone number to join the waitlist, so those of us in the US don’t have access yet.

Stability AI introduces Stable Audio Open, an open source text-to-audio model for generating samples and sound effects. It can generate up to 47 seconds of drum beats, instrument riffs, ambient sounds, foley and production elements. The model enables audio variations and style transfer of audio samples.

Udio announced an ‘Audio Uploads’ feature to extend and remix user clips:

You can upload an audio clip of your choice, and extend this clip either forward or backward by 32 seconds using up to 2 minutes of context.

Suno also just released a similar “sound-to-song” feature, where users upload their own audio and Suno generates songs from it. An example is here. Ben Nash on X calls it a “gamechanger” and shared generated songs:

I used the new sound-to-song feature of Suno to generate new songs (album of 8 songs) based on my sample and new prompting. New lyrics were also generated and added.

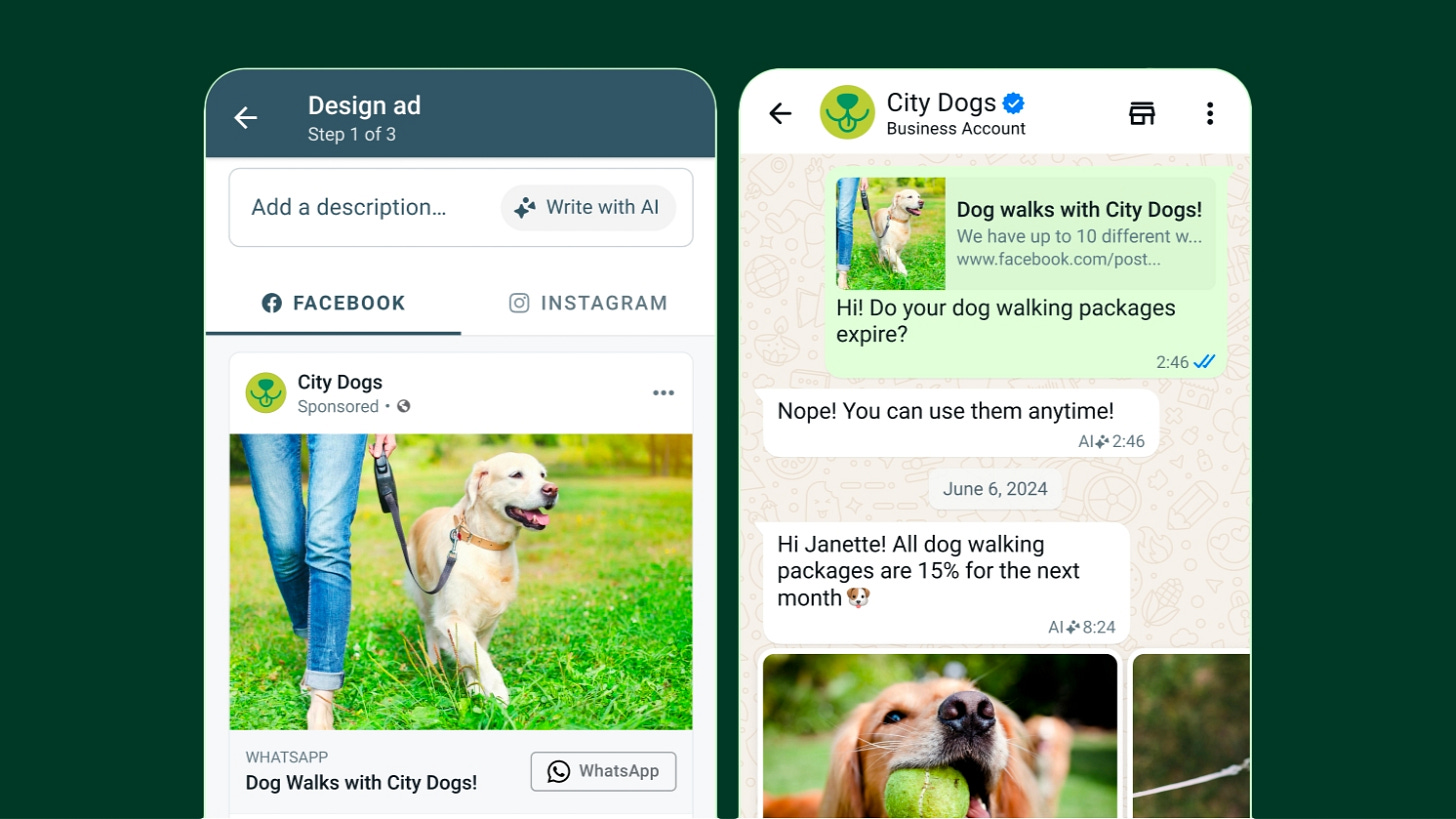

Meta is adding AI-powered features to the WhatsApp Business app. AI will help businesses create click-to-WhatsApp ads and generate responses to frequent customer messages.

Microsoft is recalling Recall. Microsoft changes course on the controversial Windows 11 feature that constantly takes screenshots of your PC's screen. Responding to concerns about data security and ‘big brother’ fears, Microsoft is encrypting all Recall data, requiring sign-in to access data, and you'll have to opt in to use it.

Apple’s WWDC is in a few days, and the rumor mill is kicking up. Apple is reportedly going to introduce Apple Intelligence, their brand for putting AI on the iPhone, iPad and Mac, and could include an OpenAI partnership and chatbot.

The Clip team at JasperAI has released the Flash-Pixart model. This AI image generation model is based on Flash Diffusion, a diffusion distillation method they present in the paper Flash Diffusion: Accelerating Any Conditional Diffusion Model for Few Steps Image Generation. Their distilled version of the PixArt-α model can generate 1024x1024 images in 4 steps. They have a live demo and an official GitHub repo for the model.

Top Tools & Hacks

With capable open models like Llama 3 and Qwen2, we can now build AI agent applications that run entirely locally. LangChain has shared their Recipes for open source local agents with Llama 3, with YouTube explainer videos and GitHub source code for their “Llama3 Cookbooks.”

For example, they show how to use LangGraph and Groq to build RAG agents capable of complex, self-corrective control flow.
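To make “self-corrective control flow” concrete, here is a minimal plain-Python sketch of the loop such an agent runs: retrieve, grade the documents, rewrite the query and retry if nothing relevant came back, then generate and check that the answer is grounded. This is not LangChain's cookbook code and does not use LangGraph or Groq; the corpus and every function here (`retrieve`, `grade_documents`, `rewrite_query`, `generate`, `is_grounded`, `self_corrective_rag`) are hypothetical toy stand-ins for the LLM-backed nodes a real graph would use.

```python
# Toy corpus of (topic, text) pairs standing in for a vector store (hypothetical data).
CORPUS = [
    ("qwen2", "Qwen2-72B has a 128K context window."),
    ("kling", "KLING generates 1080p videos up to 2 minutes long."),
]


def retrieve(query):
    """Toy retriever: returns every document; the grader decides what is relevant."""
    return CORPUS


def grade_documents(query, docs):
    """Stand-in for an LLM relevance grader: keep docs whose topic appears in the query."""
    q = query.lower()
    return [text for topic, text in docs if topic in q]


def rewrite_query(query):
    """Stand-in for an LLM query rewriter: expand a known alias (hypothetical mapping)."""
    aliases = {"alibaba": "qwen2", "kuaishou": "kling"}
    for alias, topic in aliases.items():
        if alias in query.lower() and topic not in query.lower():
            return f"{query} {topic}"
    return query


def generate(query, docs):
    """Stand-in for the LLM answer step: here it simply stitches the relevant docs together."""
    return " | ".join(docs)


def is_grounded(answer, docs):
    """Stand-in for a hallucination grader: every answer segment must come from a doc."""
    return bool(answer) and all(part in docs for part in answer.split(" | "))


def self_corrective_rag(query, max_retries=2):
    """Retrieve -> grade -> (rewrite and retry | generate -> check), with a retry budget."""
    for _ in range(max_retries + 1):
        relevant = grade_documents(query, retrieve(query))
        if not relevant:
            query = rewrite_query(query)  # self-correction: fix the query and loop back
            continue
        answer = generate(query, relevant)
        if is_grounded(answer, relevant):  # only return answers supported by the docs
            return answer
    return "I don't know."  # retry budget exhausted


print(self_corrective_rag("What context length does Alibaba's model have?"))
# -> Qwen2-72B has a 128K context window.
```

The first pass finds nothing relevant, so the agent rewrites the query and succeeds on the second pass; a query with no matching topic exhausts the retry budget and returns "I don't know." instead of guessing. In a LangGraph version, each function would become a graph node and the `if` branches would become conditional edges.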

AI Research News

Our AI research highlights for this week include:

Mamba-2 State Space Model and Structured State Space Duality (SSD)

FineWeb and FineWeb-Edu Datasets

Perplexity-Based Data Pruning Improves Training Efficiency
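The “duality” in Mamba-2's Structured State Space Duality is that the same selective SSM can be computed two ways: as a linear-time recurrent scan, or as a quadratic, attention-like matrix product with a causal decay mask. Below is a minimal toy sketch of that equivalence for a single input channel, assuming (as in Mamba-2) that the state transition is a scalar decay a_t times the identity. The function names and toy sizes are hypothetical; this is not the Mamba-2 implementation, which computes these forms in efficient chunked blocks on GPU.

```python
import math
import random


def ssd_recurrent(a, B, C, x):
    """Linear-time scan: h_t = a_t * h_{t-1} + B_t * x_t, then y_t = C_t . h_t."""
    h = [0.0] * len(B[0])  # N-dimensional hidden state, starts at zero
    ys = []
    for a_t, B_t, C_t, x_t in zip(a, B, C, x):
        # Scalar decay a_t multiplies the whole state (A = a_t * I)
        h = [a_t * h_i + b_i * x_t for h_i, b_i in zip(h, B_t)]
        ys.append(sum(c_i * h_i for c_i, h_i in zip(C_t, h)))
    return ys


def ssd_quadratic(a, B, C, x):
    """Quadratic, attention-like form: y = (L o C B^T) x with a causal decay mask L."""
    ys = []
    for t in range(len(x)):
        y_t = 0.0
        for s in range(t + 1):  # causal: only past and current positions
            decay = math.prod(a[s + 1 : t + 1])  # L[t][s] = a_{s+1} * ... * a_t, 1 when s == t
            score = sum(c * b for c, b in zip(C[t], B[s]))  # C_t . B_s, like a query-key score
            y_t += decay * score * x[s]
        ys.append(y_t)
    return ys


if __name__ == "__main__":
    rng = random.Random(0)
    T, N = 6, 4  # sequence length and state size (arbitrary toy values)
    a = [rng.uniform(0.5, 1.0) for _ in range(T)]
    B = [[rng.gauss(0, 1) for _ in range(N)] for _ in range(T)]
    C = [[rng.gauss(0, 1) for _ in range(N)] for _ in range(T)]
    x = [rng.gauss(0, 1) for _ in range(T)]
    for r, q in zip(ssd_recurrent(a, B, C, x), ssd_quadratic(a, B, C, x)):
        print(f"{r:+.6f}  {q:+.6f}")  # the two columns should match
```

The recurrent form is cheap at inference time, while the matrix form maps onto the same matmul hardware that attention uses, which is the practical payoff of the duality.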

OpenAI has published a paper on extracting interpretable features from GPT-4’s internal representations using sparse autoencoders, as a step toward better understanding AI models. “Our methods scale better than existing work, and we use them to find 16 million features in GPT-4.”

Co-authors on the paper include members of OpenAI's Superalignment team, Ilya Sutskever and Jan Leike. Now that both have left OpenAI, will this work continue or fall by the wayside? Jan Leike has joined Anthropic, which has published its own strong work on this topic.
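As a rough illustration of what “finding features” means, here is a toy sketch of a TopK sparse autoencoder forward pass, loosely following our reading of the paper's setup: an activation vector x is encoded as z = TopK(W_enc (x - b_pre)), so only k features fire, and reconstructed as x_hat = W_dec z + b_pre. The sizes, random weights, transpose initialisation and class name `TopKSAE` are assumptions for illustration; training (minimising reconstruction error over many activations) is omitted entirely.

```python
import random


def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def topk(values, k):
    """Keep the k largest pre-activations and zero out everything else."""
    keep = sorted(range(len(values)), key=lambda i: values[i], reverse=True)[:k]
    out = [0.0] * len(values)
    for i in keep:
        out[i] = values[i]
    return out


class TopKSAE:
    """Toy TopK sparse autoencoder: z = TopK(W_enc (x - b_pre)), x_hat = W_dec z + b_pre."""

    def __init__(self, d_model, n_features, k, seed=0):
        rng = random.Random(seed)
        self.k = k
        self.b_pre = [0.0] * d_model  # learned in practice; zero here
        # Encoder: n_features rows, each a direction in the model's activation space
        self.W_enc = [[rng.gauss(0, 1) for _ in range(d_model)] for _ in range(n_features)]
        # Decoder initialised as the encoder's transpose (d_model rows, n_features columns)
        self.W_dec = [list(col) for col in zip(*self.W_enc)]

    def encode(self, x):
        centered = [xi - bi for xi, bi in zip(x, self.b_pre)]
        return topk(matvec(self.W_enc, centered), self.k)

    def decode(self, z):
        return [r + b for r, b in zip(matvec(self.W_dec, z), self.b_pre)]


sae = TopKSAE(d_model=8, n_features=32, k=4)
rng = random.Random(1)
x = [rng.gauss(0, 1) for _ in range(8)]  # stand-in for one activation vector
z = sae.encode(x)
print(sum(v != 0.0 for v in z), "of", len(z), "features active")
x_hat = sae.decode(z)
```

Scaled up, the dictionary has millions of rows (the paper's 16 million features) while k stays small, so each activation is explained by a handful of candidate features that researchers can then try to interpret.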

AI Business and Policy

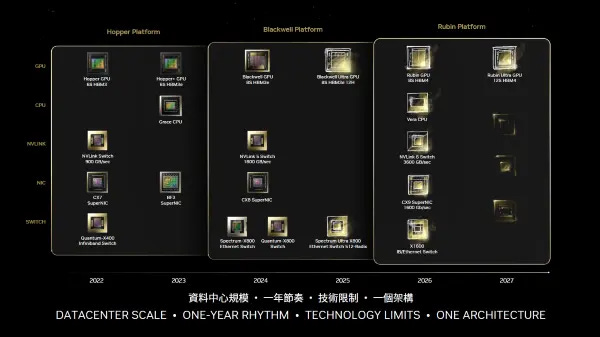

Nvidia, Intel and AMD made big announcements at Computex 2024. Nvidia announced its extended roadmap, with Blackwell followed by Blackwell Ultra and then Rubin, on a one-year upgrade cadence with each cycle “pushed to the limits.”

Nvidia's stock price hit new highs on confidence that the company will continue to dominate the growing AI datacenter chip market, and on news that Elon Musk plans to build a massive AI supercomputer with Nvidia chips by next year.

AMD announced the 288 GB Instinct MI325X GPU at Computex. Coming later this year, it claims 1.3 petaflops of FP16 performance and aims to compete with Nvidia’s H200.
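To put 288 GB in perspective, here is a back-of-envelope sketch of how large a model's weights can fit on one MI325X at FP16 (2 bytes per parameter). It assumes decimal gigabytes and counts weights only, ignoring KV cache, activations and runtime overhead, so real deployments need headroom beyond these numbers. The helper names are hypothetical.

```python
HBM_GB = 288        # MI325X memory capacity from AMD's announcement
BYTES_PER_FP16 = 2  # FP16 stores each parameter in 2 bytes


def fp16_weights_gb(params_billion):
    """Memory for model weights alone at FP16 (1e9 params * 2 bytes = 2 GB)."""
    return params_billion * BYTES_PER_FP16


def fits_on_one_gpu(params_billion, hbm_gb=HBM_GB):
    """Weights-only check; ignores KV cache, activations and runtime overhead."""
    return fp16_weights_gb(params_billion) <= hbm_gb


print(fp16_weights_gb(72))      # Qwen2-72B weights: 144 GB
print(fits_on_one_gpu(72))      # True
print(HBM_GB / BYTES_PER_FP16)  # upper bound on FP16 params (billions): 144.0
```

By this rough measure, a 72B model like Qwen2-72B uses about half the card's memory for weights, leaving the rest for KV cache on long contexts.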

According to The Information, the AI startup Harvey, which builds generative AI for law firms, is in talks to raise $600M at a $2B valuation and is looking to buy legal research company vLex.

From Andrew Curran on X, OpenAI’s Sora is meant for the studios, not consumers:

Bryan Lourd, CEO of Creative Artists, said yesterday that OpenAI took the Sky voice down when he asked them to because they are mid-deal with multiple studios over Sora use, and those deals will be announced imminently.

Airbus presented its new Wingman concept at ILA Berlin. Wingman is an unmanned, AI-powered escort drone for manned fighter jets; a full-scale model was showcased at the International Aerospace Exhibition (ILA) in Berlin this week.

AI Opinions and Articles

OpenAI employees and ex-employees wrote an open letter calling for A Right to Warn about Advanced Artificial Intelligence. They state that AI companies hold substantial non-public information about the capabilities and risks of their systems, but have only weak obligations to share it with governments and the public. They are calling on AI companies to commit to principles that protect employees who speak out on these matters:

That the company will not enter into or enforce any agreement that prohibits “disparagement” or criticism of the company for risk-related concerns …

That the company will facilitate a verifiably anonymous process for current and former employees to raise risk-related concerns …

That the company will support a culture of open criticism and allow its current and former employees to raise risk-related concerns about its technologies to the public …

That the company will not retaliate against current and former employees who publicly share risk-related confidential information after other processes have failed.

OpenAI has already rescinded the ‘non-disparagement’ demands in its separation agreements. AI companies could do more to be open, but I don’t believe proprietary AI model builders will want to be fully open.

“We are hopeful that these risks can be adequately mitigated with sufficient guidance from the scientific community, policymakers, and the public. However, AI companies have strong financial incentives to avoid effective oversight, and we do not believe bespoke structures of corporate governance are sufficient to change this.” - From “A Right to Warn” open letter

OpenAI gave an update on GPT-4o and its text-to-speech technology, expanding on how Voice Engine works and on its safety research. Due to safety and abuse concerns (such as deepfake voices), it will restrict GPT-4o’s audio outputs to a selection of preset voices for general release.

About the voices that were selected, OpenAI said “These voices were sourced from professional voice actors that were selected through a carefully considered casting process.” No mention was made of Scarlett Johansson or the legal battle between OpenAI and her.