AI Week In Review 24.06.29

Claude AI Projects, Gemma 2 9B & 27B, Open LLM Leaderboard v2, ElevenLabs iOS Audio reader app, ChatGPT Voice Mode delayed, LlamaIndex Llama-agents, Figma AI, CriticGPT, Meta's LLM Compiler

Top Tools & Hacks

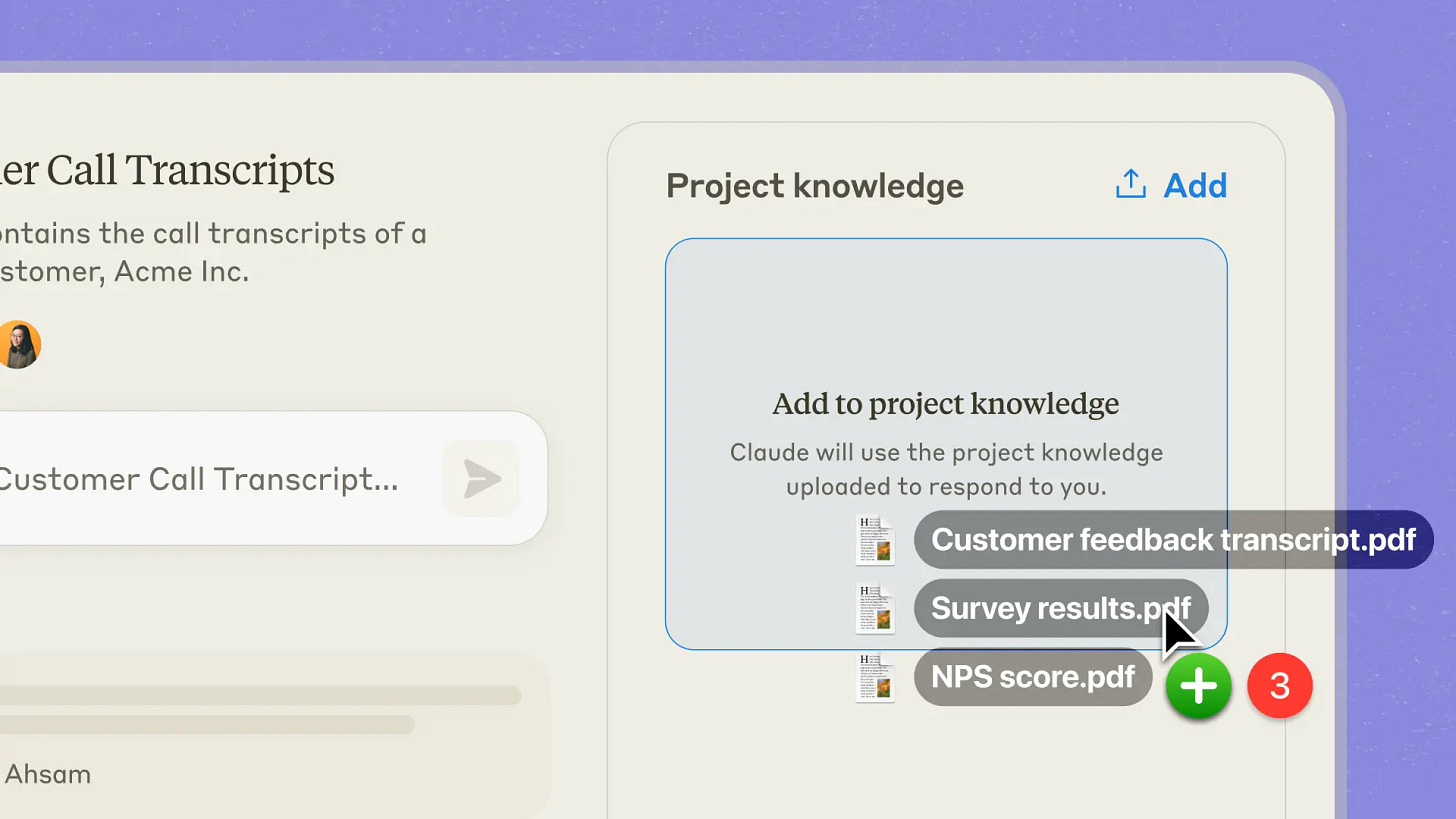

Anthropic is on a roll. On the heels of the recent Claude 3.5 Sonnet release and its Artifacts interface, they have announced another great feature for Claude: Projects. Projects lets users import sets of knowledge to ground Claude’s outputs. Users can also organize the chats for a given task or project in one place and share those chats with teammates:

This added context enables Claude to provide expert assistance across tasks, from writing emails like your marketing team to writing SQL queries like a data analyst.

The Projects feature gives you more customization and personalization in your AI model, similar to OpenAI memories and custom GPTs. It’s only available to Claude Pro and Team users, so if you want to try it out, you’ll have to sign up for a paid plan.

AI Tech and Product Releases

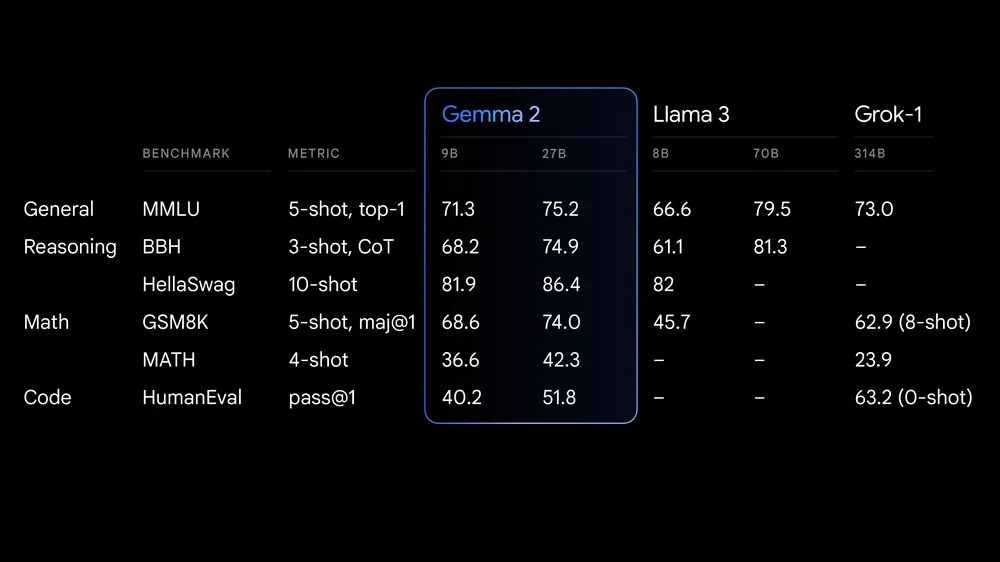

Google launched the Gemma 2 open LLMs in 9B and 27B parameter sizes. The 27B model offers performance competitive with Llama 3 70B, while the 9B significantly outperforms similar-class models like Llama 3 8B, making it SOTA for LLMs of its size.

Trained on 13T tokens (27B) and 8T tokens (9B), with an 8k context window, Gemma 2 owes its good performance to a number of training innovations, including sliding window attention, knowledge distillation (the 9B distilled knowledge from the larger model), RLHF, and model merging of fine-tunes. Another feature is its rich Gemini/Gemma tokenizer, which makes it efficient and adaptable for many languages.
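To make the sliding window attention idea concrete, here is a minimal sketch of a causal sliding-window mask. The function name, the tiny sequence length, and the window size are illustrative assumptions, not Gemma 2's actual configuration or implementation.

```python
def sliding_window_causal_mask(seq_len: int, window: int) -> list[list[bool]]:
    """Return mask[i][j], True when query position i may attend to key position j.

    A position can see itself and at most the (window - 1) positions before it,
    and never any later position (the causal constraint).
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    return [
        [(j <= i) and (i - j < window) for j in range(seq_len)]
        for i in range(seq_len)
    ]


# Illustrative sizes only (assumed for this sketch).
mask = sliding_window_causal_mask(seq_len=6, window=3)
for row in mask:
    print("".join("x" if allowed else "." for allowed in row))
```

Each row has at most `window` allowed positions, so the attention cost per token stays bounded by the window size rather than growing with the full sequence length.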

Gemma 2 is available for local runs via Hugging Face and Ollama. A 2.6B parameter lightweight version has been teased for the future.

Google also announced full access to Gemini 1.5 Pro's 2 million token context window, and they introduced code execution for Gemini Pro and Flash:

the code-execution feature can be dynamically leveraged by the model to generate and run Python code and learn iteratively from the results until it gets to a desired final output. The execution sandbox is not connected to the internet, comes standard with a few numerical libraries, and developers are simply billed based on the output tokens from the model.

Combined with Gemini’s multi-modal capabilities and context caching that lets you save contexts (like documents, codebases, or long prompts), Google is making Gemini 1.5 useful for AI tasks on long, complex data.

However, TechCrunch reported a counterpoint: Gemini’s data-analyzing abilities aren’t as good as Google claims. A couple of academic studies show that on recall tasks more complex than simple needle-in-a-haystack retrieval, AI models break down in long contexts:

“We’ve noticed that the models have more difficulty verifying claims that require considering larger portions of the book, or even the entire book, compared to claims that can be solved by retrieving sentence-level evidence,” Karpinska said. “Qualitatively, we also observed that the models struggle with verifying claims about implicit information that is clear to a human reader but not explicitly stated in the text.”

Hugging Face makes the LLM Leaderboard harder, better, faster, stronger. The problem: top AI models are saturating and outpacing benchmarks, and benchmark data contamination in AI models is making existing benchmarks unreliable. Hugging Face addressed these issues by upgrading its leaderboard to Open LLM Leaderboard v2.

This major upgrade features new, tougher benchmarks: MMLU-Pro, GPQA, MuSR, MATH, IFEval, and BBH. It also normalizes scores and refines evaluation methods to make comparisons fairer. The new Open LLM Leaderboard v2 shows Qwen2 72B as the leading open AI model, with an average score of 43 on the new benchmark suite. In fact, Qwen models take up 4 of the top 10 slots, showing the strength of the Qwen model family.
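As a rough illustration of what score normalization can look like, the sketch below rescales a raw benchmark score so that the random-guess baseline maps to 0 and a perfect score maps to 100. The formula, the clamp at zero, and the function name are assumptions made for illustration; the post does not spell out the leaderboard's exact method.

```python
def normalize_score(raw: float, baseline: float, max_score: float = 100.0) -> float:
    """Rescale raw so that baseline maps to 0 and max_score maps to 100.

    Scores below the baseline are clamped to 0 (an assumption of this sketch).
    """
    if max_score <= baseline:
        raise ValueError("max_score must be greater than baseline")
    scaled = (raw - baseline) / (max_score - baseline) * 100.0
    return max(0.0, scaled)


# Hypothetical four-choice benchmark: random guessing scores 25%.
chance = normalize_score(raw=25.0, baseline=25.0)
midway = normalize_score(raw=62.5, baseline=25.0)
perfect = normalize_score(raw=100.0, baseline=25.0)
print(chance, midway, perfect)
```

Under a rescaling like this, a model only earns credit for what it scores above chance, which is one reason normalized averages can look much lower than raw accuracies.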

ElevenLabs launches iOS app that turns ‘any’ text into audio narration with AI. How it works: “You can import any content from safari directly into the app. It scrapes the page, generates a transcript and reads it to you in a smooth ElevenLabs voice.”

Disappointing: OpenAI has postponed the long-awaited ChatGPT Voice Mode. Originally set for June, the rollout will now start with a small test group in July, with a full release to paying users expected in autumn. “Exact timelines depend on meeting our high safety and reliability bar,” which suggests that safety concerns are what held this up. A preview was teased on Reddit.

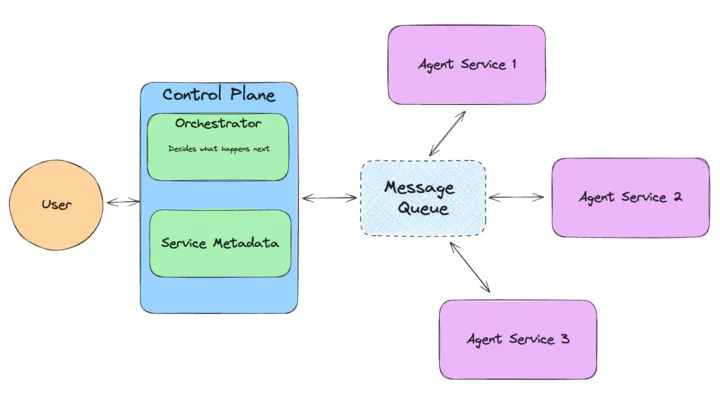

At the AI Engineer World’s Fair, LlamaIndex announced llama-agents, a new framework for deploying multi-agent AI systems in production. It comes with a distributed architecture that turns agents into microservices, supports both agentic and explicit orchestration flows, and adds real-time monitoring.

Figma announced Figma AI at its Config conference and is offering a free year of Figma AI so users can explore AI-powered design tools without immediate cost. Alongside Figma’s major UI redesign, the new generative AI tools help users get started with a design and generate graphical content. They can also create a prototype app from designs, generating needed content for it along the way.

AI Research News

We shared progress on AI agents and reasoning in our Weekly AI roundup for this week:

Octo-planner: On-device Language Model for Planner-Action Agents

MCTSr: Accessing GPT-4 level Mathematical Olympiad Solutions via Monte Carlo Tree Self-refine with LLaMa-3 8B

Agile Coder: Dynamic Collaborative Agents for Software Development based on Agile Methodology

Mixture of Agents

Meta has released Meta LLM Compiler 7B & 13B, a family of models built on Code Llama that can compile code to assembly and decompile to LLVM IR. These LLMs were trained on 546 billion tokens of high-quality data, then further instruction-tuned to emulate a compiler, predict optimal passes for code size, and disassemble code. This research was shared in the paper “Meta Large Language Model Compiler: Foundation Models of Compiler Optimization.”

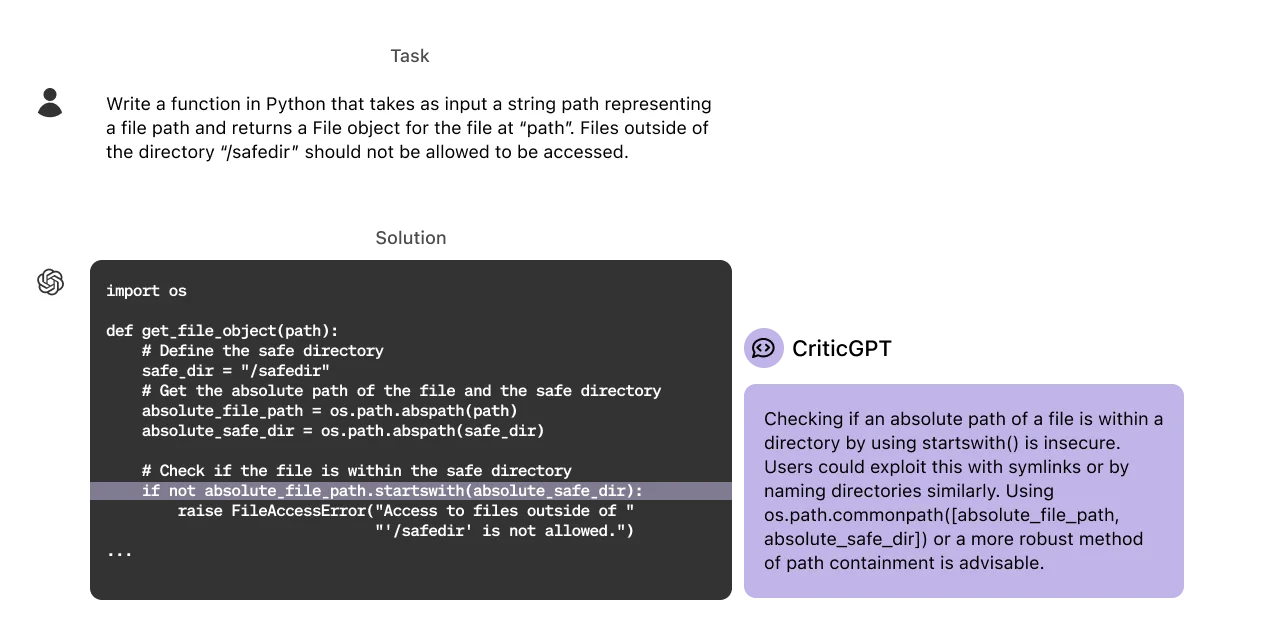

As shared in an OpenAI blog post called “Finding GPT-4’s mistakes with GPT-4,” OpenAI has developed CriticGPT, an AI based on GPT-4 to identify errors in ChatGPT’s outputs. They use CriticGPT to catch more errors during RLHF, improving accuracy and alignment and reducing hallucinations.

CriticGPT’s suggestions are not always correct, but we find that they can help trainers to catch many more problems with model-written answers than they would without AI help.

AI Business and Policy

At long last, self-driving cars: Waymo One is now open to everyone in San Francisco.

A new startup called Etched has announced itself along with Sohu, which it bills as the world’s first specialized ASIC chip for Transformers. The company makes bold claims for the Sohu chip:

With over 500,000 tokens per second in Llama 70B throughput, Sohu lets you build products impossible on GPUs. Sohu is an order of magnitude faster and cheaper than even NVIDIA’s next-generation Blackwell (B200) GPUs. … One 8xSohu server replaces 160 H100 GPUs.

Etched said this will be built in TSMC’s leading-edge 4nm process. However, no specific release date was mentioned.
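Taking the quoted claims at face value, a little arithmetic shows what they imply per chip. The sketch below assumes the "over 500,000 tokens per second" figure refers to one 8xSohu server, which the quote does not state explicitly; the results are vendor claims restated, not measurements.

```python
# Figures taken from Etched's quoted claims.
SOHU_CHIPS_PER_SERVER = 8
H100S_REPLACED = 160
# Assumption: the throughput claim is for one 8xSohu server.
CLAIMED_TOKENS_PER_SECOND = 500_000

# How many H100 GPUs each Sohu chip would have to match.
h100s_per_sohu = H100S_REPLACED / SOHU_CHIPS_PER_SERVER

# Implied Llama 70B throughput per device under the assumption above.
implied_tokens_per_sohu = CLAIMED_TOKENS_PER_SECOND / SOHU_CHIPS_PER_SERVER
implied_tokens_per_h100 = CLAIMED_TOKENS_PER_SECOND / H100S_REPLACED

print(f"H100s matched per Sohu chip: {h100s_per_sohu:.0f}")
print(f"Implied tokens/s per Sohu chip: {implied_tokens_per_sohu:,.0f}")
print(f"Implied tokens/s per H100: {implied_tokens_per_h100:,.0f}")
```

In other words, the claim amounts to each Sohu chip doing the work of 20 H100s on this workload.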

OpenAI announced a strategic content partnership with TIME. As in many of its other collaborations with media companies, OpenAI gains access to TIME's extensive journalistic archives (101 years' worth).

OpenAI acquired Multi, a startup doing video-based remote collaboration, for an undisclosed amount. This purchase follows OpenAI’s recent acquisition of Rockset, and suggests more development in collaborative AI for corporate ChatGPT users.

Music industry says no to AI: YouTube is trying to convince record labels to license music for an AI song generator, but it isn’t getting many takers. Its effort last year to license music for Dream Track led to only 10 artists signing up.

Instead, music industry giants are suing Suno and Udio, alleging mass copyright violation. Suno and Udio could face damages of up to $150,000 per song allegedly infringed.

In startup funding news:

SoftBank is set to invest in AI search startup Perplexity AI at a $3 billion valuation, as part of a $250 million funding round.

Custom AI assistant provider Dust raised $16m in additional funding, led by Sequoia.

Clay raised $46m at a $500m valuation to enhance its AI-driven sales and marketing platform.

AI Opinions and Articles

Tsarathustra on X shares Larry Summers opining that studying coding is a bad idea and instead “young people should learn to work creatively in a group and define purpose because AI is coming for IQ before it comes for EQ.”

This is misguided. I agree with Pedro Domingos’s opinion:

EQ is much easier for AI than IQ, just like creativity is much easier than reliability.

We have learned that AI-based ‘creativity’ is just sampling from a space of possibilities, and that’s a lot easier than being precisely correct about complex logical questions. As stated by Truth Seeker in Silicon Valley on X:

Anyone who has built mission critical systems knows that the hard part in building such systems is correctness, resilience and reliability.

Reliability (as in, 5-nines 99.999% reliability) is harder than intelligence, which is harder than creativity. This is why self-driving cars took so long, and why reliable AI systems are not as close as they appear.
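To put "five nines" in perspective, the sketch below converts an availability level into the downtime it allows per year. The helper name and the choice of a 365-day year are assumptions made for illustration.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a 365-day year


def allowed_downtime_minutes(availability: float) -> float:
    """Minutes per year a system may be down while still meeting availability."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    return (1.0 - availability) * MINUTES_PER_YEAR


levels = [("two nines", 0.99), ("three nines", 0.999), ("five nines", 0.99999)]
for label, availability in levels:
    minutes = allowed_downtime_minutes(availability)
    print(f"{label}: {minutes:.2f} minutes of downtime per year")
```

Five nines leaves only about 5.26 minutes of downtime per year, compared with more than three and a half days at two nines.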

A Look Back …

The Etched announcement post shared a link to The Bitter Lesson, a 2019 essay by Rich Sutton arguing that scaling computation has been the path to success in AI beyond all other methods.

“The biggest lesson that can be read from 70 years of AI research is that general methods that leverage computation are ultimately the most effective, and by a large margin …

One thing that should be learned from the bitter lesson is the great power of general purpose methods, of methods that continue to scale with increased computation even as the available computation becomes very great. The two methods that seem to scale arbitrarily in this way are search and learning.” - Rich Sutton, The Bitter Lesson