AI Week In Review 25.01.25

DeepSeek R1, OpenAI Operator, Gemini 2.0 Flash Thinking 01-21, ByteDance UI-TARS, Anthropic Claude citations, Perplexity AI assistant, Humanity's Last Exam (HLE) benchmark, $500B Project Stargate.

AI Tech and Product Releases

OpenAI introduced Operator, their computer-using AI agent (CUA) that performs tasks autonomously. The launch featured a live demo of Operator making dinner reservations, buying from a shopping list, and taking quizzes online. This thread mentions several other Operator use cases. The feature works through its own custom web browser interface rather than controlling local devices. OpenAI claims SOTA performance on interfacing with the GUI:

CUA establishes a new state-of-the-art in both computer use and browser use benchmarks by using the same universal interface of screen, mouse, and keyboard.

The tool is available as a research preview for users on the $200 Pro subscription plan, at operator.chatgpt.com.

OpenAI may store chats and associated screenshots from Operator users for up to 90 days, even after manual deletion. That is longer than the 30-day retention period for ChatGPT; the extended window is designed to combat abuse with enhanced fraud monitoring.

DeepSeek has shaken the AI world by releasing DeepSeek-R1, an MIT-licensed, SOTA open-source reasoning model. As we shared in DeepSeek Releases R1 and Opens up AI Reasoning, this model achieves o1-level performance on benchmarks, and ‘vibe checks’ on R1 are coming back positive. Because it is open-source, incredibly cheap compared to o1, and the AI model training pipeline is public, it will accelerate progress on AI reasoning models tremendously.

Google has released Gemini 2.0 Flash Thinking 01-21 to update their AI reasoning model, bringing with it 1 million token context, code execution, and better AI reasoning performance. Noam Shazeer on X:

We’ve boosted performance across challenging math, science, and multimodal reasoning benchmarks (AIME: 73.3%, GPQA: 74.2%, MMMU: 75.4%). We also enabled a 1M long context window to help with more complex research tasks and are giving devs the ability to turn on code execution.

It’s good but far from perfect (failed the cup, marble and microwave question).

ByteDance has released UI-TARS, a GUI agent model family (in 2B, 7B, and 72B sizes) designed to control graphical interfaces using human-like perception, reasoning, and GUI actions. They claim SOTA performance, with an OSWorld score of 24.6%, although OpenAI’s just-released Operator outshines that at 38.1%.

The ByteDance GUI Agent model is available via Hugging Face. ByteDance also released a macOS desktop app on GitHub, so users can run the GUI agent as an application on their own machine. While they warn that the quantized model performance cannot be guaranteed, this app is a sign that useful GUI Agents are coming.

Bringing another AI Agent into the mix, Perplexity announced their new multi-app AI Assistant. Assistant uses reasoning, web search, and apps to help with daily tasks, from simple questions to complex requests that span several apps. It could be used to book dinner, find a forgotten song, call a ride, draft emails, set reminders, and more. It is available in 15 languages.

Google added Gemini multitasking capabilities across apps, and Samsung's new phones replaced Bixby with Google Gemini as the default AI assistant. This switch benefits users with a more capable AI assistant and provides Google with a significant boost in user interactions, crucial for improving Gemini’s performance.

OpenAI announced the addition of the o1 model to the ChatGPT Canvas interface, including enhanced capabilities to render HTML and React code.

Anthropic unveiled a Citations feature for its Claude API. Citations allows Anthropic’s Claude AI models to automatically cite source documents like emails, enhancing document summarization and Q&A accuracy.

Hugging Face released SmolVLM-256M and SmolVLM-500M, the smallest AI models for analyzing images, videos, and text. These models are optimized for devices with limited resources and can perform tasks such as image description and document analysis. Despite their small size, they outperform larger models on visual understanding benchmarks and are available to download and use under an open-source license.

Nonprofit Center for AI Safety (CAIS) and Scale AI have launched a new benchmark called Humanity’s Last Exam to test frontier AI systems with complex questions across multiple subjects. The exam includes thousands of crowdsourced questions in various formats and is so challenging no current AI model scores better than 10%. CAIS and Scale AI aim to make the benchmark accessible to researchers for further evaluation of new AI models.

ByteDance has introduced an AI coding assistant, Trae, that looks like a Cursor competitor.

Tencent announced Hunyuan3D 2.0, the newest version of their 3D asset creation model, claiming “revolutionary effects that rival those of commercial products.”

Top Tools & Hacks

DeepSeek R1 being open source means that AI reasoning is cheap and available everywhere. Many will take advantage of R1’s openness and extend it creatively. For example, you can run R1 to “think” even more by using a Gist script that extends R1 thinking. LangChain on YouTube presents building a fully local "deep researcher" with DeepSeek-R1.
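The idea behind "extending" R1's thinking can be sketched in a few lines: whenever the model is about to close its reasoning with `</think>`, the closing tag is withheld and a short nudge is appended so it keeps reasoning. The sketch below is only an illustration of that control flow, not the Gist's actual code. The names `extend_thinking`, `generate_thought`, and `fake_model`, the nudge text, and the number of rounds are all assumptions; in real use, `generate_thought` would wrap a local R1 call that stops at `</think>`.

```python
from typing import Callable

END_THINK = "</think>"  # tag R1 emits when it finishes reasoning


def extend_thinking(
    generate_thought: Callable[[str], str],
    prompt: str,
    extra_rounds: int = 2,
    nudge: str = "Wait,",
) -> str:
    """Force additional reasoning rounds before the thinking block closes.

    `generate_thought(context)` is a hypothetical callable that returns the
    reasoning text the model produces up to (but not including) END_THINK.
    """
    context = prompt + "<think>\n"
    for round_idx in range(extra_rounds + 1):
        context += generate_thought(context)
        if round_idx < extra_rounds:
            # Withhold the closing tag and nudge the model to keep going.
            context += "\n" + nudge + " "
    # Only now let the thinking block close.
    return context + "\n" + END_THINK + "\n"


def fake_model(context: str) -> str:
    """Stand-in for a real model call, used only to make the sketch runnable."""
    return f"thought {context.count('Wait,')}"


result = extend_thinking(fake_model, "What is 2+2?", extra_rounds=2)
print(result)
```

With a real model, the returned context would then be passed back for the final answer. More rounds mean more tokens and latency, so the number of forced rounds is a cost trade-off rather than a free improvement.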

Expect fine-tunes and modifications of R1, as well as building AI reasoning on other LLMs using the R1 training methods. To that end, Hugging Face has developed a full reproduction of the R1 pipeline, so open-source AI researchers and makers can build their own R1-like models.

Sharing a GitHub repo called “7B Model and 8K Examples: Emerging Reasoning with Reinforcement Learning is Both Effective and Efficient,” AI researchers from Hong Kong’s HKUST show an R1 replication result on a 7B model:

We replicated the DeepSeek-R1-Zero and DeepSeek-R1 training on 7B model with only 8K examples, the results are surprisingly strong.

This result indicates that RL-based fine-tuning for reasoning can be effective even at a small scale, with a modest model and little data, and therefore at low cost.
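One reason this kind of training can be cheap is that the reward signal can be computed by simple rules instead of a learned reward model. The DeepSeek-R1 report describes an accuracy reward and a format reward for R1-Zero; the sketch below illustrates that idea only. The function names, the exact-match check, and the `format_weight` value are assumptions for illustration, not the settings used by DeepSeek or the HKUST replication.

```python
import re

# Expected layout: reasoning inside <think> tags, final answer inside <answer> tags.
THINK_ANSWER = re.compile(
    r"^<think>(.+?)</think>\s*<answer>(.+?)</answer>\s*$", re.DOTALL
)


def format_reward(completion: str) -> float:
    """1.0 if the completion follows the think/answer layout, else 0.0."""
    return 1.0 if THINK_ANSWER.match(completion.strip()) else 0.0


def accuracy_reward(completion: str, reference: str) -> float:
    """1.0 if the extracted answer exactly matches the reference, else 0.0."""
    match = THINK_ANSWER.match(completion.strip())
    if match is None:
        return 0.0
    return 1.0 if match.group(2).strip() == reference.strip() else 0.0


def total_reward(completion: str, reference: str, format_weight: float = 0.5) -> float:
    """Combine both signals; the weight is a hypothetical choice."""
    return accuracy_reward(completion, reference) + format_weight * format_reward(completion)


good = "<think>2 + 2 is 4</think><answer>4</answer>"
wrong = "<think>2 + 2 is 5</think><answer>5</answer>"
untagged = "The answer is 4"

print(total_reward(good, "4"), total_reward(wrong, "4"), total_reward(untagged, "4"))
```

Exact string matching only works for tasks with a single canonical answer, such as math problems with numeric results; other domains would need a different checker.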

AI Research News

Our AI research review for this week highlighted progress in AI reasoning, in particular sharing the technical reports for the DeepSeek R1 and Kimi k1.5 AI reasoning models:

Towards Large Reasoning Models: A Survey on Scaling LLM Reasoning Capabilities

DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning

Kimi k1.5: Scaling Reinforcement Learning with LLMs

Mind Evolution: Evolving Deeper LLM Thinking

The Lessons of Developing Process Reward Models in Mathematical Reasoning

AI Business and Policy

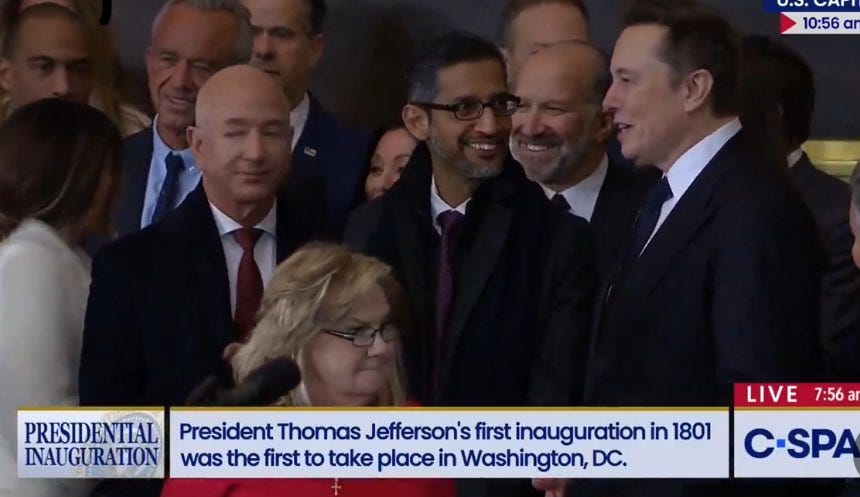

SoftBank, Oracle, and OpenAI joined President Trump at the White House to announce the Stargate Project. This ambitious joint venture will build $500B in AI infrastructure over four years to support AI workloads for OpenAI models. At the event, Masayoshi Son, Sam Altman, and Larry Ellison announced initial tech partners for the buildout, including Arm, Microsoft, Nvidia, Oracle, and OpenAI.

SoftBank and OpenAI are each committing $19 billion to Stargate, and development of a 1-million-square-foot data center facility in Texas is underway.

Despite public commitments totaling $100 billion from SoftBank, OpenAI, Oracle, and MGX, Elon Musk questioned the financial backing of the Stargate Project on X, claiming insider information that they have less than $10B secured. Sam Altman countered Musk's skepticism, continuing a long-standing public spat between the two men.

Microsoft’s CEO Satya Nadella deflected questions about Stargate funding for AI infrastructure, saying “I’m good for my $80 billion” in a CNBC interview, confirming Microsoft's planned annual $80 billion investment in AI.

Where does Stargate leave the Microsoft-OpenAI alliance? Microsoft and OpenAI adjusted their partnership terms, which extend through 2030. OpenAI is no longer limited to Azure and can now use other compute infrastructure for research and model training, while Microsoft retains the right of first refusal on new capacity.

According to a recent report shared by The Verge, Siri lacks intelligence, despite Apple promoting its AI advances and ChatGPT integration. In the report, Siri correctly identified the winners of only 20 out of 58 Super Bowls, highlighting persistent issues with its accuracy.

LG has taken a significant step in its commercial robotics division by acquiring a majority stake in Bear Robotics, an AI-powered service robot startup. The electronics giant had previously invested $60 million for a 21 percent stake last March, aiming to enhance its “CLOi” robotics business with this integration.

Meta CEO Mark Zuckerberg plans to spend up to $65 billion on AI in 2025, including a massive data center in Louisiana. Zuckerberg aims for Meta’s AI division to serve over 1 billion people in 2025 with advancements such as Llama 4 and AI engineers contributing to R&D efforts.

AI voice generation startup ElevenLabs secured $250 million in funding at a valuation over $3 billion. ElevenLabs has partnered with major companies for speech-based services and has grown its recurring revenue significantly since 2023.

Fundraise Up, a Brooklyn-based fundraising platform for nonprofits, integrates AI to tailor donation suggestions and reduce donor frustration, offering a competitive 4% transaction fee model without upfront costs. The startup recently raised $70 million to expand its services and enter new markets.

In his first executive actions, President Trump rescinded former President Biden’s AI Safety executive order that required AI model developers to share safety test results with the US government and established standards for assessing AI risks.

Companies significantly increased AI lobbying spending at the U.S. federal level in 2024 compared to 2023, reflecting regulatory uncertainty. OpenAI and Anthropic notably boosted their expenditures, supporting specific legislative acts aimed at benchmarking and researching AI systems.

AI Opinions and Articles

There has been something of a meltdown among some in the US AI community who see the DeepSeek R1 release as a win for China and a risk for US AI firms.

I see it differently. DeepSeek R1 is an important release for sure, and it is a credit to the DeepSeek AI lab. However, it is really a win for open-source and proof “there is no moat,” as a smaller AI lab creatively developed a top-tier AI model. OpenAI and Google can surely do even better. The competition will sharpen the US AI labs.

I agree with Dr Jim Fan that the real story is AI acceleration and more scaling - The AI timeline got compressed:

Many tech folks are panicking about how much DeepSeek is able to show with so little compute budget. I see it differently - with a huge smile on my face. Why are we not happy to see *improvements* in the scaling law? DeepSeek is unequivocal proof that one can produce unit intelligence gain at 10x less cost, which means we shall get 10x more powerful AI with the compute we have today and are building tomorrow. Simple math! The AI timeline just got compressed. - Dr Jim Fan
