AI Week In Review 24.07.13

Claude Artifacts Remix, Claude Workspace prompt evaluator, Paints-Undo, MobileLLM, FlashAttention-3, AI predicts Alzheimer's, OpenAI's Preparedness Framework, AI influencer wins "Miss AI" competition.

Cover pic story: The AI influencer world had a beauty pageant, and Fanvue’s first-ever 'Miss AI' competition crowned Kenza Layli, an AI-created Moroccan lifestyle influencer, as its first winner.

AI Tech and Product Releases

Samsung’s Unpacked event mainly showcased new hardware tech, but there were some AI-related developments:

Google and Samsung confirmed that their deal for Gemini AI features on Galaxy devices will bring updates (announced at I/O earlier this year) that let Gemini read and respond to what’s on your phone screen or in a video you’re watching, as well as a new Circle to Search feature.

Claude now lets users share (publish) and remix Artifacts, so programs, diagrams, and similar outputs that Claude creates as Artifacts can be passed along and built upon.

Also, Anthropic has shared that you can now fine-tune Claude 3 Haiku in Amazon Bedrock.

Anthropic has also updated its developer console to let you evaluate prompts there.

Paints-Undo is a recently released AI model set that generates human drawing behaviors from an image. It takes an image as input and then outputs the drawing sequence of that image:

The model displays all kinds of human behaviors, including but not limited to sketching, inking, coloring, shading, transforming, left-right flipping, color curve tuning, changing the visibility of layers, and even changing the overall idea during the drawing process.

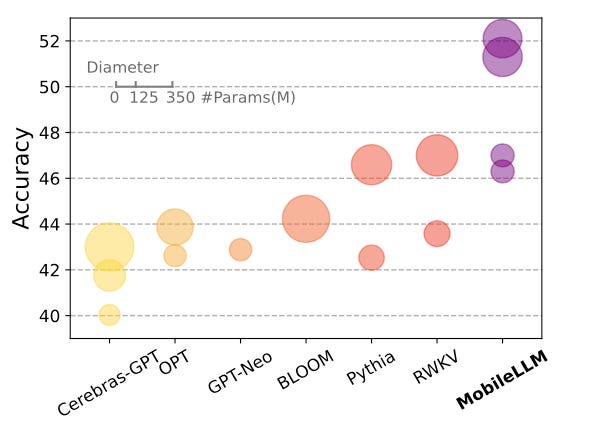

Meta AI developed and shared a compact language model for mobile devices, called MobileLLM. Meta’s research on MobileLLM led to multiple innovations and learnings, such as embedding sharing, a block-wise weight-sharing technique, and favoring AI model depth over width for performance.

Notably, the 350 million parameter version of MobileLLM demonstrated comparable accuracy to the much larger 7 billion parameter LLaMA-2 model on certain API calling tasks.

MobileLLM code is available on GitHub and the paper presenting this research will be presented at ICML.
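To see why embedding sharing matters at this scale, here is a minimal parameter-count sketch. The vocabulary size and embedding dimension below are illustrative assumptions, not figures from this article, and the function names are hypothetical.

```python
def embedding_table_params(vocab_size: int, dim: int) -> int:
    """Number of weights in one vocab_size x dim embedding table."""
    return vocab_size * dim


def total_embedding_params(vocab_size: int, dim: int, shared: bool) -> int:
    """Weights used by the input embedding plus the output projection.

    With sharing, one table serves both roles; without it, there are two.
    """
    per_table = embedding_table_params(vocab_size, dim)
    return per_table if shared else 2 * per_table


# Assumed, illustrative sizes for a small on-device model.
VOCAB_SIZE = 32_000
DIM = 512

unshared = total_embedding_params(VOCAB_SIZE, DIM, shared=False)
shared = total_embedding_params(VOCAB_SIZE, DIM, shared=True)
print(f"unshared: {unshared:,} weights, shared: {shared:,} weights")
print(f"saved by sharing: {unshared - shared:,} weights")
```

In a model with only a few hundred million parameters, a saving of this size is a meaningful slice of the budget, which is why the trick pays off more on small models than on large ones.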

Via Min Choi: “Video editing will never be the same. You can now create slow motion and bullet time using Luma AI.”

Top Tools & Hacks

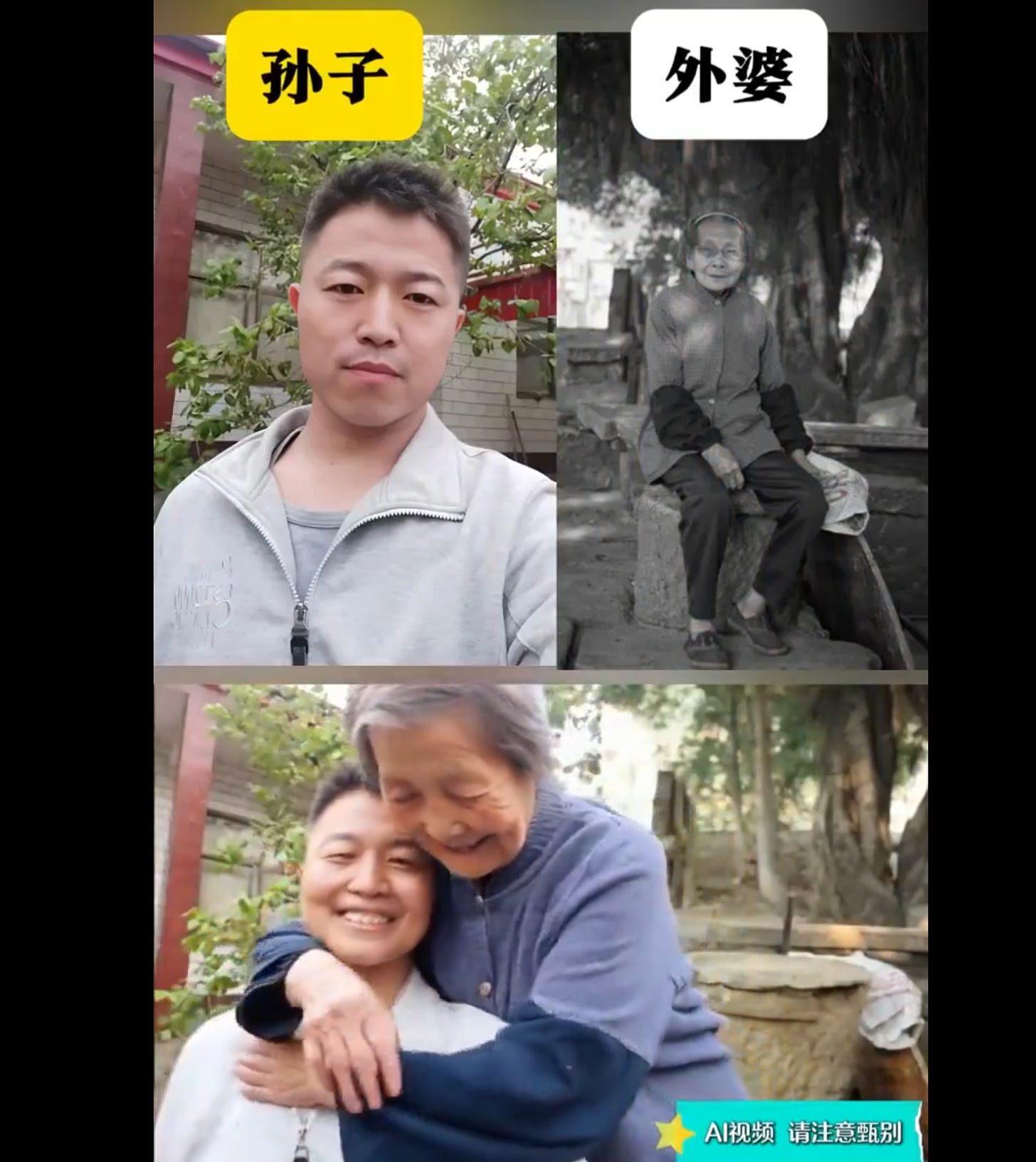

We are seeing amazing new AI video creations. One interesting example starts with two personal photos and creates a video of the two people embracing, so you can create a video of yourself with a loved one who passed on.

AI Research News

Our AI research roundup for this week covered:

NuminaMath: Winning the AI Math Olympiad with MuMath-Code

LLEMMA: An Open Language Model for Mathematics

Internet of Agents: Weaving a Web of Heterogeneous Agents for Collaborative Intelligence

AriGraph: Learning Knowledge Graph World Models with Episodic Memory for LLM Agents

PaliGemma: A versatile 3B VLM for transfer

FlashAttention has been instrumental in making LLM training on GPUs more efficient. Now FlashAttention-3: Fast and Accurate Attention with Asynchrony and Low-precision from TogetherAI explains the latest version, FlashAttention-3. It offers a 2-4x speedup over previous methods while maintaining accuracy, using asynchronous IO and low-precision computation.

I didn’t know that teeth were that different between men and women, but an AI system achieves 96% accuracy in determining sex from dental X-rays. Researchers used an ML image classification model based on

Another AI health breakthrough: Artificial intelligence outperforms clinical tests at predicting progress of Alzheimer’s disease. Researchers used cognitive tests and structural MRI scans to build a model that could assess and predict Alzheimer’s progression. It was able to correctly identify individuals who went on to develop Alzheimer’s in 82% of cases.

AI Business and Policy

OpenAI blocked access to its services from China, and now Chinese developers are scrambling. OpenAI has been driven by IP concerns.

“We are taking additional steps to block API traffic from regions where we do not support access to OpenAI’s services,” an OpenAI spokesperson told Bloomberg last month.

While this is partly motivated by Chinese AI companies abusing API access to get data to train their AI models, this may provide an opening to Chinese AI firms like SenseTime to gain market share in China, as they now have fairly competitive AI models.

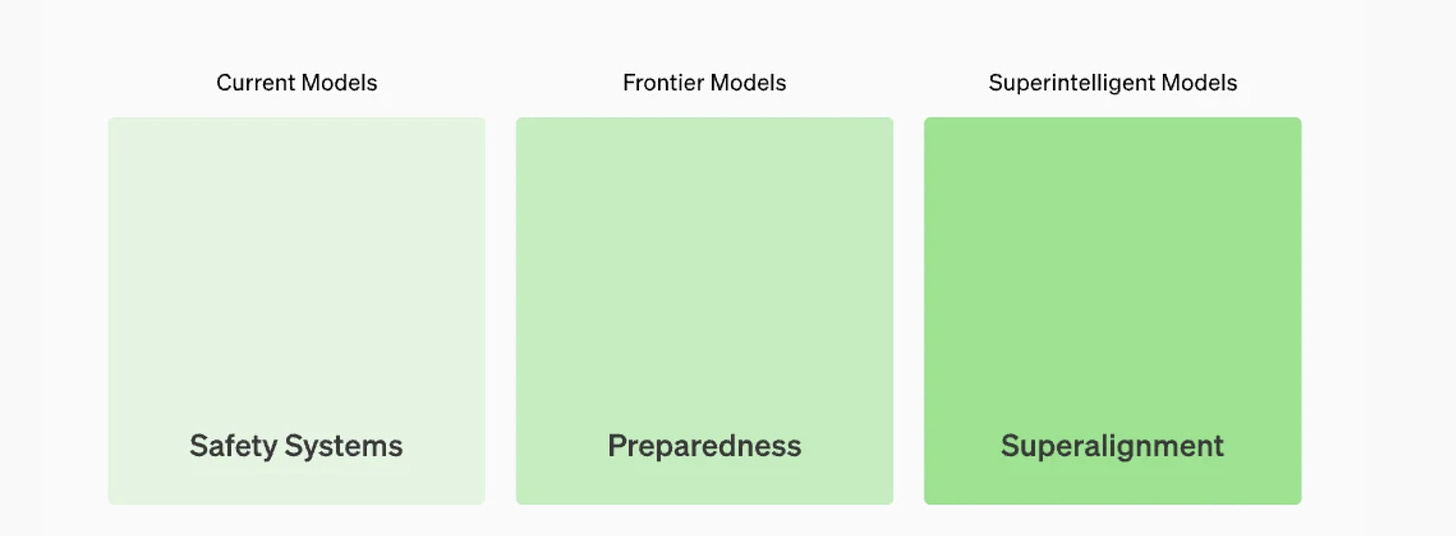

OpenAI’s Roadmap: OpenAI has introduced a new system called the AI Preparedness Framework to monitor and assess progress towards human-level AGI. The Preparedness team is part of a 3-part AI safety effort: Safety Systems for current AI models, Preparedness for Frontier Models, and Superalignment for Superintelligent models.

The Preparedness Framework (beta) is a 27-page document. It identifies risk types to assess AI risks and AI safety: Cybersecurity; CBRN (chemical, biological, radiological, nuclear); Persuasion; Unknown unknowns. It scores AI systems across the tracked risk categories, creating and updating scorecards for AI models.

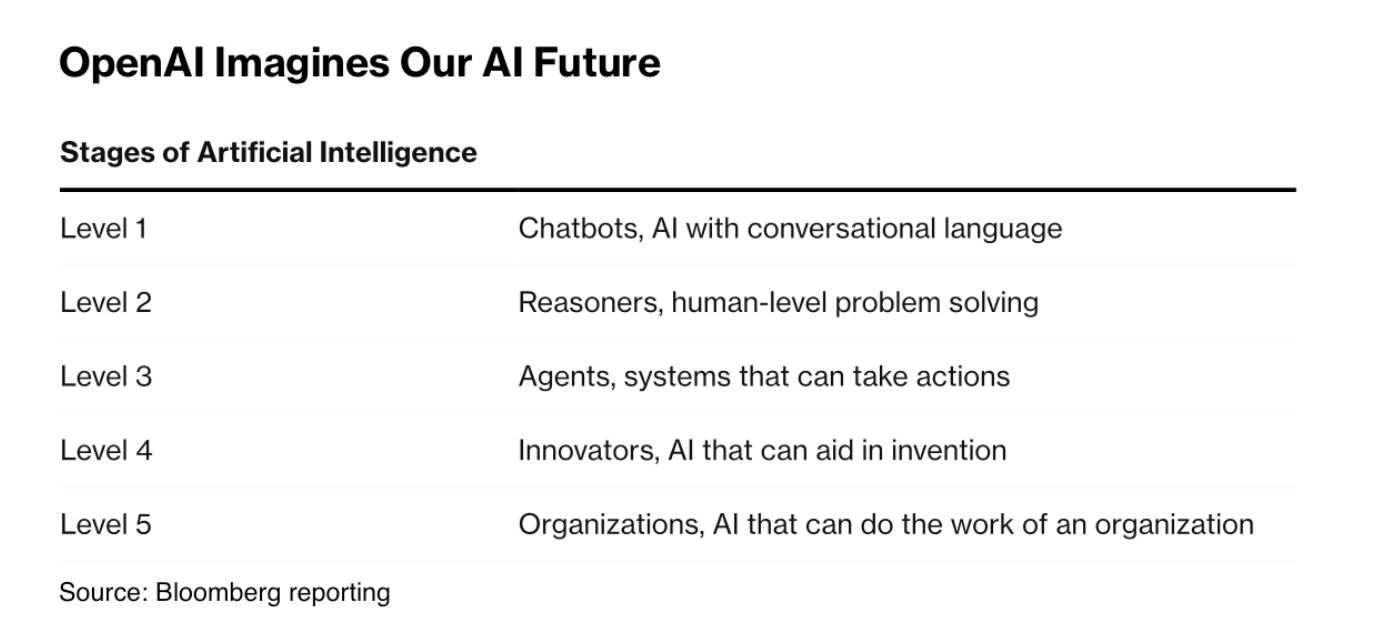

OpenAI sees AI progressing in multiple ways on multiple levels, using a 5-level scale that ranges from Level 1 (conversational AI) to Level 5 (AI capable of running entire organizations).

A report by futureresearch estimates OpenAI's revenue breakdown:

$1.9B for ChatGPT Plus (7.7M subscribers at $20/mo)

$714M from ChatGPT Enterprise (1.2M at $50/mo)

$510M from the API

$290M from ChatGPT Team ( 80k at $25/mo)

As a third-party estimate extrapolating from public comments by Sam Altman, I’d take it with a grain of salt, but what jumps out is how small AI market share still is. We have a lot of room to grow in AI usage.

In yet more OpenAI news, Microsoft, Apple Drop OpenAI Board Plans as Scrutiny Grows. Big Tech is getting shy of having a board seat on OpenAI as a way of fending off antitrust concerns.
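The subscription lines in that breakdown can be sanity-checked with simple arithmetic. This is a rough sketch that assumes a flat monthly price and a constant subscriber count over 12 months; the report's own method may differ, and the function name is just for illustration.

```python
MONTHS_PER_YEAR = 12


def annualized_revenue(subscribers: int, price_per_month: float) -> float:
    """Annual revenue in dollars, assuming a flat price and steady subscribers."""
    return subscribers * price_per_month * MONTHS_PER_YEAR


# (subscribers, $/month, quoted annual revenue), copied from the list above.
tiers = {
    "ChatGPT Plus": (7_700_000, 20, 1_900_000_000),
    "ChatGPT Enterprise": (1_200_000, 50, 714_000_000),
    "ChatGPT Team": (80_000, 25, 290_000_000),
}

for name, (subs, price, quoted) in tiers.items():
    implied = annualized_revenue(subs, price)
    print(f"{name}: implied ${implied / 1e6:,.0f}M vs quoted ${quoted / 1e6:,.0f}M")
```

Plus and Enterprise land within a few percent of the quoted figures. The Team line as printed (80k at $25/mo) annualizes to only about $24M, far from the $290M quoted, so treat that subscriber count with extra caution.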

TechCrunch has a full list of 28 US AI startups that have raised $100M or more in 2024. Notable recent funding raises:

Hebbia, which uses generative AI to search large documents, raised $130 million in a round led by Andreessen Horowitz, at a $700 million valuation.

Pittsburgh-based Skild AI announced a $300 million Series A round on July 9 that valued the company at $1.5 billion.

Senators Introduce COPIED Act to Combat AI Content Misuse. The Content Origin Protection and Integrity from Edited and Deepfaked Media (COPIED) Act aims to combat unauthorized use of content by AI models by creating standards for content authentication and detection of AI-generated material. The bill would make removing digital watermarks illegal, allow content owners to sue companies using their work without permission, and require NIST to develop standards for content origin proof and synthetic content detection, while prohibiting the use of protected content to train AI models.

AI Opinions and Articles

Andrew Ng sounds alarm over California’s proposed SB 1047 AI regulations, with a very detailed breakdown of why the bill would cripple AI innovation:

I continue to be alarmed at the progress of proposed California regulation SB 1047 and the attack it represents on open source and more broadly on AI innovation. As I wrote previously, this proposed law makes a fundamental mistake of regulating AI technology instead of AI applications, and thus would fail to make AI meaningfully safer. …

It puts in place complex reporting requirements for developers who fine-tune models or develop models that cost more than $100 million to train. It is a vague, ambiguous law that imposes significant penalties for violations, creating a huge gray zone in which developers can’t be sure how to avoid breaking the law. This will paralyze many teams.

The FTC assesses open-weights AI models, giving them an overall positive report:

In summary, open-weights models have the potential to drive innovation, reduce costs, increase consumer choice, and generally benefit the public – as has been seen with open-source software. Those potential upsides, however, are not all guaranteed, and open-weights models present new challenges.

Sam Altman and Arianna Huffington say AI-Driven Behavior Change Could Transform Health Care.

A Look Back …

Andrej Karpathy notes a great sign of AI progress: the falling cost of training. Five years after OpenAI released GPT-2, you can train your own GPT-2 for ~$672, running on one 8xH100 GPU node for 24 hours:

…costs have come down dramatically over the last 5 years due to improvements in compute hardware (H100 GPUs), software (CUDA, cuBLAS, cuDNN, FlashAttention) and data quality (e.g. the FineWeb-Edu dataset).
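For a sense of scale, the ~$672 figure can be turned into an implied hourly rate. This is back-of-the-envelope arithmetic from the numbers quoted above, assuming the whole bill is GPU rental; the function name is just for illustration.

```python
def implied_gpu_hour_rate(total_cost: float, gpus: int, hours: float) -> float:
    """Dollars per GPU-hour implied by a total bill, GPU count, and run time."""
    gpu_hours = gpus * hours
    return total_cost / gpu_hours


# Figures quoted above: ~$672 for one 8xH100 node running 24 hours.
rate = implied_gpu_hour_rate(total_cost=672, gpus=8, hours=24)
print(f"${rate:.2f} per H100 GPU-hour")  # prints "$3.50 per H100 GPU-hour"
```

That is 192 GPU-hours in total, so the run costs about as much as renting each H100 for $3.50 an hour.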

This week, OpenAI said that GPT-4 model cost has come down 85-90% since its launch and “there’s no reason why that trend will not continue.” AI will keep getting dramatically cheaper to train and cheaper to use.