AI Week In Review 24.08.03

Black Forest Labs' FLUX.1, Midjourney 6.1, Runway Gen-3 image-to-video, Gemma 2 2B, Gemma Scope, ShieldGemma, Gemini 1.5 Pro 0801, Stable Fast 3D, GitHub Models, GPT-4o voice mode beta, Arcee DistillKit

AI Tech and Product Releases

Black Forest Labs announced its launch and released FLUX.1, a suite of AI image generation models. The Black Forest Labs team includes AI developers behind Stable Diffusion, and the company raised $31 million in VC funding for its launch. Black Forest Labs also previewed its next milestone: “SOTA Text-to-Video for All.”

FLUX.1 is a 12B-parameter diffusion model that comes in three variants: FLUX.1 pro, their highest-quality closed version; FLUX.1 dev, an open-weights model with good quality that you can try on Fal.ai or download; and FLUX.1 schnell, their open and fastest variant.

FLUX is fast, delivers image quality and aesthetics on par with Midjourney, and shows excellent text adherence and complex prompt understanding. See examples here.

Not standing still, Midjourney released V6.1 of their AI image generation model, a faster, smarter version with greater realism and coherence. Now humans look more real than ever.

Runway has added image-to-video to its Gen-3 Alpha model. Posters on X have shared selfie-to-video clips with mind-blowing backgrounds, as well as excellent generations combining Midjourney 6.1 and Runway Gen-3. Gen-3's 5-second image-to-video clips are far more photo-realistic than earlier text-to-video generations. Nearly Hollywood-level.

OpenAI has finally started beta-testing GPT-4o voice mode with select users, and it’s blowing minds. Cris Giardina and others on X have shared wild examples of GPT-4o voice interaction. One that went viral was “ChatGPT Advanced Voice Mode counting as fast as it can to 10, then to 50 (this blew my mind - it stopped to catch its breath like a human would).”

OpenAI launched an experimental GPT-4o Long Output model, which supports up to 64,000 output tokens instead of the original 4,000.

Google updated Gemini 1.5 Pro with a 0801 release, and it’s now the top-performing AI model on the LMSYS Chatbot Arena leaderboard, surpassing GPT-4o and Claude 3.5 Sonnet. The model excels at multilingual tasks and performs well on math, coding, and long-context prompts.

Google has released Gemma 2 2B, an open model with 2.6B parameters. It is small but high-performing: its Chatbot Arena Elo score beats much larger models like Llama 2 70B and GPT-3.5. Its small size makes it a great model for edge devices; it can run locally on multiple platforms, including iOS, Android, and web browsers, using the MLC-LLM framework.

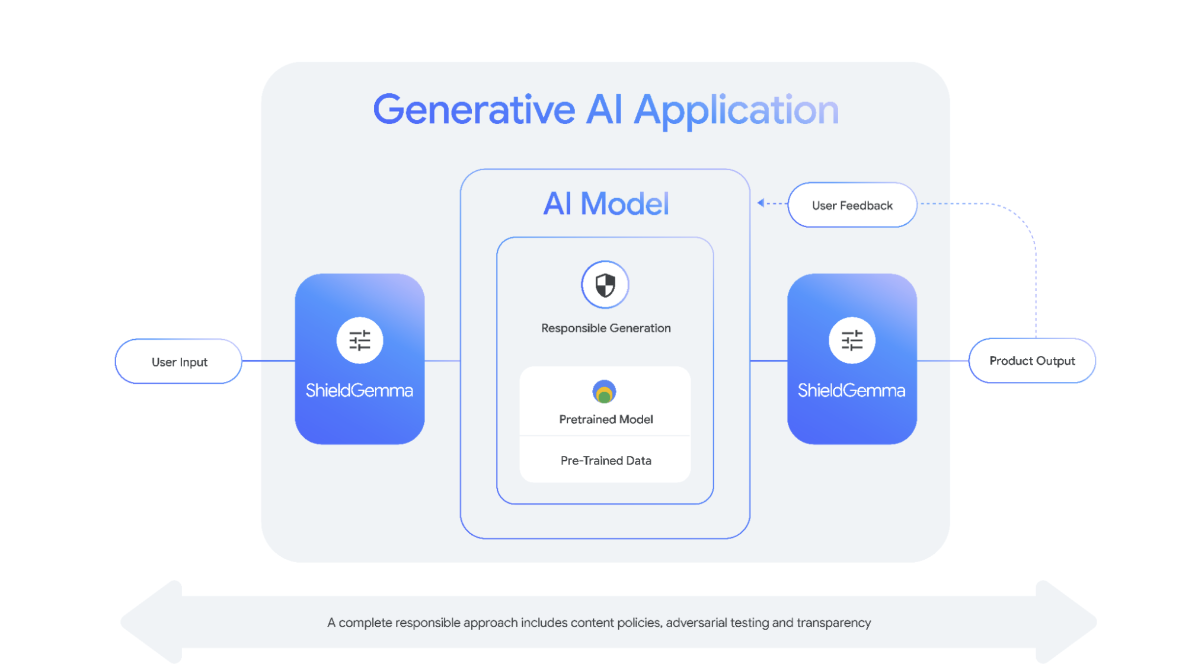

They also released ShieldGemma, a set of safety content classifier models based on Gemma 2 that filter AI model inputs and outputs to prevent harmful content generation (explicit or dangerous content, hate speech, etc.).

Lastly, Google released Gemma Scope, an open model interpretability tool that uses sparse autoencoders (SAEs) to gain insight into how Gemma 2 models work internally. SAEs decompose a model’s internal activations into a larger set of sparsely active features, which should help enormously in understanding how LLMs work at the level of individual internal representations.
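To make the SAE idea concrete, here is a minimal, illustrative sketch of a sparse autoencoder in plain Python. It assumes a simple ReLU encoder trained with a reconstruction loss plus an L1 sparsity penalty. Gemma Scope's released SAEs reportedly use a JumpReLU variant, so treat this as the generic technique rather than Google's implementation. The class name, dimensions, and `l1_coeff` value are hypothetical.

```python
import random


def relu(x):
    return x if x > 0.0 else 0.0


class SparseAutoencoder:
    """Toy SAE (hypothetical, for illustration): maps a d_model activation
    vector to d_sae (> d_model) non-negative features and back."""

    def __init__(self, d_model, d_sae, seed=0):
        rng = random.Random(seed)
        # Encoder weights: d_model x d_sae; decoder weights: d_sae x d_model.
        self.W_enc = [[rng.gauss(0, 0.1) for _ in range(d_sae)] for _ in range(d_model)]
        self.b_enc = [0.0] * d_sae
        self.W_dec = [[rng.gauss(0, 0.1) for _ in range(d_model)] for _ in range(d_sae)]
        self.b_dec = [0.0] * d_model

    def encode(self, x):
        # f = ReLU((x - b_dec) @ W_enc + b_enc): one non-negative value per feature.
        centered = [xi - bi for xi, bi in zip(x, self.b_dec)]
        return [
            relu(sum(centered[i] * self.W_enc[i][j] for i in range(len(x))) + self.b_enc[j])
            for j in range(len(self.b_enc))
        ]

    def decode(self, f):
        # x_hat = f @ W_dec + b_dec: reconstruct the original activation vector.
        return [
            sum(f[j] * self.W_dec[j][k] for j in range(len(f))) + self.b_dec[k]
            for k in range(len(self.b_dec))
        ]

    def loss(self, x, l1_coeff=1e-3):
        # Training objective: mean squared reconstruction error + L1 penalty
        # that pushes most feature activations toward zero (sparsity).
        f = self.encode(x)
        x_hat = self.decode(f)
        mse = sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)
        sparsity = sum(abs(v) for v in f)
        return mse + l1_coeff * sparsity, f


sae = SparseAutoencoder(d_model=4, d_sae=16, seed=0)
features = sae.encode([0.5, -1.0, 2.0, 0.3])
print(sum(1 for v in features if v > 0), "of", len(features), "features active")
```

Interpretability work then studies which inputs make each feature fire. A real SAE would be trained on activations captured from a specific layer of the model, whereas this toy version only shows the forward pass and the loss.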

Apple released a beta of iOS 18.1 with Apple Intelligence. However, this is a limited set of features, and Apple is reportedly delaying the full release of Apple Intelligence from September to October. Apple Intelligence features will slowly roll out over the coming months.

Stability released Stable Fast 3D, a model that generates high-quality 3D assets from a single image in just 0.5 seconds:

Stable Fast 3D's unprecedented speed and quality make it an invaluable tool for rapid prototyping in 3D work, catering to both enterprises and indie developers in gaming and virtual reality, as well as retail, architecture and design.

Microsoft has launched GitHub Models, promising to bring “industry leading large and small language models” directly to GitHub users by letting them easily select, try out, and integrate AI models into their code. Developers can test models in a built-in playground, then bring them into Codespaces and VS Code for development.

GitHub Models is in limited public beta. This will be a challenge to Hugging Face in the AI model marketplace.

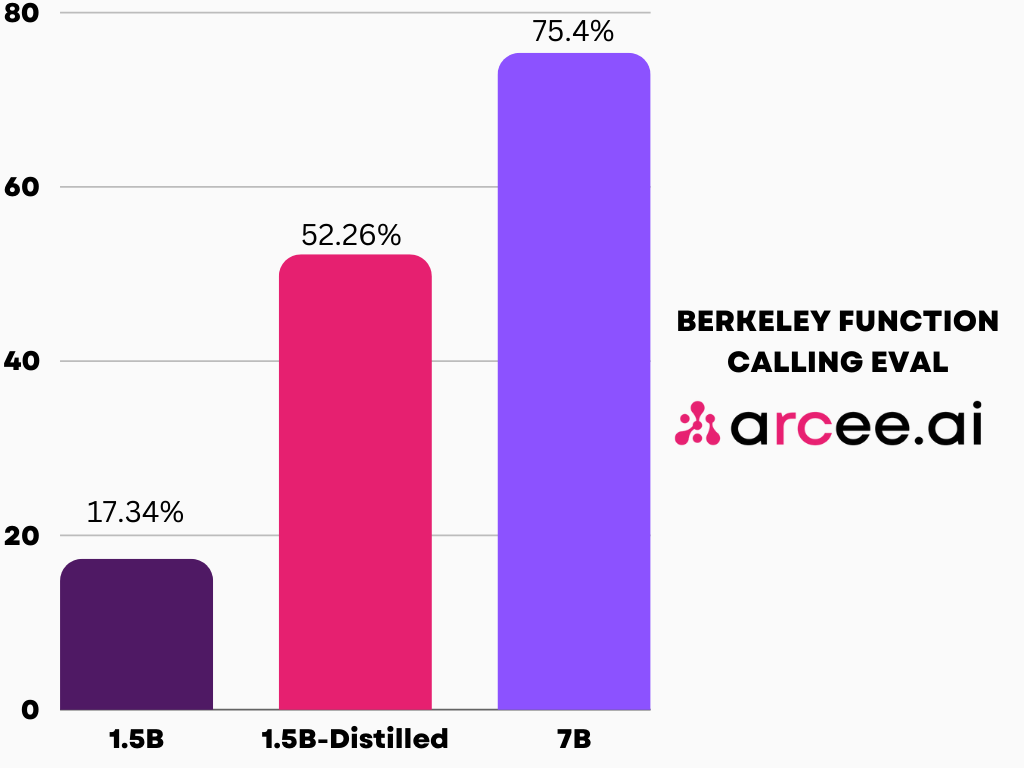

Arcee AI announced DistillKit, an open-source toolkit that makes it easy to distill LLMs into compact, powerful AI models. It combines Supervised Fine-Tuning (SFT) with distillation to transfer the capabilities of larger LLMs into smaller models, and it will be very useful to AI developers fine-tuning AI models.
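For readers new to distillation, the sketch below shows the classic logit-distillation objective (Hinton et al., 2015) applied to one next-token prediction. The small "student" is trained to match the temperature-softened output distribution of a large "teacher" while also fitting the ground-truth token, as in ordinary SFT. This is an assumption-labeled illustration of the general technique, not DistillKit's code or API. The function names, `temperature`, and `alpha` are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    # Numerically stable softmax; higher temperature gives a softer distribution.
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    # KL(p || q) = sum_i p_i * log(p_i / q_i); terms with p_i == 0 contribute 0.
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(student_logits, teacher_logits, target_index,
                      temperature=2.0, alpha=0.5):
    """Hypothetical helper: blend of a soft-target distillation term and a
    hard-target SFT (cross-entropy) term for one next-token prediction."""
    # Soft-target term: match the teacher's softened distribution. The T^2
    # factor keeps gradient scale comparable across temperatures.
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    soft = kl_divergence(p_teacher, p_student) * temperature ** 2
    # Hard-target term: standard cross-entropy on the ground-truth token.
    hard = -math.log(softmax(student_logits)[target_index])
    return alpha * soft + (1 - alpha) * hard


teacher = [2.0, 1.0, 0.1]  # teacher logits over a toy 3-token vocabulary
student = [0.1, 1.0, 2.0]  # untrained student that disagrees with the teacher
print(distillation_loss(student, teacher, target_index=0))
```

The soft term is where distillation adds value over plain SFT: the teacher's full distribution tells the student how plausible the non-target tokens are, which carries more signal than a single correct label.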

Meta could show off AI voices from celebrities like Judi Dench as soon as next month.

AI Research News

Meta’s SAM 2, which segments anything in images and video, was the highest-profile item among this week’s AI research highlights. The full list:

SAM 2: Segment Anything in Images and Videos

AFM: Apple Intelligence Foundation Language Models

Meltemi: The first open Large Language Model for Greek

SeaLLMs 3: Open Foundation and Chat Multilingual Large Language Models for Southeast Asian Languages

SaulLM-54B & SaulLM-141B: Scaling Up Domain Adaptation for the Legal Domain

AI Business and Policy

“Over the past two years, however, the landscape has shifted; many more pre-trained models are now available. Given these changes, we see an advantage in making greater use of third-party LLMs alongside our own. This allows us to devote even more resources to post-training and creating new product experiences for our growing user base.” - Character.AI

Character.AI CEO Noam Shazeer is returning to Google as part of a move by Character.AI to refocus its team and mission. The company described this as its “next phase of growth” in a blog post. Noam and other researchers will join Google, while Character.AI’s team will continue to build out the Character.AI product by customizing existing foundation AI models rather than building them from scratch.

More hints of Meta’s plans for Llama 4. On the latest Meta earnings call, Zuckerberg says Meta will need 10x more computing power to train Llama 4 than Llama 3, and future models will grow beyond that. He also said:

“I’d rather risk building capacity before it is needed rather than too late, given the long lead times for spinning up new inference projects.”

AI music startup Suno has replied to copyright lawsuits, claiming that training its model on copyrighted music is ‘fair use.’ They aren’t wrong. Training itself is not the problem, although generations that come too close to plagiarism might be.

Canva acquired AI image generation firm Leonardo.ai to boost its generative AI efforts.

Airtable acquired Dopt to enhance its AI-powered no-code solutions. Dopt sells an AI-powered onboarding platform; Airtable will bring Dopt’s expertise into its own AI-driven, no-code user activation and product growth offerings.

AI startups ramp up federal lobbying efforts. This report cites increases in the lobbying efforts by OpenAI, Anthropic and others, and mentions:

… the number of groups lobbying the federal government on issues related to AI grew from 459 in 2023 to 556 in the first half of 2024 (from January to July).

AI Opinions and Articles

The future is here, but it is not evenly distributed. - Latent Space

Latent Space notes a ‘vibe shift’ in a piece titled The Winds of AI Winter. They point to a number of missteps (Microsoft Recall recalled), bad product launches (Rabbit R1, Humane pin), and companies selling out or imploding (Stability AI, Adept to Amazon, Inflection to Microsoft):

In aggregate, they point to a fundamentally unhealthy industry dynamic that is at best dishonest, and at worst teetering on the brink of the next AI Winter.

They go on to cite reports from Goldman Sachs and Sequoia Capital fretting about the ROI on AI spending. AI needs to generate several hundred billion dollars in revenue to justify that spending.

We have gone from the “shock and awe” of marveling at new AI technology to asking “show me the money” questions: What can AI actually do, and how much will people pay for AI to do it?

Gary Marcus says the collapse of the Generative AI bubble may be imminent. Perhaps Nvidia losing almost $1 trillion in market cap over the past few weeks, after more than tripling in value over the prior year, is our sign of a “vibe shift.”

Gary’s point is that AI needs to stop hallucinating and get useful, and he doesn’t see LLMs as being up to the task. Hence, AI hype is headed for a fall.

“To be sure, Generative AI itself won’t disappear. But investors may well stop forking out money at the rates they have, enthusiasm may diminish, and a lot of people may lose their shirts. Companies that are currently valued at billions of dollars may fold, or be stripped for parts. Few of last year’s darlings will ever meet recent expectations, where estimated values have often been a couple hundred times current earnings. Things may look radically different by the end of 2024 from how they looked just a few months ago.” - Gary Marcus

I fully expect many bumps on the road to AGI, but I think we are entering more of a vibe ‘cold front’ than an AI winter. There is churn when expectations don’t meet results, but AI apps done right can and will meet high demands and then some. AI has consistently moved faster than most expect, and AI’s exponential ride is far from over.