AI Week in Review 25.03.22

Nvidia GTC: Nemotron, Isaac GR00T N1, Cosmos, Newton. OpenAI FM, TTS, & transcribe, Baidu Ernie 4.5 & Ernie X1, Mistral Small 3.1, Claude web search, EXAONE Deep 32B, Orpheus 3B TTS, Google Canvas.

Top Tools

Nvidia’s GTC was the biggest event of the week, underscoring Nvidia’s role in “scaling up the AI revolution,” as we stated in our deeper dive on the GTC ’25 event. Key Nvidia AI announcements at GTC:

Jensen Huang’s keynote highlighted new GPU and AI system innovations and releases, including Nvidia’s GPU and AI supercomputer roadmap: Blackwell, Blackwell Ultra, Vera Rubin, Rubin Ultra, and Feynman.

Nvidia released Llama Nemotron reasoning models for AI agents. The open-source Llama Nemotron model suite includes Nemotron Nano (8B), Super (49B), and an Ultra (253B) version planned for future release. These models are distilled and pruned from Meta Llama models and fine-tuned for hybrid reasoning, which can be toggled on or off via the system prompt.
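As a rough illustration of the system-prompt toggle, here is a minimal sketch that builds a chat-style message list with reasoning switched on or off. The control strings ("detailed thinking on" and "detailed thinking off") and the helper name `build_messages` are assumptions for illustration; check the model card for the exact wording before relying on them.

```python
def build_messages(user_prompt: str, reasoning: bool) -> list[dict]:
    """Build a chat message list whose system prompt toggles reasoning."""
    # Assumption: these control strings follow the Llama Nemotron model cards;
    # verify them against the documentation for the checkpoint you deploy.
    mode = "on" if reasoning else "off"
    return [
        {"role": "system", "content": f"detailed thinking {mode}"},
        {"role": "user", "content": user_prompt},
    ]


if __name__ == "__main__":
    # Show the system prompt produced for each setting.
    for flag in (True, False):
        print(build_messages("What is 17 * 24?", reasoning=flag)[0]["content"])
```

The same user prompt can then be sent with either message list to any chat-completions style endpoint that serves the model.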

Nvidia open-sourced the Isaac GR00T N1 foundation model for humanoid robot development.

Nvidia released the Cosmos platform for physical AI development, including Cosmos Predict for intelligent world generation, Cosmos Reason for multimodal spatial reasoning, and Cosmos-Transfer1 for realistic robot training simulations. These models support realistic simulation environments for training robots and autonomous vehicles.

Nvidia announced a collaboration with Disney and Google DeepMind to develop Newton, a physics engine for robotic movements.

Nvidia announced DGX Spark and DGX Station AI personal supercomputers.

Nvidia announced the RTX Pro Blackwell series of GPUs for professionals, including the RTX Pro 6000 workstation GPU, putting the latest Blackwell GPUs into workstations, servers, and laptops.

Nvidia launched the Accelerated Quantum Research Center (NVAQC) to advance quantum computing.

AI Tech and Product Releases

OpenAI has upgraded its transcription and voice-generating AI models, releasing a suite of advanced audio models for developers. Their next-generation text-to-speech model, gpt-4o-mini-tts, delivers more nuanced speech and can be instructed in natural language to speak in distinctive styles or tones, such as sympathetic voices or expressive storytellers.
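To make the "instructed in natural language" idea concrete, here is a minimal sketch of a request body for a text-to-speech call. It only builds the payload and makes no network request. The field names (`model`, `voice`, `input`, `instructions`) and the voice name `coral` are assumptions based on OpenAI's speech API as documented at the time of writing, and `build_tts_request` is a hypothetical helper.

```python
import json


def build_tts_request(text: str, style: str, voice: str = "coral") -> dict:
    """Assemble a speech-generation request body (no network call is made)."""
    # Assumption: the speech endpoint accepts a free-text `instructions` field
    # that steers tone and delivery, separate from the text to be spoken.
    return {
        "model": "gpt-4o-mini-tts",
        "voice": voice,
        "input": text,
        "instructions": style,
    }


if __name__ == "__main__":
    payload = build_tts_request(
        text="Your package has been delayed by one day.",
        style="Speak in a calm, sympathetic customer-support voice.",
    )
    print(json.dumps(payload, indent=2))
```

Keeping the spoken text and the style instruction in separate fields means the same sentence can be re-rendered as a storyteller, a news reader, or a support agent by changing only `style`.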

Their new speech-to-text models, gpt-4o-transcribe and gpt-4o-mini-transcribe, set new standards for accuracy in noisy environments and with diverse accents, outperforming the existing Whisper models. These models are available through OpenAI’s API and Agents SDK, with a new testing platform, OpenAI FM, launched to encourage creative uses.

Baidu launched two new versions of its AI model Ernie: Ernie 4.5, a multimodal model, and Ernie X1, an AI reasoning model. Ernie 4.5 is a native multimodal foundation model capable of processing text, images, audio, and video, which Baidu claims outperforms GPT-4.5 on multiple benchmarks. Ernie X1 is a deep-thinking reasoning model that Baidu says performs on par with DeepSeek R1.

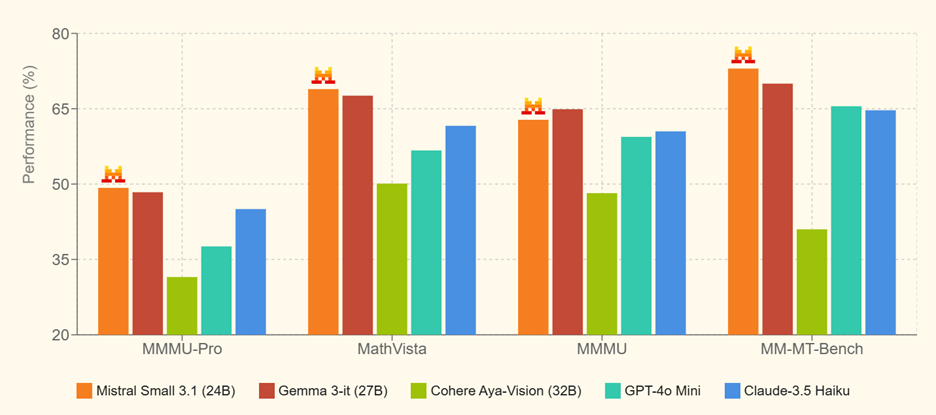

Mistral AI released Mistral Small 3.1, an open-source (Apache 2.0 license) 24B-parameter multimodal model with a 128k-token context window. It is competitive with Gemma 3 and GPT-4o Mini on major benchmarks, while delivering faster inference at 150 tokens per second. Mistral Small 3.1 is strong on vision tasks and function calling for AI agent uses, and it supports fine-tuning for specialized domains.

Anthropic announced web search for Claude, enabling Claude (specifically, Claude 3.7 Sonnet) to access real-time information from the web and return direct citations in responses. This update transforms Claude into a more comprehensive digital assistant and addresses a key competitive gap with ChatGPT and other AI models. It’s available now for paid Claude users in the U.S., with plans for broader rollout to free users and international markets soon.

LG’s AI research team released EXAONE Deep 32B, an AI reasoning model that delivers SOTA reasoning capabilities for its size, besting DeepSeek R1 on some benchmarks despite having about 5% as many parameters. It’s an open model for research purposes, with technical details for EXAONE Deep shared on LG’s AI research blog and the model weights for the EXAONE Deep 32B, 7.8B, and 2.4B AI reasoning models shared on Hugging Face.

Canopy Labs has introduced Orpheus, a family of high-quality, natural-sounding speech language models that are open source and available in several sizes: Orpheus 3B, 1B, 500M, and 150M. The models support zero-shot voice cloning and mark a significant advancement in open-source voice technology.

Gmail’s search will now take your most-clicked emails and frequent contacts into account to provide better results. The new AI-powered upgrade will allow users to toggle between chronological keyword results and the new "most relevant" option.

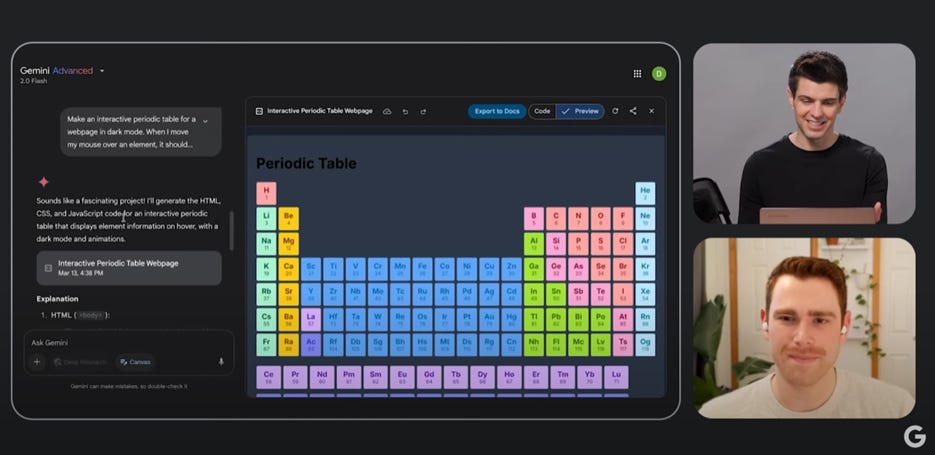

Google has introduced Canvas within Gemini, a feature that allows users to build and visually preview applications and other outputs from an AI model. Similar to Canvas in ChatGPT and Artifacts in Claude, this enhances Gemini’s interface for application development and other creative tasks.

Adobe introduced Project Slide Wow, an AI tool for generating PowerPoint presentations from raw data. The tool integrates with Adobe Customer Journey Analytics (CJA) to automatically generate slides with data visualizations and speaker notes.

StepFun has launched TI2V, an open-source image-to-video model that generates high-quality videos from static images and text prompts. TI2V offers strong motion control and is particularly effective at producing anime-style video content. It is available via Hugging Face and GitHub.

Tencent has updated its Hunyuan 3D model to version 2.0 MV (MultiView) and introduced a Turbo variant that achieves near real-time 3D generation. Hunyuan3D-2mv is fine-tuned from Hunyuan3D-2 to support multi-view-controlled shape generation. Tencent says the updated model offers state-of-the-art performance in geometry, texture, and alignment, significantly enhancing the quality of 3D outputs. The models are available on Hugging Face.

AI Research News

ByteDance has released DAPO, a new reinforcement learning method that outperforms the previous GRPO algorithm (which was used to train the DeepSeek R1 reasoning model). Presented in “DAPO: An Open-Source LLM Reinforcement Learning System at Scale,” DAPO scores 50 points on AIME 2024 with the Qwen2.5-32B base model, surpassing the previous DeepSeek-R1-Zero-Qwen-32B result of 47 points while using 50% of the training steps.

SEARCH-R1 trains LLMs to generate search queries and integrate search engine retrieval into their reasoning. The technique trains LLMs through pure reinforcement learning, and it enables LLMs to interact with search engines during their reasoning process, enhancing accuracy and reliability.
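The interleaving of reasoning and retrieval described above can be sketched as a simple rollout loop. This is an illustrative sketch only, not the paper's implementation: it covers the inference-time loop and omits the reinforcement learning itself. The tag names (`<search>`, `<information>`, `<answer>`), the `rollout` function, and the stub model and search engine are all assumptions made for the example.

```python
import re
from typing import Callable, Optional

SEARCH_TAG = re.compile(r"<search>(.*?)</search>", re.DOTALL)
ANSWER_TAG = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)


def rollout(
    question: str,
    generate: Callable[[str], str],
    search: Callable[[str], str],
    max_turns: int = 4,
) -> Optional[str]:
    """Alternate between model generation and retrieval until an answer appears."""
    context = question
    for _ in range(max_turns):
        step = generate(context)  # model continues from the current context
        context += step
        answer = ANSWER_TAG.search(step)
        if answer:
            return answer.group(1).strip()
        query = SEARCH_TAG.search(step)
        if query:
            # Retrieved text is appended so the next generation can use it.
            docs = search(query.group(1).strip())
            context += f"<information>{docs}</information>"
    return None  # no answer within the turn budget


# Hypothetical stand-ins for a trained LLM and a real search engine.
def toy_generate(context: str) -> str:
    if "<information>" not in context:
        return "<think>I should look this up.</think><search>capital of France</search>"
    return "<think>The passage says Paris.</think><answer>Paris</answer>"


def toy_search(query: str) -> str:
    corpus = {"capital of France": "Paris is the capital of France."}
    return corpus.get(query, "no results")


if __name__ == "__main__":
    print(rollout("What is the capital of France?", toy_generate, toy_search))
```

In a training setup, a reward computed from the final answer would be used to update the model so that it learns when and what to search.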

AI builders are using Minecraft to assess generative AI models. For example, a collaborative website called MC-Bench (Minecraft Benchmark) pits AI models against each other in Minecraft creation challenges, with users voting on the best result. With AIRIS, Minecraft is being used to evaluate AI agents' ability to learn and interact in a complex, open-world environment.

AI Business and Policy

AI-powered search startup Perplexity is reportedly in early talks to raise up to $1 billion, potentially valuing the company at $18 billion, which would double its valuation since December. Perplexity's annual recurring revenue has reportedly reached $100 million.

OpenAI has been accused of violating its data responsibilities under GDPR. A Norwegian man, Arve Hjalmar Holmen, found that ChatGPT falsely stated he had committed murder and attempted murder. Austrian advocacy group Noyb filed a privacy complaint on his behalf, citing GDPR violations and demanding fines, removal of the defamatory output, and model improvements.

With Apple's AI-enhanced Siri update facing significant delays, Apple is shaking up Siri leadership. Tim Cook is reportedly moving Siri out from under AI head John Giannandrea and handing it to Mike Rockwell, the head of Vision Pro development, due to a lack of confidence in the AI team.

Adding insult to injury for the brand, Apple is facing a class-action lawsuit alleging false advertising of its Apple Intelligence features. Plaintiffs claim that owners of Apple Intelligence-capable iPhones haven't received the promised features.

Microsoft is researching how to estimate the influence of specific training examples on generative AI model outputs. The project aims to demonstrate that models can be trained to efficiently estimate the impact of particular data like photos and books.

LexisNexis' Protégé leverages small language models (SLMs) to enhance legal workflows, distilling and fine-tuning smaller LLMs for speed and precision in legal use cases. The LexisNexis legal AI assistant uses a multi-model approach, assigning the best model for each specific task.

Hugging Face tells the Trump administration that open-source AI is America’s competitive advantage. The company argues that open-source models match or exceed the capabilities of closed commercial systems at a lower cost, and proposes democratizing AI through open AI ecosystems, efficient models, and transparent security.

AI Opinions and Articles

The terminology around AI is a mess, so it’s no surprise that the term “AI agent” is all over the place. TechCrunch says, “No one knows what the hell an AI agent is.”

Much like other AI-related jargon (e.g., “multimodal,” “AGI,” and “AI” itself), the terms “agent” and “agentic” are becoming diluted to the point of meaninglessness.

… “I think that our industry overuses the term ‘agent’ to the point where it is almost nonsensical,” Salva told TechCrunch in an interview. “[It is] one of my pet peeves.”

Every AI vendor is writing its own definition to suit its own marketing. I’ve noted the problem, even while defining AI agents myself:

AI agents are autonomous AI systems designed to perceive their environment, make decisions, and take actions to complete specific tasks.

This doesn’t define an AI agent’s level of capabilities; an AI agent can be simple (limited chatbot automations) or complex (a robot navigating through an obstacle course). However, all AI agents exhibit autonomous decision-making based on environmental feedback.
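To ground the definition, here is a minimal sketch of the perceive, decide, act loop using a toy thermostat as the simplest possible agent. Everything in it (the `Room` environment, the `ThermostatAgent` class, the heating and cooling rates) is a hypothetical example chosen for illustration, not a reference design.

```python
from dataclasses import dataclass


@dataclass
class Room:
    """Toy environment: temperature drifts up when heated, down otherwise."""
    temperature: float

    def step(self, heater_on: bool) -> None:
        self.temperature += 0.5 if heater_on else -0.3


class ThermostatAgent:
    """A very simple agent: perceive a reading, decide, then act on the room."""

    def __init__(self, target: float) -> None:
        self.target = target

    def perceive(self, room: Room) -> float:
        return room.temperature

    def decide(self, temperature: float) -> bool:
        # Heat only while the room is below the target temperature.
        return temperature < self.target

    def act(self, room: Room, heater_on: bool) -> None:
        room.step(heater_on)


def run(agent: ThermostatAgent, room: Room, steps: int) -> list[bool]:
    """Run the perceive-decide-act loop and record each decision."""
    history = []
    for _ in range(steps):
        reading = agent.perceive(room)
        heater_on = agent.decide(reading)
        agent.act(room, heater_on)
        history.append(heater_on)
    return history


if __name__ == "__main__":
    print(run(ThermostatAgent(target=19.0), Room(temperature=18.0), steps=3))
```

A more capable agent keeps the same loop but swaps the fixed rule in `decide` for a model call and the single actuator in `act` for a set of tools.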