Nvidia GTC '25: Scaling Up the AI Revolution

Nvidia affirmed its Blackwell and Rubin GPU and AI system roadmaps, announced silicon photonics innovations and the DGX Spark and DGX Station AI PCs, and introduced the GR00T N1 foundation model, the Newton physics engine, and Cosmos world models for robotics.

Jensen Huang’s GTC '25 Keynote Recap

Nvidia’s annual developer conference, GTC (GPU Technology Conference), has turned from a technical event into something closer to a rock concert. It is now an important event for the AI world, for Nvidia investors, for Nvidia’s biggest customers (the Big Tech hyperscalers and cloud computing behemoths), and for developers.

CEO Jensen Huang dubbed it the AI Super Bowl. Nvidia continues to scale up and scale out the AI compute it delivers: Huang reported huge demand for the latest Blackwell GPUs and laid out a continued aggressive roadmap for GPUs and AI systems, with Blackwell Ultra, Rubin, and Rubin Ultra due over the next three years.

He sees insatiable demand for Nvidia GPUs for AI computing, claiming that the rise of AI reasoning models has increased the token requirements of AI models to 100 times what Nvidia estimated last year. He expects the AI data center buildout to be worth a trillion dollars over the next three years, and agentic AI in the enterprise to drive significant demand for AI everywhere. He sees further multi-trillion-dollar opportunities in self-driving cars and robotics, which he calls physical AI, stating that:

Physical AI for industrial and robotics is a $50 trillion opportunity.

Beyond the core GPU roadmap, Nvidia’s key announcements at this GTC aimed to extend its lead in AI infrastructure, both in the data center and at the edge, and to broaden its support for robotics, automotive (self-driving cars), and enterprise computing, reinforcing its dominance in the GPU and AI markets.

GPU and AI Data Center Roadmap

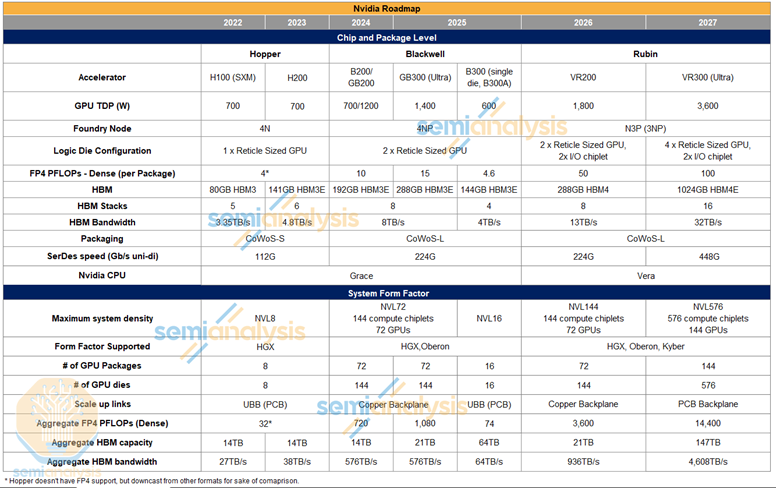

Nvidia has a one-year cadence for releasing its flagship GPUs and AI supercomputer systems, scaling up GPU performance (measured in FP4 PFLOPs in the AI inference era), system bandwidth, and memory capacity with each generation.

Nvidia’s Hopper architecture, deployed since 2023 as H100s and, later, H200s, has been the platform used to build the current generation of AI models, such as Grok 3, which was built with 100,000 H100s.

Blackwell: The Blackwell GPU (B200) and Blackwell NVL72 rack systems began shipping in late 2024 and are in full production, with 3.6 million GPUs already delivered. The Blackwell GPU delivers a 2.5x performance speedup over the prior-generation H200.

In his keynote, Jensen Huang recapped the earlier innovations that made Blackwell NVL72 a one-exaFLOP AI supercomputer. Nvidia disaggregated the NVLink switches, placing them at the center of the rack, and made the complete system fully liquid-cooled, which let it pack 144 GPU dies (72 dual-die Blackwell packages) into a single rack.

Blackwell Ultra will ship in the second half of 2025. The Blackwell Ultra GPU (B300, paired with a Grace CPU in the GB300 superchip) boasts 15 FP4 PFLOPs, a new instruction for attention, 1.5 times more memory than its predecessor, and twice the bandwidth, enabling support for larger AI models. With the higher-performance GPU, a Blackwell Ultra NVL72 rack offers 1.5 times more computing power than Blackwell.

Vera Rubin: Nvidia’s next architecture, named after astronomer Vera Rubin, is set for release in late 2026. It will feature a new CPU (Vera) with twice the performance of Grace and a new GPU (Rubin) rated at 50 FP4 PFLOPs (over 3x Blackwell Ultra’s 15 PFLOPs), along with faster, higher-bandwidth HBM4 memory and the new NVLink 6 interconnect.

Rubin Ultra: Following Vera Rubin, Rubin Ultra is planned for the second half of 2027. Each Rubin Ultra package will combine four GPUs and deliver 100 FP4 PFLOPs. The system will be an extreme scale-up, quadrupling the number of GPUs per rack to 576, connected via NVLink 576.

This single-rack Rubin Ultra system will deliver an incredible 15 exaFLOPs of FP4 performance and 4,600 terabytes per second of scale-up bandwidth, consume 600 kilowatts, and require 2.5 million parts.
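The rack-level figures above can be sanity-checked with simple arithmetic. The sketch below is our own back-of-the-envelope model, not Nvidia's methodology: it assumes peak rack compute is just the number of GPU packages times per-package FP4 PFLOPs, and it derives the Rubin Ultra package count (144) from the 576 GPUs and four GPUs per package quoted above. The names `rack_exaflops` and `PFLOPS_PER_EXAFLOP` are hypothetical helpers for illustration.

```python
"""Back-of-the-envelope check of the rack-scale FP4 figures quoted above.

Simplifying assumption: peak rack FP4 compute = GPU packages per rack x FP4
PFLOPs per package. Delivered performance is lower, and vendor figures may
mix dense and sparse FP4 numbers.
"""

PFLOPS_PER_EXAFLOP = 1_000


def rack_exaflops(packages: int, pflops_per_package: float) -> float:
    """Peak FP4 exaFLOPs for a rack of identical GPU packages."""
    return packages * pflops_per_package / PFLOPS_PER_EXAFLOP


# Blackwell Ultra NVL72: 72 GPU packages at 15 FP4 PFLOPs each.
blackwell_ultra_ef = rack_exaflops(packages=72, pflops_per_package=15)

# Rubin Ultra: 576 GPUs at 4 GPUs per package -> 144 packages of 100 PFLOPs.
rubin_ultra_packages = 576 // 4
rubin_ultra_ef = rack_exaflops(rubin_ultra_packages, pflops_per_package=100)

# Per-GPU ratio: Rubin (50 PFLOPs) vs. Blackwell Ultra (15 PFLOPs).
rubin_vs_blackwell_ultra = 50 / 15

# Compute density of the 600 kW Rubin Ultra rack, in FP4 PFLOPs per kilowatt.
rubin_ultra_pflops_per_kw = rubin_ultra_ef * PFLOPS_PER_EXAFLOP / 600

print(f"Blackwell Ultra NVL72: {blackwell_ultra_ef:.2f} exaFLOPs")
print(f"Rubin Ultra: {rubin_ultra_packages} packages, {rubin_ultra_ef:.1f} exaFLOPs")
print(f"Rubin vs. Blackwell Ultra per GPU: {rubin_vs_blackwell_ultra:.1f}x")
print(f"Rubin Ultra density: {rubin_ultra_pflops_per_kw:.0f} PFLOPs/kW")
```

Under these assumptions, Rubin Ultra comes out at 144 packages and 14.4 exaFLOPs, which rounds to the roughly 15 exaFLOPs quoted in the keynote, or about 24 FP4 PFLOPs per kilowatt at 600 kW.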

The successor to the Vera Rubin system will be named Feynman and released in 2028.

SemiAnalysis has published further details on Nvidia’s GPU and system roadmap, including specification charts.

Scaling Up with Innovations

The level of innovation in the Nvidia AI supercomputer is stunning. Nvidia is “transforming every layer of computing” to meet its aggressive goals for scaling up computing and increasing system performance. Huang announced innovations in silicon photonics and networking that will be introduced in future generations of Nvidia systems.

In silicon photonics, Nvidia announced its first co-packaged optics (CPO) silicon photonic system, a world-beating switch running at 1.6 terabits per second per port, based on micro-ring resonator modulator technology. This greatly improves the energy efficiency and scalability of the system interconnect, potentially enabling millions of GPUs within a single interconnect fabric.

This innovation improves the efficiency of Nvidia’s Spectrum-X Ethernet networking platform.

Nvidia isn’t just building GPUs; it is building AI factories. To support that, Nvidia announced an Omniverse Blueprint that helps design AI factories via simulation.

Nvidia also announced Dynamo, “the operating system of an AI factory.” Dynamo is an open-source software layer for accelerating and scaling AI models in AI factories. Its selling point is increased GPU utilization when serving AI inference workloads in the data center:

When serving the open-source DeepSeek-R1 671B reasoning model on NVIDIA GB200 NVL72, NVIDIA Dynamo increased the number of requests served by up to 30x, making it the ideal solution for AI factories looking to run at the lowest possible cost to maximize token revenue generation.

Powerful Desktop AI – DGX Spark and DGX Station

“AI has transformed every layer of the computing stack. It stands to reason a new class of computers would emerge — designed for AI-native developers and to run AI-native applications,” – Jensen Huang

AI PCs are a great concept, but the specs of last year’s Microsoft AI PCs don’t match Apple’s best M4-based PCs, and neither matches what you get from an RTX 40 series or RTX 50 series GPU added to a PC. Nvidia introduced DGX Spark and DGX Station to redefine the AI PC its way.

First announced at CES as Project DIGITS, DGX Spark is a small form-factor AI PC powered by a GB10 Grace Blackwell Superchip and positioned as a competitor to high-end desktops such as Apple’s top-tier Macs. It offers 1 PFLOP of FP4 AI compute and 128 GB of unified memory, suitable for inference on AI models of up to 200B parameters.
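The 200B-parameter figure is easier to interpret with some quick arithmetic. The sketch below is our own estimate, not Nvidia's sizing method: it assumes weight memory is simply parameters times bits per weight, treats 1 GB as 10**9 bytes, and ignores the KV cache, activations, and runtime overhead. The helper `weight_gb` is a hypothetical name for illustration.

```python
"""Rough check: can a 200B-parameter model's weights fit in 128 GB?

Assumptions (ours, not from Nvidia's spec sheet): weights are stored at a
fixed number of bits per parameter, 1 GB is treated as 10**9 bytes, and the
KV cache, activations, and runtime overhead are ignored, so real headroom
is smaller than shown.
"""

BYTES_PER_GB = 10**9
UNIFIED_MEMORY_GB = 128
PARAMS = 200e9


def weight_gb(params: float, bits_per_param: int) -> float:
    """Approximate size of a model's weights in gigabytes."""
    return params * bits_per_param / 8 / BYTES_PER_GB


for bits in (16, 8, 4):
    size_gb = weight_gb(PARAMS, bits)
    verdict = "fits" if size_gb <= UNIFIED_MEMORY_GB else "does not fit"
    print(f"{bits:>2}-bit weights: {size_gb:.0f} GB, {verdict} in {UNIFIED_MEMORY_GB} GB")
```

At 16 and 8 bits the weights alone need 400 GB and 200 GB; at 4 bits they need 100 GB, leaving roughly 28 GB for everything else. That suggests the 200B figure presumes a low-precision format such as FP4.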

DGX Station is an AI workstation designed for AI developers to perform training, fine-tuning, and inference on large models directly on a desktop. It delivers a formidable 20 petaflops of AI performance from a GB300 superchip that pairs a Blackwell Ultra GPU with a 72-core Grace CPU, along with up to 784 GB of coherent memory spanning GPU HBM and CPU memory. It runs Nvidia DGX OS, which lets workloads scale to Nvidia DGX Cloud, and acts like a slice of Nvidia’s AI factory.

Robotics and Physical AI

Nvidia released several open-source AI models and datasets to accelerate robotics and physical AI development.

Nvidia open-sourced Isaac GR00T N1, unveiled as the world’s first open-source humanoid robot foundation model. It aims to accelerate robotics development by integrating physical AI with an understanding of real-world physics, and it comes with development tools to support robotics innovation.

Nvidia announced Newton, an open-source physics engine developed in collaboration with Google DeepMind and Disney Research. Designed for robotics simulation, it can model rigid and soft bodies at fine granularity so that simulation environments adhere to physical reality. It advances physical AI development by providing a robust, accurate, GPU-accelerated simulation environment for robotics and autonomous systems.

Nvidia announced the release of new Cosmos world foundation models, which are open, customizable reasoning models for physical AI development. They are integrated with Nvidia’s Omniverse platform to generate an effectively unlimited variety of controlled environments for AI training and simulation, in particular for training robots.

Conclusion – Roadmaps, Agents, and Robots

Because Nvidia supplies the foundations of AI with chips, systems, and software, and AI is touching every industry, its conference announcements cover an ever-wider front of activity.

For example, Nvidia announced models and systems supporting healthcare and life sciences, such as MONAI. It also had several updates on AI for self-driving cars, including a new partnership with GM, and highlighted automotive safety with its "Halos" system.

The bottom-line theme from GTC is that Nvidia’s core GPU and AI system roadmap is on track, and Nvidia will continue to support and build its AI software stack to advance progress in AI, especially physical AI (self-driving cars and robots in particular). Doing so locks Nvidia in as the leader in the multi-trillion-dollar opportunity to sell AI systems for agentic AI and physical AI.