AI Week in Review 25.07.26

OpenAI & DeepMind AI models win IMO gold. Qwen3 2507: Qwen3-235B-A22B-Instruct-2507, Thinking, Qwen3-Coder. Higgs Audio v2. Google Opal, GitHub Spark. OpenReasoning-Nemotron. America’s AI Action Plan.

Top Story – AI Gets the Gold

An advanced version of Gemini Deep Think officially achieved a gold-medal level score (35/42) in the International Mathematical Olympiad (IMO), the premier global math competition. OpenAI also announced their AI reasoning model achieved a gold-medal score of 35/42 on this year’s IMO. Both OpenAI and Google solved (the same) five of six problems.
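For readers unfamiliar with IMO scoring, here is a minimal sketch of how 35/42 follows from five solved problems. It relies on the standard IMO format (six problems, each graded from 0 to 7 points); the per-problem breakdown and the `imo_score` helper are illustrative assumptions, not published grading sheets.

```python
# IMO format: 6 problems, each graded on a 0-7 point scale.
POINTS_PER_PROBLEM = 7
NUM_PROBLEMS = 6


def imo_score(per_problem_points):
    """Sum per-problem points after checking they fit the IMO format."""
    assert len(per_problem_points) == NUM_PROBLEMS
    assert all(0 <= p <= POINTS_PER_PROBLEM for p in per_problem_points)
    return sum(per_problem_points)


max_score = POINTS_PER_PROBLEM * NUM_PROBLEMS

# Assumed breakdown: five problems with full marks, one with no credit.
# This is one breakdown consistent with the reported 35/42.
reported = imo_score([7, 7, 7, 7, 7, 0])

print(f"{reported}/{max_score}")
```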

ByteDance's Seed-Prover scored a Silver, solving 4 out of 6 problems.

We share further details on this achievement in “AI Cracks the Code and Solves Math.” It’s impressive that both OpenAI and Google relied on general-purpose AI reasoning models working in natural language for both input and output; no specialized AI models or tools were used. Consequently, the techniques behind these AI models can be applied to other domains.

Top Tool – Qwen 3 2507 Update

Alibaba’s Qwen team has launched new open-source generative AI models for reasoning and coding that exceed performance of leading proprietary AI models. First, they updated the Qwen3 235B parameter mixture‑of‑experts (MoE) hybrid thinking model, creating separate instruct (Qwen3-235B-A22B-Instruct-2507) and chain‑of‑thought (Qwen3-235B-A22B-Thinking-2507) variants for specific tasks. Then they released a new SOTA MoE coding agent model, Qwen3-Coder-480B-A35B-Instruct.

The updated Qwen3-235B-A22B-Instruct-2507 offers a 256 K‑token context window without thinking token overhead. In non-thinking mode it surpasses models like Kimi K2, DeepSeek V3, and Claude Opus 4 on several benchmarks, scoring 77.5% on GPQA and setting new state‑of‑the‑art function‑calling scores on BFCL. These are huge leaps from prior Qwen 3 non-thinking performance, and they show you don’t need an explicit thinking mode to have strong AI capabilities.

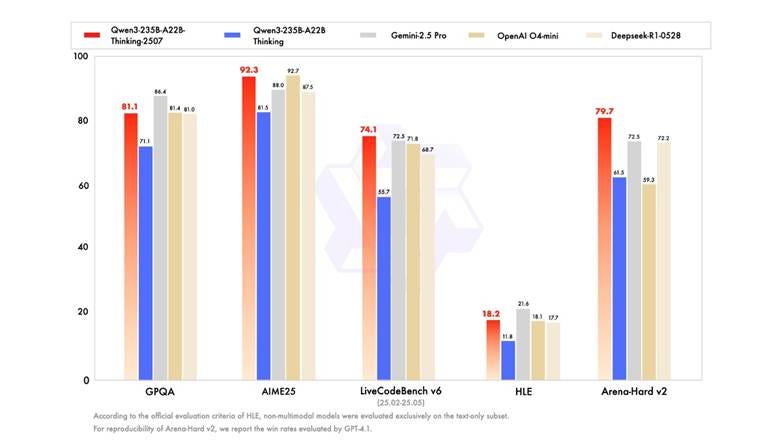

Qwen3-235B-A22B-Thinking-2507 adds thinking to the base Qwen3 235B MoE model and posts stellar results that outperform even Gemini 2.5 Pro and o4-mini on some benchmarks: 92.3% on AIME25, 74% on LiveCodeBench, and 18.2% on Humanity’s Last Exam. It’s not just a state-of-the-art AI reasoning model; it achieves SOTA status as an open AI model with a modest 22B active parameters.

The Qwen team also released Qwen3-Coder-480B-A35B-Instruct, an MoE with 480B total parameters (35B active) designed for agentic code generation. Trained on 7.5 trillion tokens, 70% of which are code, this model achieved a record 69.6% on SWE‑bench Verified. With up to 1 million token context via YaRN extension, Qwen3‑Coder delivers Claude Sonnet 4‑level performance at significantly reduced cost.
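To put the MoE figures in perspective, here is a rough back-of-the-envelope sketch using only the numbers quoted above. It shows what share of each model's parameters is active per token and how many of the coder's training tokens were code. The helper name and the dictionary layout are illustrative assumptions.

```python
def active_fraction(total_b, active_b):
    """Share of parameters used per token in a mixture-of-experts model."""
    return active_b / total_b


# (total parameters, active parameters), both in billions, as quoted above.
models = {
    "Qwen3-235B-A22B": (235, 22),
    "Qwen3-Coder-480B-A35B": (480, 35),
}

for name, (total, active) in models.items():
    share = active_fraction(total, active)
    print(f"{name}: {share:.1%} of parameters active per token")

# Qwen3-Coder training mix: 7.5 trillion tokens, 70% of them code.
training_tokens_t = 7.5
code_share = 0.70
code_tokens_t = training_tokens_t * code_share
print(f"Code tokens: about {code_tokens_t:.2f} trillion")
```

Both models activate under a tenth of their weights for any given token, which is where the cost advantage over dense models of similar total size comes from.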

Although billed as a ‘minor’ update, these improvements make Qwen 3 the best open source AI model and state-of-the-art for AI models overall. They achieved this by scaling reinforcement learning. As Junyang Lin, development lead at Alibaba Qwen, explained on the ThursdAI podcast, they used “reinforcement learning with over 20,000 parallel sandbox environments.” This let them scale RL through continuous code‑write‑test‑learn cycles.
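The write-test-learn idea can be sketched in a few lines. This is a toy illustration, not Qwen's pipeline: candidate programs are scored in parallel by the fraction of unit tests they pass, and that score would serve as the reward signal in an RL loop. The `solve` convention, the test cases, and the candidates are all hypothetical. Note that `exec` here is not a real sandbox; a production system would isolate each run in a container or VM.

```python
from concurrent.futures import ThreadPoolExecutor


def run_tests(candidate_source, tests):
    """Return the fraction of (args, expected) cases a candidate passes."""
    namespace = {}
    try:
        # Illustration only: exec offers no isolation, unlike a real sandbox.
        exec(candidate_source, namespace)
        solve = namespace["solve"]
    except Exception:
        return 0.0  # code that fails to load earns no reward
    passed = 0
    for args, expected in tests:
        try:
            if solve(*args) == expected:
                passed += 1
        except Exception:
            pass  # a crashing test counts as a failure
    return passed / len(tests)


def score_candidates(candidates, tests, workers=4):
    """Evaluate all candidates concurrently, one reward per candidate."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda src: run_tests(src, tests), candidates))


# Hypothetical task: implement solve(a, b) that adds two numbers.
TESTS = [((1, 2), 3), ((0, 0), 0), ((-1, 5), 4)]
CANDIDATES = [
    "def solve(a, b):\n    return a - b\n",  # wrong logic
    "def solve(a, b):\n    return a + b\n",  # correct
    "def solve(a, b) return a + b\n",        # syntax error
]

rewards = score_candidates(CANDIDATES, TESTS)
best_index = max(range(len(rewards)), key=rewards.__getitem__)
best = CANDIDATES[best_index]
print(rewards, best_index)
```

In an actual RL setup, these rewards would drive a policy update rather than a simple pick-the-best step. The sketch only shows how test outcomes become a training signal.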

The Qwen3 models are open AI models licensed under Apache 2.0, available via Qwen chat and open AI model platforms as well as downloadable from HuggingFace.

But wait, there’s more: The Qwen team also released Qwen Code, a CLI tool and coding agent forked from Gemini CLI to provide access to their new coding model. It’s available via GitHub.

AI Tech and Product Releases

Boson AI open‑sourced Higgs Audio v2, a powerful text-to-speech (TTS) model that generates expressive speech in real time, supporting zero‑shot multi‑speaker dialogue, voice cloning, and hummed melodies. Combining a 3B parameter Llama 3.2 core with a 2.2B parameter audio Dual‑FFN trained on ten million hours of speech and music, Higgs Audio v2 has deep language and acoustic understanding and surpasses GPT‑4o‑mini in multi-speaker dialog. The model runs efficiently on a single A100 GPU and is available through HuggingFace.

Google has launched Opal, a new AI-powered "vibe-coding" tool from Google Labs that allows users to create and share their own AI mini-applications. This tool enables the chaining together of prompts, models, and other tools through simple natural language and visual editing. Opal is designed to empower creators to build applications without needing to write complex code.

GitHub Spark, another tool to create web apps from single-shot prompts, was released to public preview for Copilot subscribers. GitHub says:

“GitHub Spark helps you transform your ideas into full-stack intelligent apps and publish with a single click.”

Simon Willison did inception-level vibe-coding with Spark in his post “Using GitHub Spark to reverse engineer GitHub Spark,” showing how useful prompt-to-app tools can be.

Google announced the stable release of Gemini 2.5 Flash-Lite, the fastest and most cost-efficient model in the Gemini 2.5 family. It is faster and cheaper than 2.0 Flash while outperforming it on coding, math, and multimodal understanding. It’s available to try for free on Google AI Studio.

Google launched a new AI-powered feature called Web Guide that organizes search results by grouping web pages according to various aspects of a user's query. Web Guide is a Search Labs experiment that uses Gemini AI to group relevant search results for open-ended or complex queries. Initially available on the Web tab, it will expand to the "All" tab.

Google has rolled out an AI feature letting U.S. users virtually try on clothes by uploading their own photos across its shopping platforms. This builds on previous virtual try-on features by personalizing the experience.

Google has updated its NotebookLM technology to allow users to create “expert notebooks.” This new feature enables the AI to be trained on a user's notes and documents, facilitating more powerful and context-aware conversations.

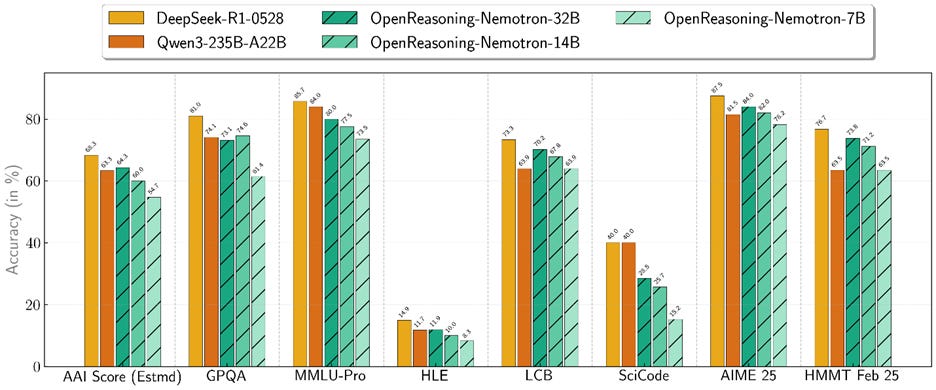

Nvidia released an update to their Nemotron reasoning models: OpenReasoning-Nemotron, a family of small AI reasoning models from 1.5B to 32B, derived from Qwen2.5 and distilled from DeepSeek R1 0528. Benchmark performance on OpenReasoning-Nemotron is excellent. They are available on HuggingFace.

Nvidia has also made Kimi-K2-Instruct available as an Nvidia NIM. This makes the powerful K2 MoE model more accessible for developers to use in their applications.

Anthropic rolled out new ways to engage with artifacts on mobile, allowing users to create interactive tools, browse a gallery, and share work directly from their phones.

LlamaIndex released a fully open-source agent for automating Request for Proposal (RFP) responses. The application, built on the LlamaIndex framework and LlamaCloud, handles document extraction, analysis, and report generation.

AI Research News

Anthropic found that more isn’t always better when scaling test-time compute, reporting the result in the research paper “Inverse Scaling in Test-Time Compute.” Anthropic observed benchmark cases for Opus 4 where longer reasoning time led to lower accuracy. They note that scaling test-time compute may “reinforce problematic reasoning patterns,” which would limit how far reasoning can be scaled.

Sapient Intelligence released the Hierarchical Reasoning Model (HRM), “a novel recurrent architecture that attains significant computational depth while maintaining both training stability and efficiency.” As explained in the paper “Hierarchical Reasoning Model,” even a tiny 27M parameter HRM achieves complex reasoning on specific tasks such as solving difficult Sudoku puzzles.

Apple presented their latest ideas for multi-token prediction in the paper “Your LLM Knows the Future: Uncovering Its Multi-Token Prediction Potential.” These improvements in multi-token prediction speed up LLM inference by up to 5 times without quality loss.
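As a rough intuition for where a speed-up of this size could come from, here is an idealized sketch. It is not Apple's method. It assumes every decoding step costs the same and that all tokens predicted in a step are accepted, so it gives an upper bound on step savings. The function name and the token counts are hypothetical.

```python
import math


def decoding_steps(num_tokens, tokens_per_step):
    """Forward passes needed to emit num_tokens at a fixed tokens-per-step rate."""
    return math.ceil(num_tokens / tokens_per_step)


# Hypothetical 1,000-token response.
baseline = decoding_steps(1000, 1)  # standard one-token-at-a-time decoding
multi = decoding_steps(1000, 5)     # idealized: 5 tokens accepted per step
speedup = baseline / multi

print(f"{baseline} steps vs {multi} steps: {speedup:.1f}x fewer forward passes")
```

In practice some predicted tokens are rejected and each step carries extra overhead, so measured gains fall below this idealized ratio.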

AI Business and Policy

The White House released “America’s AI Action Plan,” with policy proposals aiming to “win the AI race” by accelerating AI progress and deployment and securing U.S. leadership. We have a detailed review of it in “Trump’s AI Action Plan to Win in AI.”

Framing AI as both an economic opportunity and a national security imperative, the AI Action Plan contains 90 recommendations, including rolling back AI regulations, streamlining data center and related infrastructure development, establishing AI testing facilities, funding AI research and workforce training, supporting open‑source models, adopting AI across the Federal Government, and providing grants and investments via DoD and other agencies to spur AI investment and progress.

For the most part, Silicon Valley is celebrating the WH AI Plan, since it prioritizes growth over guardrails in AI and was developed with Silicon Valley input.

OpenAI and Oracle have agreed to expand Stargate data center capacity by an additional 4.5 gigawatts in the U.S., bringing the total to over 5 GW. This partnership is a significant investment in the data centers with millions of GPUs needed to run advanced AI models. The expansion is expected to create over 100,000 jobs in construction and operations, and the Stargate I site in Abilene, Texas is beginning to come online.

OpenAI has launched a $50 million fund to support nonprofit and community organizations in using AI to address critical challenges. The fund aims to foster innovation in areas such as education, economic opportunity, and healthcare.

Meta has joined with Amazon Web Services (AWS) to launch a program to support promising early-stage startups that are building AI applications with Llama models. The program will provide selected startups with resources and support to help them innovate and grow.

Meta named Shengjia Zhao chief scientist of Meta Superintelligence Labs (MSL), its new AI superintelligence unit. Zhao, a former OpenAI researcher and key contributor to ChatGPT and GPT-4, will lead research and set MSL's research agenda under Alexandr Wang, complementing the leadership team with his expertise in frontier AI models.

OpenAI CEO Sam Altman warned that there’s no legal confidentiality when using ChatGPT as a therapist. He pointed out that users' sensitive conversations with AI lack legal privilege, meaning the company could be legally forced to disclose them, and he advocated for legal frameworks that ensure privacy for AI interactions.

Tesla is significantly behind its 2025 goal of producing 5,000 Optimus humanoid robots, having only made hundreds so far. Amid declining revenue, Elon Musk now targets Optimus 3 production by early next year and "a million units a year" within five years. This ambition echoes Musk's past unfulfilled projections, like robotaxis.

Reka announced a $110M funding round from investors, including Nvidia and Snowflake.

Google CEO Sundar Pichai confirmed a new partnership to supply OpenAI with Google Cloud computing resources. Despite OpenAI being Google Search's biggest competitor, this deal provides a massive new customer for Google Cloud, which is seeing significant revenue growth from AI companies.