Anthropic Claude Update Adds Computer Use

Anthropic releases updated Claude 3.5 Sonnet and Haiku and introduces Computer Use. Will we finally get AI Agents that work?

Introduction

The AI revolution continues. Every week, new AI models and AI tools get released and improvements are announced. When the news slows, the gap is filled with speculation about what is coming next. Recently, there’s been buzz about the next releases to expect from OpenAI (GPT-Next), Google (Gemini 2.0), and Anthropic (Claude 3.5 Opus), with speculation we will get at least two of three by year-end.

Today, the next shoe dropped from Anthropic, which released two new models, an upgraded Claude 3.5 Sonnet and Claude 3.5 Haiku, along with a groundbreaking new feature: computer use.

Claude 3.5 Sonnet and Haiku

First, let’s review the new Claude 3.5 Sonnet and Haiku releases:

Claude 3.5 Sonnet is now the leading model for many uses. It was already on par with GPT-4o and Gemini 1.5 Pro in many areas, and many users already prefer it for coding and writing. With improved scores on MMMU (visual tasks) and GPQA (academic reasoning tasks), this update makes Claude 3.5 Sonnet more definitively a leader.

Claude 3.5 Sonnet particularly excels at coding. The update delivers significantly better coding performance than its predecessor and most other contenders, with the OpenAI o1 models the possible exception on some tasks. On SWE-bench, Anthropic reports that it comes out ahead even there:

On coding, it improves performance on SWE-bench Verified from 33.4% to 49.0%, scoring higher than all publicly available models—including reasoning models like OpenAI o1-preview and specialized systems designed for agentic coding.

Claude 3.5 Haiku matches Claude 3 Opus at lower cost: The Claude 3.5 Haiku model brings performance on par with Claude 3 Opus, with low latency, at a more affordable price. It outdoes GPT-4o mini on some benchmarks but falls behind Gemini 1.5 Flash on others. Anthropic points out:

With low latency, improved instruction following, and more accurate tool use, Claude 3.5 Haiku is well suited for user-facing products, specialized sub-agent tasks, and generating personalized experiences from huge volumes of data—like purchase history, pricing, or inventory records.

Anthropic is positioning Haiku as the LLM for running AI agents and embedded AI applications.

Computer Use

The Computer Use feature, the most interesting part of this release, is in public beta. Claude now can interface with computers by understanding the visual screen and navigating like humans do:

Available today on the API, developers can direct Claude to use computers the way people do—by looking at a screen, moving a cursor, clicking buttons, and typing text. Claude 3.5 Sonnet is the first frontier AI model to offer computer use in public beta.

Anthropic warns that the feature is still experimental and error-prone, and says that this is just the start.

When we think about building AI agents, the critical initial step isn’t the AI reasoning, it’s being able to interact with the world. The most important interaction for AI agents doing most informational grunt-work is with the computer. As Anthropic explains:

Developers can integrate this API to enable Claude to translate instructions (e.g., “use data from my computer and online to fill out this form”) into computer commands (e.g. check a spreadsheet; move the cursor to open a web browser; navigate to the relevant web pages; fill out a form with the data from those pages; and so on).

One way to think about this is to consider actions as a different modality. Just as multi-modal LLMs can now take video as input or can input and output speech, we now have an LLM that can output action-based tokens that relate to a computer screen (“go to this part of the webpage and click on a link”). It’s the embodied action modality.
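To make the "actions as a modality" idea concrete, here is a minimal sketch of the loop an application would run around such a model: the model emits a structured action, the application executes it on the machine, and the result is fed back as the next observation. Everything here is an assumption for illustration: `FakeComputer`, `scripted_model`, and `run_agent` are hypothetical names, the action format is invented for this sketch, and the "model" is a fixed script rather than a call to Anthropic's API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

Action = Dict[str, object]


@dataclass
class FakeComputer:
    """Hypothetical stand-in for a real desktop: records what the agent did."""
    cursor: Tuple[int, int] = (0, 0)
    clicks: List[Tuple[int, int]] = field(default_factory=list)
    typed: List[str] = field(default_factory=list)

    def execute(self, action: Action) -> str:
        """Apply one action and return a text observation for the model."""
        kind = action["action"]
        if kind == "screenshot":
            return f"screen: cursor={self.cursor}, typed={self.typed}"
        if kind == "mouse_move":
            x, y = action["coordinate"]
            self.cursor = (x, y)
            return f"cursor moved to {self.cursor}"
        if kind == "left_click":
            self.clicks.append(self.cursor)
            return f"clicked at {self.cursor}"
        if kind == "type":
            self.typed.append(str(action["text"]))
            return f"typed {action['text']!r}"
        raise ValueError(f"unknown action: {kind}")


def scripted_model(history: List[str]) -> Optional[Action]:
    """Hypothetical 'model': replays a fixed plan, one action per turn.

    A real model would choose the next action from the screenshot and the
    history; returning None signals that the task is finished.
    """
    plan: List[Action] = [
        {"action": "screenshot"},
        {"action": "mouse_move", "coordinate": [120, 240]},
        {"action": "left_click"},
        {"action": "type", "text": "hello"},
    ]
    step = len(history)
    return plan[step] if step < len(plan) else None


def run_agent(
    model: Callable[[List[str]], Optional[Action]],
    computer: FakeComputer,
    max_steps: int = 10,
) -> List[str]:
    """Ask the model for an action, execute it, feed back the observation."""
    history: List[str] = []
    for _ in range(max_steps):  # hard cap so a confused model cannot loop forever
        action = model(history)
        if action is None:
            break
        history.append(computer.execute(action))
    return history


if __name__ == "__main__":
    pc = FakeComputer()
    for line in run_agent(scripted_model, pc):
        print(line)
```

The step cap and the explicit list of allowed actions are deliberate: with an experimental, error-prone model, the surrounding code is where you limit what the agent is permitted to do.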

Anthropic’s Computer Use feature isn’t unique, but until now controlling the computer required a separate AI agent layer, outside the LLM, to drive the interface. Tools like Multi-On and their Agent Q already combined planning and control of computer interfaces to intelligently navigate websites and applications.

What’s new here is that Claude 3.5 Sonnet with Computer Use is internally incorporating capabilities that were once outside the AI model, either as part of external control code, or part of a separate AI model.

How to Use Latest Claude

One takeaway from this update is that Claude 3.5 Sonnet is now, more than ever, the best AI model for code generation and writing. If you don’t use it already, add it to your rotation for AI prompting, and consider making it your default for those two tasks.

Haiku is a competitive offering as well: a fast, cheap, and efficient AI model on par with GPT-4o mini and Gemini 1.5 Flash. With a score of 88% on HumanEval, it has uses in coding and beyond.

The Computer Use feature may be the most exciting yet, because it gives us a path to more enabled AI models and better AI agents. It may take a while to shake it out and evaluate it though. Many will soon beta-test the feature to see how it works and how best to integrate it. It will be exciting to see what new AI tools can be built on it.

Will we get good AI agents soon?

“2025 is when AI agents will work.” – Sam Altman, at OpenAI Dev-Day

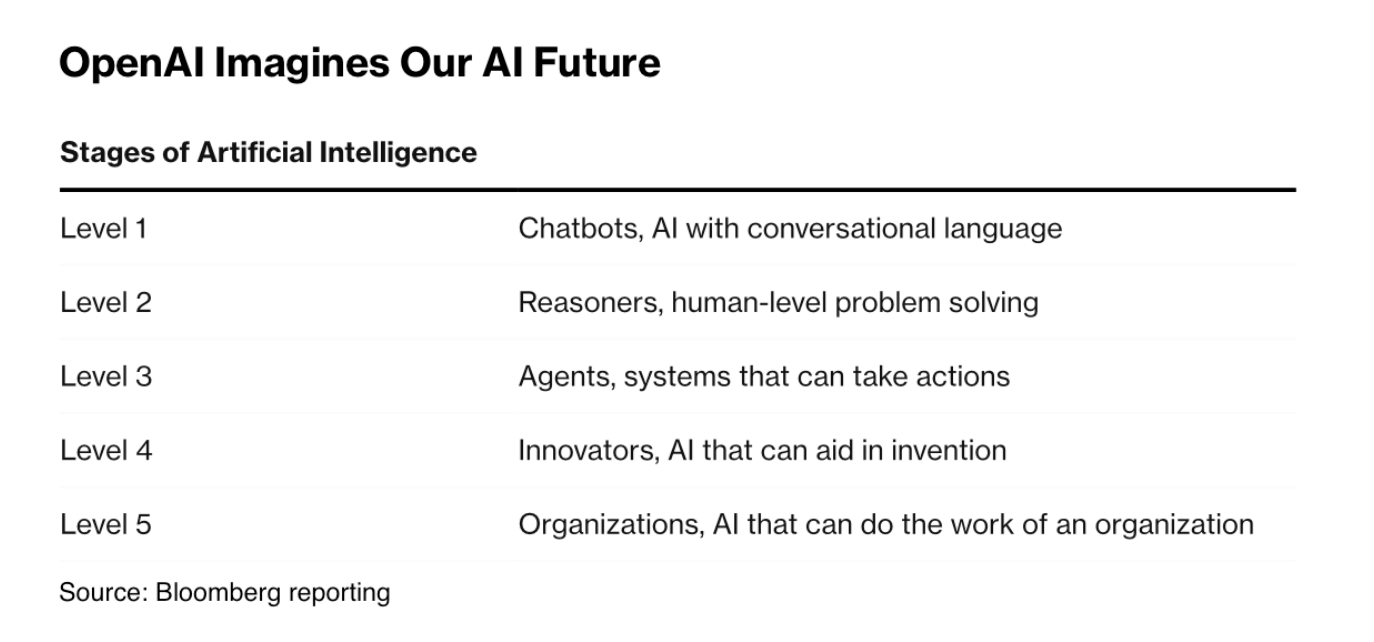

There is no doubt OpenAI has AI agent capabilities like Computer Use on its roadmap, as it has made public statements to that effect. OpenAI’s own chart of the levels toward AGI also lists “AI agents” as Level 3 in the evolution. It’s coming.

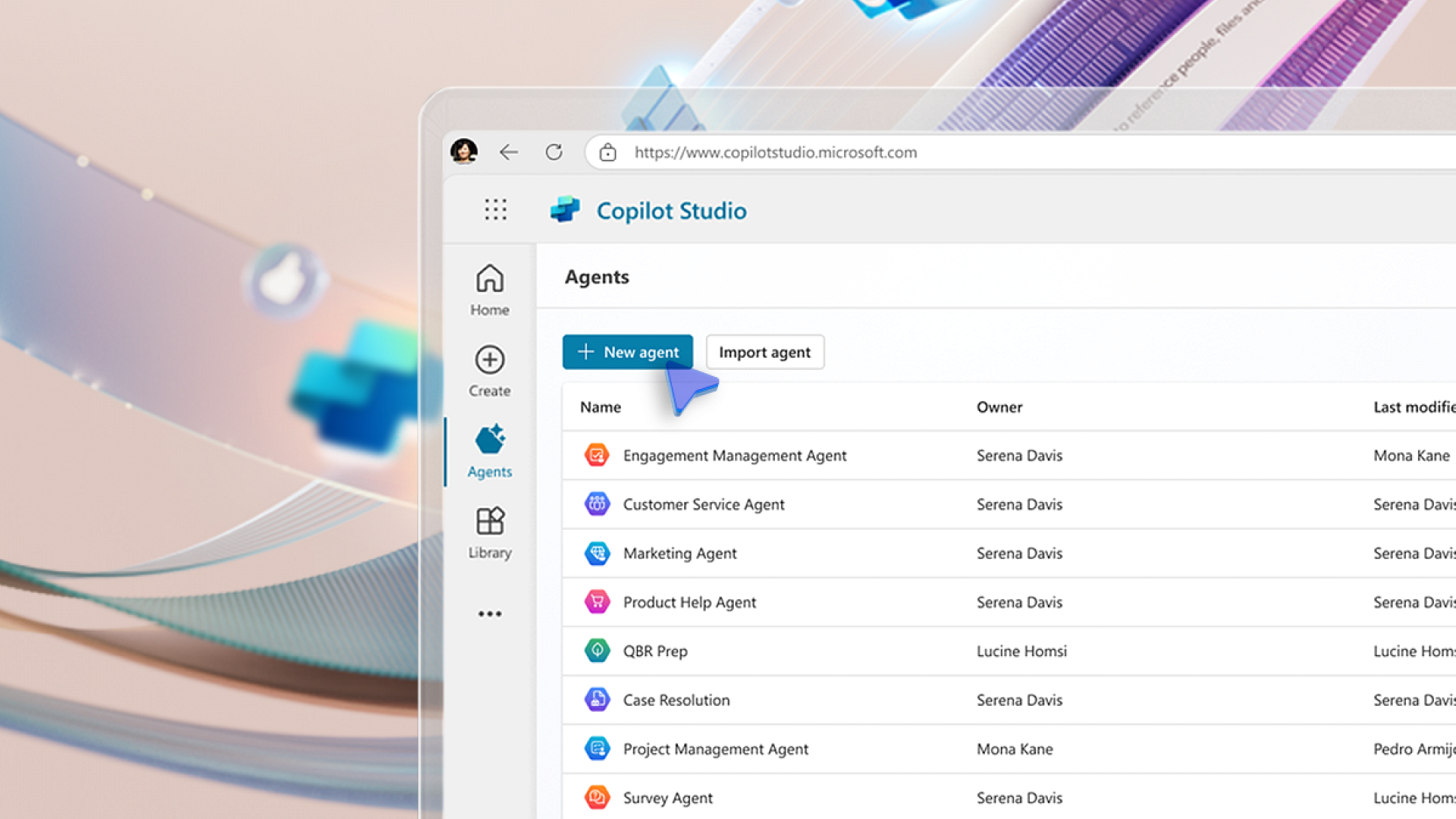

Microsoft this week announced new agentic capabilities for Microsoft Copilot:

Today, we’re announcing new agentic capabilities that will accelerate these gains and bring AI-first business process to every organization.

First, the ability to create autonomous agents with Copilot Studio will be in public preview next month.

Second, we’re introducing ten new autonomous agents in Dynamics 365 to build capacity for every sales, service, finance, and supply chain team.

This will roll out in coming weeks and is part of Microsoft’s ongoing development of Copilot agents for business automation. Enterprise adoption of AI agents still has a long way to go. Some of it has to do with AI agents not yet being dependable enough.

Microsoft themselves tout the application of Copilot agents in business use cases: “At Microsoft, we’re using Copilot and agents to reimagine business process across every function while empowering employees to scale their impact.”

Conclusion - How to Use AI

As capable as AI is, the best AI model is still a junior-level colleague or an intern. This is why the use model of human plus AI, in a copilot or supervised mode, is the most productive use case for AI currently.

Ethan Mollick reminds us how we can use AI as a proposer and human as judge-editor:

We are used to hoarding professional writing & analysis, with limited time and ability to consider alternatives. Most people haven’t realized they can get words in abundance thanks to AI. “Give me 10 variations on this email. Generate 200 ideas” You provide key taste & selection.

An inappropriate use of AI is to take unreliable AI output as it is, treating it like it’s perfect. The other extreme is to not use AI at all. You should instead let AI tools give you AI insights that you improve further. Human plus AI trumps either one on its own.

Even though I am steeped in AI, I often must remind myself to use AI more. One barrier is that AI needs to be in your workflow to be convenient enough to use. What we need is to bring the AI to the use-case, not the use-case to the AI.

The Computer Use feature opens the door to wider use cases where AI acts as a “gofer” or intelligent tool, automating grunt tasks for us that require a sequence of steps. We all have many such tasks that chew up our time.

The ChatGPT moment did not happen when it did because ChatGPT was a better model. The better model, InstructGPT, had been out for ten months but never went viral. The simple ChatGPT interface changed everything.

In the same way, Computer Use is another AI capability innovation that opens doors for more use cases and greater utility for AI, just like code execution, Artifacts, Canvas and audio input and output are doing already. Bring it on.

PS. Note to Readers

I intend to write more about AI tools you can use and ways you can leverage AI to your benefit. If there is a topic, use case, or a particular tool you’d like me to cover, put a comment below.

A good example is AI coding tools. They are rapidly evolving, with several new and quickly improving tools. We wrote about them just over a month ago, in AI for Coding – AI Coding Assistants. Yet since then, tools like Cline (formerly Claude Dev) have been renamed and updated. Let me know if you want more of that, or something different.