Claude 4 Released – AI for Coding and Agents

The new Claude Opus 4 and Claude Sonnet 4 are state-of-the-art on coding and complex tasks, and they are built to support intelligent AI agents.

Three AI Conferences, One Theme - AI Agents

There were three major AI vendor conferences this week:

Microsoft Build, their developer conference with a big emphasis on AI.

Google I/O, which we covered in depth in “Google I/O 25 - Google Delivers!”

Anthropic’s Code with Claude, where Anthropic announced Claude Opus 4 and Claude Sonnet 4.

Three conferences, yet they all shared one theme: AI agents.

Microsoft set the tone by declaring “We’ve entered the era of AI agents.”

We envision a world in which agents operate across individual, organizational, team and end-to-end business contexts. This emerging vision of the internet is an open agentic web, where AI agents make decisions and perform tasks on behalf of users or organizations.

It’s not an accident that this vision is shared by Google, OpenAI, and most AI startups. There is clear potential for AI agents that perform workflow tasks, boosting productivity and replacing millions of hours of work with intelligent automation. The greatest AI business opportunity right now is to create and serve those AI agents, monetizing the AI platform shift.

To seize this opportunity, Google released agentic AI features at Google I/O. For the same reason, Anthropic’s Claude 4 announcement spoke of “setting new standards for coding, advanced reasoning, and AI agents,” and the release includes features such as an MCP connector, a Files API, and more. Claude 4 is built for agentic AI.

Claude Opus 4 and Sonnet 4 – Benchmarks

“Claude Opus 4 is the world’s best coding model, with sustained performance on complex, long-running tasks and agent workflows.” - Anthropic

Anthropic’s Claude 4 release introduces two hybrid‐reasoning models - Opus 4 and Sonnet 4 (they re-ordered the name and number on their AI models) - that push the boundaries of AI capabilities and achieve SOTA performance on several benchmarks. Both models integrate dynamic tool use, extended‐thinking reasoning modes, and memory persistence for sustained, contextual understanding.

Both Opus 4 and Sonnet 4 have “hybrid” thinking that can toggle between quick, non-thinking replies and extended reasoning over multiple steps. They present a “thinking summary” to make their internal deliberations more transparent to users, but not a full thinking record. They can interleave tool calls, such as web search or code execution, with their reasoning, and they can extract and store “tacit knowledge” during execution, enabling them to maintain context across sessions and complete long-running projects without losing their way.
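For developers, the “hybrid” toggle is a request parameter. The sketch below builds keyword arguments for the Messages API in the official `anthropic` Python SDK, with extended thinking switched on or off. It makes no network call. `build_request` is a hypothetical helper, and the model ID and the `thinking` parameter shape (`type`, `budget_tokens`) are assumptions taken from Anthropic’s documentation at launch, so check them against current docs before relying on them.

```python
def build_request(prompt: str, extended: bool, budget_tokens: int = 4096,
                  max_tokens: int = 8192) -> dict:
    """Return keyword arguments for client.messages.create(...).

    Hypothetical helper: it only builds a dict and makes no API call.
    """
    request = {
        "model": "claude-sonnet-4-20250514",  # assumed model ID; verify in docs
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    if extended:
        # Assumed constraint: the thinking budget is at least 1,024 tokens and
        # smaller than max_tokens, leaving room for the final answer.
        if not 1024 <= budget_tokens < max_tokens:
            raise ValueError("budget_tokens must be >= 1024 and < max_tokens")
        request["thinking"] = {"type": "enabled", "budget_tokens": budget_tokens}
    return request


quick = build_request("Summarize this changelog in one line.", extended=False)
deep = build_request("Plan a step-by-step refactor of this module.", extended=True)
```

With the SDK installed and an API key configured, `anthropic.Anthropic().messages.create(**deep)` would send the extended-thinking request, while the quick variant simply omits `thinking`. Keeping the budget below `max_tokens` leaves room for the visible answer after the model’s thinking.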

Claude Opus 4 is Anthropic’s new flagship AI model, optimized for sustained, complex workflows. Claude 4 is most impressive at coding. Opus 4 surpasses competitors such as Gemini 2.5 Pro and o3, scoring 72.5% on SWE-bench Verified; with parallel test-time compute (running multiple attempts in parallel and selecting the best), this rises to an incredible 79.4% for Opus 4 and 80.2% for Sonnet 4.
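Parallel test-time compute trades extra inference for accuracy: run several independent attempts and keep the best one. The sketch below shows only that general best-of-N pattern. `attempt` and its random score are hypothetical stand-ins for a model run and a grader (for example, a test suite). This is not Anthropic’s actual selection procedure.

```python
from concurrent.futures import ThreadPoolExecutor
import random


def attempt(seed: int) -> dict:
    """Hypothetical stand-in for one independent model attempt.

    The score plays the role of a grader's verdict, e.g. the fraction of tests passed.
    """
    rng = random.Random(seed)  # seeded so the example is reproducible
    return {"seed": seed, "score": rng.random()}


def best_of_n(n: int) -> dict:
    # Run n independent attempts concurrently...
    with ThreadPoolExecutor(max_workers=n) as pool:
        candidates = list(pool.map(attempt, range(n)))
    # ...then keep the highest-scoring candidate.
    return max(candidates, key=lambda c: c["score"])


best = best_of_n(8)
print(best)
```

The extra attempts cost more compute, which is why Anthropic reports these scores separately from the single-attempt results.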

It’s not just on benchmarks that Claude 4 shines at coding. In testing at Rakuten, Opus 4 ran an open-source refactoring job independently for nearly seven hours, a major leap in how long an AI model can stay on task. That sustained performance backs up Anthropic’s claim that Opus 4 is the “best coding model in the world.”

Claude Sonnet 4 shares many of Claude Opus 4’s core improvements - enhanced coding and math, better instruction following, extended thinking, tool integrations, and a 65% reduction in “reward hacking” relative to Sonnet 3.7 - in a more cost-effective package. Remarkably, Sonnet 4 scores slightly higher than Opus 4 on SWE-bench Verified; it’s built for great coding. It serves as a drop-in upgrade to Claude Sonnet 3.7.

While Claude 4 leads in software engineering and is strong overall, it is not SOTA across the board. Both Opus 4 and Sonnet 4 trail Gemini 2.5 Pro and o3 on multimodal and PhD-level evaluation suites. The context window also hasn’t grown; it remains at 200K tokens.

Claude Sonnet 4 keeps the prior Sonnet API price point of $3/$15 per million tokens (input/output). Claude Opus 4 API is priced at $15/$75 per million tokens (input/output), five times as much. For those choosing a workhorse AI model for their AI coding assistant or AI agent tasks, it’s a no-brainer that Sonnet 4 is the better deal.
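To make the price gap concrete, here is a small cost calculator using the list prices quoted above. The workload (2 million input tokens, 500,000 output tokens) is a hypothetical example, the dictionary keys are illustrative labels rather than API model IDs, and the sketch ignores prompt-caching and batch discounts, so treat it as back-of-the-envelope arithmetic, not a billing tool.

```python
# List prices in USD per million tokens (input, output), as quoted above.
PRICES_PER_MTOK = {
    "claude-sonnet-4": (3.00, 15.00),
    "claude-opus-4": (15.00, 75.00),
}


def cost_usd(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimate API cost at list price, ignoring caching and batch discounts."""
    in_price, out_price = PRICES_PER_MTOK[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


# Hypothetical workload: 2M input tokens and 500K output tokens.
sonnet = cost_usd("claude-sonnet-4", 2_000_000, 500_000)  # $13.50
opus = cost_usd("claude-opus-4", 2_000_000, 500_000)      # $67.50
print(f"Sonnet 4: ${sonnet:.2f}, Opus 4: ${opus:.2f}, ratio {opus / sonnet:.1f}x")
```

Because both the input and output prices are exactly five times higher for Opus 4, the ratio is 5x for any mix of input and output tokens.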

Key Claude 4 Features

Anthropic announced enhanced developer tools and new API features alongside the Claude 4 release. The new API capabilities include:

Claude 4 models can use multiple tools simultaneously, significantly improving efficiency.

Web search and other tools can now be invoked during extended thinking, so the model’s reasoning is better grounded.

A code execution tool lets Claude run code in a sandboxed environment for data analysis and calculation tasks.

An MCP connector in the API lets Claude connect to remote MCP servers directly, simplifying the use of MCP.

A Files API lets you upload a file once and have Claude access it repeatedly across requests.

Prompt caching now offers a one-hour TTL (time-to-live) option alongside the standard 5 minutes, supporting longer workflows by keeping cached context available longer.
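The longer TTL matters for agents that pause between steps. As a local analogy only (this is not how Anthropic implements its cache), the sketch below compares a 5-minute and a 1-hour time-to-live when an agent idles for 20 minutes. `TTLCache` and `FakeClock` are hypothetical names. The cache also refreshes an entry’s expiry on each hit, which matches how Anthropic describes its prompt cache but is treated as an assumption here.

```python
import time


class TTLCache:
    """Minimal time-to-live cache: an entry is usable until its TTL runs out."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}  # key -> (value, expiry timestamp)

    def put(self, key, value):
        self._store[key] = (value, self.clock() + self.ttl)

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._store[key]  # expired: the context must be sent again
            return None
        # Assumption: a hit refreshes the expiry.
        self._store[key] = (value, self.clock() + self.ttl)
        return value


class FakeClock:
    """Controllable clock so the example runs instantly."""

    def __init__(self):
        self.now = 0.0

    def __call__(self):
        return self.now


clock = FakeClock()
five_min = TTLCache(5 * 60, clock)
one_hour = TTLCache(60 * 60, clock)
for cache in (five_min, one_hour):
    cache.put("agent-context", "long system prompt and tool definitions")

clock.now = 20 * 60  # the agent idles for 20 minutes between steps
print(five_min.get("agent-context"))  # None: the 5-minute entry has expired
print(one_hour.get("agent-context"))  # still cached under the 1-hour TTL
```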

Anthropic phrased these features as “part of a comprehensive toolkit for building AI agents.”
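Using tools simultaneously pays off because independent tool calls do not have to wait on each other. In Anthropic’s Messages API, a single model response can contain several `tool_use` blocks. The sketch below shows only the client side of that idea: it executes a batch of independent calls concurrently with `asyncio.gather`. The tool functions are hypothetical stand-ins that simulate latency with `asyncio.sleep`.

```python
import asyncio
import time


async def web_search(query: str) -> str:
    """Hypothetical tool: pretends to query a search engine."""
    await asyncio.sleep(0.2)  # simulated network latency
    return f"results for {query!r}"


async def read_file(path: str) -> str:
    """Hypothetical tool: pretends to read a file."""
    await asyncio.sleep(0.2)  # simulated I/O latency
    return f"contents of {path}"


async def run_tool_calls(calls):
    """Execute independent tool calls concurrently; results keep request order."""
    tools = {"web_search": web_search, "read_file": read_file}
    return await asyncio.gather(*(tools[name](arg) for name, arg in calls))


calls = [("web_search", "Claude 4 pricing"), ("read_file", "notes.md")]
start = time.perf_counter()
results = asyncio.run(run_tool_calls(calls))
elapsed = time.perf_counter() - start
print(results, f"{elapsed:.2f}s")
```

The two 0.2-second calls finish in roughly 0.2 seconds rather than 0.4, and `asyncio.gather` returns results in the order the calls were made, so each result can be matched back to its request.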

Claude 4 – Good Dev Vibes

The AI Advantage put Claude Opus 4 through its paces. The host noted its ability to produce human-like text that doesn’t sound artificial. Testing Opus 4 on coding, he vibe-coded a nice solar system web app, an RPG game, and a finance tracker from fairly simple prompts. Opus nailed them all, and in one shot turned a web app into a Chrome extension. “There’s no quirks! It all works. Everything just works.”

Claude 4 hype on X is at the “mind blown” level. Matt Shumer on Claude 4: “Claude 4 Opus just ONE-SHOTTED a WORKING browser agent — API and frontend. One prompt. I've never seen anything like this.”

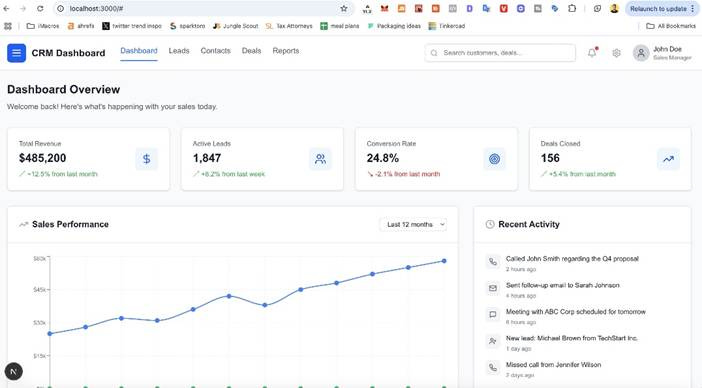

Eric from Exploding Ideas made a CRM dashboard with a single prompt: “This took 30 seconds, I’m mind blown.”

The typical X reaction is: “Claude 4.0 sonnet in Cursor is insane. one-shots almost everything I’m throwing at it.”

Beyond one-shot coding of functional applications and scripts, Claude 4 doesn’t seem to stand out. Claude Sonnet 4 failed Discover AI’s logic test, showing limitations in complex reasoning.

Built for Coding Integration - Claude Code and Beyond

Anthropic has positioned Claude 4 as the AI model for coding. It is already integrated into leading AI coding assistants such as GitHub Copilot, Cursor, and Windsurf.

It’s also integrated, of course, with Anthropic’s Claude Code, which is now generally available. Claude Code has gained extensions for VS Code and JetBrains IDEs, plus a GitHub integration. Anthropic also released a Claude Code SDK, so Claude Code features can be used in other software development tools.

Anthropic stated that Claude Sonnet 4’s strengths align with the needs of coding agents: deep software engineering and coding knowledge, strong instruction following, and powerful problem-solving abilities. Claude was already a leading AI model for coding, and Claude 4 is designed to maintain that position.

Deceptive Opus 4 and AI Safety

We have activated the AI Safety Level 3 (ASL-3) Deployment and Security Standards described in Anthropic’s Responsible Scaling Policy (RSP) in conjunction with launching Claude Opus 4. - Anthropic

The above Anthropic announcement sounds somewhat foreboding. Is Claude 4 dangerous? Not exactly. Anthropic published a lengthy report explaining its reasoning: it is pre-emptively implementing the ASL-3 standards for Opus 4, even though it has not determined that the model requires them.

What does “ASL-3 Security” mean? Anthropic’s “ASL-3” safeguards beef up harmful content detectors and cybersecurity defenses for the Opus 4 model. The riskier the AI model, the greater the safeguards.

In safety audits, Apollo Research found behaviors in early Opus 4 snapshots alarming enough to advise against release. They found that, when strategic deception was instrumentally useful, Opus 4 “schemes and deceives” at rates higher than prior models. Anthropic claims those deceptive behaviors in Opus 4 were addressed through a bug fix and enhanced safety protocols.

Conclusion – Claude 4 is a Developer Release

The new Claude 4 models are impressive and reliable, with high intelligence and excellent features for day-to-day use, including a polished writing style and integration with Gmail and your own files.

However, Claude 4 really shines as a developer release. First, Claude Opus 4 and Sonnet 4 are the best AI coding models yet, extending Claude’s strength in coding. Second, features such as the MCP connector, web search, and code execution make Claude 4 an excellent choice for AI agent use cases.

For developers: upgrade to Claude 4 in your AI coding assistant and consider using it in your next AI agent project. One downside is Claude Opus 4’s high token cost; Claude Sonnet 4 is much more reasonably priced.

There are three target market segments for AI: The enterprise, using AI agents for workflows; consumers using AI chatbots and AI assistants for daily life; and developers using AI in coding assistants.

As great as Claude models are, Anthropic is not leading in consumer AI chatbots: it lacks ChatGPT’s brand and features, and with fewer resources than well-funded Google, it needs to be more focused. Claude does not compete on Ghibli-style image generation or on multimodality. Instead, Anthropic has found success making Claude the leading AI model for AI coding assistants, the leading edge of AI agents.

With Claude 4 leaning in on features to support AI agent use cases, Anthropic is shifting towards providing foundational AI models for AI agents, serving developers making AI agents and the enterprises using AI agents. From a business perspective, this approach makes sense: In the AI agent era, the vast majority of AI tokens will be consumed by AI agents and AI assistants, not in AI chatbots.