Design Patterns for Effective AI Agents

Anthropic gives advice on building AI Agents. Simple workflows connecting capable tools and advanced AI models can yield powerful AI Agents.

Building Better AI Agents

Our recent articles on AI agents, “Manus and the New AI Agents” and “Building Next-Gen AI Agents with OpenAI Tools and MCP,” highlighted recent releases of highly capable AI agents.

AI agent builders are unlocking the next level of capability.

AI agent frameworks have moved ‘upstream’ and expanded in scope, enabling a new generation of more capable AI agents.

This essay discusses design choices and AI agent workflows that improve the effectiveness of AI agents, highlighting insights from Anthropic.

Automating complex tasks with AI agents requires getting several pieces working together:

Planning - Tasks with multiple steps and internal decision paths require planning.

Reasoning - Complex situations and queries require good AI reasoning.

Knowledge retrieval and grounding - Tasks involving knowledge acquisition require incorporating knowledge into the AI model's context via Retrieval Augmented Generation (RAG). For general knowledge research queries, this relies on reliable web search.

Code execution – Tasks requiring any sort of calculation, database retrieval, data analysis, or code generation benefit from code execution. Better code generation output makes this more reliable.

Tool use – Web search, browser use, and other computer tools and APIs give AI agents the ability to execute tasks, going far beyond chatbot capabilities. Full, general computer use enables a wide range of virtual tasks.

AI agents are constructed by organizing AI models and ecosystem components, connecting them with AI agent frameworks to execute workflows. The latest AI agents are more powerful because their underlying AI models and AI components are more reliable and capable.

AI model and agent builders have worked through challenges in RAG, tool calling, code execution, browser use, computer control, and more to make AI agents work. They trained AI models for better tool calling in agentic use, and they improved the components and algorithms used in AI workflows.

One lesson from these efforts is that the most powerful AI agents can be built on simple flows that follow basic patterns. Anthropic has both learned and shared this lesson in their article “Building effective agents.”

AI Workflows and AI Agents

We've worked with dozens of teams building LLM agents across industries. Consistently, the most successful implementations use simple, composable patterns rather than complex frameworks. - Anthropic

In late 2024, Anthropic shared lessons learned from building AI agents in “Building effective agents,” promoting the use of “simple, composable patterns” over complex frameworks to address most AI use cases.

In defining AI agents, Anthropic describes a taxonomy of workflows and distinguishes between two primary architectures in agentic systems:

AI Workflows: These involve orchestrating LLMs and tools through predefined code paths, ensuring predictability and consistency for well-defined tasks.

AI Agents: In contrast, agents dynamically direct their processes and tool usage, maintaining control over task execution. This approach offers flexibility and model-driven decision-making at scale.

We define AI agents more broadly, as AI automation that automatically executes either a fixed or a dynamic workflow, while Anthropic considers only dynamic workflows to be AI agents. This semantic distinction matters less than their message about these workflows:

When building applications with LLMs, we recommend finding the simplest solution possible, and only increasing complexity when needed.

AI Workflow Patterns

Anthropic describes the following types of fixed AI workflows, where the structure of the task doesn’t change:

Augmented LLM: This augments the LLM with retrieval and memory for knowledge-grounding and tool capabilities. Current AI models can generate their own search queries, select appropriate tools, and determine what information to retain. ChatGPT running Search and Canvas in the chatbot interface is using this flow.
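A minimal sketch of the augmented LLM idea, assuming a stubbed model: the model chooses a tool and writes its own query, the wrapper runs a toy retrieval function, feeds the result back into context, and keeps it in memory. `fake_model`, `search_docs`, `DOCS`, and the `TOOL:`/`ANSWER:` text convention are hypothetical illustrations, not any vendor's API.

```python
from dataclasses import dataclass, field

# Hypothetical knowledge base standing in for a real retrieval index.
DOCS = {
    "mcp": "MCP is an open protocol for connecting AI models to tools and data.",
    "rag": "RAG retrieves documents and adds them to the model's context.",
}

def search_docs(query: str) -> str:
    """Toy retrieval: return every document whose key appears in the query."""
    hits = [text for key, text in DOCS.items() if key in query.lower()]
    return "\n".join(hits) or "no results"

def fake_model(prompt: str) -> str:
    """Hypothetical stub LLM: requests a search first, then answers from context."""
    if "CONTEXT:" not in prompt:
        question = prompt.split("QUESTION:", 1)[1].strip()
        return f"TOOL: search {question}"
    context = prompt.split("CONTEXT:", 1)[1].strip()
    return f"ANSWER: {context.splitlines()[0]}"

@dataclass
class AugmentedLLM:
    memory: list[str] = field(default_factory=list)

    def ask(self, question: str) -> str:
        reply = fake_model(f"QUESTION: {question}")
        if reply.startswith("TOOL: search "):
            # The model selected the tool and generated its own search query.
            query = reply[len("TOOL: search "):]
            context = search_docs(query)
            self.memory.append(context)  # retain retrieved facts for later turns
            reply = fake_model(f"QUESTION: {question}\nCONTEXT: {context}")
        return reply.removeprefix("ANSWER: ")
```

For example, `AugmentedLLM().ask("What is MCP?")` triggers one search and answers from the retrieved snippet. In a real system the stub would be a model call with tool definitions, and the model, not a string check, would decide whether to search.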

Prompt chaining workflow: This decomposes a task into a sequence of steps, where each LLM call processes the output of the previous one. A Chain is a sequence of operations (prompting an LLM, getting a response, feeding it into another prompt, etc.) that can be composed to form complex workflows. This approach is for tasks that can be easily divided into fixed subtasks, trading off latency for higher accuracy.
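A prompt chain can be sketched in a few lines, assuming any function that maps a prompt string to a response string. `fake_llm`, `run_chain`, and the outline/draft/polish steps are hypothetical; the empty-output check illustrates a programmatic gate between steps.

```python
from typing import Callable

LLM = Callable[[str], str]

def fake_llm(prompt: str) -> str:
    """Hypothetical stub LLM: returns canned output per instruction."""
    instruction, _, text = prompt.partition("\n\n")
    if instruction.startswith("Outline"):
        return "1. Intro\n2. Patterns\n3. Conclusion"
    if instruction.startswith("Draft"):
        return "Draft based on:\n" + text
    return text.upper()  # stands in for the "Polish" step

def run_chain(task: str, steps: list[str], llm: LLM) -> str:
    """Feed each step's output into the next step's prompt."""
    output = task
    for instruction in steps:
        output = llm(f"{instruction}\n\n{output}")
        # Programmatic gate: stop early if a step produced nothing usable.
        if not output.strip():
            raise ValueError(f"Step failed: {instruction!r}")
    return output

STEPS = [
    "Outline an article on the topic below.",
    "Draft the article from the outline below.",
    "Polish the draft below.",
]
```

Calling `run_chain("AI agent design patterns", STEPS, fake_llm)` runs three sequential calls; swapping `fake_llm` for a real client changes nothing else, which is the point of keeping the chain this plain.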

Routing workflow: This classifies an input and directs it to a specialized LLM or task, allowing for separation of concerns and the development of more specialized prompts. This is effective for complex tasks with distinct categories better handled by separate AI models.
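A routing sketch, assuming the classifier would normally be an LLM call; here a keyword heuristic stands in for it so the example runs offline. The categories and the handler functions in `ROUTES` are hypothetical placeholders for specialized prompts or models.

```python
from typing import Callable

def classify(query: str) -> str:
    """Stand-in for an LLM classifier call (keyword heuristic, an assumption)."""
    q = query.lower()
    if any(w in q for w in ("refund", "charge", "invoice")):
        return "billing"
    if any(w in q for w in ("error", "crash", "bug")):
        return "technical"
    return "general"

# Each route has its own specialized prompt and could use a different model.
ROUTES: dict[str, Callable[[str], str]] = {
    "billing": lambda q: f"[billing prompt] {q}",
    "technical": lambda q: f"[technical prompt] {q}",
    "general": lambda q: f"[general prompt] {q}",
}

def route(query: str) -> str:
    category = classify(query)
    handler = ROUTES.get(category, ROUTES["general"])  # fall back on unknown labels
    return handler(query)
```

The separation of concerns shows up in the data structure: improving the billing prompt touches one entry in `ROUTES` without risking the others.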

Parallelization workflow: This runs multiple LLM calls in parallel and aggregates their outputs, either to break a task into independent subtasks (sectioning) or to collect diverse responses to the same task and collate them (voting).
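Both variants can be sketched with a thread pool, assuming each LLM call is I/O-bound (a network request), which is why threads help. `fake_llm` is a hypothetical stub that simulates one dissenting vote; `sectioning` and `voting` are illustrative names.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

def fake_llm(prompt: str) -> str:
    """Hypothetical stub LLM; sample #2 disagrees to simulate diverse answers."""
    if prompt.startswith("Is this code vulnerable?"):
        return "no" if prompt.endswith("#2") else "yes"
    return f"summary of {prompt.split(': ', 1)[1]}"

def sectioning(sections: list[str]) -> list[str]:
    """Run independent subtasks concurrently; map() keeps input order."""
    with ThreadPoolExecutor() as pool:
        return list(pool.map(lambda s: fake_llm(f"Summarize: {s}"), sections))

def voting(question: str, n: int = 3) -> str:
    """Ask the same question n times and return the majority answer."""
    prompts = [f"{question} #{i}" for i in range(n)]
    with ThreadPoolExecutor() as pool:
        answers = list(pool.map(fake_llm, prompts))
    return Counter(answers).most_common(1)[0][0]
```

Here `voting("Is this code vulnerable?")` collects two "yes" and one "no" and returns "yes". Majority vote is only one aggregation choice; a threshold (for example, flag if any sample says "yes") may suit safety checks better.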

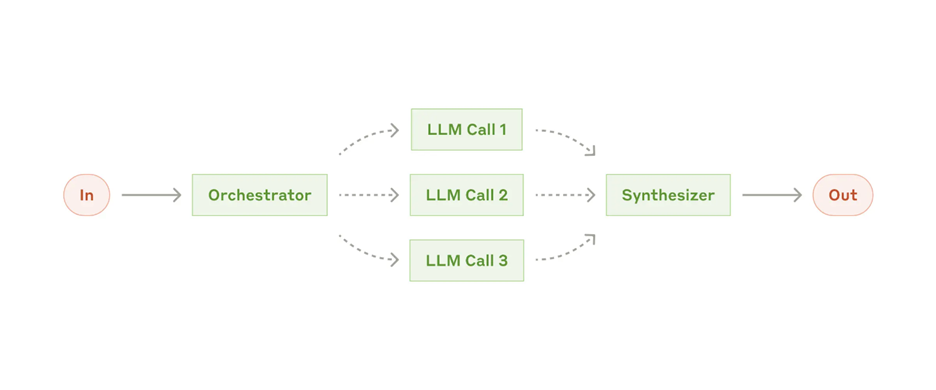

Orchestrator-workers workflow: A central LLM dynamically breaks down tasks, delegates them to worker LLMs, and synthesizes their results. This adds some dynamism, which suits complex tasks where the required subtasks cannot be predicted in advance.
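A sketch of the orchestrator-workers shape, assuming the orchestrator returns its plan as a JSON list of subtasks. Both LLMs are hypothetical stubs; the key property is that the number and content of subtasks come from the input at run time rather than from fixed code.

```python
import json

def orchestrator_llm(task: str) -> str:
    """Hypothetical stub: a real orchestrator LLM would plan subtasks itself."""
    files = [w for w in task.split() if w.endswith(".py")]
    return json.dumps([f"update {f}" for f in files])

def worker_llm(subtask: str) -> str:
    """Hypothetical stub worker LLM."""
    return f"done: {subtask}"

def synthesize(results: list[str]) -> str:
    # A real system might use another LLM call to merge results.
    return "\n".join(results)

def orchestrate(task: str) -> str:
    # 1. Plan: subtasks depend on the input (real model output may need validation).
    subtasks = json.loads(orchestrator_llm(task))
    # 2. Delegate each subtask to a worker.
    results = [worker_llm(s) for s in subtasks]
    # 3. Synthesize worker outputs into one result.
    return synthesize(results)
```

A coding task that mentions two files yields two worker calls; one that mentions five yields five, which is the dynamism that distinguishes this pattern from fixed sectioning.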

Evaluator-optimizer workflow: One LLM call generates a response while another provides an evaluation (LLM-as-a-judge), which can loop back as feedback to further generation, iteratively refining the output. This is effective when clear evaluation criteria exist, for example, in math and coding tasks.
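A minimal evaluator-optimizer loop for a coding task, assuming a stubbed generator. Note one swap: the evaluator here is a unit-test check rather than an LLM judge, which is possible precisely because coding has a clear criterion. `generator`, `evaluator`, and `evaluate_optimize` are hypothetical names.

```python
from typing import Optional

def generator(task: str, feedback: Optional[str]) -> str:
    """Hypothetical stub generator: fixes its answer once it gets feedback."""
    if feedback is None:
        return "def add(a, b): return a - b"
    return "def add(a, b): return a + b"

def evaluator(code: str) -> tuple[bool, str]:
    """Test-based judge (stands in for LLM-as-a-judge)."""
    namespace: dict = {}
    exec(code, namespace)  # only for this stub; sandbox real model output
    ok = namespace["add"](2, 3) == 5
    return ok, "" if ok else "add(2, 3) should return 5"

def evaluate_optimize(task: str, max_rounds: int = 3) -> tuple[str, int]:
    feedback: Optional[str] = None
    for round_no in range(1, max_rounds + 1):
        candidate = generator(task, feedback)
        ok, feedback = evaluator(candidate)  # feedback loops into next generation
        if ok:
            return candidate, round_no
    raise RuntimeError("no acceptable answer within max_rounds")
```

The first candidate fails the test, the feedback string goes back to the generator, and the second round passes. The `max_rounds` cap keeps a stubborn generator from looping forever.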

Anthropic has shared cookbooks for these flows, but the flows are simple and clear enough that you can just prompt an AI model for boilerplate code to start from, using a prompt like this:

Use Python and the latest LangChain library to write a program that implements a Prompt-Chaining flow for LLMs. This flow decomposes a task into sequential subtasks, where each step is an LLM call that builds on earlier results. The LLM used should be configurable: Gemini, OpenAI, or Ollama.

The AI Agent Workflow

The final pattern, the AI agent workflow, is the most powerful because it is the dynamic flow of an AI agent, yet it is conceptually simple. The autonomous AI agent takes an action, checks the environment, and changes its behavior based on feedback from the environment. It is simple in structure, yet general and powerful in application. The AI agent workflow suits open-ended AI agent tasks, such as Anthropic’s Computer Use.
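The act, observe, adapt loop can be sketched with a toy environment, assuming the LLM policy is replaced by a simple rule: the hypothetical `Agent` narrows its guess from "higher"/"lower" feedback. The step cap and the recorded trace illustrate a guardrail and visible steps for debugging.

```python
from dataclasses import dataclass, field

@dataclass
class GuessEnv:
    """Toy environment: the agent must find a hidden number from feedback."""
    target: int

    def step(self, action: int) -> str:
        if action == self.target:
            return "correct"
        return "higher" if action < self.target else "lower"

@dataclass
class Agent:
    """Stand-in for an LLM policy: adapts its range to environment feedback."""
    low: int = 0
    high: int = 100
    trace: list[str] = field(default_factory=list)

    def act(self) -> int:
        return (self.low + self.high) // 2

    def observe(self, action: int, feedback: str) -> None:
        self.trace.append(f"tried {action}: {feedback}")  # visible steps
        if feedback == "higher":
            self.low = action + 1
        elif feedback == "lower":
            self.high = action - 1

def run_agent(env: GuessEnv, agent: Agent, max_steps: int = 10) -> bool:
    for _ in range(max_steps):  # guardrail: cap the loop
        action = agent.act()
        feedback = env.step(action)
        agent.observe(action, feedback)
        if feedback == "correct":
            return True
    return False
```

With `GuessEnv(target=37)`, the trace reads "tried 50: lower", "tried 24: higher", "tried 37: correct". A real agent replaces `act` with a model call and `step` with tool execution, but the loop keeps this shape.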

The main downside of the AI agent workflow is that errors can compound, making reliability challenging. For example, the Computer Use AI agents are still in beta release, as they fail to complete many tasks.

We recommend extensive testing in sandboxed environments, along with the appropriate guardrails.

’Tis a Gift to be Simple: The Case of Open Deep Research

The extremely useful Deep Research tools from Google and OpenAI appear to be based on a powerful yet simple framework. While we don’t know the internals of these proprietary tools, we can infer that they iteratively run search queries and interpret the collected information, then draft a final report.

Developers have built open-source versions of Deep Research that replicate its workflow:

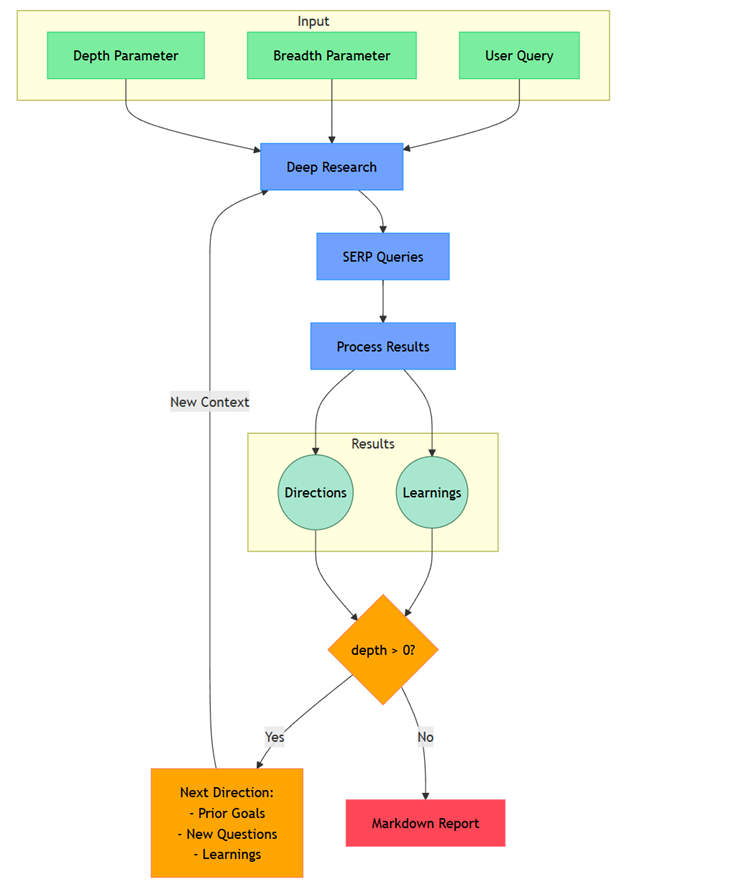

David Zhang has an open-source deep research implementation called Open Deep Research on GitHub, which was coded up in under 500 lines of code. He documented and shared a flowchart for this AI research agent.

LangChain implemented Ollama Deep Researcher, an open-source web research agent that runs locally and uses LLMs through Ollama. The high-level flow is the same as in these other tools: an iterative loop of web searching and data collection, followed by a final summary of the research.
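The shared loop (search, collect, reflect on gaps, repeat, then summarize) can be sketched as follows. This mirrors the high-level flow described above, not the actual code of Open Deep Research or Ollama Deep Researcher; `web_search`, `reflect`, `summarize`, and `CORPUS` are hypothetical stubs.

```python
from typing import Optional

CORPUS = {
    "ai agent patterns": ["Prompt chaining splits tasks.", "Routing picks a handler."],
    "routing": ["Routing classifies inputs first."],
}

def web_search(query: str) -> list[str]:
    """Hypothetical stub for a web search tool."""
    return CORPUS.get(query, [])

def reflect(notes: list[str], searched: set[str]) -> Optional[str]:
    """Stub for the LLM step that spots a gap and proposes a follow-up query."""
    for note in notes:
        term = note.split()[0].lower()
        if term in CORPUS and term not in searched:
            return term
    return None  # nothing left to look up

def summarize(topic: str, notes: list[str]) -> str:
    """Stub for the final LLM call that writes the report."""
    return f"Report on {topic}:\n" + "\n".join(f"- {n}" for n in notes)

def deep_research(topic: str, max_iterations: int = 3) -> str:
    notes: list[str] = []
    searched: set[str] = set()
    query: Optional[str] = topic
    for _ in range(max_iterations):
        if query is None:
            break
        searched.add(query)
        notes.extend(web_search(query))   # collect
        query = reflect(notes, searched)  # decide what to search next
    return summarize(topic, notes)
```

The `max_iterations` bound plays the role of a research depth limit; the whole agent is a loop plus a final summarization call.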

As shown by the example of Deep Research, extremely useful AI agents can be based on straightforward structured AI workflows. Similar to Deep Research, the Manus release has been followed by open-source Manus AI agent clones, like OpenManus. These releases likewise show that powerful AI agents are built from capable tools and advanced AI models connected into fairly simple frameworks and flows.

Conclusion – Best Practices for Implementing Agents

Frameworks can help you get started quickly, but don't hesitate to reduce abstraction layers and build with basic components as you move to production. - Anthropic

Robust, reliable software is built on standard algorithms, structures, and designs, such as the design patterns catalogued in the “Gang of Four” book Design Patterns. Robust, reliable AI agents similarly depend on standard interfaces (like MCP), common structures, and well-defined AI workflows.

Anthropic has emphasized that the best practices for implementing effective AI agents are based on simplicity and transparency:

Maintain Simplicity: Design AI agents with straightforward architectures to enhance reliability and maintainability.

Ensure Transparency: Explicitly display the AI agent’s planning steps to foster trust and aid debugging.

Carefully craft Agent-Computer Interfaces (ACI): Thorough tool documentation and testing are important for seamless, reliable AI agents.
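As an illustration of careful ACI design, here is a tool definition in the `name`/`description`/`input_schema` (JSON Schema) shape used for tools in Anthropic's API, with a hypothetical `read_file` tool and a hypothetical `lint_tool` check that flags undocumented tools or parameters. The tool itself and the 20-character threshold are assumptions for the sketch.

```python
READ_FILE_TOOL = {
    "name": "read_file",
    "description": (
        "Read a UTF-8 text file and return its contents. "
        "Use this before editing a file. Fails if the file does not exist."
    ),
    "input_schema": {
        "type": "object",
        "properties": {
            "path": {
                "type": "string",
                "description": "Absolute path, e.g. /repo/src/app.py. Relative paths are rejected.",
            },
            "max_bytes": {
                "type": "integer",
                "description": "Optional cap on bytes returned; defaults to the whole file.",
            },
        },
        "required": ["path"],
    },
}

def lint_tool(tool: dict) -> list[str]:
    """Hypothetical check: flag tools or parameters that lack documentation."""
    problems = []
    if len(tool.get("description", "")) < 20:  # arbitrary minimum length
        problems.append(f"{tool['name']}: description too short")
    props = tool["input_schema"].get("properties", {})
    for name, spec in props.items():
        if not spec.get("description"):
            problems.append(f"{tool['name']}.{name}: missing description")
    for name in tool["input_schema"].get("required", []):
        if name not in props:
            problems.append(f"{tool['name']}: required '{name}' is not defined")
    return problems
```

Requiring an absolute path in the schema description is an example of designing the interface so the model is less likely to misuse it; running a check like `lint_tool` in CI is one way to keep tool documentation from decaying.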

These principles help developers create AI agents that are not only powerful but also reliable, maintainable, and trusted by users.

Given that effective AI agents often rely on simple AI workflows that are not hard to clone, open-source alternatives to proprietary AI agents will continue to proliferate quickly.
