“Directly competing with open source is a losing proposition” - Google memo author

An internal memo written by a Google engineer leaked in the past day, and it makes remarkable admissions about the power of open-source AI in the LLM landscape. Its headline is “We Have No Moat, And Neither Does OpenAI,” and its thesis is that open-source AI is beating both Google and OpenAI in the AI arms race:

we aren’t positioned to win this arms race and neither is OpenAI. While we’ve been squabbling, a third faction has been quietly eating our lunch. I’m talking, of course, about open source. Plainly put, they are lapping us.

As if to make the point, a new AI research paper came out in the past day as well: Distilling Step-by-Step! Outperforming Larger Language Models with Less Training Data and Smaller Model Sizes. This is one of many papers since the seminal Alpaca release, a mere two months ago, that use distillation and fine-tuning techniques to create excellent small fine-tuned LLMs that match the performance of larger LLMs.

These items make for a worthwhile addendum to my article on the LLM Landscape, but before we dig into the Google memo itself, a question: Is it real? Simon Willison, who first shared news of this memo yesterday, has a tweet supporting its validity, and a Bloomberg report confirmed the author and authenticity. Bloomberg reported that it was written in April and has been shared widely within Google.

The Open Source AI Challenge

What the Google memo outlines starkly is the remarkably rapid and low-cost rate of progress in open source AI, building on LLM developments:

They are doing things with $100 and 13B params that we struggle with at $10M and 540B. And they are doing so in weeks, not months.

The author raises the alarm about how exposed Google and OpenAI are to the rapid evolution of smaller open-source AI models, saying:

We have no secret sauce.

People will not pay for a restricted model when free, unrestricted alternatives are comparable in quality.

Giant models are slowing us down.

His main justification for these points is the “tremendous outpouring of innovation” built on the LLaMA and Alpaca releases:

Here we are, barely a month later, and there are variants with instruction tuning, quantization, quality improvements, human evals, multimodality, RLHF, etc. etc. many of which build on each other.

In particular, he says that LoRA - Low-Rank Adaptation of Large Language Models - “is an incredibly powerful technique we should probably be paying more attention to” because it drastically lowers the cost and difficulty of fine-tuning a model:

The barrier to entry for training and experimentation has dropped from the total output of a major research organization to one person, an evening, and a beefy laptop.

In this respect, he compares it to Stable Diffusion and what has happened in the generative image AI space. The August 2022 open-source release of Stable Diffusion models that could be run on home hardware led to an explosion of interest, innovation, and derivative models. The March release of LLaMA weights and Alpaca was a “Stable Diffusion moment” for LLMs, as Simon Willison put it.

Using LoRA on these LLaMA models is a game-changer. It “allows model fine-tuning at a fraction of the cost and time. Being able to personalize a language model in a few hours on consumer hardware is a big deal.”
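To make the cost argument concrete, here is a minimal sketch of the low-rank update behind LoRA, written in plain Python with no ML library. It assumes the standard formulation from the LoRA paper, W' = W + (alpha / r) * B * A, where the pretrained weight W stays frozen and only the small matrices A and B are trained. The function names, the layer size of 4096, and the rank of 8 are illustrative choices of mine, not figures from the memo.

```python
import random

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def lora_param_counts(d_out, d_in, rank):
    """Trainable parameters: full fine-tuning vs. a rank-`rank` LoRA adapter."""
    full = d_out * d_in            # every entry of W is trainable
    lora = rank * (d_out + d_in)   # only B (d_out x rank) and A (rank x d_in)
    return full, lora

def make_lora(d_out, d_in, rank, seed=0):
    """A gets small random values, B starts at zero, so the initial update is zero."""
    rng = random.Random(seed)
    A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
    B = [[0.0] * rank for _ in range(d_out)]
    return A, B

def effective_weight(W, A, B, alpha, rank):
    """Frozen base weight plus the scaled low-rank update (alpha / rank) * B @ A."""
    delta = matmul(B, A)
    s = alpha / rank
    return [[w + s * d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(W, delta)]

# Hypothetical layer size, chosen only to show the scale of the saving.
full, lora = lora_param_counts(d_out=4096, d_in=4096, rank=8)
print(full, lora, full // lora)
```

For this hypothetical 4096 x 4096 layer, the adapter trains 65,536 values instead of 16,777,216, a 256-fold reduction for that layer. That gap is the kind of saving that makes fine-tuning on consumer hardware plausible.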

His other top-line points are also compelling and interesting:

Retraining models from scratch is the hard path

Part of what makes LoRA so effective is that - like other forms of fine-tuning - it’s stackable. … as new and better datasets and tasks become available, the model can be cheaply kept up to date, without ever having to pay the cost of a full run.

By contrast, training giant models from scratch not only throws away the pretraining, but also any iterative improvements that have been made on top. In the open source world, it doesn’t take long before these improvements dominate, making a full retrain extremely costly. … we should invest in more aggressive forms of distillation that allow us to retain as much of the previous generation’s capabilities as possible.
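One way to read “stackable” is that each fine-tune contributes a small additive delta that can be folded into the same frozen base weights. The sketch below illustrates that reading only. It treats adapters as independent low-rank deltas, which is a simplifying assumption of mine: in practice an adapter trained on top of an earlier one depends on it. The adapter names and the `merge_adapters` helper are hypothetical.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def merge_adapters(base, adapters):
    """Return base + sum(scale * B @ A) over all adapters, without mutating base."""
    merged = [row[:] for row in base]
    for A, B, scale in adapters:
        delta = matmul(B, A)  # rank-r update with the same shape as base
        for i, row in enumerate(delta):
            for j, value in enumerate(row):
                merged[i][j] += scale * value
    return merged

# Toy 2x2 base weight and two rank-1 adapters, each given as (A, B, scale).
base = [[1.0, 0.0], [0.0, 1.0]]
instruction_adapter = ([[0.0, 2.0]], [[1.0], [0.0]], 1.0)
dialogue_adapter = ([[3.0, 0.0]], [[0.0], [1.0]], 1.0)

merged = merge_adapters(base, [instruction_adapter, dialogue_adapter])
print(merged)
```

The base matrix is never retrained here. Each new adapter costs only its own small A and B, which is the contrast the memo draws with a full training run.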

Large models aren’t more capable in the long run if we can iterate faster on small models

LoRA updates are very cheap … Training times under a day are the norm. … the pace of improvement from these models vastly outstrips what we can do with our largest variants, and the best are already largely indistinguishable from ChatGPT. Focusing on maintaining some of the largest models on the planet actually puts us at a disadvantage.

Data quality scales better than data size

Many of these projects are saving time by training on small, highly curated datasets. This suggests there is some flexibility in data scaling laws.

Directly Competing With Open Source Is a Losing Proposition

We need them more than they need us.

But holding on to a competitive advantage in technology becomes even harder now that cutting edge research in LLMs is affordable. Research institutions all over the world are building on each other’s work, exploring the solution space in a breadth-first way that far outstrips our own capacity.

His plea is for Google to embrace open source and aim towards “Owning the Ecosystem: Letting Open Source Work for Us.”

The memo is chock-full of perceptive thoughts on the state of play in LLMs, so much so that it’s hard to distill further, but if there’s a one-paragraph summary it’s this:

LoRA-based fine-tuning of LLMs is a fast, cheap, stackable, and effective way to make better AI models, and is a process accessible to many. Many open-source users and AI researchers are iteratively improving open-source AI models this way right now, and this faster-cycle-time approach is superior to training large LLMs from scratch each time with long lead times. Google cannot compete if it relies solely on the large monolithic AI model approach.

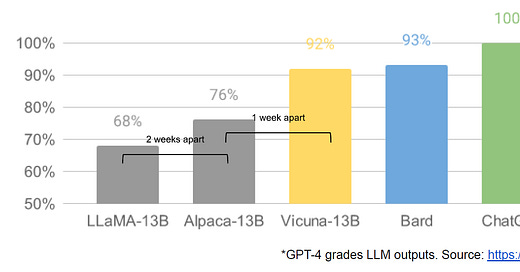

While I agree with this perspective, I would hedge the bet on the quality of smaller fine-tuned AI models. These smaller AI models have gotten great results - for their size. The Google memo leans heavily on the idea that open-source fine-tuning can bring models to levels comparable to the original larger-parameter models. While there’s evidence these models do well, I’ve not seen enough solid benchmark comparisons to be confident about how close specific models really are to, say, GPT-4 level, or how broadly they perform.

The Google memo included a link to a list of open-source LLMs. I’ll be keeping an eye on both progress and the metrics of the AI models.

Getting to 95% of ChatGPT on an 11B parameter model is remarkable, but doesn’t make the model preferable to consumers, who will take the best model over the runner-up. So while the efficiency and speed of innovation of open-source fine-tuned AI models is winning and is a great challenge to Google and OpenAI, in the end, top-line quality of results matters the most.

All of this confirms my view of the LLM landscape expressed earlier this week. We have a space in the LLM landscape for multiple types of AI models for various uses and purposes, both the ‘flagship’ large Foundational AI models and the smaller open-source Edge LLMs.

AI Research for fine-tuning and distilling models

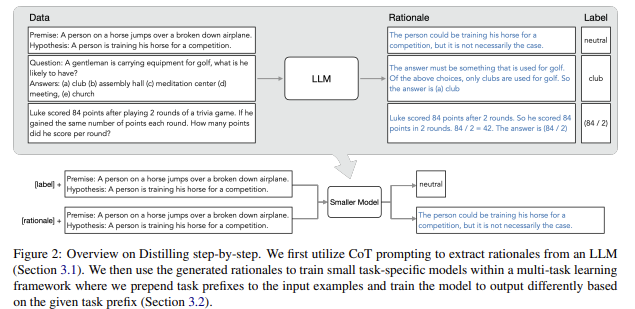

The latest AI research paper I mentioned above is: Distilling Step-by-Step! Outperforming Larger Language Models with Less Training Data and Smaller Model Sizes.

Fine-tuning updates a pretrained smaller model using human-annotated downstream data. Distillation trains the same smaller model on labels generated by a larger LLM. Distilling step-by-step goes beyond labels and “extracts LLM rationales as additional supervision for small models within a multi-task training framework.”
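As a rough illustration of that multi-task framing, the sketch below builds two training examples per input, one asking the small model for the label and one asking for the rationale, and combines the two losses as L = L_label + lambda * L_rationale. My understanding is that the paper uses task prefixes and a weighted sum of this kind, but the exact prefix strings, the `lam` default, the sample question, and the toy token probabilities here are assumptions for illustration, not the authors' code.

```python
import math

def build_multitask_examples(question, llm_label, llm_rationale):
    """Turn one LLM-annotated input into two seq2seq training examples."""
    return [
        {"input": "[label] " + question, "target": llm_label},
        {"input": "[rationale] " + question, "target": llm_rationale},
    ]

def cross_entropy(token_probs):
    """Mean negative log-likelihood the model assigns to the target tokens."""
    return -sum(math.log(p) for p in token_probs) / len(token_probs)

def step_by_step_loss(label_token_probs, rationale_token_probs, lam=1.0):
    """Label-prediction loss plus a weighted rationale-generation loss."""
    return cross_entropy(label_token_probs) + lam * cross_entropy(rationale_token_probs)

examples = build_multitask_examples(
    question="Is a whale a fish?",
    llm_label="no",
    llm_rationale="Whales are mammals that breathe air, so a whale is not a fish.",
)

# Toy probabilities standing in for a small model's predictions on each target.
loss = step_by_step_loss([0.5], [0.5, 0.5], lam=1.0)
print(examples[0]["input"], round(loss, 4))
```

The rationale is used only as a training signal in this sketch. At inference time the small model can be asked for the label alone, so the extra supervision does not add to the cost of serving it.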

Their results from this:

We present three findings across 4 NLP benchmarks: First, compared to both finetuning and distillation, our mechanism achieves better performance with much fewer labeled/unlabeled training examples. Second, compared to LLMs, we achieve better performance using substantially smaller model sizes. Third, we reduce both the model size and the amount of data required to outperform LLMs; our 770M T5 model outperforms the 540B PaLM model using only 80% of available data on a benchmark task.

This method is more efficient and effective than prior fine-tuning and distillation methods, so expect this to be quickly applied to leapfrog prior fine-tuned AI models.

This is the rapid cycle of innovation the Google memo author was talking about. Academic researchers do not have the compute capacity to implement a GPT-4 level model, but they do have the ability to develop improved methods for fine-tuning smaller models, so the latter will see more improvements and innovations.

The Data Moat

Other than embracing open source, what are the incumbents OpenAI and Google to do?

Microsoft announced improvements to Bing Chat and Edge this week. Bing Chat is no longer just a model with a chat interface; it’s becoming an ecosystem. Continuing to innovate and improve on that ecosystem will keep Microsoft ahead.

One thing Microsoft touted in this release was the scale of use: over 500 million chats and over 200 million images created in Bing Chat, and over 100 million daily active users on Bing. My reaction to seeing that: Well, there’s your moat. Data. Massive data.

Those 500 million chat interactions are at least two orders of magnitude more than the chats the open-source AI community collected to develop OpenAssistant.

Google and Microsoft/OpenAI still have an advantage they can leverage: data, at scale. As stated on Twitter by @valb00:

The 'AI moat' is data. Always has been. Proprietary data, constantly updated, with clear provenance and lineage. That's the evergreen AI moat. - Valb00 on Twitter