Luma AI Video Gen - The Dream Gets Real

Luma Labs AI Dream Machine is very good, but more importantly, you can use it now.


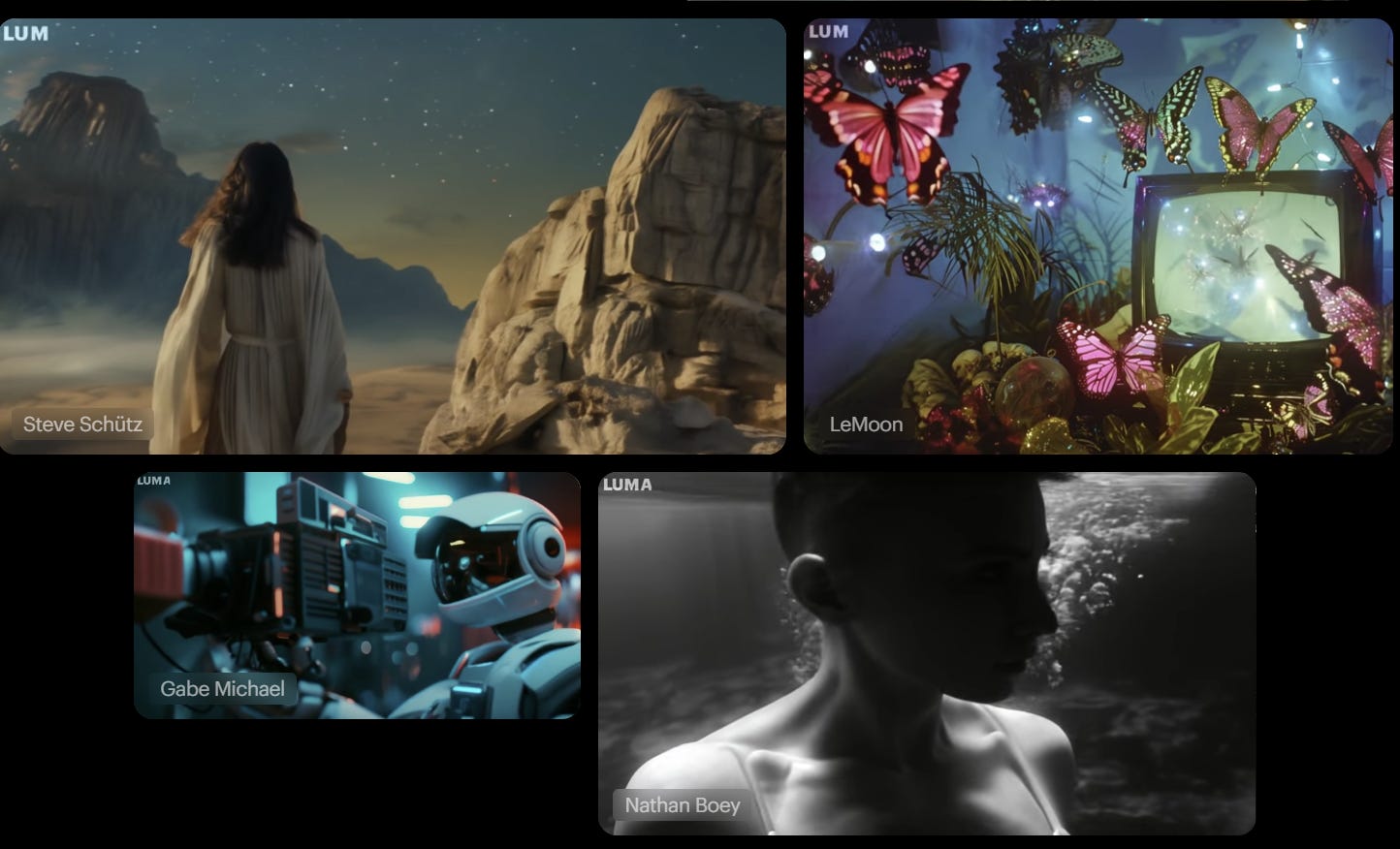

Today’s AI hype-of-the-day: Luma Labs AI just dropped Dream Machine.

Luma Labs AI Dream Machine is an AI model that quickly makes high-quality, realistic videos from text and images.

Their claims:

Fast: 120 frames in 120 seconds. Iterate faster, explore more ideas and dream bigger!

Quality: It can generate 120 frames at 1280x720. At 24 frames per second, that gives us high-quality 5-second generations.

Consistency: It exhibits consistent characters and allows you to create videos with accurate physics and consistent scenes.

Action-packed: It generates shots with realistic, smooth motion, cinematography, and drama. It provides an “endless array of fluid, cinematic and naturalistic camera motions.”

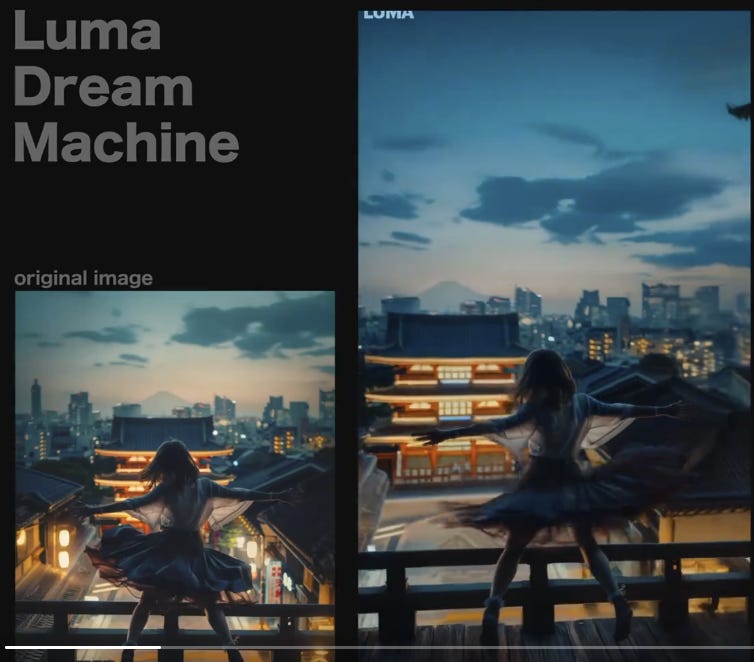

Generate from images: You give it an image as a seed, and it animates forward from that image for the 5-second scene.
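The speed and quality claims reduce to simple arithmetic. The sketch below takes Luma's stated numbers at face value (they are launch claims, not measurements) and works out clip length, generation rate, and how much wall-clock time each second of finished video costs. The function names are illustrative only and are not part of any Luma API.

```python
# Back-of-the-envelope numbers for Dream Machine's launch claims.
# All constants are Luma's stated claims, taken at face value; real runs may differ.

FRAMES = 120               # frames per generation (claimed)
FPS = 24                   # playback frame rate (claimed)
WIDTH, HEIGHT = 1280, 720  # output resolution (claimed)
GEN_SECONDS = 120          # wall-clock generation time: "120 frames in 120 seconds"


def clip_duration_s(frames: int, fps: int) -> float:
    """Playback length of a clip in seconds."""
    return frames / fps


def generation_rate_fps(frames: int, gen_seconds: float) -> float:
    """Frames produced per second of wall-clock generation time."""
    return frames / gen_seconds


def compute_per_video_second(frames: int, fps: int, gen_seconds: float) -> float:
    """Seconds of generation time spent per second of finished video."""
    return gen_seconds / clip_duration_s(frames, fps)


if __name__ == "__main__":
    print(f"clip length: {clip_duration_s(FRAMES, FPS):.1f} s")
    print(f"generation rate: {generation_rate_fps(FRAMES, GEN_SECONDS):.1f} frames/s")
    print(f"compute per video second: {compute_per_video_second(FRAMES, FPS, GEN_SECONDS):.0f} s")
    print(f"pixels per frame: {WIDTH * HEIGHT:,}")
```

Taken at face value, the claims describe a 5-second clip produced at about one frame per second, so each second of finished footage costs roughly 24 seconds of generation time.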

Exploring the Dream Machine

I've been testing it for several days and I think it is definitely Kling and Sora-level. - Heather Cooper

Unlike Sora, it's open to the public today. The reaction from early users and beta testers? “The quality is insane.” “I feel like this is like Midjourney going from version 3 to about version 4-5.”

From UncannyHarry, whose work on Runway Gen2 I shared over a year ago: “It's so good!”

Dream Machine seems to live up to Luma Labs AI’s claims: consistent characters, realistic scenery, (reasonably) accurate physics, quality, and smooth camera movement.

Since only video can do justice to video generation, head to X for samples:

Kaku Drop converted images of Chinese female characters into highly realistic video.

Next On Now has a music video: a Suno + Luma mix.

Uncanny Harry shares pro tips and has a one-tweet review:

Here is a quick teaser of the image2video you can make with #LumaDreamMachine, midjourney images, no face swaps, no speed ups, 1st rolls. Twitter doesn't do it justice, needs to be seen in 4k.

Justin Hart has one of the best generations: “Envision a teaser trailer for a new streaming channel!”

Abel Art produced “Tales of the Other Side.”

Christopher Fryant has a trailer “Gala.”

Curious Refuge generated realistic but strange animals, showing off Dream Machine’s creative abilities.

There’s more, and plenty more will come out in the coming days. For those not on X, you can see Dream Machine in action in YouTube reviews by MattVidPro and Wes Roth; they share a number of the generations done so far.


Limitations

Luma Labs acknowledges some limitations of its AI video generation:

Morphing & Janus: Some slippage and morphing of objects and animals can happen, though it is less severe than in earlier video models.

Movement: Some movement is not natural or physically correct.

Text: Not able to faithfully generate text.

You can also sometimes observe the wrong number of fingers or other physical errors, while in other cases the intricate facial and human details are quite good. The excitement about Dream Machine is that while these errors are still there, they’re much less noticeable than in prior video generation models.

It’s possible some of these limitations relate to how it’s prompted, and users will have to learn how to tease out the best quality.

Trends

Several early AI video generation models landed in 2023. The first was the groundbreaking Runway Gen 2; it was cutting-edge, but not ready for production use. In November, Stability AI released Stable Video Diffusion and later Stable Video.

In February 2024, OpenAI introduced Sora, and it changed the game. It was next-level in image quality and adherence to physical reality, a level of quality not seen before.

We have since had Google’s Veo, decent but not wowing people due to the shadow that Sora cast. The latest competitor prior to Dream Machine is KLING. With Dream Machine, that makes at least six competitors, and at least three of them are “Sora-level” - Dream Machine, KLING and Sora itself.

Our prediction that AI video generation in 2024 would be like AI image generation in 2023 is panning out. AI video generation is getting better, quickly.

The Tech

Luma AI touts that “Dream Machine understands how people, animals and objects interact with the physical world,” and they speak of “a universal imagination engine” down the road. Regarding the model itself:

It is a highly scalable and efficient transformer model trained directly on videos making it capable of generating physically accurate, consistent and eventful shots.

How did it get to be physically accurate?

Luma Labs AI has previously delivered NeRF models, 3D image AI models, and video-to-3D. Luma Labs very likely built a 3D representation into their model that enables highly consistent characters and objects along with fluid camera angles.

It turns out that thousands of Gaussian splats can EASILY be turned into a POWERFUL video generation model. - Justin Hart

Some claim that Sora was trained using generations from Unreal Engine. Under the hood, Sora used a diffusion transformer AI model to do the magic. However it’s done, some 3D representation is needed to get to high-quality video generation.

The other AI video generation model makers will realize this and develop their own versions to catch up. Higher quality AI video generation from many competitors is coming.

Summary

Don’t take my word for whether Dream Machine is for real: Try it out.

They have a freemium pricing model. The first 30 generations per month are free; after that it’s $30/month for up to 120 generations, with pricier plans beyond that.
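The tiers are easier to compare as an effective cost per generation. This sketch encodes only the two tiers described above. It assumes the $30 plan covers a month's first 120 generations in total (free ones included), and it deliberately raises an error past 120 rather than guessing at the higher tiers. The helper names are hypothetical.

```python
# Effective cost of Dream Machine's launch pricing as described in the text:
# 30 free generations per month, then $30/month for up to 120 generations.
# ASSUMPTION: the $30 plan covers the month's first 120 generations in total.
# Higher tiers are not specified, so this sketch refuses to price them.

FREE_GENERATIONS = 30    # free generations per month
PAID_PRICE_USD = 30.0    # monthly price of the paid tier
PAID_GENERATIONS = 120   # generations included in the paid tier


def monthly_cost_usd(generations: int) -> float:
    """Monthly bill for a given number of generations (hypothetical helper)."""
    if generations < 0:
        raise ValueError("generations must be non-negative")
    if generations <= FREE_GENERATIONS:
        return 0.0
    if generations <= PAID_GENERATIONS:
        return PAID_PRICE_USD
    raise ValueError("tiers above 120 generations are not modeled here")


def cost_per_generation_usd(generations: int) -> float:
    """Average price paid per generation in a month (0.0 if none are used)."""
    if generations == 0:
        return 0.0
    return monthly_cost_usd(generations) / generations


if __name__ == "__main__":
    for n in (30, 31, 60, 120):
        print(f"{n:>3} generations: ${monthly_cost_usd(n):.2f}/month, "
              f"${cost_per_generation_usd(n):.2f} per generation")
```

Used to the full 120 generations, the paid tier works out to $0.25 per generation ($30 / 120); a month with only 31 generations pays nearly $1 each for the same $30.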

You can hop on and try it without a waitlist, but Dream Machine has gone viral, so your request will likely get queued (mine was). Maybe they’ll amp up the servers, or the hype will abate in a few days.

Or maybe not. Luma AI has a hit on its hands, and maybe it will become the Midjourney of AI video generation.
