On Situational Awareness 2: AI Automation and Acceleration

AI automation, AI acceleration, and the quick take-off ASI scenario

Situational Awareness Top-Line

Leopold Aschenbrenner’s “Situational Awareness” discusses the rapid rise in AI capabilities in the coming decade and presents provocative predictions and proposals worth consideration.

Our previous article “On Situational Awareness Pt1: Race to AGI” dealt with his prediction that we are on course for AGI by 2027. Our conclusion agreed with his thought process and projected path to get from here to AGI, while being more conservative on the AGI timelines.

Advances in computing and algorithms, along with AI system breakthroughs, will continue for at least several more years and get us to AGI before the end of the decade. Then what?

Part II of “Situational Awareness”, and this article, deal with the next step in the AI journey: AI acceleration into ASI, Artificial Superintelligence.

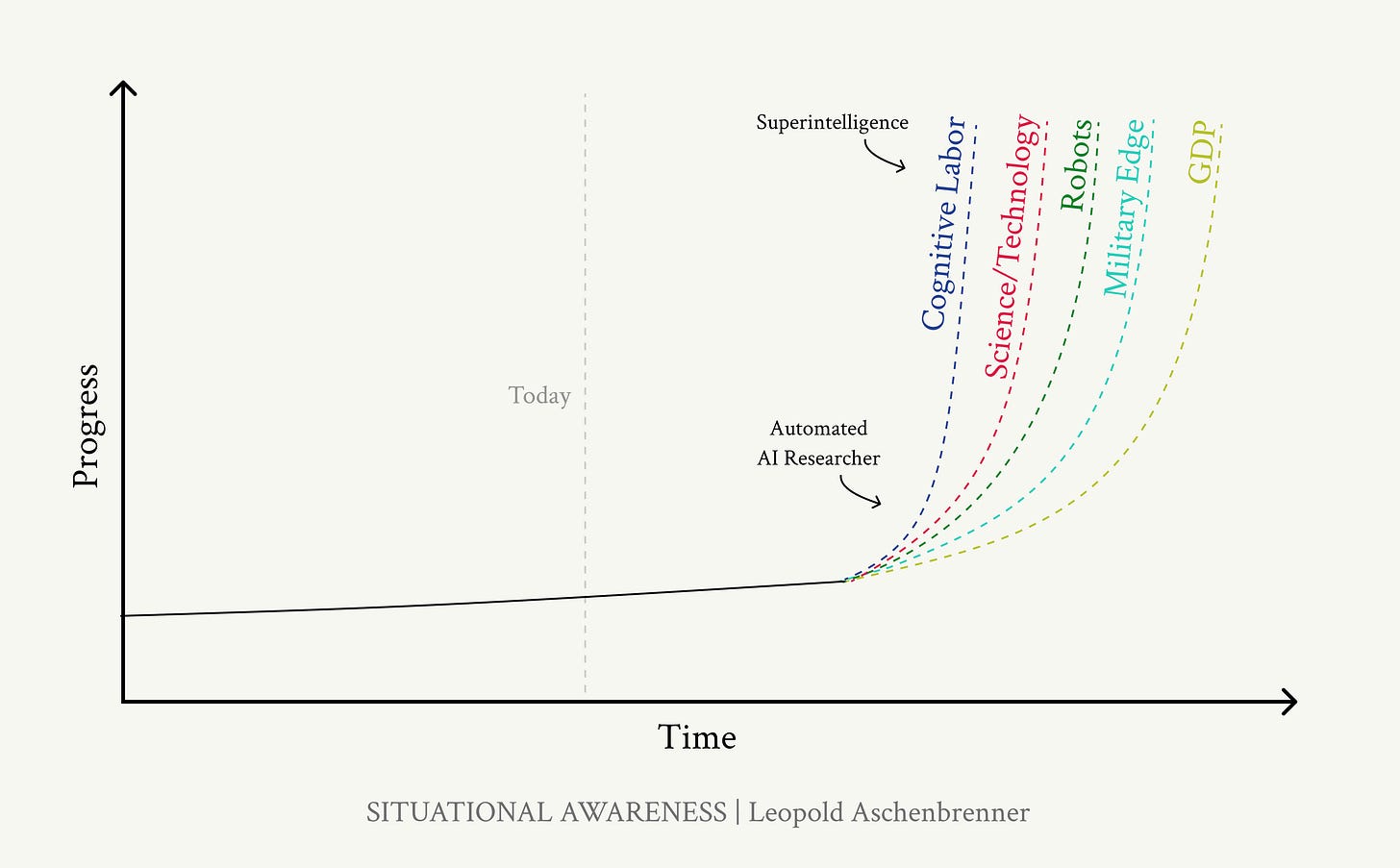

The “Situational Awareness” perspective on the path from AGI to ASI is as follows:

Automation: AGI-level AI systems will be able to automate basically all cognitive jobs (think: all jobs that could be done remotely).

Acceleration: This AGI-enabled automation will cause a massive acceleration of technological progress across many fields, in particular accelerating AI development itself.

Superintelligence: AI progress acceleration will quickly move us from AGI into vastly superhuman AI systems.

Bottlenecks: There are several plausible bottlenecks, including limited compute for experiments, complementarities with humans, and algorithmic progress becoming harder, but none seem sufficient to definitively slow things down.

ASI’s Progress and Peril: AGI-level automation and then ASI will drive a massive acceleration in technology and science, supercharge economic growth, and shift the balance of power; some outcomes are dangerous. The power, and the peril, of superintelligence would be dramatic.

Accelerating AI

“Situational Awareness” sets up a “quick take-off” scenario for AI: AGI-level AI will enable massive automation that can accelerate AI advances. This idea is related to the Singularity concept, in which technological progress keeps accelerating until it becomes, in effect, infinitely fast.
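A stylized way to see why a feedback loop, rather than automation alone, is what produces a “take-off”: let C(t) be AI capability and assume (a simplifying assumption for illustration, not a model taken from “Situational Awareness”) that the rate of progress depends on current capability. If the rate is proportional to capability, growth is exponential: fast, but finite at every point in time. If better AI improves AI research more than proportionally, for example quadratically, capability diverges at a finite time t*, which is the mathematical sense of a “singularity.”

```latex
\begin{aligned}
\frac{dC}{dt} &= kC &&\Rightarrow\quad C(t) = C_0\, e^{kt}\\
\frac{dC}{dt} &= kC^{2} &&\Rightarrow\quad C(t) = \frac{C_0}{1 - k C_0 t}, \qquad C(t) \to \infty \ \text{as}\ t \to t^{*} = \frac{1}{k C_0}
\end{aligned}
```

Which regime, if either, applies in practice depends on how strongly bottlenecks such as limited compute for experiments damp the feedback loop.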

Here’s how, in the “Situational Awareness” view, AI automation will accelerate the path from AGI to ASI:

We don’t need to automate everything—just AI research.

That is: expect 100 million automated researchers each working at 100x human speed not long after we begin to be able to automate AI research.

We’d be able to run millions of copies (and soon at 10x+ human speed) of the automated AI researchers.

… able to do ML research on a computer, furiously working on algorithmic breakthroughs, day and night. Yes, recursive self-improvement, but no sci-fi required …

Automated AI research could probably compress a human-decade of algorithmic progress into less than a year (and that seems conservative).

The thesis that automating AI research will rapidly accelerate AI progress has a valid core: AI will drive the automation of many cognitive tasks that will feed technological progress, including in AI itself.

It also has these challenges:

Automation: AI-enabled automation will be more a gradual process of adoption than a sudden shift. GPT-4o-level AI already enables some automation, and this will increase as both AI models and AI system integrations improve.

Practical limits: Compute, data, and energy limits on scaling AI models are far above where AI models are today. But as we scale AI models 10x, 100x, or 1000x, we may hit some of those limits.

Fundamental limits or ceiling on capabilities: Do LLMs have some inherent limits? The surprise with LLMs has been how much next-token prediction has delivered. Still, it’s likely there is an asymptote to what LLMs can achieve directly: we can’t get beyond perfection, a model’s loss can’t fall below the inherent entropy of the text, and perplexity can never go below 1.
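The asymptote can be made concrete with a parametric scaling law of the form used in the Chinchilla paper, L(N, D) = E + A/N^α + B/D^β, where N is parameter count, D is training tokens, and E is the irreducible loss (the entropy of the text itself). The constants below are illustrative placeholders, not fitted values, and the 100B-parameter, 2T-token starting point is hypothetical. The sketch shows each 10x scale-up buying less improvement, with perplexity, exp(L), bounded below by exp(E) rather than approaching zero.

```python
import math

# Illustrative constants for a Chinchilla-style parametric loss law.
# These are placeholder assumptions, not values fitted to any real model.
E = 1.7                 # irreducible loss in nats/token (entropy of the data)
A, ALPHA = 400.0, 0.34  # parameter-count term
B, BETA = 400.0, 0.28   # training-token term

def loss(n_params: float, n_tokens: float) -> float:
    """Predicted cross-entropy loss: irreducible term plus two power-law terms."""
    return E + A / n_params**ALPHA + B / n_tokens**BETA

def perplexity(n_params: float, n_tokens: float) -> float:
    """Perplexity is exp(loss), so it can never drop below exp(E) >= 1."""
    return math.exp(loss(n_params, n_tokens))

# Hypothetical starting point: 100B parameters trained on 2T tokens.
base_n, base_d = 1e11, 2e12
for scale in (1, 10, 100, 1000):
    n, d = base_n * scale, base_d * scale
    print(f"{scale:>5}x  loss={loss(n, d):.4f}  perplexity={perplexity(n, d):.3f}")
```

Under this functional form, scaling shrinks only the two power-law terms; no amount of compute or data removes E.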

Automation Nation

Since much of the Situational Awareness narrative hinges on AI automation accelerating progress, it’s worth asking the questions: How much automation does AI enable now? How much will AGI-level AI change that?

AI-enabled automation of many tasks is already here: AI chatbots, order-taking kiosks, AI-generated news articles, etc.

Repetitive, rote, and fixed tasks are easy to automate and do not require advanced AI; automation methods like Robotic Process Automation (RPA) have already been applied to some of them. Current AI is being woven into co-pilots and other forms of “human plus AI” applications that partially automate tasks.

What AI has not yet fully automated are complex, unstructured tasks that tap into our preferences and require flexible reasoning, novel behavior, or qualitative understanding that can’t easily be expressed in fixed definitions.

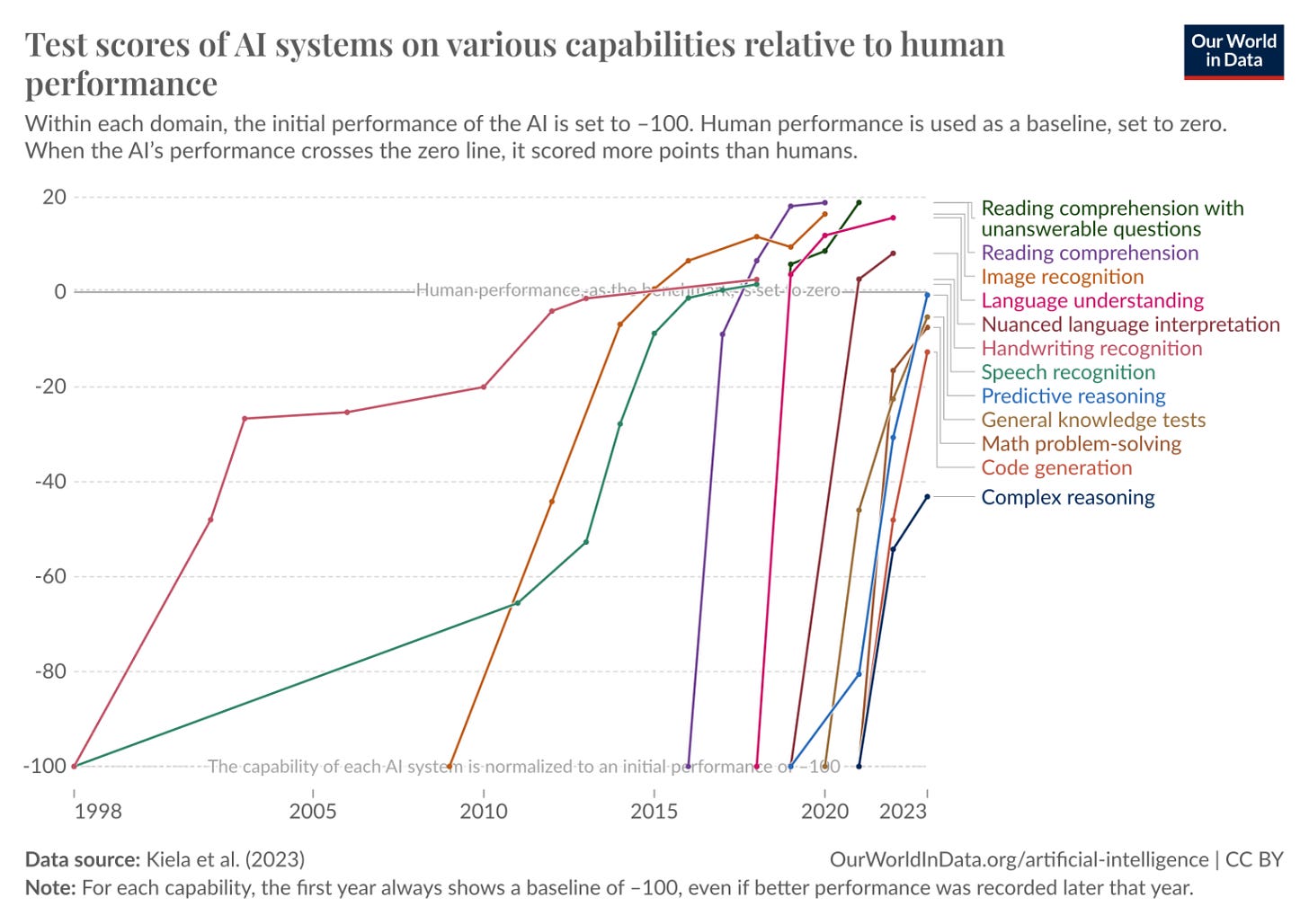

One way to look at it is to revisit Figure 3 on tasks where AI reaches human-level performance. For the remainder of the 2020s, we will find more and more tasks where AI reaches human-level performance, and those tasks will be ripe for automation.

As for the AI workflow itself, AI automation is increasing and yielding accelerated AI model development:

AI can implement basic data science on the fly, accelerating data science explorations.

AI can curate data, using AI models to clean data, assess data quality, and generate synthetic data.

AI helps automate improvements to pre-training, such as ML-based iterative tuning of hyper-parameters.

AI curates fine-tuning, shifting from labor-intensive RLHF (reinforcement learning from human feedback) to RLAIF (reinforcement learning from AI feedback), with AI-based evaluation of AI model outputs.

These are feeding into the algorithmic improvements in AI, and are essential to the current rate of AI progress.

Thus, the “AGI soon, then automation-driven acceleration” framing obscures the significant AI-driven acceleration already happening. We will see more acceleration as pre-AGI AI improves.
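The ML-based iterative improvement of hyper-parameters mentioned above can be pictured as a loop that proposes settings, measures the result, and narrows the search. The minimal sketch below uses a deterministic coarse-to-fine grid search over the learning rate as a simplified stand-in for the Bayesian or learned optimizers used in practice. The validation-loss function `toy_val_loss` is hypothetical, with its optimum placed at a learning rate of 1e-3 purely for illustration.

```python
import math

def toy_val_loss(lr: float) -> float:
    """Hypothetical validation loss, minimized at lr = 1e-3 (log10(lr) = -3)."""
    return (math.log10(lr) + 3.0) ** 2 + 0.5

def coarse_to_fine_search(objective, lo_exp=-6.0, hi_exp=0.0, points=9, rounds=4):
    """Search log10(lr) on an even grid, then repeatedly zoom in around the best point."""
    best_exp = lo_exp
    for _ in range(rounds):
        step = (hi_exp - lo_exp) / (points - 1)
        grid = [lo_exp + i * step for i in range(points)]
        # Evaluate every candidate and keep the exponent with the lowest loss.
        best_exp = min(grid, key=lambda e: objective(10.0 ** e))
        # Shrink the window to one grid step on either side of the current best.
        lo_exp, hi_exp = best_exp - step, best_exp + step
    return 10.0 ** best_exp

best_lr = coarse_to_fine_search(toy_val_loss)
print(f"best learning rate: {best_lr:.2e}")
```

In a real pipeline each objective call would be a full training or fine-tuning run, so the number of evaluations, not the search logic, is usually the costly part.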

Automation versus Reasoning

Automating a task takes a lot more than reasoning. An AI must understand context, user preferences, and complex background information; it may need multi-modality, such as visual understanding; and the breadth of tasks it can automate depends on its ability to plan and break down complex tasks.

Each advance in AI models and AI agents will yield some advance in automation, until an AGI-level agentic AI is expected to be “over-powered” enough to do almost any work task a human could do.

However, the reasoning performance of foundation AI models and their ability to automate tasks are two different things. Which tasks are easy or hard to automate might not line up with what is easy or hard for human intelligence.

For example, we may find it’s easier to automate theorem-proving than to automate fixing a plumbing leak under the sink. Certainly, many real-world jobs (nurse, doctor, biology lab technician, plumber, roofer, auto mechanic, etc.) can’t be replaced by a computer-bound AI. We will need embodied intelligent robots to even attempt it.

Thus, even getting to AGI will not translate into full task or job automation. Many of us will find some relief in knowing that AI won’t be coming for most jobs any time soon.

Automation’s last-mile problem

In “Situational Awareness,” the automated AGI-level AI agents are viewed as drop-in employee replacements, real automated AI researchers who can do the following:

They’ll be able to read every single ML paper ever written, have been able to deeply think about every single previous experiment ever run at the lab …

They’ll be easily able to write millions of lines of complex code … They’ll be superbly competent at all parts of the job.

You won’t have to individually train up each automated AI researcher … Instead, you can just teach and onboard one of them—and then make replicas.

Vast numbers of automated AI researchers will be able to share context (perhaps even accessing each others’ latent space and so on), enabling much more efficient collaboration and coordination compared to human researchers.

This promise of replacing humans with automation reminds me of self-driving cars. Fully automated self-driving was promised 10 years ago, but it has taken much longer than expected.

Partial automation, or automation within a confined environment, is possible because you can exclude edge cases or cover them with adequate data. But a truly open environment leads to an exponential explosion in edge cases.

Partial automation is easy; complete automation is hard. This is the ‘last-mile’ problem for automation. The low-hanging fruit can be automated already, and automation will expand across more tasks. It will not be hard to get to 60% or even 80%. The last 20% of automation is orders of magnitude harder.
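One way to see why the last 20% is so much harder: if the situations an automated system encounters follow a long-tailed distribution, each additional percent of coverage requires handling disproportionately more distinct cases. The sketch below assumes, purely for illustration, a Zipf-like distribution over one million hypothetical scenario types (scenario k occurs with weight 1/k) and counts how many of the most frequent scenarios must be handled to cover a given share of all occurrences.

```python
from itertools import accumulate

def cases_needed(coverage: float, n_types: int = 1_000_000, s: float = 1.0) -> int:
    """Number of most-frequent scenario types needed to cover `coverage` of all
    occurrences, assuming scenario k (1-indexed) occurs with weight 1 / k**s."""
    weights = [1.0 / k**s for k in range(1, n_types + 1)]
    total = sum(weights)
    # Walk the scenarios from most to least frequent until the target share is reached.
    for i, cum in enumerate(accumulate(weights), start=1):
        if cum / total >= coverage:
            return i
    return n_types

for target in (0.6, 0.8, 0.9, 0.99):
    print(f"{target:.0%} coverage -> {cases_needed(target):,} scenario types")
```

Under these assumptions, going from 80% to 99% coverage takes more than ten times as many handled scenario types as reaching 80% in the first place; a heavier tail or a larger scenario space makes the gap wider.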

Some things are easy to automate and other things are hard to automate. What gets automated are the highest ROI tasks and the most easily automated tasks. Low-benefit or hard-to-automate tasks will be last to get automated.

Just as you can’t accelerate a pregnancy, you cannot accelerate actual physical experiments with automation. AI can accelerate how you hypothesize something, reason about it, or even simulate it, but not actual physical verification. That may become the long pole in the tent across much of science and engineering, including in AI development itself.
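The “long pole in the tent” effect is essentially Amdahl’s law, borrowed here from parallel computing as an analogy: if only a fraction p of the R&D cycle (hypothesizing, reasoning, simulating) can be sped up by a factor s, while physical experiments and verification run at their old pace, the overall speedup is 1 / ((1 - p) + p / s). The fractions and speed-up factors below are hypothetical.

```python
def amdahl_speedup(p: float, s: float) -> float:
    """Overall speedup when a fraction p of the work is accelerated by a factor s
    and the remaining (1 - p), e.g. physical experiments, is not accelerated at all."""
    if not 0.0 <= p <= 1.0 or s <= 0:
        raise ValueError("p must be in [0, 1] and s must be positive")
    return 1.0 / ((1.0 - p) + p / s)

# Hypothetical scenarios: share of the R&D cycle that AI can accelerate.
for p in (0.5, 0.9, 0.99):
    for s in (10, 100, 1_000_000):
        print(f"p={p:.2f}, s={s:>9,}: overall speedup {amdahl_speedup(p, s):6.1f}x")
    # Even with unlimited acceleration, the speedup is capped at 1 / (1 - p).
    print(f"  ceiling as s -> infinity: {1 / (1 - p):.0f}x")
```

By this formula, compressing a decade of progress into under a year (an overall speedup above 10x) requires more than 90% of the research cycle to be accelerable, no matter how fast the automated researchers are.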

Automation is not a binary thing or a single-step solution, but a gradual process: first refactoring workflows to automate some tasks, then eventually automating them fully.

Automation, Acceleration, AI Limits & ASI

Based on the above points, a more likely scenario for automation trends takes shape:

Partial AI-enabled automation is happening now.

The low-hanging fruit tasks get automated first, with some lag due to adoption challenges.

As AI improves and AI adoption increases, more automation is unlocked. This AI automation is accelerating and improving AI model development itself.

Full automation will not be unlocked at the AGI level. There is a long road to full automation, and AI will be a great co-pilot productivity enhancer in the interim.

As with my perspective on the “Situational Awareness” AGI timeline, I don’t see a reason to question the overall trend: it’s up and to the right.

But I don’t see AGI as the AI automation inflection point: AI-driven automation is already here, is increasing now, continues to gradually increase with each AI advance, and will not achieve full automation even when AGI is reached.

AI-driven automation will follow a traditional technology adoption curve, as shown in the figure below. It will likely take a decade or even longer to play out, as is happening with self-driving cars. Social factors will cause automation adoption to lag behind technology capabilities.
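A traditional technology adoption curve is often modeled as a logistic (S-shaped) function: adoption starts slowly, accelerates through a midpoint, then saturates. The sketch below is illustrative only. The 80% ceiling, 2028 midpoint, steepness, and four-year adoption lag are hypothetical parameters, not forecasts, and the lag between capability and adoption is modeled simply as a time shift of the same curve.

```python
import math

def logistic(t: float, ceiling: float, midpoint: float, steepness: float) -> float:
    """S-curve: value at time t, approaching `ceiling` as t grows."""
    return ceiling / (1.0 + math.exp(-steepness * (t - midpoint)))

# Hypothetical parameters for the share of tasks that AI can technically automate.
CAPABILITY = dict(ceiling=0.8, midpoint=2028, steepness=0.6)
ADOPTION_LAG_YEARS = 4  # assumption: social and organizational factors delay adoption

for year in range(2024, 2041, 4):
    capable = logistic(year, **CAPABILITY)
    adopted = logistic(year - ADOPTION_LAG_YEARS, **CAPABILITY)
    print(f"{year}: automatable {capable:5.1%}, actually automated {adopted:5.1%}")
```

The ceiling below 100% reflects the argument above that full automation is not reached even when capabilities keep improving; the lag parameter captures the gap between what AI can do and what has been adopted.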

Still, that doesn’t alter the trend line much, just the timelines. AI is projected to make five OOMs (orders of magnitude) of progress in the next five years, giving us AGI. ASI will come soon after AGI and lead to an AI capabilities explosion. As Leopold puts it:

Whether or not you agree with the strongest form of these arguments—whether we get a <1 year intelligence explosion, or it takes a few years—it is clear: we must confront the possibility of superintelligence.
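The arithmetic behind “five OOMs in five years” is worth spelling out: five orders of magnitude over five years is, on average, a tenfold increase every year, equivalent to a doubling roughly every 3.6 months.

```latex
\begin{aligned}
\text{annual growth factor} &= \left(10^{5}\right)^{1/5} = 10\times \ \text{per year}\\
\text{doubling time} &= \frac{\ln 2}{\ln 10}\ \text{years} \approx 0.301\ \text{years} \approx 3.6\ \text{months}
\end{aligned}
```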

Right now, with new AI model releases each week (the latest being Claude 3.5 Sonnet, better than any prior AI model), it looks like there are no near-term limits to progress.

While we are far from hitting a data wall, or limits on compute or energy right now, AI will eventually hit barriers to progress. We don’t quite know what those limits are or when we will hit them.

“Situational Awareness” explains why those limits will not stop progress to either AGI or ASI.

In our next installment, we will discuss the “Situational Awareness” perspective on the energy and compute investments needed to overcome possible limits, the Superintelligence explosion that will inevitably follow, and what it means for technology, science, economy and society.