AI Engineer World's Fair 3: Infrastructure

Building the AI stack for the AI Era: GPUs; Inference servers - Octo, Fireworks AI, Covalent; Frameworks - LangChain, LangGraph and LangGraph Cloud; Modular's MAX and Mojo.

World’s Fair Debrief Part Three

This is part three of my AI Engineer World’s Fair (AIEWF) review; you can find AIEWF Part 1: Highlights here and AIEWF Part 2: Models, Training and AI Code Assistants here.

This article covers the infrastructure and tools in the AI stack that help AI builders create great AI applications:

GPUs.

AI inference-serving platforms.

AI application frameworks, including LangChain.

Modular’s MAX and Mojo.

The AI Ecosystem and AI Infrastructure

As the AI ecosystem evolves and matures, AI companies can be divided into two groups: AI infrastructure providers, which sell to other AI application developers within the stack, and AI application providers, which supply solutions to end users.

Some are doing both: foundation AI model companies like OpenAI and Anthropic supply the underlying AI models that others build AI applications with, while also selling their AI models directly to enterprise users. AI agent framework company CrewAI is now in the ‘doing both’ category, having introduced an enterprise offering, CrewAI+, at the conference.

GPUs In the AI Era

Let’s start at the base of the AI stack: GPUs. Dylan Patel writes about AI chip developments at his SemiAnalysis substack, and he gave an insightful talk on the AI chips needed to keep AI progressing.

He notes that GPT-4, a ‘last-generation’ model with ~280B active parameters, is expensive to run: “each token requires 560 gigaflops.” We haven’t gone beyond GPT-4 in performance because we haven’t gone much beyond it in training FLOPs, and newer models use fewer parameters besides.
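Where does 560 gigaflops come from? A common rule of thumb is that a transformer forward pass costs about 2 FLOPs (one multiply, one add) per active parameter per generated token, so 560 gigaflops corresponds to roughly 280B active parameters. Here’s a minimal sketch of that arithmetic; it ignores attention over the context window, and the parameter count is the value the rule implies, not an official OpenAI figure.

```python
def flops_per_token(active_params: float) -> float:
    """Approximate forward-pass FLOPs to generate one token.

    Rule of thumb: every active parameter takes part in one multiply and one
    add per token, so ~2 FLOPs per active parameter. Attention over the
    context window adds more and is ignored here.
    """
    return 2.0 * active_params


def inference_flops(active_params: float, num_tokens: int) -> float:
    """Approximate forward-pass FLOPs to generate num_tokens tokens."""
    return flops_per_token(active_params) * num_tokens


active = 280e9  # assumption: ~280B active parameters per token
print(f"{flops_per_token(active) / 1e9:.0f} GFLOPs per token")  # 560 GFLOPs per token
print(f"{inference_flops(active, 1_000):.2e} FLOPs for 1,000 tokens")
```

The same rule explains why active (not total) parameters drive serving cost: a mixture-of-experts model only pays for the experts each token actually uses.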

That will change. Training GPT-4 would take 3 days on 100k H100s. GPT-next or GPT-5 could have 20T parameters and require 10x-100x the compute of GPT-4 (roughly 1e26 to 1e27 training FLOPs). A 100k H100 cluster could train that in about 100 days:

On a 100k H100 cluster training run for 100 days, you can achieve ~6e26 effective FP8 model FLOPs (600 million ExaFLOPs).
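The 100-day figure is straightforward arithmetic: GPUs times peak throughput times wall-clock time times model FLOPs utilization (MFU). This sketch reproduces it under two assumptions of mine, not from the talk: an H100 dense FP8 peak of about 1,979 TFLOP/s (the spec-sheet figure without sparsity) and 35% MFU.

```python
SECONDS_PER_DAY = 86_400


def effective_training_flops(num_gpus: int, peak_flops_per_gpu: float,
                             days: float, mfu: float) -> float:
    """Effective FLOPs = GPUs x peak FLOP/s x wall-clock seconds x MFU.

    mfu (model FLOPs utilization) is the fraction of peak throughput that
    goes into useful model math; real training runs sit well below 1.0.
    """
    return num_gpus * peak_flops_per_gpu * days * SECONDS_PER_DAY * mfu


def days_to_train(target_flops: float, num_gpus: int,
                  peak_flops_per_gpu: float, mfu: float) -> float:
    """Wall-clock days needed to reach target_flops on the given cluster."""
    flops_per_day = num_gpus * peak_flops_per_gpu * SECONDS_PER_DAY * mfu
    return target_flops / flops_per_day


# Assumptions (not from the talk): dense FP8 peak ~1.979e15 FLOP/s, 35% MFU.
H100_FP8_PEAK = 1.979e15
MFU = 0.35

total = effective_training_flops(100_000, H100_FP8_PEAK, days=100, mfu=MFU)
print(f"100k H100s x 100 days: {total:.1e} effective FLOPs")
for target in (1e26, 1e27):
    days = days_to_train(target, 100_000, H100_FP8_PEAK, MFU)
    print(f"{target:.0e} FLOPs -> about {days:.0f} days")
```

With those assumptions, 100 days comes out near the ~6e26 quoted above, and the 1e26 to 1e27 range takes roughly 17 to 167 days; a higher or lower MFU shifts those numbers proportionally.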

Note: While Dylan Patel suggested a 20T-parameter GPT-5, the trend we are seeing is much more training and more data, with model size (parameter count) staying flat or even declining; larger AI models are costlier and slower. I personally doubt the ‘next-GPT’ will get as large as 20T parameters; we can do much more with less.

He notes that LLM prefill (processing the input tokens) is compute-intensive, while decoding (generating the output tokens) is memory-bandwidth-intensive. Prefill is cheaper per token because memory, rather than compute, is the more serious constraint on scaling GPUs. You can combine prefill and decode in batches to balance compute and memory use, but the batching strategies and system design needed for the best efficiency and performance can become complex.
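A simple roofline model shows why prefill and decode stress different resources. Each forward step reads the model weights from memory once but does math for every token in the step: a long prompt amortizes that read over thousands of tokens, while decoding one token at a time does not. This is a sketch with assumed, approximate H100 SXM figures (~989 TFLOP/s dense BF16, ~3.35 TB/s memory bandwidth) and a hypothetical 8B-parameter model; it ignores KV-cache traffic and attention FLOPs.

```python
from dataclasses import dataclass


@dataclass
class Gpu:
    peak_flops: float  # FLOP/s at the chosen precision
    mem_bw: float      # bytes/s of memory bandwidth


def step_time(gpu: Gpu, active_params: float, tokens_in_step: int,
              bytes_per_param: int = 2) -> tuple[float, str]:
    """Roofline estimate for one forward step over tokens_in_step tokens.

    Weights are read once per step and shared by every token in it.
    Returns (seconds, which resource is the bottleneck).
    """
    compute_s = 2 * active_params * tokens_in_step / gpu.peak_flops
    memory_s = active_params * bytes_per_param / gpu.mem_bw
    if compute_s >= memory_s:
        return compute_s, "compute"
    return memory_s, "memory"


# Assumed, approximate H100 SXM figures; hypothetical 8B model in BF16.
h100 = Gpu(peak_flops=989e12, mem_bw=3.35e12)
params = 8e9

for label, tokens in [("prefill, 2048-token prompt", 2048),
                      ("decode, batch of 1", 1),
                      ("decode, batch of 64", 64)]:
    seconds, bound = step_time(h100, params, tokens)
    print(f"{label}: {bound}-bound, {seconds / tokens * 1e3:.3f} ms per token")
```

The crossover sits at peak FLOP/s divided by bandwidth, about 295 tokens per step here, which is why decode needs large batches to keep the GPU busy while a long prompt saturates compute on its own.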

Dylan Patel says context caching, which stores a long, reused prompt so later requests don’t pay full price to reprocess it, is a big deal as a way to cut the cost of AI on large document sets. Only Gemini 1.5 has context caching, but hopefully this feature will come to other AI models soon.
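To see why caching matters, here is a back-of-the-envelope cost model for asking many questions about one large document set. All prices are hypothetical placeholders, not Gemini’s actual rates, and the billing structure (full price once to build the cache, a discounted rate per reuse, plus hourly storage) is a simplifying assumption; real providers bill differently.

```python
def cost_without_cache(doc_tokens: int, num_queries: int,
                       input_price_per_m: float) -> float:
    """Every query resends the whole document at the full input price."""
    return num_queries * doc_tokens / 1e6 * input_price_per_m


def cost_with_cache(doc_tokens: int, num_queries: int, input_price_per_m: float,
                    cached_price_per_m: float, storage_price_per_m_hour: float,
                    hours: float) -> float:
    """Simplified model: pay full price once to build the cache, a discounted
    rate each time a query reuses it, plus storage while the cache lives."""
    build = doc_tokens / 1e6 * input_price_per_m
    reuse = num_queries * doc_tokens / 1e6 * cached_price_per_m
    storage = doc_tokens / 1e6 * storage_price_per_m_hour * hours
    return build + reuse + storage


# Hypothetical prices in dollars per million tokens (not any provider's real rates).
INPUT, CACHED, STORAGE_PER_HOUR = 1.00, 0.25, 1.00
doc, queries = 500_000, 100  # a 500k-token document set, 100 questions in one hour

print(f"no cache:   ${cost_without_cache(doc, queries, INPUT):.2f}")
print(f"with cache: ${cost_with_cache(doc, queries, INPUT, CACHED, STORAGE_PER_HOUR, 1):.2f}")
```

Under these made-up prices the cached version costs a little over a quarter as much, and the savings grow with document size and the number of queries that reuse the cache.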

Inference Serving Providers

Several inference providers, and not just the major cloud providers like Microsoft Azure or AWS, were pitching their services:

Fireworks AI provides inference services for mostly open models such as Llama 3, Code Llama, Mixtral, Yi-Large, and Stable Diffusion.

Covalent is an open-source Python library that lets you write Python locally and deploy applications, including AI apps, as functions. They also offer a pay-as-you-go GPU cluster where you can run inference and other GPU jobs (like LLM fine-tuning) remotely.

FriendliAI, based in Korea but moving to the US, serves AI inference endpoints for open models like Mixtral and Llama 3. They offer a choice of dedicated endpoints, containers, or serverless endpoints.

OctoAI offers OctoStack, an optimized production GenAI inference stack that customers can run in their own private environment or in the cloud. It’s a good option for enterprises that want more control over their inference environment than cloud offerings provide.

Substrate claims to be “The only API optimized for multi-inference workloads.” Their pitch is that multi-inference AI agent flows or RAG tasks need the AI endpoint calls co-located for efficiency. They handle that in an environment designed to execute compound AI workloads with automatic parallelism.
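Substrate’s own API wasn’t shown in detail, so this isn’t it. As an illustration of the general idea behind parallelizing compound AI workloads, here is a minimal asyncio sketch: calls with no data dependency between them run concurrently, and only the dependent final call waits. `call_model` is a hypothetical stub that sleeps to simulate endpoint latency; co-locating real endpoints would shrink that per-call latency further.

```python
import asyncio
import time


async def call_model(name: str, prompt: str, latency_s: float = 0.1) -> str:
    """Hypothetical stand-in for a network call to an inference endpoint."""
    await asyncio.sleep(latency_s)  # simulated network + inference latency
    return f"{name}: answer to {prompt!r}"


async def compound_workflow(question: str) -> str:
    # Step 1: three independent calls (e.g. query rewrite, summary,
    # classification) have no data dependency, so they run concurrently.
    rewrite, summary, label = await asyncio.gather(
        call_model("rewriter", question),
        call_model("summarizer", question),
        call_model("classifier", question),
    )
    # Step 2: the final call depends on all three results, so it must wait.
    return await call_model("generator", " | ".join([rewrite, summary, label]))


start = time.perf_counter()
answer = asyncio.run(compound_workflow("What is context caching?"))
elapsed = time.perf_counter() - start
# About 2 x latency instead of 4 x, because step 1 runs in parallel.
print(f"{elapsed:.2f}s")
```

A system that sees the whole dependency graph can do this scheduling automatically, rather than leaving each application to hand-write the concurrency.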

LangChain, LangGraph and LangGraph Cloud

LangChain adds LangGraph Cloud: Harrison Chase of LangChain announced and presented LangGraph Cloud, a managed service for deploying and hosting LangGraph applications, with a LangGraph Cloud API. This is a paid service, a different business model from an open-source library. Some of the features he touted were:

Assistants: The LangGraph Cloud API exposes LangGraph functionality through Assistants.

Runs: LangGraph Cloud supports cron jobs and background runs as well as streaming and interactive runs. Other features include persistence and configuring how assistants respond in situations such as double texting (a user sending a new message before the previous run has finished).

Studio: LangGraph Studio provides a developer environment (similar to no-code toolkits) for building LangGraph applications.

Lance Martin presented at LangChain’s AIEWF workshop and gave a great overview of the challenges of RAG and of RAG use cases for LangGraph, such as Corrective RAG (CRAG) using local LLMs.
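The core of Corrective RAG is a control-flow decision: retrieve, grade the retrieved documents for relevance, and fall back to another source (such as web search) when retrieval comes up empty. Here is a simplified, plain-Python sketch of that flow; it is not LangGraph’s API, and every component (retriever, grader, web search, generator) is a hypothetical stub where a real pipeline would call a vector store and local LLMs. The original CRAG design also refines documents and can rewrite the query; those steps are omitted. In LangGraph, these functions would become graph nodes and the fallback check a conditional edge.

```python
import re
from dataclasses import dataclass, field


@dataclass
class RagState:
    question: str
    documents: list[str] = field(default_factory=list)
    used_web_search: bool = False
    answer: str = ""


# Hypothetical toy corpus; a real pipeline would query a vector store.
CORPUS = [
    "LangGraph builds stateful, multi-step LLM applications as graphs.",
    "Mojo is a Pythonic programming language from Modular.",
]


def keywords(text: str) -> set[str]:
    # Lowercased words of 4+ letters, a crude way to skip stopwords like "is".
    return {w for w in re.findall(r"[a-z]+", text.lower()) if len(w) >= 4}


def retrieve(state: RagState) -> RagState:
    # Stub retriever that always returns its top-k (here: everything),
    # relevant or not, which is what the grading step guards against.
    state.documents = list(CORPUS)
    return state


def grade_documents(state: RagState) -> RagState:
    # Stub for an LLM relevance grader: keep docs sharing a keyword.
    q = keywords(state.question)
    state.documents = [d for d in state.documents if q & keywords(d)]
    return state


def web_search(state: RagState) -> RagState:
    # Stub for a web search tool call.
    state.documents.append(f"[web result for: {state.question}]")
    state.used_web_search = True
    return state


def generate(state: RagState) -> RagState:
    # Stub for the LLM generation step.
    state.answer = f"Answer grounded in {len(state.documents)} document(s)."
    return state


def corrective_rag(question: str) -> RagState:
    state = grade_documents(retrieve(RagState(question)))
    # The corrective step: if nothing relevant survived grading,
    # fall back to web search before generating.
    if not state.documents:
        state = web_search(state)
    return generate(state)


print(corrective_rag("What is LangGraph?").answer)
print(corrective_rag("Who won the 1998 World Cup?").used_web_search)
```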

Frameworks & deploy toolkits - Convex and Hypermode

Convex gave a talk titled “we accidentally built an AI platform.” Convex is an open-source backend framework that extends a React-style data-flow paradigm into the backend. It brings a database, connectors and functions together so developers can implement a connected backend in TypeScript.

So what’s the AI angle? Convex can sync state between multiple server-side tasks, which makes it well suited to gen AI and AI agent applications. To support AI further, they’ve added vector indexing for RAG.
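Convex’s own vector search is a TypeScript API that isn’t shown here. As a language-neutral illustration of what a vector index answers for RAG, here is a brute-force cosine-similarity top-k search over toy embeddings; production vector indexes typically use approximate nearest-neighbor structures so they don’t have to score every stored vector.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: list[float], index: dict[str, list[float]],
          k: int = 2) -> list[tuple[str, float]]:
    """Brute-force nearest-neighbor search: score every stored vector."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy 3-dimensional "embeddings"; real ones come from an embedding model
# and have hundreds or thousands of dimensions.
index = {
    "doc-gpus": [0.9, 0.1, 0.0],
    "doc-rag": [0.1, 0.9, 0.2],
    "doc-mojo": [0.0, 0.2, 0.9],
}
print(top_k([0.2, 0.8, 0.1], index))
```

The top-k document IDs are what a RAG pipeline would then fetch and pass to the LLM as context.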

Hypermode is a toolkit for building GenAI apps, with the goal of improving the experience of developing with AI. Hypermode provides a runtime that integrates models, functions, and tools, and pitches it as “the fastest way to augment your app with AI.”

They sell a hosted service but also make their API available on GitHub, making it easy to iterate rapidly.

“Iteration is the compound interest of software. .. But iteration can’t happen if you are afraid of getting it wrong.” - Hypermode CEO

Rebuilding the AI stack - Mojo and MAX

The core of the AI development stack is the programming language it’s built on. Python is widely used for machine learning and AI model building, but it suffers from single-threaded execution, slow performance, and an inability to use GPUs natively. Is there a better way?

Chris Lattner, co-founder and CEO of Modular, presented on MAX and Mojo in an AIEWF talk. Mojo is a new Pythonic programming language designed to fix Python’s performance and other issues; MAX is a framework for deploying GenAI and PyTorch models. As he put it:

“Most of the stack was not built for Gen AI. Fragmentation slows innovation and progress.”

Modular’s goal is to help developers “own, control, and deploy GenAI with ease.” If you can accelerate AI’s programming languages and tools, you can accelerate AI. To get there, they built Mojo.

As a ‘pythonic’ language, Mojo is designed to be compatible with Python, so you can reuse code and take advantage of Python’s extensive libraries and ecosystem. The magic of Mojo is its speed: 10x, 100x, or even 1000x faster than Python, depending on the use case. That performance removes the need to drop down to C or CUDA, potentially avoiding multiple languages in the stack.

MAX (Modular Accelerated Xecution) is a serving stack for GenAI, with a unified set of APIs and tools that help you build and deploy AI pipelines, both locally and in the cloud. Built on Mojo, it aims to reduce the fragmented array of tools in today’s AI/ML stack.

Because MAX was designed in the LLM era for building high-performance AI pipelines, it can be optimized for the AI stack as it is now, unlike toolchains built for the ML-era stack, such as PyTorch. Chris Lattner touted MAX for:

Performance: MAX gets a 5x speedup over llama.cpp on Llama 3.

Portability: Write once and deploy to any hardware, local or cloud, with the best possible cost-performance ratio.

Interoperability: It works with PyTorch, ONNX, and a number of other ML and AI toolkits.

Developer velocity: Move faster with common local or cloud development.

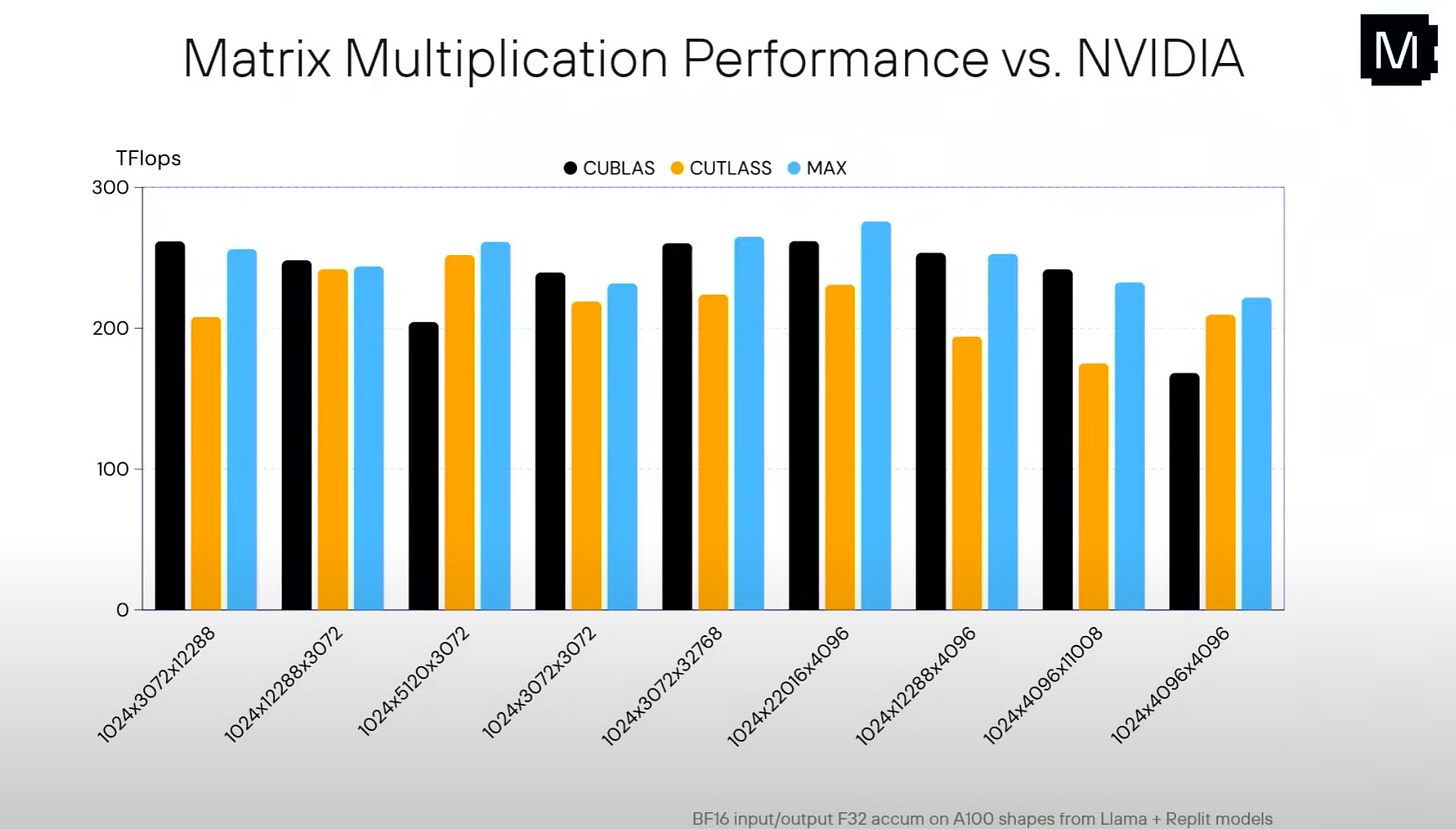

The real pain for programmers, though, is GPUs. Modular’s dream is "programming a GPU in python as easy as CPU": getting "the full power of CUDA" with the ease of programming CPUs. They needed the Mojo programming language to get there. They have hooks that expose GPU features, and Lattner shared results where the MAX matrix multiplication (matmul) beats the vendor libraries cuBLAS and CUTLASS.

MAX GPU is in development and is planned for release later this year.

Conclusion

The GPUs, infrastructure, frameworks and developer tools supporting AI are gating the speed of AI progress, and all parts of the AI stack are evolving to keep up with the fast rate of change of AI itself. The main takeaway from AIEWF is that the force of AI innovation is quickly evolving all layers of the AI stack, from AI chips, AI frameworks and AI tool-sets to AI models and AI agents.

Swyx, aka Shawn Wang, co-host of the Latent Space podcast, co-organized the AI Engineer World’s Fair. Visa issues threw a monkey wrench in his plans, and he was unable to attend in person the conference he had put together. However, the show went on, and he dialed in from Singapore (see Figure 1). Kudos to his personal resilience and to the team around him that helped make AIEWF happen; it was a very successful conference.

One topic AIEWF covered was the challenges of basic RAG and the advanced RAG techniques that address them, such as GraphRAG and hybrid search. I didn’t cover that in these reviews because I intend to cover it in upcoming articles. Stay tuned!

One final personal note: I met some great people at the conference. If that’s you, thanks for subscribing, and let’s stay in touch! Whether you are a new or old subscriber, please let me know what you’d like me to cover in future articles, so I can keep “AI Changes Everything” relevant to your needs.