AI Video Generation Soars with Sora

OpenAI releases Sora, offering high-quality AI video generation and useful video editing features. But the competition is not far behind as AI video generation keeps getting better.

Sora Released

This year has been The Year of AI for video. I predicted in January that:

By the end of 2024, AI video generation will make leaps-and-bounds improvements in video quality, speed, length, attention to detail, and adherence to prompts.

I can happily report that this prediction has been fulfilled. Sora is here, finally bringing OpenAI's AI video generation model out of research and into the hands of ChatGPT Plus and Pro users. Early reviews of the released Sora say it mostly lives up to the hype as the best AI video generation model available.

Yet Sora is far from alone. Between Sora's announcement in February and its release this week, half a dozen credible contenders in AI video generation have shipped their own models, several at or near Sora's quality. We'll cover both topics below.

Sora Unboxed

When Sora was released on Monday, the first question many asked was: how good is it? Is it much improved over the February preview? OpenAI said the released model is "Sora Turbo," a faster and cheaper version of the model demoed earlier in the year.

Sora can generate videos at 480p, 720p, and 1080p resolution, from 5 to 20 seconds long. With enough credits, you can make a longer, high-resolution clip in widescreen, vertical, or square aspect ratios. Sora puts high resolution to effective use, with impressive photorealism and accurate details.

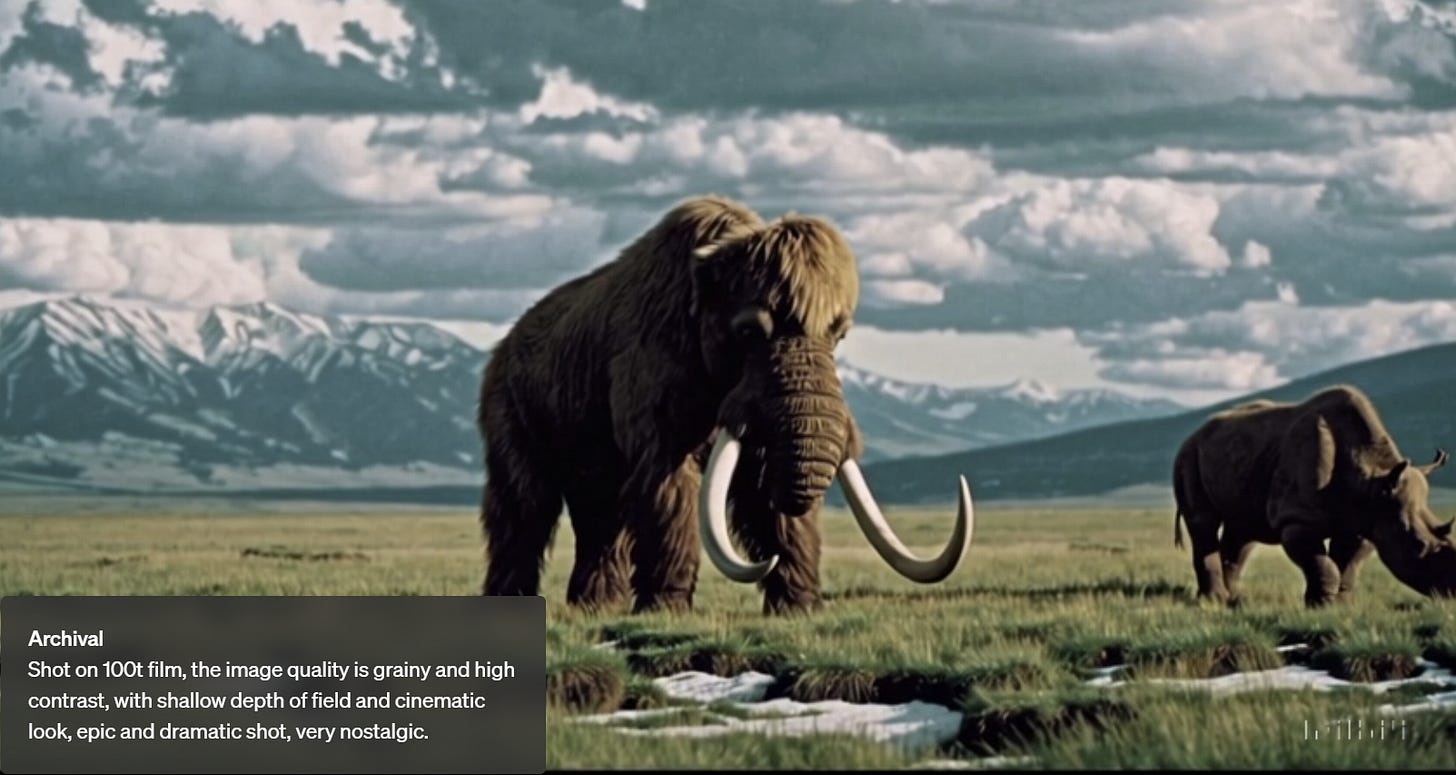

Sora is quite photorealistic for many kinds of videos. Generated landscape scenes look like real drone shots; nature and animal videos look like real documentaries; close-ups, lighting effects, shadows, and smoke are realistic; reflections are particularly good. On a frame-by-frame basis, it's hard to tell whether a clip is real or AI-generated. Sora has passed the uncanny valley.

While Sora gets a lot right, there are still many gaps in its capabilities. Morphing failures occur, where objects transform or multiply mid-clip. Some examples: a ball turns into a shark; a dog morphs backwards; a cat has two tails and turns from a ginger tabby into a robotic shell; three Christmas-themed dogs turn into four; doors and walls shift or disappear as they move out of and back into view.

The other main problem that Sora and other video generation models suffer from is physical unrealism. Balls sometimes float instead of bouncing; people can move in unrealistic ways. While OpenAI's showcase videos generally keep backgrounds consistent, glitches still occur.

Since OpenAI is showcasing its best videos, users will have to learn from experience how hard it is to get results that good. However, OpenAI built several editing features into Sora to improve the user experience, making Sora less a standalone AI model and more an AI-driven video generation toolbox.

Sora Features and the AI Video Creation Toolbox

“This is a tool for creators.” - OpenAI

In their presentation, OpenAI said Sora is not just about entering a prompt and getting a finished video. Sora is more than a text-to-video model; it's a tool for creators. To make this work, they said, they needed breakthroughs in human-computer interface design.

Sora provides a smooth, pared-down video generation interface that works like a video editing studio. It combines traditional video editing controls with AI model prompting. Sora's interface features include:

Controls: Users can control aspect ratio and resolution (480p, 720p, 1080p).

Remix: You can replace, remove, re-imagine, or “re-skin” elements of a video with Remix. Users describe the change with a text prompt and set the remix strength (from weak to strong) to control how much new material is added.

Recut: Cut down or trim a video, then generate extensions to complete the scene.

Storyboard: Lets users set prompts at specific timestamps to indicate when an action should happen, controlling the setting and flow of a video.

Style Presets: Several distinctive style presets are available to restyle a video: papercraft (like paper animation), archival (like old 16mm film), film noir (black and white), and balloon world. You can also restyle a video with a custom style.

Loop: Turns a video into a continuous loop.

Blend: Combines the content of two videos into one video.

Sora is tightly locked down for safety and liability reasons. It avoids rendering copyright-infringing content or celebrity faces, and people under 18 are not allowed to use it. OpenAI says, “Our top priority is preventing especially damaging forms of abuse,” and will report abuse such as sexual deepfakes. Generated videos are watermarked, although Pro users can remove the watermark.

Accessing Sora

How do you get it? You sign up for and run Sora on the sora.com website, although access is temporarily disabled because demand has overwhelmed OpenAI's servers.

Sora requires a lot of inference compute, so its pricing is steep. ChatGPT Plus subscribers get 1,000 credits a month for Sora, enough for up to 50 priority 720p videos of 5 seconds each. The expensive $200/month ChatGPT Pro plan gets you a more generous 500 priority videos a month, access to 1080p resolution, and unlimited “relaxed” generations.

Sora.com includes an explore space where video creators share their results. You can view videos created by others along with the prompts used to create them, which helps inspire new creations.

The Year in AI Video Generation

Let’s review how far we’ve come and the landscape of offerings we now have.

February: OpenAI introduced Sora, a text-to-video AI model that generates videos from text prompts with much greater physical realism and continuity than prior AI models, along with features like image animation and video remixing. However, Sora was demonstrated but not released, leaving others to beat OpenAI to actual releases …

May: Google introduced Veo, an advanced video generation model that creates high-quality 1080p videos from text prompts or images in a variety of cinematic and visual styles. In December, Google released Veo in preview on the Vertex AI platform, letting developers build applications with Veo. Google is also integrating Veo into platforms like YouTube Shorts.

June: Kuaishou launched Kling AI, which produces longer videos with improved movement and multi-shot sequences, increasing the realism and complexity of generated content. Kuaishou followed up in September with Kling 1.5. That release generates 1080p videos, adds a Motion Brush feature for precise control over animations, and improves overall video quality.

Also in June: Luma Labs unveiled Dream Machine, which focuses on cinematic-quality video generation from text or images and produces 120-frame (5-second) clips. In December, Luma AI announced that Ray 2 would be available in Amazon Bedrock.

July: Runway released Gen-3 Alpha, a model that generates 10-second video clips from text, image, or video prompts, learning 3D dynamics on its own for greater realism. Runway followed up with the faster Gen-3 Alpha Turbo in August and Advanced Camera Control in November. Each release incrementally made Gen-3 a faster, more controllable AI video generation system.

October: Genmo launched Mochi-1, an open-source model that generates good-quality short clips. Also, Meta researchers shared their work on Movie Gen, which generates not just videos but also accompanying sound effects.

December: Tencent released HunyuanVideo, bringing high-quality (720p) video generation to open source. Amazon also announced Nova Reel for creating short videos, targeting applications in product showcasing and entertainment.

Several proprietary and open-source AI video generation models released this year can produce “Sora-level” quality video, and choice and quality keep improving with each new release.

Conclusion - Several Great AI Video Options

While Sora is the best-in-class tool for AI video, competing AI models like Runway Gen-3, Kling AI 1.5, Luma AI Ray 2, and Google Veo perform at or near the same level. Curious Refuge ran a head-to-head comparison of leading video generation models on a set of prompts. That comparison gave the edge to Sora overall, as have most reviews, but the alternatives are not far behind. As with all things AI, your mileage may vary, and the best model depends on the use case.

“This is the worst it will ever be.” We have said that a lot about AI video generation since Runway Gen-2 came out 20 months ago. Gen-2 was primitive yet very compelling, showing the promise of what AI video generation could do.

The advances in AI video generation in 2024, including the release of Sora, fulfilled some of that promise, and quality continues to improve. But there is further to go: better physical realism; consistency in objects and motion; sound effects; finer-grained controls; and cheaper, faster, longer video generation. Expect much progress on these fronts in 2025 model updates.

PS: Happy Shipmas

Last week, I speculated that OpenAI would release Sora this week, since I ranked Sora as the third-biggest reveal of their “12 days of OpenAI” announcements. They led with the second-biggest announcement, the o1 and o1 pro models, and they will likely save the best for last. I expect that to be their GPT-4.5 / GPT-next frontier model. In the meantime, they have shared updates on Canvas and on ChatGPT in Apple Intelligence.

Google made its own Shipmas news today with the Gemini 2.0 announcement, making Gemini 2.0 Flash available to developers and sharing progress on agents and Project Astra. Enjoy the new models this holiday season!