AI Week In Review 24.06.01

Codestral, Claude 3 tool use, CodeCompose, Perplexity Pages, Gemini 1.5 model tuning, Samba-1-Turbo speed record, ChatGPT Edu, You.com custom assistants, Showrunner, Seal Leaderboards, Cartesia Sonic

AI Tech and Product Releases

Mistral AI released Codestral-22B, a 22B parameter open-weight AI model for code generation with a 32K context window. It was trained on 80+ programming languages, including Python, and scores 81% on HumanEval for Python, SOTA for an open LLM for coding. Its small size means it can run locally and be integrated as a copilot in VSCode.

Last week, Meta AI released the CodeCompose LLM for code completion, which can generate code within code development environments, comparable to GitHub’s Copilot. The largest CodeCompose model has 6.7B parameters.

Anthropic launched a tool use feature for its Claude 3 models, enabling users to create personalized assistants that interact with external tools and APIs to complete tasks. Anthropic cites several use cases: search databases; extract structured data from unstructured text; convert natural language requests into structured API calls; and automate simple tasks through software APIs.
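As a rough illustration of the "natural language request to structured API call" use case, here is a minimal sketch. The tool definition uses the name, description, and input_schema fields from Anthropic's tool use format; the `get_weather` tool, the `TOOLS` registry, the `handle_tool_use` helper, and the sample `tool_use` block are all hypothetical stand-ins, and no request is sent to the API.

```python
import json

# Tool definition in the shape used by Claude's tool use feature:
# a name, a description, and a JSON Schema describing the inputs.
# The tool itself (get_weather) is a made-up example.
weather_tool = {
    "name": "get_weather",
    "description": "Get the current weather for a city.",
    "input_schema": {
        "type": "object",
        "properties": {
            "city": {"type": "string", "description": "City name"},
            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        "required": ["city"],
    },
}

def get_weather(city, unit="celsius"):
    # Hypothetical local implementation; a real one would call a weather API.
    return {"city": city, "unit": unit, "temperature": 21}

# Hypothetical registry mapping tool names to local functions.
TOOLS = {"get_weather": get_weather}

def handle_tool_use(block):
    """Run the function named in a tool_use block and build a tool_result."""
    func = TOOLS[block["name"]]
    result = func(**block["input"])
    return {
        "type": "tool_result",
        "tool_use_id": block["id"],
        "content": json.dumps(result),
    }

# Illustrative block of the kind a model might emit for
# "What's the weather in Paris?" (values are invented).
sample_block = {
    "type": "tool_use",
    "id": "toolu_example",
    "name": "get_weather",
    "input": {"city": "Paris"},
}

tool_result = handle_tool_use(sample_block)
print(tool_result["content"])
```

In a real integration, the tool definition would be passed along with the user message, and the tool result would be sent back to the model so it can write the final answer.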

Perplexity announced Perplexity Pages, a new tool for creating shareable, AI-generated content from research. Perplexity Pages can turn research into visually dynamic content like reports and guides, and you can also generate blog posts and articles simply by prompting with a title. Users can publish their work in a user-generated library and share it with others. Pages requires an account and offers both free and Pro versions with varying features.

Google made its Gemini 1.5 Flash and Pro models generally available on its API. The best part for developers is a JSON Schema mode and Gemini 1.5 model tuning, which lets users build customized models at no added cost beyond running the base model:

Starting June 17th, [Gemini 1.5] will also support model tuning, allowing developers to customize models for better performance in production environments. Tuning will be available both in Google AI Studio and the Gemini API, with tuning jobs currently being free of charge and no additional per-token costs for using a tuned model.

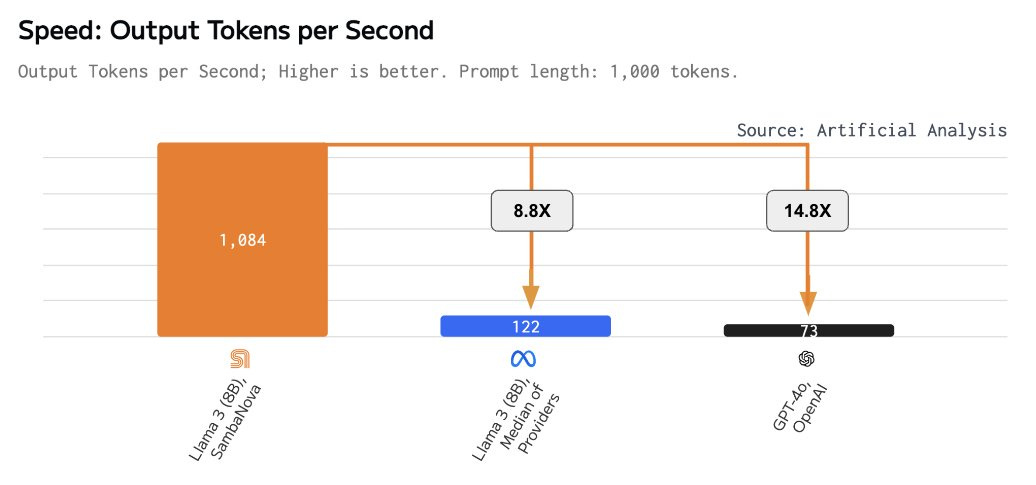

SambaNova Systems broke records with Samba-1-Turbo. As reported by Artificial Analysis, they achieved a world record by processing 1000 tokens per second at 16-bit precision on Llama 3 8B. This is powered by SambaNova’s custom SN40L RDU chips built on TSMC’s 5nm process.
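To put that throughput in perspective, here is a back-of-the-envelope sketch. It assumes a steady output rate and ignores prompt processing and network overhead; the 500-token reply length is an arbitrary example, not a figure from the report.

```python
TOKENS_PER_SECOND = 1000  # rate reported for Samba-1-Turbo on Llama 3 8B

def generation_seconds(num_tokens, tokens_per_second=TOKENS_PER_SECOND):
    """Time to emit num_tokens at a steady output rate."""
    return num_tokens / tokens_per_second

# Average time spent on each output token, in milliseconds.
ms_per_token = 1000 / TOKENS_PER_SECOND

print(ms_per_token)
print(generation_seconds(500))  # hypothetical 500-token reply
```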

OpenAI launched ChatGPT Edu, a version of ChatGPT tailored for universities:

Powered by GPT-4o, ChatGPT Edu can reason across text and vision and use advanced tools such as data analysis. This new offering includes enterprise-level security and controls and is affordable for educational institutions.

Opera browser will use Google’s Gemini to power AI features. As shared in Opera’s announcement, this integration will use Gemini models, including for image generation, and adds voice response output, using Google text-to-audio.

You.com launched Custom Assistants, a feature enabling users to create personalized AI assistants using top language models like GPT-4o, Claude 3, and Llama 3. Creators can choose from leading LLMs, provide custom instructions, enable live web access and personalization, and upload files for the assistant to work with.

As mentioned in our prior article “New AI Updates and UI / UX for AI,” Hugging Chat gave the Command R model access to open-source tools: image generation and editing, a calculator, a document parser, and web search.

Also mentioned in our article was the newly launched Cartesia Sonic, a model for speech synthesis. Using efficient state-space models, Sonic provides blazing fast text-to-speech, with under 150 ms latency.

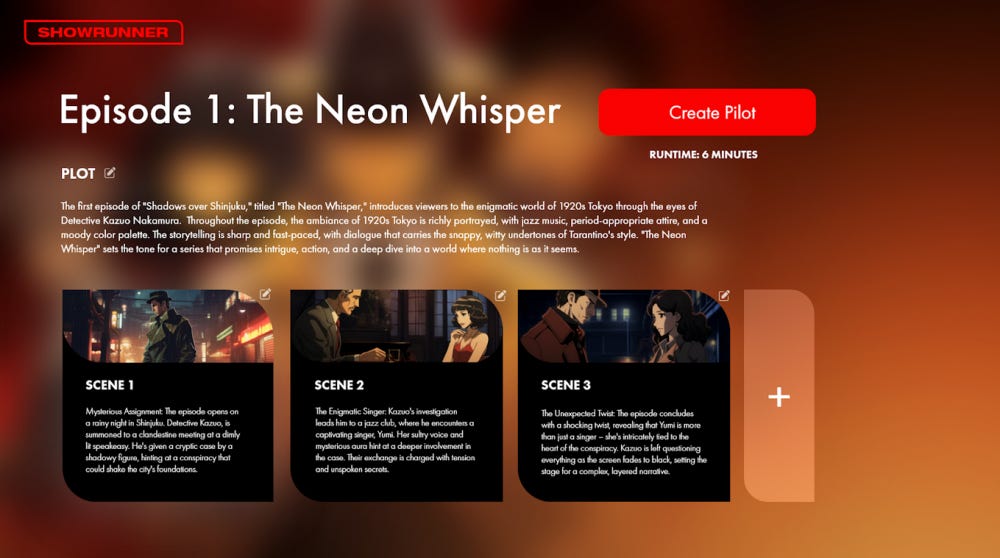

Fable announced Showrunner, a platform that allows users to generate and watch AI-powered TV shows set in virtual simulated worlds. The reaction from Hollywood: “Hollywood Nightmare? New Streaming Service Lets Viewers Create Their Own Shows Using AI.” Fable is the studio behind the viral AI-generated 'South Park' clips, which it is using to promote the Showrunner streaming platform.

Scale's SEAL Research Lab launched expert-evaluated LLM leaderboards. Scale AI is trying to fix AI model benchmarking with the SEAL leaderboards, which use private evaluation datasets and expert evaluations. The concept is great, but the private nature of the evaluation datasets makes the results opaque.

Aider scored a SOTA 26.3% on the SWE Bench Lite benchmark, and it did it without agentic behavior, RAG, or vector search. Instead, it used “static code analysis, reliable LLM code editing, and pragmatic UX for AI pair programming.”

ElevenLabs debuts AI-powered tool to generate sound effects:

The tool, available to all users starting today, allows users to type in prompts like “waves crashing,” “metal clanging,” “birds chirping” and “racing car engine” to generate snippets of sounds.

Top Tools & Hacks

Custom GPTs, memory, and more are now available for free users of ChatGPT:

OpenAI has significantly upgraded its free ChatGPT service, granting access to a suite of features previously exclusive to paid subscribers. These include custom GPTs, data analytics, chart creation, and the ability to ask questions about photos.

Unless you are a heavy user of ChatGPT, you can get pretty much all you need, including GPT-4o, on the free tier.

AI Research News

Our AI research highlights for this week covered several topics:

Vision-language models are improving: ConvLLaVA can handle higher-resolution image inputs efficiently, RLAIF-V can align multimodal LLMs, and Matryoshka Multimodal Models (M3) can adjust the level of detail of the visual tokens used to describe an image.

GFlow extracts 4D generations (Gaussian splats) from video.

Gzip Predicts Data-dependent Scaling Laws, and observational scaling laws can be extracted from observing LLM performance.

Transformers Can Do Arithmetic with the Right Embeddings.

LLMs achieve adult human performance on higher-order theory of mind tasks.

There have also been several new fully-open AI models released, sharing not just model weights, but the data and code frameworks:

From a collaboration between Mohamed bin Zayed University of Artificial Intelligence (MBZUAI), Petuum, and LLM360 in the UAE comes K2 65B, a dense, fully open-source AI model.

Researchers from the University of Waterloo, Wuhan AI Research, and 01.AI released MAP-Neo, a fully open-source and transparent bilingual LLM suite. Their 7B parameter model was trained on 4.5T “high-quality tokens” of both English and Chinese data, giving the bilingual LLM strong performance metrics.

They shared technical details in the paper “MAP-Neo: Highly Capable and Transparent Bilingual Large Language Model Series.” They also open-sourced all details, providing the pre-training corpus, data cleaning pipeline, checkpoints, and a well-optimized training/evaluation framework.

Yuan 2.0-M32 is an MoE (mixture-of-experts) model with 32 experts and 40B total parameters, of which 3.7B are active during inference. It outperforms Llama 3 70B on Math/ARC tasks using only 3.8% of FLOPs in training. It is presented in “Yuan 2.0-M32: Mixture of Experts with Attention Router.”
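The parameter counts above give a quick sense of how sparse the model is at inference time. This is a small arithmetic sketch using only the figures quoted here; the comparison against a dense 70B model is a rough parameter-count ratio, not a measured compute figure.

```python
TOTAL_PARAMS_B = 40.0   # total parameters, in billions
ACTIVE_PARAMS_B = 3.7   # parameters active per token, in billions
DENSE_70B_PARAMS_B = 70.0  # a dense 70B model uses all of its parameters

# Share of Yuan 2.0-M32's own parameters used for each token.
active_fraction = ACTIVE_PARAMS_B / TOTAL_PARAMS_B

# Active parameters relative to a dense 70B model (rough comparison only).
active_vs_dense_70b = ACTIVE_PARAMS_B / DENSE_70B_PARAMS_B

print(f"Active share of total parameters: {active_fraction:.2%}")
print(f"Active parameters vs. a dense 70B model: {active_vs_dense_70b:.1%}")
```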

AI Business and Policy

OpenAI formed a new board for Safety and Security. There are questions about potential conflicts of interest because Sam Altman, the CEO of OpenAI, is also on the board. This comes in the wake of departures from its super-alignment team, including Jan Leike, who joined rival Anthropic.

Google gave a response to embarrassing AI Overviews fails from last week. It got a lot of egg on its face - and glue on its pizza - over the AI Search Overviews giving terrible answers in some cases. This week, Google admitted its AI Overviews need work, while defensively calling out some ‘fails’ as spoofs and explaining others as edge-cases.

“The vast majority of AI Overviews provide high quality information, with links to dig deeper on the web. Many of the examples we’ve seen have been uncommon queries, and we’ve also seen examples that were doctored or that we couldn’t reproduce. We conducted extensive testing before launching this new experience, and as with other features we’ve launched in Search, we appreciate the feedback. We’re taking swift action where appropriate under our content policies, and using these examples to develop broader improvements to our systems, some of which have already started to roll out.” - Google

Hugging Face says it detected ‘unauthorized access’ to its AI model hosting platform.

If you want in on the AI investment wave, VCs are selling shares of hot AI companies like Anthropic and xAI to small investors in a wild SPV market.

AI training data has a price tag that only Big Tech can afford. Big Tech has advantages of access to data, but also the means to acquire licensed data content:

OpenAI has spent hundreds of millions of dollars licensing content from news publishers, stock media libraries and more to train its AI models — a budget far beyond that of most academic research groups, nonprofits and startups. …

AI Opinions and Articles

Helen Toner, who was on the OpenAI Board during the drama last November, was interviewed on the TED AI Show, and she gave her side of the OpenAI story. She says OpenAI CEO Sam Altman was fired for 'outright lying,' including about his financial ties to OpenAI. Her characterization of Sam Altman puts the firing in a whole different context.

"The [OpenAI] board is a nonprofit board that was set up explicitly for the purpose of making sure that the company's public good mission was primary — was coming first over profits, investor interests, and other things. But for years, Sam had made it really difficult for the board to actually do that job by, you know, withholding information, misrepresenting things that were happening at the company, in some cases outright lying to the board." - Helen Toner

Elon Musk doubles down that AGI will be here next year, and that AI will exceed all human intelligence combined by 2030.

“We will have AI that can handle any cognitive task, it’s just a matter of when.” - Elon Musk

George Lucas, asked about using AI in filmmaking, says it’s “inevitable.”

“Well, we've been using it for 25 years, and it's not AI, but we use all the digital technology because we pioneered a lot of that, because especially at ILM, we were the only place that was doing digital. But the thing of it is, it's inevitable. I mean, it's like saying, 'I don't believe these cars are gunna work. Let's just stick with the horses. Let's stick with the horses.' And yeah, you can say that, but that isn't the way the world works.” - George Lucas