Building an LLM, pt 1 - Pre-training

How It’s Made - The LLM Episode

What really is inside AI models like ChatGPT or GPT-4? And how are they made?

I’ve long been a fan of the “How It’s Made” show, which walks through the manufacturing steps behind various consumer products. Foundational AI models are the pinnacle of technology in our era, just as the Boeing 747, the Cray-1 supercomputer, and the Empire State Building were testaments to technological progress in their time. Even though I don’t have a supercomputer cluster to build my own model, I’ve read many AI research papers to understand how these models are trained, because we can use AI models better if we understand how they were made.

Given how OpenAI themselves are warning of the risks of super-intelligent AI, it’s important to know what goes into making our most advanced AI technology for reasons of AI safety and risk assessment. I was disappointed that recent announcements of GPT-4 and PaLM2 didn’t come with more details on their architecture and technical development, although there was a leak of PaLM2 dataset and parameter information, which we reported in last week’s Weekly Report. We need transparency in AI models, but transparency without understanding is not useful.

For all these reasons, I’d like to dive deeper into how Large Language Models (LLMs) are trained.

Steps to Making an LLM

Large Language Models (LLMs) are word prediction engines that also serve as instruction-following answer engines: they are trained to predict the ‘correct’ next word in a sequence, so that the sequence they generate forms an appropriate response to a question or instruction. LLMs are trained in two stages:

Pre-training: First, a massive amount of text is tokenized and fed into a deep neural network. In this self-supervised pre-training, gaps are made in the text and the model is trained to fill those gaps or to predict the next word (or token). This yields a model that is good at predicting the next token and, for large enough models, one that learns general representations of language along the way.

Instruction-tuning: The pre-trained model is further improved with large-scale instruction tuning and reinforcement learning, to better align it with end tasks and user preferences. For example, it can be tuned to be better at answering questions.

LLM Pre-training and Transformers

Let’s dive deeper into LLM pre-training.

The first step in making an LLM is to take in the raw text and tokenize it into words (or word parts); each token is then converted into an embedding vector. Mapping words to tokens is not 1-to-1: compound and complex words need more than one token, and numbers and punctuation are tokenized too. For English text, a common rule of thumb is about 100 tokens for every 75 words.
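To make the word-to-token mapping concrete, here is a minimal sketch of greedy longest-match subword tokenization followed by an embedding lookup. The tiny vocabulary, the `##` continuation marker (borrowed from WordPiece-style tokenizers), and the random 4-dimensional embeddings are all illustrative assumptions; production LLMs use learned byte-pair encoding (BPE) or SentencePiece vocabularies with tens of thousands of entries and learned embeddings with thousands of dimensions.

```python
import random
import re

# Hypothetical toy vocabulary: whole words, subword pieces ("##" marks a
# piece that continues a word), single digits and punctuation.
VOCAB = ["[UNK]", "the", "model", "predict", "##s", "##ing", "token", "un",
         "##believ", "##able", "1", "2", ",", ".", "!"]
TOKEN_TO_ID = {tok: i for i, tok in enumerate(VOCAB)}


def tokenize(text: str) -> list[str]:
    """Greedy longest-match subword tokenization (WordPiece-style sketch)."""
    tokens = []
    # Split into lowercase words, single digits, and punctuation marks.
    for word in re.findall(r"[a-z]+|\d|[^\sa-z\d]", text.lower()):
        start = 0
        while start < len(word):
            # Try the longest remaining substring first, then shrink it.
            for end in range(len(word), start, -1):
                piece = word[start:end] if start == 0 else "##" + word[start:end]
                if piece in TOKEN_TO_ID:
                    tokens.append(piece)
                    start = end
                    break
            else:
                # No vocabulary entry matches: emit [UNK] and skip the rest of the word.
                tokens.append("[UNK]")
                break
    return tokens


EMBED_DIM = 4
rng = random.Random(0)
# One randomly initialised vector per vocabulary entry; training would learn these.
EMBEDDINGS = [[rng.gauss(0.0, 1.0) for _ in range(EMBED_DIM)] for _ in VOCAB]


def embed(tokens: list[str]):
    """Map tokens to ids, then look up each id's embedding vector."""
    ids = [TOKEN_TO_ID[t] for t in tokens]
    return ids, [EMBEDDINGS[i] for i in ids]


tokens = tokenize("The model predicts unbelievable tokens!")
print(tokens)
# ['the', 'model', 'predict', '##s', 'un', '##believ', '##able', 'token', '##s', '!']
ids, vectors = embed(tokens)
```

Five words and an exclamation mark become ten tokens here, which is why token counts run higher than word counts.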

LLMs grew out of the work on Transformers, in particular in the wake of the seminal paper “Attention Is All You Need” by Vaswani et al. in 2017 [1]. A Transformer is a neural network architecture built around attention, a mechanism that lets each word (token) in a span of text weigh, and draw information from, every other word in that span, so the model can connect and relate words across the text.
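At its core, the attention in that paper is scaled dot-product attention: each token’s query vector is compared with every token’s key vector, the scores are normalized with a softmax, and the resulting weights mix the value vectors. Below is a minimal pure-Python sketch of a single attention head with hand-picked toy vectors (an assumption for illustration); real models compute queries, keys, and values with learned projections and run many heads in parallel on GPUs. The optional causal mask is what decoder-only LLMs use so that a token cannot attend to later tokens.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats (-inf entries get weight 0)."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values, causal=False):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d)) V, for one head."""
    d = len(queries[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        # One score per key: how relevant is token j to token i?
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        if causal:
            # Mask future positions so token i only sees tokens 0..i.
            scores = [s if j <= i else float("-inf") for j, s in enumerate(scores)]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        out = [sum(w * v[c] for w, v in zip(weights, values))
               for c in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three tokens with 2-dimensional query/key/value vectors (toy numbers).
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
out, w = attention(Q, K, V, causal=True)
print([round(x, 3) for x in w[0]])  # [1.0, 0.0, 0.0]: the first token only sees itself
```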

Transformers solved a problem that earlier language models, such as recurrent neural networks, struggled with: representing relationships between words that are far apart in a text.

Transformers are also highly parallelizable on GPUs, making them easy to scale up. As a result, Transformers opened the door to LLMs. Virtually all of today’s LLMs, from the GPT series of models to PaLM [2] and LLaMA [3], use Transformer-based attention as the core of their architecture [4].

Since the number of pairwise connections between words grows quadratically with the length of the text span, the memory and compute needs of Transformer attention grow quadratically as well. To speed up training, optimized attention implementations such as FlashAttention [5] reorganize the attention matrix computation to reduce memory traffic on the GPU while still computing exact attention. An efficient attention implementation building on this work was used to train the LLaMA model.
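Two small sketches make this concrete. The first function counts the entries in the attention score matrix, which is the part that grows quadratically. The second shows, in pure Python for a single query, the “online softmax” idea that FlashAttention builds on: keys and values are processed in blocks while a running maximum and running sum are kept, so the exact attention output is produced without ever storing a full row of scores. This illustrates the idea only, under the assumption of one head and one query; the real FlashAttention is a fused GPU kernel that also tiles the queries and manages on-chip memory explicitly.

```python
import math


def score_matrix_entries(seq_len: int, n_heads: int = 1) -> int:
    """Entries in the attention score matrix: one per (query, key) pair, per head."""
    return n_heads * seq_len * seq_len


def naive_attention_row(q, keys, values):
    """Reference: materialise every score, softmax them, then weight the values."""
    d = len(q)
    scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    return [sum(w * v[c] for w, v in zip(weights, values)) / total
            for c in range(len(values[0]))]


def blockwise_attention_row(q, keys, values, block_size=2):
    """Same result, computed block by block with a running max and running sum."""
    d = len(q)
    running_max = float("-inf")
    running_sum = 0.0                  # sum of exp(score - running_max) so far
    acc = [0.0] * len(values[0])       # un-normalised weighted sum of values
    for start in range(0, len(keys), block_size):
        k_blk = keys[start:start + block_size]
        v_blk = values[start:start + block_size]
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in k_blk]
        new_max = max(running_max, max(scores))
        # Rescale everything accumulated so far to the new maximum.
        scale = math.exp(running_max - new_max)
        running_sum *= scale
        acc = [a * scale for a in acc]
        for s, v in zip(scores, v_blk):
            w = math.exp(s - new_max)
            running_sum += w
            acc = [a + w * vc for a, vc in zip(acc, v)]
        running_max = new_max
    return [a / running_sum for a in acc]


print(score_matrix_entries(2048), score_matrix_entries(4096))  # 4194304 16777216
q = [0.5, -1.0, 2.0]
keys = [[0.1 * i, 0.2, -0.3 * i] for i in range(5)]
values = [[float(i), 1.0 - i] for i in range(5)]
exact = naive_attention_row(q, keys, values)
tiled = blockwise_attention_row(q, keys, values, block_size=2)
print(all(abs(a - b) < 1e-9 for a, b in zip(exact, tiled)))  # True
```

Doubling the sequence length from 2,048 to 4,096 quadruples the score matrix, while the blockwise version only ever holds one block of scores at a time.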

While the Transformer attention block is a constant part of LLM architectures, implementations vary in many details around it. For example, positional embeddings indicate each word’s position in the input text; they are needed because attention processes all tokens in parallel and has no built-in notion of word order. GPT-Neo and LLaMA used an enhancement called rotary positional embeddings (RoPE), introduced in the RoFormer paper [6].
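Rotary embeddings encode position by rotating pairs of dimensions in the query and key vectors by an angle proportional to the token’s position. A useful consequence is that the dot product between a rotated query and a rotated key depends only on their relative offset, not on their absolute positions. The sketch below pairs adjacent dimensions and uses a base of 10000 for the rotation frequencies, as in the RoFormer formulation; the toy vectors are assumptions, and real implementations apply this per attention head to batched tensors.

```python
import math


def rope(vec, position, base=10000.0):
    """Rotate each (even, odd) pair of dimensions by position * theta_pair."""
    d = len(vec)
    assert d % 2 == 0, "RoPE needs an even dimension"
    out = []
    for i in range(0, d, 2):
        theta = base ** (-i / d)          # lower frequency for later pairs
        angle = position * theta
        x, y = vec[i], vec[i + 1]
        # Standard 2-D rotation of the pair (x, y) by `angle`.
        out.extend([x * math.cos(angle) - y * math.sin(angle),
                    x * math.sin(angle) + y * math.cos(angle)])
    return out


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


q = [0.3, -1.2, 0.8, 0.5]
k = [1.0, 0.4, -0.7, 0.2]
# The same offset (3 positions apart) at two different absolute positions:
s1 = dot(rope(q, 5), rope(k, 2))
s2 = dot(rope(q, 105), rope(k, 102))
print(abs(s1 - s2) < 1e-9)  # True: the attention score depends only on the offset
```

Because each step is a rotation, vector lengths are preserved; only the angle between a query and a key, and hence their dot product, encodes how far apart the two tokens are.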

Self-supervised pre-training and token prediction objective

When it comes to any machine learning task, you need to ask the question “What is the learning objective?” Machine learning has traditionally relied on supervised learning: items in a dataset are labeled, and the model is trained to minimize its error in predicting the correct label for each item.

However, labeling data is human-intensive and hard to scale, while unlabeled data is abundant. So how can you leverage all the available unlabeled data to train models? To train LLMs at scale on large amounts of text, we need self-supervised learning.

The brilliant idea behind self-supervised pre-training of LLMs is to turn blocks of raw input text into training data by taking out words. Pre-training becomes an ‘input-to-target’ task in which the model ‘guesses’ the missing words in a block of text, and the removed words serve as the model’s target training labels. In other words, pre-training teaches the LLM to predict missing tokens.
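For the simplest version of this, next-token prediction, the labels come for free: the target sequence is the input sequence shifted left by one token. The sketch below shows this on a list of token ids (the ids are arbitrary, assumed for illustration). Because a decoder-only model scores every position in parallel under a causal mask, one sequence of n tokens yields n - 1 prediction targets at once.

```python
def next_token_pairs(token_ids):
    """Inputs are all tokens but the last; targets are all tokens but the first."""
    inputs = token_ids[:-1]
    targets = token_ids[1:]
    return inputs, targets


def prefix_examples(token_ids):
    """The same data viewed as (context, next token) examples, one per position."""
    return [(token_ids[:i], token_ids[i]) for i in range(1, len(token_ids))]


ids = [17, 942, 5, 311, 88]        # a tokenized sentence (arbitrary ids)
inputs, targets = next_token_pairs(ids)
print(inputs)    # [17, 942, 5, 311]
print(targets)   # [942, 5, 311, 88]
for context, target in prefix_examples(ids):
    print(context, "->", target)   # e.g. [17, 942] -> 5
```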

The simplest way to do this pre-training task is to load a text sequence into the model and have it predict the next token or word. However, the paper “UL2: Unifying Language Learning Paradigms” [7] shows that combining several kinds of missing-token prediction, or ‘denoising’, objectives in a Mixture-of-Denoisers (MoD) approach yields the best overall performance across many tasks in their experiments. These denoising objectives pose the fill-in-the-blank task in different ways:

X-denoising (extreme denoising) uses very long spans and/or high corruption rates, so the model must reconstruct large portions of the input.

S-denoising (sequential denoising) strictly follows sequence order: given a prefix of the text, the model predicts the tokens that follow.

R-denoising (regular denoising) is the standard span corruption objective introduced in (Raffel et al., 2019), with short spans and a low corruption rate, so only a few target tokens are blanked out.
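A rough sketch of how one token sequence can be turned into training examples for each of these denoisers, assuming T5-style sentinel tokens (`<X0>`, `<X1>`, ...) that mark each removed span in the input and introduce it in the target. The span lengths and corruption rates are illustrative settings in the spirit of the paper (short spans at about 15% for R, long spans at 50% for X), not its exact configuration, and the spans are placed at evenly spaced positions rather than sampled randomly so the example stays deterministic.

```python
def span_corrupt(tokens, span_len, corruption_rate):
    """Replace evenly spaced spans with sentinels; return (inputs, targets)."""
    n_spans = max(1, round(len(tokens) * corruption_rate / span_len))
    stride = len(tokens) // n_spans          # one span per window of this size
    if span_len > stride:
        raise ValueError("spans would overlap; lower span_len or corruption_rate")
    inputs, targets, cursor = [], [], 0
    for s in range(n_spans):
        start = s * stride + (stride - span_len) // 2   # centre span in its window
        sentinel = f"<X{s}>"
        inputs += tokens[cursor:start] + [sentinel]      # sentinel marks the gap
        targets += [sentinel] + tokens[start:start + span_len]
        cursor = start + span_len
    inputs += tokens[cursor:]
    return inputs, targets


def prefix_lm(tokens, prefix_frac=0.5):
    """S-denoising style: the model sees a prefix and predicts the continuation."""
    split = int(len(tokens) * prefix_frac)
    return tokens[:split], tokens[split:]


def reconstruct(inputs, targets):
    """Splice each target span back in at its sentinel (a sanity check)."""
    spans, current = {}, None
    for t in targets:
        if t.startswith("<X"):
            current = t
            spans[current] = []
        else:
            spans[current].append(t)
    out = []
    for t in inputs:
        out += spans.get(t, [t])
    return out


tokens = [f"w{i}" for i in range(40)]
r_in, r_tgt = span_corrupt(tokens, span_len=3, corruption_rate=0.15)   # R-like
x_in, x_tgt = span_corrupt(tokens, span_len=10, corruption_rate=0.5)   # X-like
s_in, s_tgt = prefix_lm(tokens)                                        # S-like
print(r_tgt)  # ['<X0>', 'w8', 'w9', 'w10', '<X1>', 'w28', 'w29', 'w30']
```

The R-like setting hides 6 of 40 tokens in two short spans, while the X-like setting hides half the sequence in two long spans; the S-like split simply asks for the second half given the first.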

Notably, PaLM2’s technical report [8], while light on technical details, points to the UL2 paper as a reference for how the model was built. Since the UL2 paper showed its MoD approach outperforming prior approaches that used a single objective, it seems likely that Google used these MoD objectives to pre-train PaLM2.

Context Window

The context window of an LLM is the maximum number of tokens that the model can process at a time. Both the training input and the user’s input at inference time are limited to text no larger than the context window. The original GPT-3 had a context window of 2,048 tokens (later GPT-3.5 models use 4,096), while GPT-4 and the latest Claude models go well beyond that, to 32,000 and 100,000 token context windows respectively.
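Because every training example must fit in the context window, a long tokenized corpus is typically cut into fixed-length chunks before pre-training. A minimal sketch, assuming a flat list of token ids and a context length of 2,048 (GPT-3’s); since next-token targets are the inputs shifted by one, each chunk takes context_len + 1 tokens. The word estimate uses the rule of thumb of about 100 tokens per 75 words and is only an approximation for English text.

```python
def chunk_for_training(token_ids, context_len):
    """Split a token stream into (input, target) windows of exactly context_len tokens."""
    examples = []
    # Each window needs context_len + 1 tokens: inputs plus the shifted targets.
    for start in range(0, len(token_ids) - context_len, context_len):
        window = token_ids[start:start + context_len + 1]
        examples.append((window[:-1], window[1:]))
    return examples


def approx_words(n_tokens):
    """Rule of thumb for English text: about 75 words per 100 tokens."""
    return n_tokens * 75 // 100


corpus = list(range(10_000))           # stand-in for 10,000 token ids
examples = chunk_for_training(corpus, context_len=2048)
print(len(examples))                   # 4 full windows; the leftover tail is dropped
print(approx_words(2048))              # 1536
```

In practice the leftover tail is usually carried over to the next document batch or padded rather than discarded; dropping it keeps this sketch short.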

LLM Architecture

As mentioned, the core of the LLM architecture is the Transformer attention mechanism. Attention relates every token to every other token across the span of attention, so its compute and memory cost grows quadratically with the sequence length. The parameter count is a separate matter: the weight matrices in each layer scale quadratically with the layer’s hidden dimension (the width of each token’s vector representation), not with the sequence length.

The GPT line and most other LLMs are decoder-only Transformers (without an encoder), trained solely for next-token prediction. At inference time, each output token is fed back into the model as input to predict the following token, so the model auto-regressively produces its full output sequence one token at a time.
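The generation loop itself is simple. In the sketch below a hypothetical `toy_next_token_scores` function, backed by a hand-written bigram table, stands in for a trained decoder-only Transformer (which would score the next token given the entire context, not just the last token). The loop picks the highest-scoring token (greedy decoding), appends it to the sequence, and feeds the longer sequence back in, stopping at an end-of-sequence token or a length limit.

```python
# Hypothetical stand-in for a trained model: next-token scores looked up
# from the last token only (a real LLM conditions on the whole context).
BIGRAM_SCORES = {
    "<s>": {"the": 2.0, "a": 1.0},
    "the": {"model": 1.5, "token": 0.5},
    "model": {"predicts": 2.0, "</s>": 0.1},
    "predicts": {"the": 0.2, "tokens": 1.8},
    "tokens": {"</s>": 3.0},
}


def toy_next_token_scores(context):
    """Return {candidate_token: score} for the next position."""
    return BIGRAM_SCORES.get(context[-1], {"</s>": 1.0})


def generate(prompt, max_new_tokens=10):
    """Greedy auto-regressive decoding: one token per step, fed back as input."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        scores = toy_next_token_scores(tokens)
        next_token = max(scores, key=scores.get)   # greedy: highest score wins
        tokens.append(next_token)
        if next_token == "</s>":
            break
    return tokens


print(generate(["<s>"]))  # ['<s>', 'the', 'model', 'predicts', 'tokens', '</s>']
```

Chat models usually sample from the softmax of the scores (with temperature or top-p) instead of always taking the maximum, but the feed-back loop is the same.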

The following chart shows the basic architecture of the BLOOM open-source model [9], which, like the GPT models, is a decoder-only model.

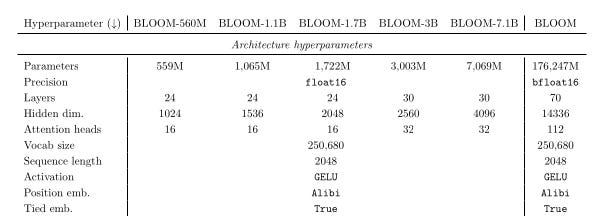

The number of parameters depends on the size of each (hidden) layer and the number of layers. For example, the open-source 176B-parameter BLOOM model has 70 layers, a hidden dimension of 14,336, and 112 attention heads; its context window (sequence length) is 2,048 tokens. The following figure shows the hyperparameters for BLOOM models of various sizes, from under 1 billion to 176B parameters.
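These hyperparameters roughly determine the parameter count. A common back-of-the-envelope estimate for a standard Transformer block is about 12·d² parameters per layer (roughly 4·d² in the attention projections and 8·d² in a feed-forward block that expands to 4·d), plus the token embedding matrix. The sketch below applies this to the BLOOM-176B numbers above; the vocabulary size of roughly 250,000 tokens is an assumption here, and biases and layer norms are ignored.

```python
def approx_params(n_layers, d_model, vocab_size):
    """Rough decoder-only Transformer parameter count (ignores biases and norms)."""
    attention = 4 * d_model * d_model            # Q, K, V and output projections
    feed_forward = 2 * d_model * (4 * d_model)   # up- and down-projection, 4x width
    per_layer = attention + feed_forward         # about 12 * d_model**2
    embeddings = vocab_size * d_model            # token embedding matrix
    return n_layers * per_layer + embeddings


# vocab_size=250_000 is an assumed round figure for BLOOM's tokenizer.
bloom = approx_params(n_layers=70, d_model=14336, vocab_size=250_000)
print(f"{bloom / 1e9:.1f}B")  # 176.2B
```

The number of attention heads (112) does not change the total, because the heads split the same d_model-wide projections among themselves.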

Training Dataset Size and Training Loss

For the largest LLMs, the dataset size used for training has reached into the trillions of tokens:

GPT-3 (175B parameters) was trained on 300 billion tokens

BLOOM (176B parameters) was trained on about 366 billion tokens, drawn from a 1.6 TB text corpus

LLaMA (7B - 65B parameters) used 1.0 to 1.4 trillion tokens (1.4 trillion for the 33B and 65B models)

Chinchilla (70B parameters) used 1.4 trillion tokens [10]

PaLM (540B parameters) used 780 billion tokens

PaLM2 (340B parameters) used 3.6 trillion tokens (unofficial leaked numbers)

GPT-4 (parameter count undisclosed, rumored to be around 1T) has an unknown training dataset size
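Dividing tokens by parameters makes these runs easier to compare, and the widely used approximation C ≈ 6·N·D estimates training compute in FLOPs for a model with N parameters trained on D tokens. That approximation, and the roughly 20 tokens per parameter associated with compute-optimal training in the Chinchilla paper (Hoffmann et al., 2022), are rules of thumb rather than exact accounting; the sketch below simply applies them to three of the official figures listed above.

```python
def tokens_per_param(n_params, n_tokens):
    return n_tokens / n_params


def train_flops(n_params, n_tokens):
    """Rule of thumb: about 6 FLOPs per parameter per training token."""
    return 6 * n_params * n_tokens


# (parameters, training tokens) from the list above
MODELS = {
    "GPT-3": (175e9, 300e9),
    "Chinchilla": (70e9, 1.4e12),
    "PaLM": (540e9, 780e9),
}

for name, (n, d) in MODELS.items():
    print(f"{name:<10} {tokens_per_param(n, d):5.1f} tokens/param  "
          f"{train_flops(n, d):.2e} FLOPs")
```

Chinchilla’s 20 tokens per parameter, against about 1.7 for GPT-3 and 1.4 for PaLM, reflects its finding that those earlier models were undertrained for their size.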

The more the LLM is trained on these token prediction objectives, the lower its training loss, so a better model takes both more training data and more compute. This figure shows how the LLaMA model’s training loss keeps decreasing with more training over a larger dataset, up to 1.4 trillion tokens.
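The training loss here is the average cross-entropy of next-token prediction: the negative log of the probability the model assigned to each actual next token, averaged over all positions. A minimal sketch, where the per-position probability distributions are made-up numbers standing in for a model’s softmax outputs early and late in training:

```python
import math


def cross_entropy(predicted_dists, targets):
    """Mean negative log-likelihood of the true next tokens (natural log, in nats)."""
    total = 0.0
    for dist, target in zip(predicted_dists, targets):
        total += -math.log(dist[target])
    return total / len(targets)


# Hypothetical model outputs over a 3-token vocabulary at 2 positions.
early = [{"a": 0.4, "b": 0.3, "c": 0.3}, {"a": 0.3, "b": 0.4, "c": 0.3}]
later = [{"a": 0.9, "b": 0.05, "c": 0.05}, {"a": 0.05, "b": 0.9, "c": 0.05}]
targets = ["a", "b"]
print(round(cross_entropy(early, targets), 3))  # 0.916
print(round(cross_entropy(later, targets), 3))  # 0.105
```

A model that spreads probability uniformly over a vocabulary of V tokens scores ln V, so a falling loss means the model is putting more probability on the tokens that actually come next.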

Instruction tuning

While pre-training makes the LLM a capable next-token generator, it is not very useful as an assistant without instruction fine-tuning. A recent paper, “LIMA: Less Is More for Alignment”, argues that most of an LLM’s capability is created during pre-training and that very little instruction tuning is needed when the instructions are high quality: LIMA fine-tuned a 65B LLaMA model on just 1,000 carefully curated examples. We will dive further into instruction tuning and fine-tuning for LLMs in a follow-up.

References

[1] Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, Ł., & Polosukhin, I. (2017). Attention is all you need. In Advances in Neural Information Processing Systems (pp. 5998-6008).

[2] Chowdhery, A., Narang, S., Devlin, J., Bosma, M., Mishra, G., Roberts, A., et al. (2022). PaLM: Scaling Language Modeling with Pathways. arXiv preprint arXiv:2204.02311v5.

[3] Touvron, H., Lavril, T., Izacard, G., Martinet, X., Lachaux, M. A., Lacroix, T., Rozière, B., Goyal, N., Hambro, E., Azhar, F., Rodriguez, A., Joulin, A., Grave, E., & Lample, G. (2023). LLaMA: Open and Efficient Foundation Language Models. arXiv preprint arXiv:2302.13971.

[4] Lin, T., Wang, Y., Liu, X., & Qiu, X. (2021). A Survey of Transformers. arXiv preprint arXiv:2106.04554v2.

[5] Dao, T., Fu, D. Y., Ermon, S., Rudra, A., & Ré, C. (2022). FlashAttention: Fast and Memory-Efficient Exact Attention with IO-Awareness. arXiv preprint arXiv:2205.14135.

[6] Su, J., Lu, Y., Pan, S., & Wen, B. (2021). RoFormer: Enhanced Transformer with Rotary Position Embedding. arXiv preprint arXiv:2104.09864.

[7] Tay, Y., Dehghani, M., Tran, V. Q., Garcia, X., Wei, J., Wang, X., Chung, H. W., Shakeri, S., Bahri, D., Schuster, T., Zheng, H. S., Zhou, D., Houlsby, N., & Metzler, D. (2022). UL2: Unifying Language Learning Paradigms. arXiv preprint arXiv:2205.05131.

[8] Google (2023). PaLM 2 Technical Report. https://ai.google/static/documents/palm2techreport.pdf

[9] Le Scao, T., Fan, A., Akiki, C., Pavlick, E., et al. (2022). BLOOM: A 176B-Parameter Open-Access Multilingual Language Model. arXiv preprint arXiv:2211.05100.

[10] Hoffmann, J., Borgeaud, S., Mensch, A., Buchatskaya, E., Cai, T., Rutherford, E., et al. (2022). Training Compute-Optimal Large Language Models. arXiv preprint arXiv:2203.15556.
